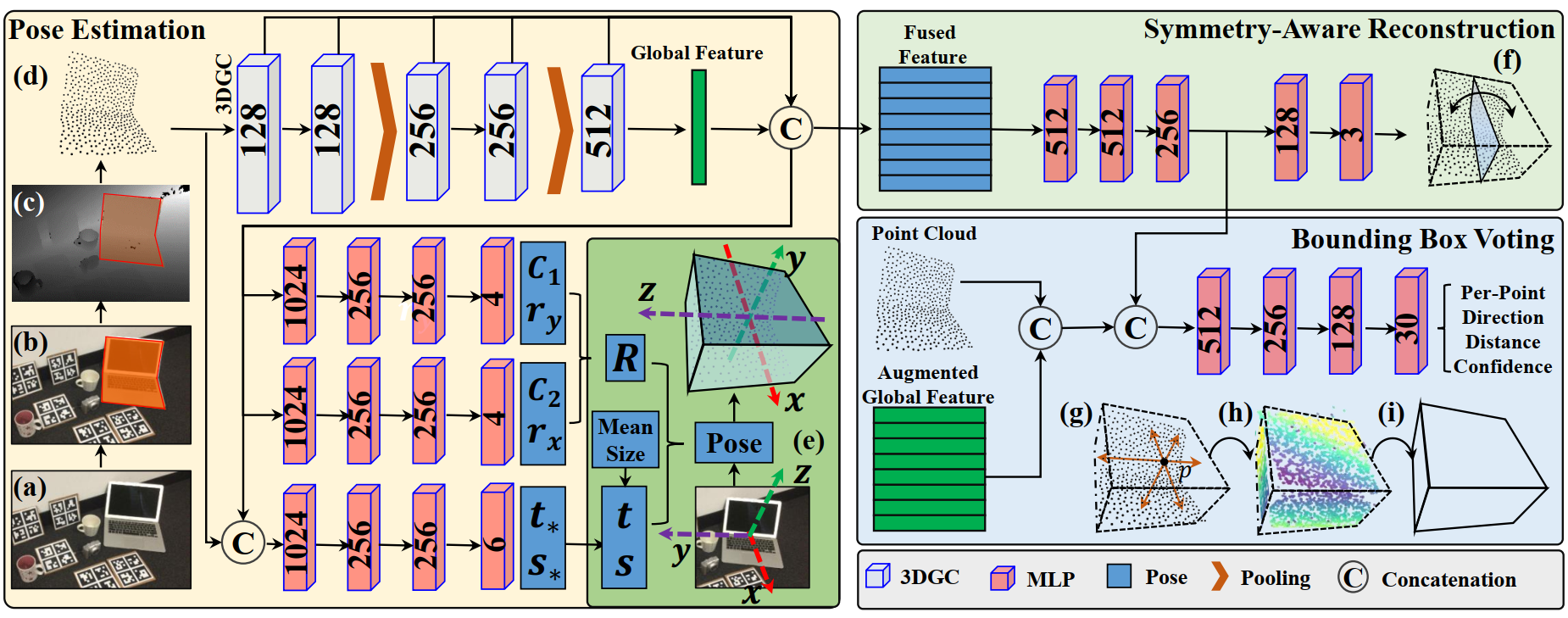

PyTorch implementation of GPV-Pose: Category-level Object Pose Estimation via Geometry-guided Point-wise Voting. (link)

The results on NOCS and the trained model on CAMERA can be found here.

A new version of the code, which integrates shape prior information, has been pushed to the shape-prior-integrated branch of this repo! A brief introduction will be presented in this file. Since L_PC_(s) is not really useful (as also indicated in the paper), we removed the loss term and turned it into a pre-processing procedure. You can find it in the updated branch.

- Ubuntu 18.04

- Python 3.8

- PyTorch 1.10.1

- CUDA 11.3

- Install the main requirements in 'requirement.txt'.

- Install Detectron2.
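As a quick sanity check of the environment listed above, the following is a minimal sketch that compares the installed versions against the ones named in this README. The helper names (`version_matches`, `collect_versions`, `report`) are hypothetical and not part of this repo; the check only assumes that `torch.__version__` and `torch.version.cuda` are available when PyTorch is installed.

```python
import platform

# Versions listed in the requirements above.
EXPECTED = {"python": "3.8", "torch": "1.10.1", "cuda": "11.3"}


def version_matches(found, expected):
    """Return True if `found` begins with the dotted components of `expected`.

    A local build suffix such as "+cu113" is ignored.
    """
    if found is None:
        return False
    found_parts = str(found).split("+")[0].split(".")
    expected_parts = expected.split(".")
    return found_parts[: len(expected_parts)] == expected_parts


def collect_versions():
    """Gather the installed versions; torch entries stay None if it is missing."""
    versions = {"python": platform.python_version(), "torch": None, "cuda": None}
    try:
        import torch  # imported lazily so the script still runs without PyTorch

        versions["torch"] = str(torch.__version__)
        versions["cuda"] = torch.version.cuda  # None for CPU-only builds
    except ImportError:
        pass
    return versions


def report(versions, expected=EXPECTED):
    """Map each component name to True/False depending on whether it matches."""
    return {name: version_matches(versions.get(name), want)
            for name, want in expected.items()}


if __name__ == "__main__":
    found = collect_versions()
    for name, ok in report(found).items():
        status = "ok" if ok else "differs"
        print(f"{name}: found {found[name]}, expected {EXPECTED[name]} ({status})")
```

A mismatch does not necessarily mean the code will fail; it only flags that the setup differs from the one listed here.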

To generate your own dataset, use the data preprocessing code provided in this git. Download the detection results from this git.

Download the trained model from this link.

Please note that some details differ from the original paper to make training more efficient.

Specify the dataset directory and run the following command.

python -m engine.train --data_dir YOUR_DATA_DIR --model_save SAVE_DIR

Detailed configurations are in 'config/config.py'.
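If you prefer to launch training from a script (for example, to queue several runs), the command above can be wrapped as in the sketch below. The function names `build_train_command` and `run_training` are hypothetical helpers, not part of this repo; only the `--data_dir` and `--model_save` flags shown above are used, and the script is assumed to be run with the repo root as the working directory.

```python
import subprocess
import sys


def build_train_command(data_dir, save_dir):
    """Build the argument list for the training command shown above."""
    return [
        sys.executable, "-m", "engine.train",
        "--data_dir", str(data_dir),
        "--model_save", str(save_dir),
    ]


def run_training(data_dir, save_dir, repo_root="."):
    """Run training as a subprocess; raises CalledProcessError on failure."""
    return subprocess.run(
        build_train_command(data_dir, save_dir),
        cwd=repo_root,  # assumed: the directory containing the `engine` package
        check=True,
    )


if __name__ == "__main__":
    # Replace the placeholders with your own paths before running.
    print(" ".join(build_train_command("YOUR_DATA_DIR", "SAVE_DIR")))
```

Using `sys.executable` keeps the subprocess in the same Python environment as the calling script.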

python -m evaluation.evaluate --data_dir YOUR_DATA_DIR --detection_dir DETECTION_DIR --resume 1 --resume_model MODEL_PATH --model_save SAVE_DIR

Our implementation leverages the code from 3dgcn, FS-Net, DualPoseNet, SPD.