
- 2.1. Traditional Entity Linking: Representation Learning

- 2.2. PLM-based Entity Linking: Dual Encoder and Dense Retrieval

- 2.3. The Future of Entity Linking: Paradigm Shift

This repository aims to give a comprehensive view of Entity Linking (EL) and to track recent trends in EL research.

Please refer to izuna385's repository for papers before NAACL'21 and ICLR'21.

- Find the Funding: Entity Linking with Incomplete Funding Knowledge Bases

- Two major challenges of identifying and linking funding entities are:

- (i) sparse graph structure of the Knowledge Base (KB)

- (ii) missing entities in the KB.

- Two new datasets, for entity disambiguation (EDFund) and end-to-end entity linking (ELFund), use the Crossref funding registry as their KB; the registry contains information about 25,859 funding organizations.

- The Funding entity Disambiguation model (FunD) uses five lightweight features scored by a gradient boosting machine (GBM). A mention is linked to the candidate with the highest GBM score if that score exceeds a threshold; otherwise it is linked to NIL.
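One reading of FunD's decision rule, sketched under the assumption that a GBM has already produced a score for every candidate of a mention. The five features and the GBM itself are not shown, and `link_with_nil`, the candidate names, and the threshold value are hypothetical:

```python
from typing import Dict, Optional


def link_with_nil(candidate_scores: Dict[str, float], threshold: float) -> Optional[str]:
    """Return the top-scoring candidate if its score exceeds the threshold, else None (NIL).

    Hypothetical helper: the scores are assumed to come from an already trained GBM.
    """
    if not candidate_scores:
        return None  # no candidate retrieved: the mention is NIL
    best = max(candidate_scores, key=candidate_scores.get)
    if candidate_scores[best] > threshold:
        return best
    return None  # best score too low: the entity is probably missing from the KB


# Usage with made-up scores for two candidate funders.
print(link_with_nil({"funder_A": 0.82, "funder_B": 0.31}, threshold=0.5))  # funder_A
print(link_with_nil({"funder_A": 0.42, "funder_B": 0.31}, threshold=0.5))  # None
```

Falling back to NIL for low-confidence mentions is one way a linker can cope with challenge (ii), entities that are missing from the KB.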


- Improving Candidate Retrieval with Entity Profile Generation for Wikidata Entity Linking

- Wikidata is the most extensive crowdsourced KB, but its massive number of entities also makes EL challenging. To narrow down the search space effectively, the paper proposes a novel candidate retrieval paradigm based on entity profiling rather than the traditional alias table.

- Entity profiling means generating the profile (description and attributes) of an entity from its context. The model adopts the pretrained encoder-decoder language model BART to generate the profile.

- The model then uses a Gradient Boosted Tree (GBT) model to combine the candidate entities retrieved by Elasticsearch via the alias table and via the generated entity profile. A cross-attention reranker then ranks the candidate entities to find the correct one.
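A rough sketch of the candidate-fusion step. It assumes that each retrieval route has already returned candidates with scores (for example, Elasticsearch relevance scores) and substitutes a fixed linear scorer for the trained GBT, so the feature set, the weights, and the placeholder entity IDs are all hypothetical. The cross-attention reranker is omitted:

```python
from typing import Dict, List, Sequence


def merge_candidates(alias_hits: Dict[str, float],
                     profile_hits: Dict[str, float]) -> Dict[str, List[float]]:
    """Union both candidate lists and build one feature vector per candidate:
    [alias score, profile score, found via alias table, found via profile]."""
    features = {}
    for entity in set(alias_hits) | set(profile_hits):
        features[entity] = [
            alias_hits.get(entity, 0.0),
            profile_hits.get(entity, 0.0),
            1.0 if entity in alias_hits else 0.0,
            1.0 if entity in profile_hits else 0.0,
        ]
    return features


def rank_candidates(features: Dict[str, List[float]],
                    weights: Sequence[float], top_k: int) -> List[str]:
    """Stand-in for the GBT: score each candidate with a fixed linear model, keep the top_k."""
    scores = {e: sum(w * f for w, f in zip(weights, feats)) for e, feats in features.items()}
    # Sort by descending score; break ties by entity ID so the output is deterministic.
    return sorted(scores, key=lambda e: (-scores[e], e))[:top_k]


alias = {"E1": 2.0, "E2": 1.0}    # candidates found through the alias table
profile = {"E2": 3.0, "E3": 1.5}  # candidates found through the generated profile
print(rank_candidates(merge_candidates(alias, profile), weights=[1.0, 1.0, 0.5, 0.5], top_k=2))
# ['E2', 'E1']
```

A candidate found by both routes (E2 here) accumulates evidence from each of them, which is the intuition behind combining the two retrieval sources before reranking.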

- EntQA: Entity Linking as Question Answering (ICLR'22 Spotlight)

- The traditional entity linking paradigm can be viewed as a two-stage pipeline: first find the mention spans in the text (i.e., Named Entity Recognition, NER), then disambiguate each mention to its corresponding entity in the knowledge base (the focus of most EL work). The NER step requires finding mentions without knowing their entities, which is unnatural and difficult.

- EntQA views entity linking as a reversed open-domain question answering problem, finding related entities before performing the NER stage. The model first retrieves entities related to the full text with a bi-encoder architecture, then predicts the mention span(s) of each candidate entity. Spans whose probability is lower than that of the null span $(1,1)$ are discarded.

- EntQA reverses the order of the two stages, shedding new light on the EL field. Entity linking is usually viewed as a sequence matching problem, but this paper shows that the MRC (machine reading comprehension) paradigm is also applicable. What about other paradigms, e.g., seq2seq (GENRE), sequence labeling, masked language prediction, etc.?
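A toy sketch of the retrieve-then-read order. The retriever ranks entities by the inner product between a passage vector and precomputed entity vectors, as a bi-encoder would. The reader assumes independent start/end softmaxes over the passage positions, with position 0 standing for the null span written as (1,1) in 1-indexed notation. All vectors and logits are made up, and the functions are hypothetical rather than EntQA's code:

```python
import math
from typing import Dict, List, Tuple


def retrieve(passage_vec: List[float], entity_vecs: Dict[str, List[float]], k: int) -> List[str]:
    """Stage 1 (bi-encoder): rank entities by inner product with the passage embedding."""
    scores = {e: sum(p * v for p, v in zip(passage_vec, vec)) for e, vec in entity_vecs.items()}
    return sorted(scores, key=lambda e: (-scores[e], e))[:k]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [x / total for x in exps]


def read(start_logits: List[float], end_logits: List[float],
         max_span_len: int = 10) -> List[Tuple[int, int, float]]:
    """Stage 2 (reader) for one retrieved entity: return (start, end, prob) for the spans
    whose probability beats the null span (0, 0), best first."""
    p_start, p_end = softmax(start_logits), softmax(end_logits)
    null_prob = p_start[0] * p_end[0]
    spans = []
    for s in range(1, len(p_start)):
        for t in range(s, min(len(p_end), s + max_span_len)):
            prob = p_start[s] * p_end[t]
            if prob > null_prob:
                spans.append((s, t, prob))
    return sorted(spans, key=lambda span: -span[2])


entities = {"Paris": [0.9, 0.1], "Texas": [0.1, 0.9], "France": [0.8, 0.0]}
print(retrieve([1.0, 0.0], entities, k=2))  # ['Paris', 'France']
# Reader logits for one entity over 4 positions (0 = null); positions 2..3 form the mention.
print([(s, t) for s, t, _ in read([3.0, 0.0, 5.0, 0.0], [3.0, 0.0, 0.0, 5.0])])  # [(2, 3)]
```

Because mention spans are only predicted for entities that were already retrieved, the reader never has to decide on a span without knowing which entity it might refer to.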

- Highly Parallel Autoregressive Entity Linking with Discriminative Correction

- Encodes the text with Longformer, predicts the mention spans with a feed-forward neural network (FNN), and generates the corresponding entity with a simple LSTM. A correction step with an MLP follows to ensure that the chosen entity has the highest probability.

- The structure after the encoder is simple, which enables all mentions to be linked in parallel. The model is also a combination of autoregressive EL and multi-choice EL.
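A toy illustration of how a discriminative correction can overturn a purely autoregressive choice. It assumes that the LSTM's per-token probabilities for each candidate entity name are already available and that the correction is a per-candidate score added to the log-likelihood; the additive combination, the numbers, and the helper names are assumptions, not the paper's exact formulation:

```python
import math
from typing import Dict, List, Tuple


def autoregressive_logprob(token_probs: List[float]) -> float:
    """Log-likelihood of an entity name generated token by token."""
    return sum(math.log(p) for p in token_probs)


def link_mention(candidates: Dict[str, List[float]], correction: Dict[str, float]) -> str:
    """Pick the candidate maximising autoregressive log-likelihood plus a correction score."""
    scores = {name: autoregressive_logprob(probs) + correction.get(name, 0.0)
              for name, probs in candidates.items()}
    return max(scores, key=scores.get)


def link_all(mentions: List[Tuple[Dict[str, List[float]], Dict[str, float]]]) -> List[str]:
    # Each mention is scored independently of the others, so this loop is trivially parallel.
    return [link_mention(cands, corr) for cands, corr in mentions]


cands = {"Paris": [0.5, 0.5], "Paris_Hilton": [0.9, 0.9, 0.9]}
print(link_all([(cands, {}), (cands, {"Paris": 2.0})]))  # ['Paris_Hilton', 'Paris']
```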

- Low-Rank Subspaces for Unsupervised Entity Linking

- Decomposes the context embeddings (from Word2Vec) and the entity embeddings (from DeepWalk) into low-dimensional vectors, then combines similarity- and ranking-based weights to score the candidates.

- One of the rare works on unsupervised entity linking (i.e., without annotated mention-entity pairs).

-

- Replaces the large BERT-based encoder with an efficient Residual Convolutional Neural Network for biomedical entity linking, in order to capture local information.

- Two probing experiments show that neither the input word order nor the attention scope of the Transformer layers in BERT matters much for performance, suggesting that local information may be more important.
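To make "local information" concrete, here is a single-channel sketch of a residual 1-D convolution block: each output position depends only on a window of `len(kernel)` neighbouring inputs, plus a skip connection. Real residual CNN encoders operate on multi-channel token embeddings with learned kernels; the kernel values and function names here are illustrative assumptions:

```python
from typing import List


def conv1d_same(x: List[float], kernel: List[float]) -> List[float]:
    """1-D cross-correlation with zero padding, so the output has the same length as x."""
    k = len(kernel)
    if k % 2 == 0:
        raise ValueError("use an odd kernel size so the window is centred")
    pad = k // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(kernel[j] * padded[i + j] for j in range(k)) for i in range(len(x))]


def residual_block(x: List[float], kernel: List[float]) -> List[float]:
    """y = x + ReLU(conv(x)): the skip connection keeps the input signal intact."""
    return [xi + max(0.0, ci) for xi, ci in zip(x, conv1d_same(x, kernel))]


x = [1.0, 2.0, 3.0]
print(conv1d_same(x, [1.0, 0.0, -1.0]))     # [-2.0, -2.0, 2.0]
print(residual_block(x, [1.0, 0.0, -1.0]))  # [1.0, 2.0, 5.0]
```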

- Named Entity Recognition for Entity Linking: What Works and What’s Next

- Enhances a strong EL baseline with i) NER-enriched entity representations, ii) NER-enhanced candidate selection, iii) NER-based negative sampling, and iv) NER-constrained decoding.

- With these NER enhancements, the EL baseline outperforms GENRE when trained on a small number of instances.
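Two of these ideas can be sketched with plain type sets: NER-enhanced candidate selection keeps only candidates whose KB types match the mention's predicted NER type, and NER-based negative sampling picks hard negatives of the same type. The type inventory, the fallback rule, and the function names are simplifying assumptions, not the paper's definitions:

```python
from typing import Dict, List, Set


def ner_filter(candidates: List[str], entity_types: Dict[str, Set[str]],
               mention_type: str) -> List[str]:
    """Keep candidates whose types include the mention's NER type.
    Assumed fallback: if the filter removes everything, keep the original list."""
    kept = [c for c in candidates if mention_type in entity_types.get(c, set())]
    return kept or list(candidates)


def ner_hard_negatives(gold: str, entity_types: Dict[str, Set[str]],
                       mention_type: str, k: int) -> List[str]:
    """Negatives sharing the mention's NER type are harder to tell apart from the gold entity."""
    same_type = sorted(e for e, types in entity_types.items()
                       if e != gold and mention_type in types)
    return same_type[:k]


types = {"Jordan_(country)": {"LOC"}, "Michael_Jordan": {"PER"}, "Jordan_River": {"LOC"}}
cands = ["Michael_Jordan", "Jordan_(country)", "Jordan_River"]
print(ner_filter(cands, types, "LOC"))  # ['Jordan_(country)', 'Jordan_River']
print(ner_hard_negatives("Jordan_(country)", types, "LOC", k=5))  # ['Jordan_River']
```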

- MOLEMAN: Mention-Only Linking of Entities with a Mention Annotation Network

- The model searches for the most similar mentions rather than entities, treating each mention in the training set as a "pseudo entity" that reflects a certain aspect of its corresponding entity.

- This involves a tradeoff between scale (more pseudo entities) and accuracy.
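A minimal sketch of mention-to-mention linking, assuming every training mention has already been encoded into a vector and labelled with its entity (the vectors, labels, and helper names are made up). Several indexed mentions can point to the same entity, so the index grows with the number of annotated mentions rather than with the number of entities:

```python
import math
from typing import List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def link_by_nearest_mention(query: List[float],
                            mention_index: List[Tuple[List[float], str]]) -> str:
    """Return the entity label of the indexed mention most similar to the query mention."""
    _, entity = max(mention_index, key=lambda item: cosine(query, item[0]))
    return entity


# Each entry is one annotated training mention ("pseudo entity") and its gold entity.
index = [([1.0, 0.0], "Paris"), ([0.9, 0.1], "Paris"), ([0.0, 1.0], "France")]
print(link_by_nearest_mention([0.1, 1.0], index))  # France
```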

- LNN-EL: A Neuro-Symbolic Approach to Short-text Entity Linking

- Uses a logical neural network (LNN) to build interpretable rules based on first-order logic. The model is somewhat similar to a tree-like neural network, combining various features into the final score.
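A sketch of the weighted real-valued connectives that LNN rules are built from, assuming the weighted Łukasiewicz form with a bias `beta` (in an LNN the weights and biases are learned; here they are fixed). The rule and the feature names (`name_sim`, `type_match`, `context_sim`, `popularity`) are hypothetical stand-ins for LNN-EL's actual features:

```python
from typing import Sequence


def clamp(x: float) -> float:
    return max(0.0, min(1.0, x))


def lnn_and(truths: Sequence[float], weights: Sequence[float], beta: float = 1.0) -> float:
    """Weighted Lukasiewicz conjunction: clamp(beta - sum(w * (1 - x)))."""
    return clamp(beta - sum(w * (1.0 - x) for x, w in zip(truths, weights)))


def lnn_or(truths: Sequence[float], weights: Sequence[float], beta: float = 1.0) -> float:
    """Weighted Lukasiewicz disjunction: clamp(1 - beta + sum(w * x))."""
    return clamp(1.0 - beta + sum(w * x for x, w in zip(truths, weights)))


def link_score(name_sim: float, type_match: float, context_sim: float, popularity: float) -> float:
    # Hypothetical rule: (name_sim AND type_match) OR (context_sim AND popularity).
    rule1 = lnn_and([name_sim, type_match], [1.0, 1.0])
    rule2 = lnn_and([context_sim, popularity], [1.0, 1.0])
    return lnn_or([rule1, rule2], [1.0, 1.0])


print(link_score(name_sim=0.9, type_match=1.0, context_sim=0.4, popularity=0.5))
```

Each connective is a small, readable formula over feature values in [0, 1], which is what keeps the final score interpretable: one can inspect which rule fired and how strongly.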

Given text

- The widely accepted approach in EL is to embed the mention context and the candidate entities into the same vector space and to use the similarity between vectors (cosine, inner product, Euclidean distance, etc.) as ranking scores.

- Traditional EL models tend to use knowledge graph embedding (KGE) techniques to model entities. Popular KGE models include TransE, RESCAL, ConvE, and TuckER.

- Structured information, such as inter-entity similarity, entity types, and entity relations, also frequently appears in traditional EL models.

- Traditional EL models need to train the entity embedding model on the whole KB, which makes them unfriendly to new and long-tail entities. PLM-based EL models instead obtain entity embeddings by encoding textual descriptions with PLMs, and are better suited to large-scale KBs such as Wikipedia and Wikidata.
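A toy sketch of the representation-learning view above, with hand-picked 2-D vectors standing in for learned embeddings. A TransE-style score measures how well a KG triple (h, r, t) fits the translation h + r ≈ t, and candidates are ranked against a mention-context vector with the three similarity measures listed above. All vectors and names are illustrative assumptions:

```python
import math
from typing import Callable, Dict, List

Vector = List[float]


def transe_score(h: Vector, r: Vector, t: Vector) -> float:
    """TransE plausibility of (h, r, t): -||h + r - t||_2, so 0 is a perfect fit."""
    return -math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def inner_product(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def cosine(u: Vector, v: Vector) -> float:
    return inner_product(u, v) / (math.sqrt(inner_product(u, u)) * math.sqrt(inner_product(v, v)))


def neg_euclidean(u: Vector, v: Vector) -> float:
    return -math.dist(u, v)  # negated so that "higher is better", like the other two


def rank(mention: Vector, entities: Dict[str, Vector],
         sim: Callable[[Vector, Vector], float]) -> List[str]:
    """Sort candidate entities by similarity to the mention-context vector, best first."""
    return sorted(entities, key=lambda e: sim(mention, entities[e]), reverse=True)


mention = [1.0, 0.0]
entities = {"A": [2.0, 0.0], "B": [0.6, 0.8], "C": [0.0, 1.0]}
for sim in (inner_product, cosine, neg_euclidean):
    print(sim.__name__, rank(mention, entities, sim))
# inner_product ['A', 'B', 'C']
# cosine ['A', 'B', 'C']
# neg_euclidean ['B', 'A', 'C']
```

The choice of similarity function can change the ranking: the inner product rewards the long vector A, while Euclidean distance prefers B, which lies closer to the mention vector.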

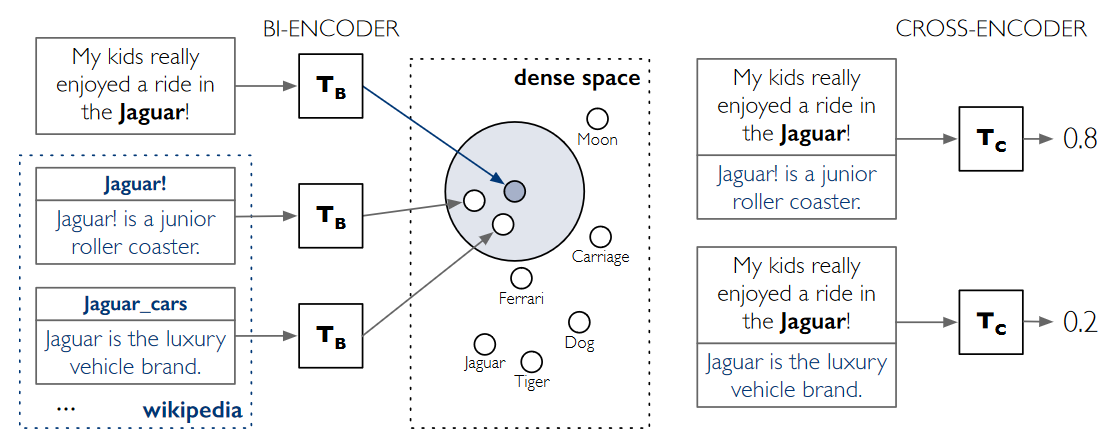

- The most popular architectures for PLM-based EL models are the bi-encoder and the cross-encoder.

- Timeline of papers on PLM-based Entity Linking: Gillick et al.@CoNLL'19, Agarwal et al.@arXiv'20, BLINK, Gillick et al.@EMNLP'20, MOLEMAN

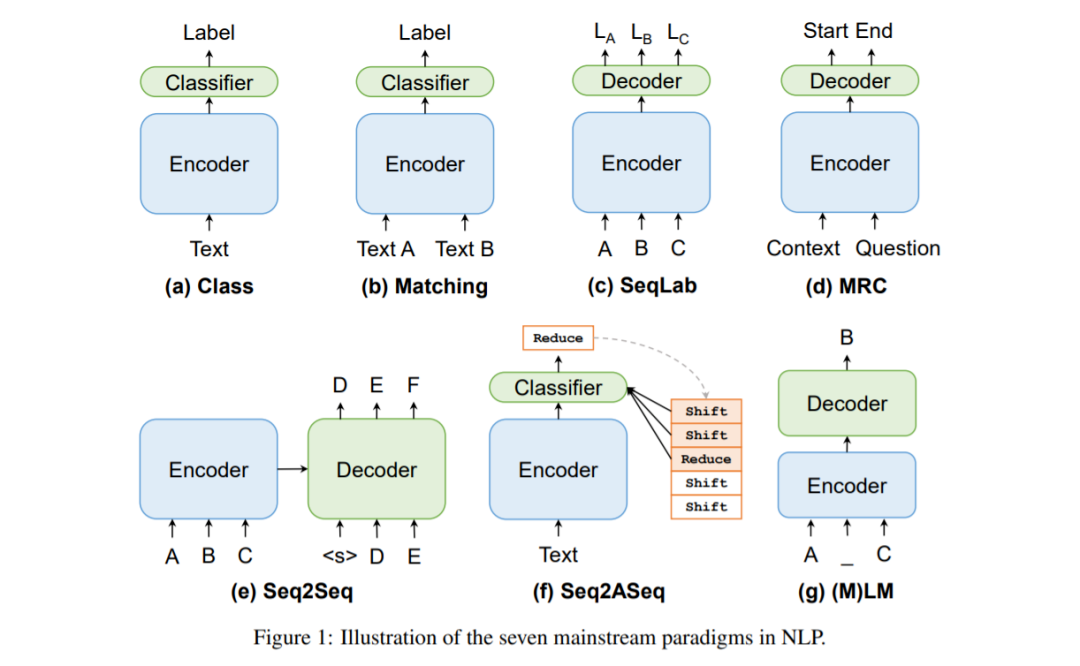

- EL is commonly viewed as a matching problem: most EL models compute the similarity between the mention context and each candidate entity, and select the entity with the highest similarity score as the answer.

- However, EL can also be tackled with other paradigms, e.g., Seq2Seq (GENRE, mGENRE, Heng Ji et al.@ACL 2022 (Findings)) and MRC (EntQA).

- What about other paradigms? Can they be applied to the EL problem?