- 🔥 🆕 December 2024: We open-sourced the latest version of the CogAgent model, CogAgent-9B-20241220. Compared to the previous version of CogAgent, CogAgent-9B-20241220 features significant improvements in GUI perception, reasoning accuracy, action space completeness, task universality, and generalization. It supports bilingual (Chinese and English) interaction through both screen captures and natural language.
- 🏆 June 2024: CogAgent was accepted by CVPR 2024 and recognized as a conference Highlight (top 3%).
- December 2023: We open-sourced the first GUI Agent, CogAgent (with the former repository available here), and published the corresponding paper: 📖 CogAgent Paper.

| Model | Model Download Links | Technical Documentation | Online Demo |
|---|---|---|---|
| cogagent-9b-20241220 | 🤗 HuggingFace 🤖 ModelScope 🟣 WiseModel 🧩 Modelers (Ascend) | 📄 Official Technical Blog 📘 Practical Guide (Chinese) | 🤗 HuggingFace Space 🤖 ModelScope Space 🧩 Modelers Space (Ascend) |

The CogAgent-9B-20241220 model is based on GLM-4V-9B, a bilingual open-source VLM base model. Through data collection and optimization, multi-stage training, and strategy improvements, CogAgent-9B-20241220 achieves significant advancements in GUI perception, inference prediction accuracy, action space completeness, and generalizability across tasks. The model supports bilingual (Chinese and English) interaction with both screenshots and language input. This version of the CogAgent model has already been applied in ZhipuAI's GLM-PC product. We hope the release of this model can assist researchers and developers in advancing the research and applications of GUI agents based on vision-language models.

The CogAgent-9b-20241220 model has achieved state-of-the-art results across multiple platforms and categories in GUI Agent tasks and GUI Grounding Benchmarks. In the CogAgent-9b-20241220 Technical Blog, we compared it against API-based commercial models (GPT-4o-20240806, Claude-3.5-Sonnet), commercial API + GUI Grounding models (GPT-4o + UGround, GPT-4o + OS-ATLAS), and open-source GUI Agent models (Qwen2-VL, ShowUI, SeeClick). The results demonstrate that CogAgent leads in GUI localization (Screenspot), single-step operations (OmniAct), the Chinese step-wise in-house benchmark (CogAgentBench-basic-cn), and multi-step operations (OSWorld), with only a slight disadvantage in OSWorld compared to Claude-3.5-Sonnet, which specializes in Computer Use, and GPT-4o combined with external GUI Grounding models.

Demo video (2024-12-24.14.01.04.mov): CogAgent wishes you a Merry Christmas! Let the large model automatically send Christmas greetings to your friends.

Demo video (2024-12-24.140421_compressed.mp4): Want to open an issue? Let CogAgent help you send an email.


- The model requires at least 29GB of VRAM for inference at `BF16` precision. Using `INT4` precision for inference is not recommended due to significant performance loss. The VRAM usage for `INT4` inference is about 8GB, while for `INT8` inference it is about 15GB. In the `inference/cli_demo.py` file, we have commented out the corresponding two lines. You can uncomment them and use `INT4` or `INT8` inference. This solution is only supported on NVIDIA devices.
- All GPU references above refer to A100 or H100 GPUs. For other devices, you need to calculate the required GPU/CPU memory accordingly.
- During SFT (Supervised Fine-Tuning), this codebase freezes the `Vision Encoder`, uses a batch size of 1, and trains on `8 * A100` GPUs. The total input tokens (including images, which account for `1600` tokens) add up to 2048 tokens. This codebase cannot conduct SFT fine-tuning without freezing the `Vision Encoder`. For LoRA fine-tuning, the `Vision Encoder` is not frozen; the batch size is 1, using `1 * A100` GPU. The total input tokens (including images, `1600` tokens) also amount to 2048 tokens. In the above setup, SFT fine-tuning requires at least `60GB` of GPU memory per GPU (with 8 GPUs), while LoRA fine-tuning requires at least `70GB` of GPU memory on a single GPU (cannot be split).
- `Ascend devices` have not been tested for SFT fine-tuning. We have only tested them on the `Atlas800` training server cluster. You need to modify the inference code accordingly based on the loading mechanism described in the `Ascend device` download link.
- Currently, we do not support inference with the `vLLM` framework. We will submit a PR as soon as possible to enable it.
- The online demo link does not support controlling computers; it only allows you to view the model's inference results. We recommend deploying the model locally.
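As a rough aid for the VRAM figures above, the following sketch picks the highest-fidelity precision that fits a given amount of GPU memory. The helper name `pick_precision` is hypothetical and not part of this repository; the thresholds are simply the approximate numbers quoted in the list and apply to A100/H100-class GPUs only.

```python
from typing import Optional

# Approximate inference VRAM needs in GB, taken from the notes above.
VRAM_REQUIRED_GB = {"BF16": 29, "INT8": 15, "INT4": 8}


def pick_precision(available_vram_gb: float) -> Optional[str]:
    """Return the highest-fidelity precision that fits, or None if none fits."""
    # Ordered from best to worst expected quality; INT4 is a last resort
    # because the notes above warn about significant performance loss.
    for precision in ("BF16", "INT8", "INT4"):
        if available_vram_gb >= VRAM_REQUIRED_GB[precision]:
            return precision
    return None


for vram in (80, 24, 12, 6):
    print(f"{vram} GB -> {pick_precision(vram)}")
```

This only encodes the inference figures; fine-tuning memory (60GB per GPU for SFT, 70GB for LoRA) has to be checked separately.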

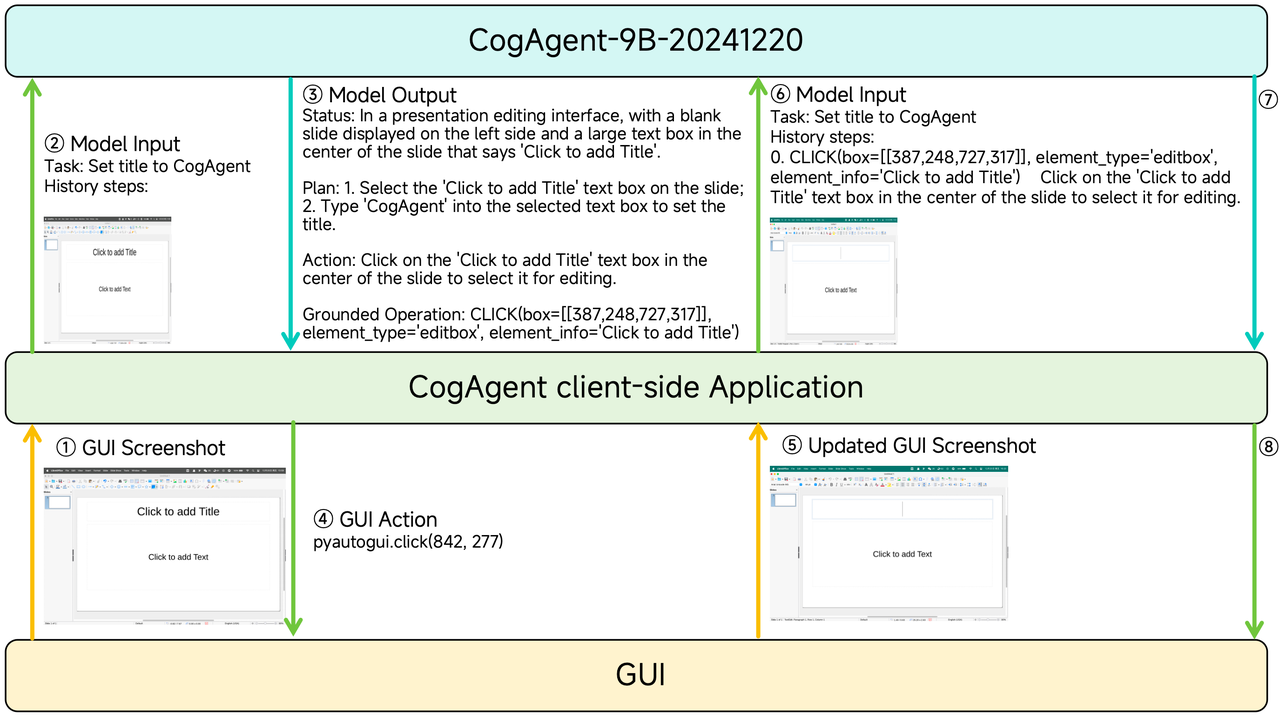

cogagent-9b-20241220 is an agent-type execution model rather than a conversational model. It does not support

continuous dialogue, but it does support a continuous execution history. (In other words, each time a new

conversation session needs to be started, and the past history should be provided to the model.) The workflow of

CogAgent is illustrated as following:

To achieve optimal GUI Agent performance, we have adopted a strict input-output format. Below is how users should format their inputs and feed them to the model, and how to interpret the model’s responses.

You can refer to app/client.py#L115 for constructing user input prompts. A minimal example of user input concatenation code is shown below:

```python
# identify_os, task, history_grounded_op_funcs and history_actions are
# assumed to be defined by the caller.
current_platform = identify_os()  # "Mac" or "WIN" or "Mobile". Pay attention to case sensitivity.
platform_str = f"(Platform: {current_platform})\n"
format_str = "(Answer in Action-Operation-Sensitive format.)\n"  # You can use other format to replace "Action-Operation-Sensitive"

history_str = "\nHistory steps: "
for index, (grounded_op_func, action) in enumerate(zip(history_grounded_op_funcs, history_actions)):
    history_str += f"\n{index}. {grounded_op_func}\t{action}"  # start from 0.

query = f"Task: {task}{history_str}\n{platform_str}{format_str}"  # Be careful about the \n
```

The concatenated Python string:

```
"Task: Search for doors, click doors on sale and filter by brands \"Mastercraft\".\nHistory steps: \n0. CLICK(box=[[352,102,786,139]], element_info='Search')\tLeft click on the search box located in the middle top of the screen next to the Menards logo.\n1. TYPE(box=[[352,102,786,139]], text='doors', element_info='Search')\tIn the search input box at the top, type 'doors'.\n2. CLICK(box=[[787,102,809,139]], element_info='SEARCH')\tLeft click on the magnifying glass icon next to the search bar to perform the search.\n3. SCROLL_DOWN(box=[[0,209,998,952]], step_count=5, element_info='[None]')\tScroll down the page to see the available doors.\n4. CLICK(box=[[280,708,710,809]], element_info='Doors on Sale')\tClick the \"Doors On Sale\" button in the middle of the page to view the doors that are currently on sale.\n(Platform: WIN)\n(Answer in Action-Operation format.)\n"
```

Printed prompt:

```
Task: Search for doors, click doors on sale and filter by brands "Mastercraft".
History steps: 
0. CLICK(box=[[352,102,786,139]], element_info='Search')	Left click on the search box located in the middle top of the screen next to the Menards logo.
1. TYPE(box=[[352,102,786,139]], text='doors', element_info='Search')	In the search input box at the top, type 'doors'.
2. CLICK(box=[[787,102,809,139]], element_info='SEARCH')	Left click on the magnifying glass icon next to the search bar to perform the search.
3. SCROLL_DOWN(box=[[0,209,998,952]], step_count=5, element_info='[None]')	Scroll down the page to see the available doors.
4. CLICK(box=[[280,708,710,809]], element_info='Doors on Sale')	Click the "Doors On Sale" button in the middle of the page to view the doors that are currently on sale.
(Platform: WIN)
(Answer in Action-Operation format.)
```

If you want to understand the meaning and representation of each field in detail, please continue reading or refer to the Practical Documentation (in Chinese), "Prompt Concatenation" section.

- `task` field

  The user’s task description, in text format similar to a prompt. This input instructs the `cogagent-9b-20241220` model on how to carry out the user’s request. Keep it concise and clear.

- `platform` field

  `cogagent-9b-20241220` supports agent operations on multiple platforms with graphical interfaces. We currently support three systems:

  - Windows 10, 11: Use the `WIN` field.
  - macOS 14, 15: Use the `Mac` field.
  - Android 13, 14, 15 (and other Android UI variants with similar GUI operations): Use the `Mobile` field.

  If your system is not among these, the effectiveness may be suboptimal. You can try using `Mobile` for mobile devices, `WIN` for Windows, or `Mac` for Mac.

- `format` field

  The format in which the user wants `cogagent-9b-20241220` to return data. We provide several options:

  - `Answer in Action-Operation-Sensitive format.`: The default demo return type in this repo. Returns the model’s actions, corresponding operations, and the sensitivity level.
  - `Answer in Status-Plan-Action-Operation format.`: Returns the model’s status, plan, actions, and corresponding operations.
  - `Answer in Status-Action-Operation-Sensitive format.`: Returns the model’s status, actions, corresponding operations, and sensitivity.
  - `Answer in Status-Action-Operation format.`: Returns the model’s status, actions, and corresponding operations.
  - `Answer in Action-Operation format.`: Returns the model’s actions and corresponding operations.

- `history` field

  The record of previous steps. The full query should be concatenated in the following order: `query = f'{task}{history}{platform}{format}'`

- `Continue` field

  CogAgent allows users to let the model `continue answering`. This requires users to append the `[Continue]\n` field after `{task}`. In such cases, the concatenation sequence and result should be as follows: `query = f'{task}[Continue]\n{history}{platform}{format}'`
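The field rules above can be wrapped into a single helper. The sketch below is one possible way to do it, not the code in `app/client.py`: the function name `build_query` and its parameter names are made up for illustration, and the `[Continue]` variant is deliberately left out.

```python
from typing import Sequence

VALID_PLATFORMS = ("Mac", "WIN", "Mobile")  # case-sensitive, as noted above


def build_query(
    task: str,
    history_grounded_op_funcs: Sequence[str],
    history_actions: Sequence[str],
    platform: str,
    answer_format: str = "Action-Operation-Sensitive",
) -> str:
    """Concatenate task, history, platform and format in the documented order."""
    if platform not in VALID_PLATFORMS:
        raise ValueError(f"platform must be one of {VALID_PLATFORMS}, got {platform!r}")
    if len(history_grounded_op_funcs) != len(history_actions):
        raise ValueError("each grounded operation needs a matching action")

    # "History steps: " is always present, even when there is no history yet.
    history_str = "\nHistory steps: "
    for index, (grounded_op, action) in enumerate(
        zip(history_grounded_op_funcs, history_actions)
    ):
        history_str += f"\n{index}. {grounded_op}\t{action}"  # steps start from 0

    platform_str = f"(Platform: {platform})\n"
    format_str = f"(Answer in {answer_format} format.)\n"
    return f"Task: {task}{history_str}\n{platform_str}{format_str}"


print(build_query("Please mark all my emails as read", [], [], "Mac"))
```

Validating the platform string up front is a design choice of this sketch; it catches casing mistakes such as `"mac"` before they reach the model.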

- Sensitive operations: Includes `<<敏感操作>>` (“sensitive operation”) and `<<一般操作>>` (“general operation”). These are only returned if you request the `Sensitive` format.
- `Plan`, `Status`, `Action` fields: Used to describe the model’s behavior and operations. Only returned if you request the corresponding fields. For example, if the format includes `Action`, then the model returns the `Action` field.
- General answer section: A summary that appears prior to the formatted answer.
- `Grounded Operation` field: Describes the model’s specific operations, including the location of the operation, the operation type, and the action details. The `box` attribute indicates the coordinate region for execution, `element_type` indicates the element type, and `element_info` describes the element. These details are wrapped within a “操作指令” (operation command). For the definition of the action space, please refer to here.
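Because the returned fields follow the requested format name, the expected fields can be derived from that name. The sketch below assumes that each hyphen-separated component maps to one output field, which matches the descriptions above but is not taken from the repository code; `expected_fields` and `TOKEN_TO_FIELD` are hypothetical names.

```python
from typing import List

# Assumed mapping from a component of the format name to the output it requests.
TOKEN_TO_FIELD = {
    "Status": "Status",
    "Plan": "Plan",
    "Action": "Action",
    "Operation": "Grounded Operation",
    "Sensitive": "Sensitivity marker",
}


def expected_fields(format_name: str) -> List[str]:
    """List the outputs a format such as 'Status-Action-Operation' should contain."""
    fields = []
    for token in format_name.split("-"):
        if token not in TOKEN_TO_FIELD:
            raise ValueError(f"unknown format component: {token!r}")
        fields.append(TOKEN_TO_FIELD[token])
    return fields


print(expected_fields("Status-Action-Operation-Sensitive"))
```

A check like this can be used to flag responses that are missing a field the prompt asked for.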

Suppose the user wants to mark all emails as read. The user is on a Mac and wants the model to return results in Action-Operation-Sensitive format. The properly concatenated prompt should be:

```
Task: Please mark all my emails as read
History steps:
(Platform: Mac)
(Answer in Action-Operation-Sensitive format.)
```

Note: even if there are no historical actions, "History steps:" still needs to be appended in the prompt.

Below are *sample outputs* for different format requirements:

Answer in Action-Operation-Sensitive format:

```
Action: Click the 'Mark all as read' button in the top toolbar of the page to mark all emails as read.
Grounded Operation: CLICK(box=[[219,186,311,207]], element_type='Clickable text', element_info='Mark all emails as read')
<<一般操作>>
```

Answer in Status-Plan-Action-Operation format:

```
Status: Currently in the email interface [[0, 2, 998, 905]], with the email categories on the left [[1, 216, 144, 570]], and the inbox in the center [[144, 216, 998, 903]]. The "Mark all as read" button has been clicked [[223, 178, 311, 210]].
Plan: Future tasks: 1. Click the 'Mark all as read' button; 2. Task complete.
Action: Click the "Mark all as read" button at the top center of the inbox page to mark all emails as read.
Grounded Operation: CLICK(box=[[219,186,311,207]], element_type='Clickable text', element_info='Mark all emails as read')
```

Answer in Status-Action-Operation-Sensitive format:

```
Status: Currently in the email interface [[0, 2, 998, 905]], with the email categories on the left [[1, 216, 144, 570]], and the inbox in the center [[144, 216, 998, 903]]. The "Mark all as read" button has been clicked [[223, 178, 311, 210]].
Action: Click the "Mark all as read" button at the top center of the inbox page to mark all emails as read.
Grounded Operation: CLICK(box=[[219,186,311,207]], element_type='Clickable text', element_info='Mark all emails as read')
<<一般操作>>
```

Answer in Status-Action-Operation format:

```
Status: Currently in the email interface [[0, 2, 998, 905]], with the email categories on the left [[1, 216, 144, 570]], and the inbox in the center [[144, 216, 998, 903]]. The "Mark all as read" button has been clicked [[223, 178, 311, 210]].
Action: Click the "Mark all as read" button at the top center of the inbox page to mark all emails as read.
Grounded Operation: CLICK(box=[[219,186,311,207]], element_type='Clickable text', element_info='Mark all emails as read')
```

Answer in Action-Operation format:

```
Action: Right-click the first email in the left email list to open the action menu.
Grounded Operation: RIGHT_CLICK(box=[[154,275,343,341]], element_info='[AXCell]')
```

- This model is not a conversational model and does not support continuous dialogue. Please send specific commands and reference our recommended method for concatenating the history.

- The model requires images as input; pure text conversation cannot achieve GUI Agent tasks.

- The model’s output adheres to a strict format. Please parse it strictly according to our requirements. The output is in string format; JSON output is not supported.
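Since the output is a plain string rather than JSON, a caller has to extract the fields with text matching. The following is a minimal sketch based only on the sample outputs shown in this README; it assumes each field sits on its own line and that the operation has the shape `NAME(...)`. The function `parse_response` and the result keys `operation`, `box`, and `sensitive` are names chosen for this example, not part of the repository.

```python
import re
from typing import Dict

FIELD_RE = re.compile(
    r"^(Status|Plan|Action|Grounded Operation):[ \t]*(.*)$", re.MULTILINE
)
OPERATION_RE = re.compile(r"^([A-Z_]+)\((.*)\)$")
BOX_RE = re.compile(r"box=\[\[(\d+),\s*(\d+),\s*(\d+),\s*(\d+)\]\]")
SENSITIVITY_RE = re.compile(r"<<(敏感操作|一般操作)>>")


def parse_response(text: str) -> Dict[str, object]:
    """Extract the labelled fields, operation name, box and sensitivity flag."""
    parsed: Dict[str, object] = {
        name: value.strip() for name, value in FIELD_RE.findall(text)
    }

    grounded = parsed.get("Grounded Operation")
    if grounded is None:
        raise ValueError("no 'Grounded Operation' line found")
    op_match = OPERATION_RE.match(str(grounded))
    if op_match is None:
        raise ValueError(f"unexpected operation syntax: {grounded!r}")

    parsed["operation"] = op_match.group(1)
    box_match = BOX_RE.search(op_match.group(2))
    parsed["box"] = tuple(int(v) for v in box_match.groups()) if box_match else None

    # None means the marker was not requested or not returned.
    marker = SENSITIVITY_RE.search(text)
    parsed["sensitive"] = None if marker is None else marker.group(1) == "敏感操作"
    return parsed


sample = (
    "Action: Click the 'Mark all as read' button in the top toolbar.\n"
    "Grounded Operation: CLICK(box=[[219,186,311,207]], "
    "element_type='Clickable text', element_info='Mark all emails as read')\n"
    "<<一般操作>>"
)
result = parse_response(sample)
print(result["operation"], result["box"], result["sensitive"])
```

Any summary text that precedes the formatted answer is ignored, because only lines starting with a known field label are matched.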

Make sure you have installed Python 3.10.16 or above, and then install the following dependencies:

```shell
pip install -r requirements.txt
```

To run local inference based on `transformers`, you can run the command below:

```shell
python inference/cli_demo.py --model_dir THUDM/cogagent-9b-20241220 --platform "Mac" --max_length 4096 --top_k 1 --output_image_path ./results --format_key status_action_op_sensitive
```

This is an interactive command-line program. You will need to provide the path to your images. If the model returns results containing bounding boxes, it will output an image with those bounding boxes, indicating the region where the operation should be executed. The image is saved to `output_image_path`, with the file name `{your_input_image_name}_{round}.png`. The `format_key` indicates in which format you want the model to respond. The `platform` field specifies which platform you are using (e.g., `Mac`). Therefore, all uploaded screenshots must be from macOS if `platform` is set to `Mac`.
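To act on a returned bounding box, its values have to be mapped onto the actual screenshot. The sketch below assumes that `box` coordinates are expressed in thousandths of the image width and height. This README does not state that, so treat the `scale=1000` default as an assumption to verify against the demo code. The helper names are hypothetical.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]


def box_to_pixels(box: Box, width: int, height: int, scale: int = 1000) -> Box:
    """Map an (x1, y1, x2, y2) box from the assumed 0..scale range to pixels."""
    x1, y1, x2, y2 = box
    return (
        x1 * width // scale,
        y1 * height // scale,
        x2 * width // scale,
        y2 * height // scale,
    )


def box_center(pixel_box: Box) -> Tuple[int, int]:
    """Return the point a click would most naturally target."""
    x1, y1, x2, y2 = pixel_box
    return ((x1 + x2) // 2, (y1 + y2) // 2)


pixel_box = box_to_pixels((219, 186, 311, 207), width=2000, height=1000)
print(pixel_box, box_center(pixel_box))
```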

If you want to run an online web demo, which supports continuous image uploads for interactive inference, you can run:

```shell
python inference/web_demo.py --host 0.0.0.0 --port 7860 --model_dir THUDM/cogagent-9b-20241220 --format_key status_action_op_sensitive --platform "Mac" --output_dir ./results
```

This code provides the same experience as the HuggingFace Space online demo. The model will return the corresponding bounding boxes and execution categories.

We have prepared a basic demo app for developers to illustrate the GUI capabilities of cogagent-9b-20241220. The demo shows how to deploy the model on a GPU-equipped server and run the cogagent-9b-20241220 model locally to perform automated GUI operations.
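An app of this kind has to drive the model one step at a time, because the model keeps no conversation state and must receive the execution history with every call. The loop below is a sketch of that idea and is not the demo app's code: `capture_screenshot` and `predict_step` are hypothetical callables supplied by the caller, and returning `None` to stop is a convention invented for this example.

```python
from typing import Callable, List, Optional, Tuple

Step = Tuple[str, str]  # (grounded operation, action description)


def run_episode(
    task: str,
    capture_screenshot: Callable[[], str],
    predict_step: Callable[[str, List[Step], str], Optional[Step]],
    max_steps: int = 10,
) -> List[Step]:
    """Call the model once per step, resending the full history each time."""
    history: List[Step] = []
    for _ in range(max_steps):
        screenshot_path = capture_screenshot()
        # Each call is an independent session, so the model only knows
        # what is passed in here.
        step = predict_step(task, list(history), screenshot_path)
        if step is None:  # hypothetical stop signal for this sketch
            break
        history.append(step)
    return history


seen_history_lengths: List[int] = []


def fake_predict(task: str, history: List[Step], screenshot_path: str) -> Optional[Step]:
    """Stand-in for a real model call; stops after two steps."""
    seen_history_lengths.append(len(history))
    if len(history) == 2:
        return None
    index = len(history)
    return (f"CLICK(box=[[0,0,10,10]], element_info='step {index}')", f"Click element {index}.")


steps = run_episode("demo task", lambda: "screenshot.png", fake_predict)
print(seen_history_lengths)  # [0, 1, 2]
```

A real loop would also execute each returned operation on the device before capturing the next screenshot, and should check the sensitivity marker before doing so.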

We cannot guarantee the safety of AI behavior; please exercise caution when using it.

This example is only for academic reference. We assume no legal responsibility for any issues resulting from this example.

If you are interested in this APP, feel free to check out the documentation.

If you are interested in fine-tuning the cogagent-9b-20241220 model, please refer to here.

In November 2023, we released the first generation of CogAgent. You can find related code and model weights in the CogVLM & CogAgent Official Repository.

|

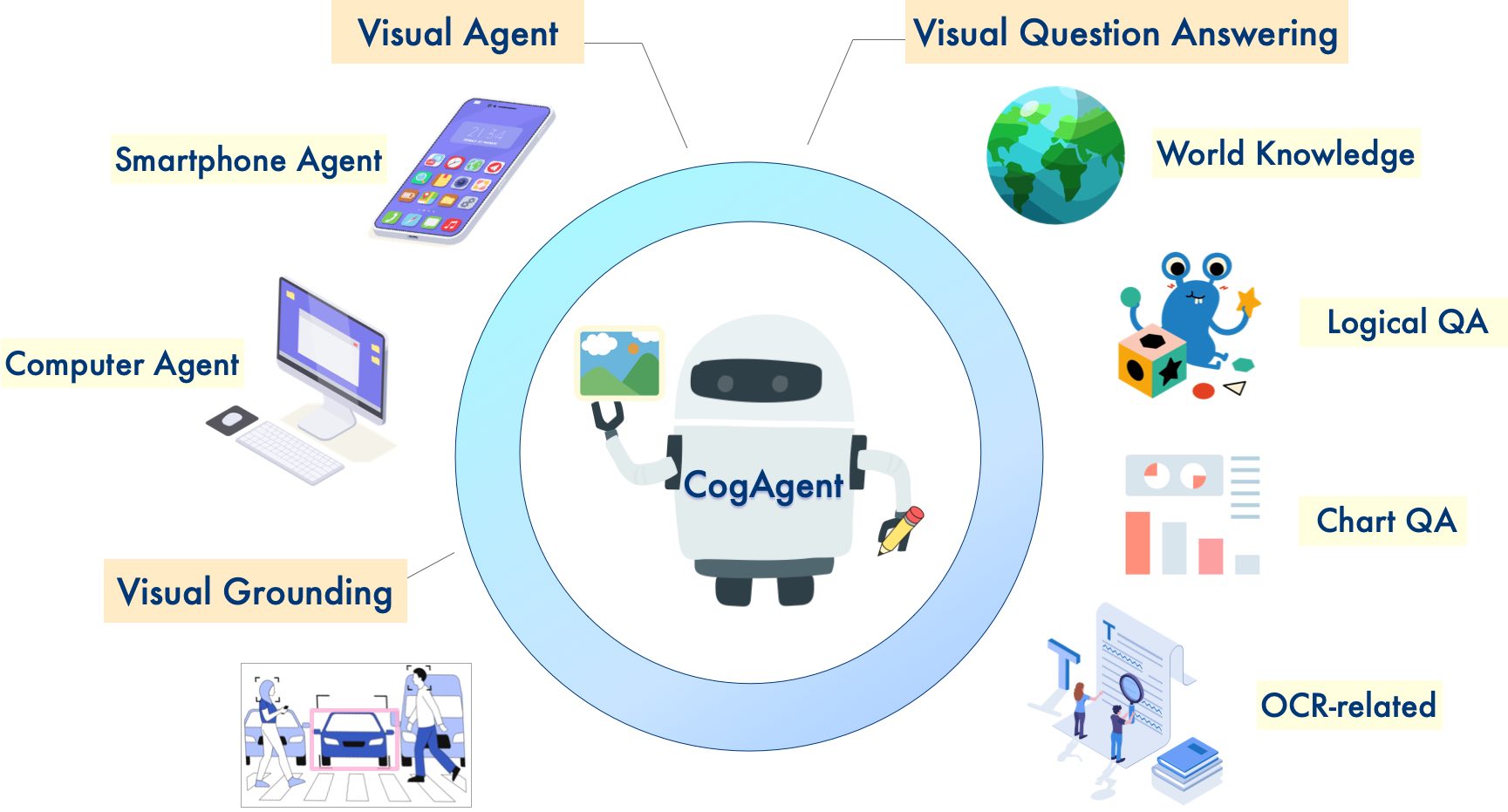

📖 Paper: CogVLM: Visual Expert for Pretrained Language Models CogVLM is a powerful open-source Vision-Language Model (VLM). CogVLM-17B has 10B visual parameters and 7B language parameters, supporting image understanding at a resolution of 490x490, as well as multi-round dialogue. CogVLM-17B achieves state-of-the-art performance on 10 classic multimodal benchmarks, including NoCaps, Flicker30k captioning, RefCOCO, RefCOCO+, RefCOCOg, Visual7W, GQA, ScienceQA, VizWiz VQA, and TDIUC. |

📖 Paper: CogAgent: A Visual Language Model for GUI Agents CogAgent is an open-source vision-language model improved upon CogVLM. CogAgent-18B has 11B visual parameters and 7B language parameters. It supports image understanding at a resolution of 1120x1120. Building on CogVLM’s capabilities, CogAgent further incorporates a GUI image agent ability. CogAgent-18B delivers state-of-the-art general performance on 9 classic vision-language benchmarks, including VQAv2, OK-VQ, TextVQA, ST-VQA, ChartQA, infoVQA, DocVQA, MM-Vet, and POPE. It also significantly outperforms existing models on GUI operation datasets such as AITW and Mind2Web. |

- The Apache2.0 LICENSE applies to the use of the code in this GitHub repository.

- For the model weights, please follow the Model License.

If you find our work helpful, please consider citing the following paper:

```bibtex
@misc{hong2023cogagent,
      title={CogAgent: A Visual Language Model for GUI Agents},
      author={Wenyi Hong and Weihan Wang and Qingsong Lv and Jiazheng Xu and Wenmeng Yu and Junhui Ji and Yan Wang and Zihan Wang and Yuxiao Dong and Ming Ding and Jie Tang},
      year={2023},
      eprint={2312.08914},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

R&D Institutions: Tsinghua University, Zhipu AI

Team members: Wenyi Hong, Junhui Ji, Lihang Pan, Yuanchang Yue, Changyu Pang, Siyan Xue, Guo Wang, Weihan Wang, Jiazheng Xu, Shen Yang, Xiaotao Gu, Yuxiao Dong, Jie Tang

Acknowledgement: We would like to thank the Zhipu AI data team for their strong support, including Xiaohan Zhang, Zhao Xue, Lu Chen, Jingjie Du, Siyu Wang, Ying Zhang, and all annotators. They worked hard to collect and annotate the training and testing data of the CogAgent model. We also thank Yuxuan Zhang, Xiaowei Hu, and Hao Chen from the Zhipu AI open source team for their engineering efforts in open sourcing the model.