Technique adopted in AutoGLM, a series of Phone Use and Web Browser Use Foundation Agents

📃 Paper | 🤗 WebRL-GLM-4-9B | WebRL-LLaMA-3.1-8B | ModelScope

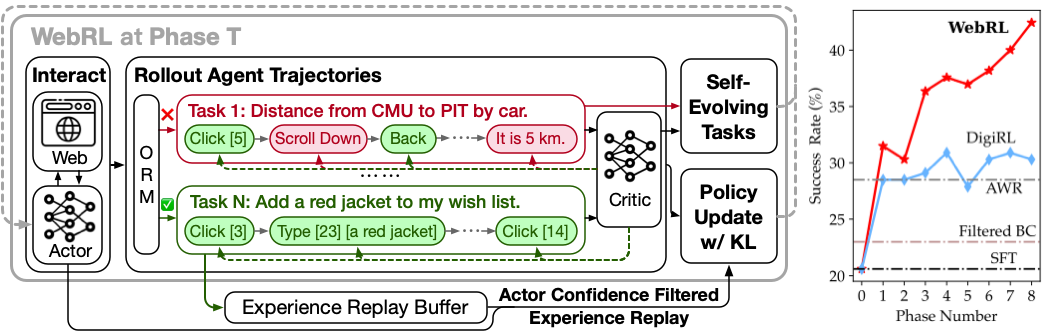

WebRL is a self-evolving online curriculum learning framework for training web agents, targeting the WebArena environment.

First, create a conda environment and install all pip package requirements.

conda create -n webrl python==3.10

conda activate webrl

cd WebRL

pip install -e .

The WebRL-GLM-4-9B checkpoint has been released (see the 🤗 WebRL-GLM-4-9B link above) and is the one we use.

We will also release the checkpoint of the ORM (outcome-supervised reward model) soon.
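Before moving on to training, it can help to confirm that the `webrl` environment from the setup steps above is the one actually in use. The following is a minimal sketch, not part of the WebRL repository: the helper `is_python_310` is a hypothetical name introduced here, and the check only assumes that conda exports `CONDA_DEFAULT_ENV` for the active environment.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of WebRL): succeeds only for Python 3.10 / 3.10.x.
is_python_310() {
  case "$1" in
    3.10|3.10.*) return 0 ;;
    *) return 1 ;;
  esac
}

# conda sets CONDA_DEFAULT_ENV to the name of the active environment.
echo "active conda env: ${CONDA_DEFAULT_ENV:-none}"

if command -v python >/dev/null 2>&1; then
  # Ask the interpreter on PATH for its own version string.
  version="$(python -c 'import platform; print(platform.python_version())')"
  if is_python_310 "$version"; then
    echo "python $version: OK"
  else
    echo "python $version: expected 3.10.x" >&2
  fi
else
  echo "python not found on PATH" >&2
fi
```

If the reported environment is not `webrl`, run `conda activate webrl` again before installing or training.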

We use LLaMA-Factory to train the SFT baseline, which is the starting model for WebRL. We release the code and data used for training. You can train the SFT baseline with the following commands:

cd LLaMA-Factory

bash run.sh examples/train_full/llama3_full_policy_web.yaml

After training the SFT baseline, use it as the initial model for both the actor and the critic. You can then train WebRL with the following command:

bash run_multinode.sh

This command trains the actor and critic in each phase.
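Since WebRL training starts from the SFT baseline, one option is to guard the launch with a check that the SFT checkpoint exists. The sketch below is an assumption-laden illustration: the `SFT_CKPT` variable, its default path, and the `has_checkpoint` helper are all hypothetical, and the source does not say how `run_multinode.sh` is told where the initial model lives, so that still has to be configured separately.

```shell
#!/usr/bin/env bash
# Hypothetical path: point this at wherever your SFT run wrote its checkpoint.
SFT_CKPT="${SFT_CKPT:-./sft_baseline_checkpoint}"

# Hypothetical helper (not part of WebRL): succeeds only if the directory
# exists and contains at least one entry.
has_checkpoint() {
  [ -d "$1" ] && [ -n "$(ls -A "$1" 2>/dev/null)" ]
}

if has_checkpoint "$SFT_CKPT"; then
  echo "found SFT checkpoint at $SFT_CKPT, launching training"
  bash run_multinode.sh
else
  echo "no SFT checkpoint at $SFT_CKPT, train the SFT baseline first" >&2
fi
```

The guard only prevents launching a multi-node job without a starting model; it does not validate the checkpoint contents.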

You can generate new instructions with the following command:

python scripts/gen_task.py

The instructions and scripts for interacting with WebArena are provided in VAB-WebArena-Lite.

You can implement the interaction process of WebRL according to the Evaluating in WebRL Setting (Text Modal) section of VAB-WebArena-Lite.

@article{qi2024webrl,
  title={WebRL: Training LLM Web Agents via Self-Evolving Online Curriculum Reinforcement Learning},
  author={Zehan Qi and Xiao Liu and Iat Long Iong and Hanyu Lai and Xueqiao Sun and Xinyue Yang and Jiadai Sun and Yu Yang and Shuntian Yao and Tianjie Zhang and Wei Xu and Jie Tang and Yuxiao Dong},
  journal={arXiv preprint arXiv:2411.02337},
  year={2024},
}