Abstract:

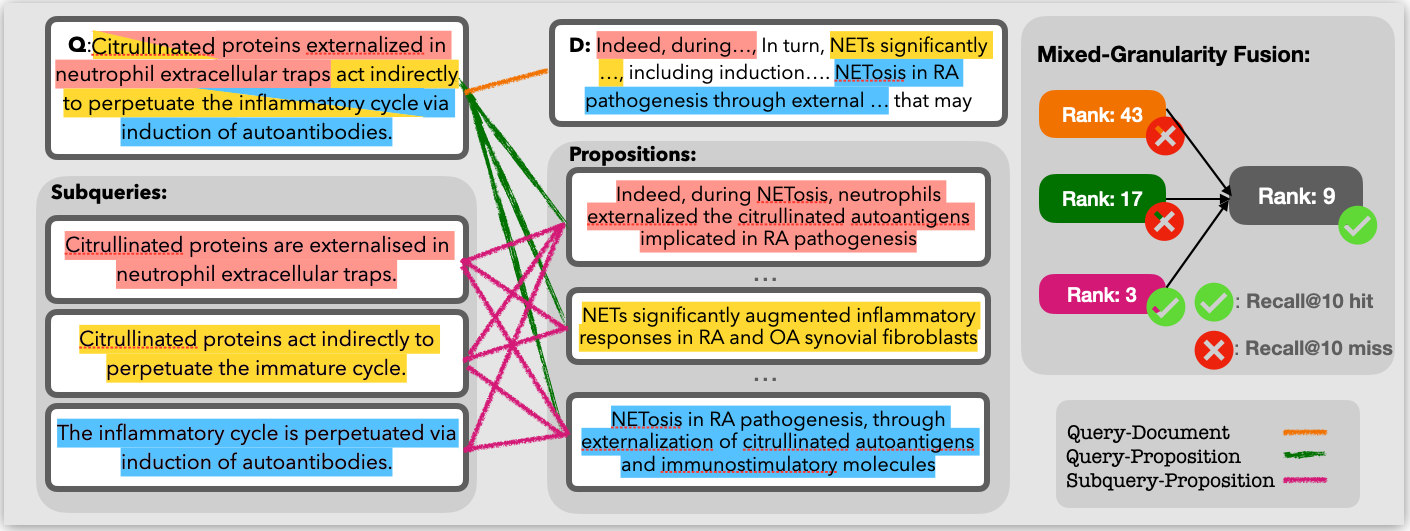

Recent studies show the growing significance of document retrieval for LLM generation in the scientific domain, where it bridges the models' knowledge gap; however, dense retrievers struggle with domain-specific retrieval and complex query-document relationships. To address these challenges, MixGR improves dense retrievers' awareness of query-document matching across various levels of granularity using a zero-shot approach, fusing various metrics into a unified score for comprehensive query-document similarity. Our experiments demonstrate that MixGR outperforms previous retrieval methods by 22.6% and 10.4% on nDCG@5 for unsupervised and supervised retrievers, respectively, and enhances efficacy in two downstream scientific question-answering tasks, boosting LLM applications in the scientific domain.

Install the environment based on requirements.txt:

`pip install -r requirements.txt`

In our paper, we conduct experiments on four scientific datasets: NFCorpus, SciDocs, SciFact, and SciQ.

In the folder data/, we include:

- queries including multiple subqueries and their decomposed subqueries;

- the documents and their decomposed propositions;

- qrels, recording the gold query-document relevance mappings.

For the procedure of subquery and proposition generation, please refer to the instructions on Query and Document Decomposition in the following section.

For query and document decomposition, we apply the off-the-shelf tool propositioner, which is hosted on Hugging Face. To set up the environment for propositioner, please refer to the original repository.

Before starting, we suggest setting the environment variable $ROOT_DIR to the current directory. Additionally, corpus indexing and query searching depend on pyserini.

For query and document decomposition, please refer to script/decomposition/query.sh and script/decomposition/document.sh, respectively.

The tool itself is not perfect. For potential errors, please refer to the error analysis in the Appendix of the paper.

Both documents and propositions are indexed through the default framework pyserini.

Additionally, we need to generate the BM25 index for both documents and propositions. This avoids keeping the whole corpus in memory, since the text can be fetched from disk instead.

Given queries/subqueries and documents/propositions, we calculate the quadrant of combinations between the different granularities of queries and documents.

| | Document | Proposition |
|---|---|---|
| Query | query-document | query-proposition |
| Subquery | subquery-document | subquery-proposition |
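The four granularity combinations in the table can be illustrated with a minimal sketch. It is not the repository's implementation: `toy_similarity` is a hypothetical bag-of-words stand-in for a dense retriever, and the aggregation choices (taking the best-matching proposition and averaging over subqueries) are assumptions made for illustration only.

```python
import math
from collections import Counter
from typing import Callable, Dict, List


def toy_similarity(a: str, b: str) -> float:
    """Cosine similarity over word counts (hypothetical stand-in for a dense retriever)."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[t] * vb[t] for t in va)
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def quadrant_scores(
    query: str,
    subqueries: List[str],
    document: str,
    propositions: List[str],
    sim: Callable[[str, str], float] = toy_similarity,
) -> Dict[str, float]:
    """Score one query-document pair at the four granularity combinations."""

    def best_proposition(text: str) -> float:
        # Assumption: a text is matched against its best-scoring proposition.
        return max((sim(text, p) for p in propositions), default=0.0)

    def mean(values: List[float]) -> float:
        # Assumption: subquery-level scores are averaged.
        return sum(values) / len(values) if values else 0.0

    return {
        "query-document": sim(query, document),
        "query-proposition": best_proposition(query),
        "subquery-document": mean([sim(s, document) for s in subqueries]),
        "subquery-proposition": mean([best_proposition(s) for s in subqueries]),
    }


scores = quadrant_scores(
    query="a b",
    subqueries=["a", "b"],
    document="a b c",
    propositions=["a", "c"],
)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

In practice the similarity function would be the dense retriever under evaluation, and each of the four scores would yield its own ranking over the corpus.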

As mentioned in the paper, we retrieve the top-$k$ results using scripts/rrf/union.sh.

Lastly, we merge the retrieval results from the different query and document granularities through Reciprocal Rank Fusion (RRF).
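As a rough illustration of the fusion step, the sketch below implements standard reciprocal rank fusion over several ranked lists of document IDs. The function name, the input format, and the smoothing constant `k = 60` (a commonly used default) are assumptions; the repository's scripts may use different settings.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> Dict[str, float]:
    """Fuse ranked lists: each document receives sum(1 / (k + rank)) over the lists."""
    fused: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        # Ranks start at 1, so the top document of a list contributes 1 / (k + 1).
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    # Return documents ordered from highest to lowest fused score.
    return dict(sorted(fused.items(), key=lambda item: item[1], reverse=True))


# Hypothetical rankings, e.g. one per granularity combination.
fused = reciprocal_rank_fusion([
    ["d1", "d2", "d3"],
    ["d2", "d1"],
    ["d2", "d3"],
])
print(list(fused))
```

Because RRF only uses ranks, it can combine rankings whose raw similarity scores are not directly comparable.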

The results presented in the paper are covered by result_collection.ipynb, where the evaluation is based on the package beir.
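For readers unfamiliar with the nDCG@5 metric reported in the paper, here is a minimal, self-contained sketch of nDCG@k for a single query. It is not the beir implementation used in the notebook; it assumes linear gain with a log2 rank discount, and the function name and input format are hypothetical.

```python
import math
from typing import Dict, List


def ndcg_at_k(ranked_doc_ids: List[str], relevance: Dict[str, int], k: int = 5) -> float:
    """nDCG@k for one query, assuming linear gain and a log2 rank discount."""

    def dcg(gains: List[int]) -> float:
        # Position i (0-based) is discounted by log2(i + 2), i.e. log2(rank + 1).
        return sum(g / math.log2(i + 2) for i, g in enumerate(gains))

    # Gains of the retrieved documents; unjudged documents count as non-relevant.
    gains = [relevance.get(doc_id, 0) for doc_id in ranked_doc_ids[:k]]
    # The ideal ranking places the most relevant judged documents first.
    ideal_gains = sorted(relevance.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal_gains)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical example: the only relevant document is retrieved at rank 2.
print(ndcg_at_k(["x", "d1"], {"d1": 1}))
```

Averaging this value over all queries gives the corpus-level figure; the notebook's numbers should be taken from beir itself rather than from this sketch.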

This repository contains experimental software intended to provide supplementary details for the corresponding publication. If you encounter any issues, please contact Fengyu Cai.

The software in this repository is licensed under the Apache License, Version 2.0. See LICENSE for the full license text.

@misc{cai2024textttmixgr,
      title={$\texttt{MixGR}$: Enhancing Retriever Generalization for Scientific Domain through Complementary Granularity},
      author={Fengyu Cai and Xinran Zhao and Tong Chen and Sihao Chen and Hongming Zhang and Iryna Gurevych and Heinz Koeppl},
      year={2024},
      eprint={2407.10691},
      archivePrefix={arXiv},
      primaryClass={cs.IR}
}