This package is an unofficial PyBrain extension for multi-agent reinforcement learning (MARL) in general sum stochastic games (GSSGs). It provides 1) a framework for modeling general sum stochastic games and 2) multi-agent reinforcement learning algorithms for them.

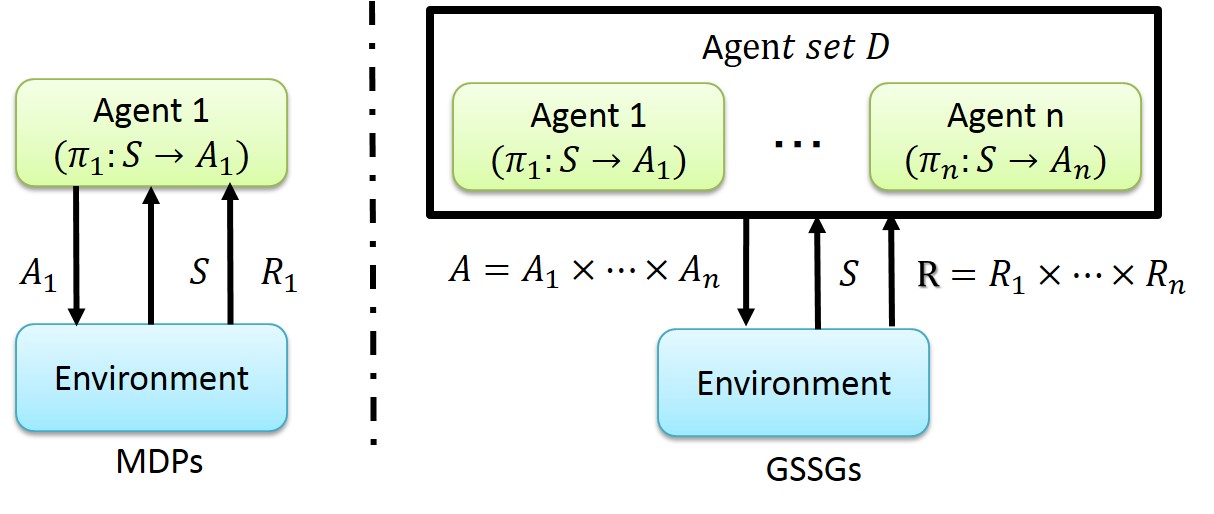

GSSGs generalize Markov decision processes (MDPs) to multi-agent settings and are represented as a tuple <D, S, A, T, R> (right side of the figure). D is the set of agents, S is the state of the environment, A is the joint action of all agents, T is the state transition function, and R is the joint reward, with one entry per agent. In contrast to MDPs, GSSGs allow multiple agents to affect the environment and receive rewards simultaneously. Many real-world phenomena can be modeled as GSSGs (e.g., trading in a marketplace, negotiation among stakeholders, or collaborative tasks for robots).
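The tuple above can be sketched in plain Python. This is only an illustration of the definition, not code from the package: the `StochasticGame` container, the `step` helper, and the prisoner's dilemma payoffs are all hypothetical names and values chosen for the example.

```python
import random
from collections import namedtuple

# Hypothetical container for the tuple <D, S, A, T, R>; not part of pybrainSG.
# "actions" holds the per-agent action set; a joint action is one action per agent.
StochasticGame = namedtuple(
    "StochasticGame", ["agents", "states", "actions", "transition", "reward"]
)

# Illustrative prisoner's dilemma payoffs: joint action -> one reward per agent.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def transition(state, joint_action):
    """T: map (state, joint action) to a distribution over next states."""
    return {"s0": 1.0}  # single-state game, so the state never changes


def reward(state, joint_action):
    """R: map (state, joint action) to a tuple with one reward per agent."""
    return PAYOFFS[joint_action]


game = StochasticGame(
    agents=(0, 1),
    states=("s0",),
    actions=("C", "D"),
    transition=transition,
    reward=reward,
)


def step(game, state, joint_action, rng=random):
    """Sample the next state from T and return it with the joint reward."""
    probabilities = game.transition(state, joint_action)
    threshold = rng.random()
    cumulative = 0.0
    next_state = None
    for candidate, probability in probabilities.items():
        next_state = candidate  # falls back to the last candidate on rounding error
        cumulative += probability
        if threshold < cumulative:
            break
    return next_state, game.reward(state, joint_action)


next_state, rewards = step(game, "s0", ("C", "D"))
```

Both agents act in the same step and each receives its own reward, which is the difference from a single-agent MDP described above.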

MARL is used to learn agent policies in GSSGs.

To use this package, we need to 1) install all requirements, 2) implement a GSSG that specifies the target domain, and 3) apply MARL to the implemented GSSG to learn agent policies.

- Python 2.7.6

- Numpy 1.11.0rc1+

- Scipy 0.17.0+

- PyBrain 0.3.3+

Implement a class extending EpisodicTaskSG (pybrainSG.rl.environments.episodicSG) and a class extending Environment (pybrain.rl.environments.environment). Example implementations are in the following package:

- pybrainSG.rl.examples.tasks

For example, "gridgames.py" provides examples of grid-world domains, and "staticgame.py" provides examples of bi-matrix game domains.
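As a rough idea of what such a pair of classes looks like, here is a self-contained sketch of a bi-matrix game. It does not import PyBrain or pybrainSG: the method names (`getSensors`, `performAction`, `reset`, `getObservation`, `getReward`, `isFinished`) are modeled on PyBrain's Environment and episodic task classes, but the class names are hypothetical and the actual EpisodicTaskSG interface may differ, so check "staticgame.py" for the real thing.

```python
class MatrixGameEnvironment(object):
    """Stand-in with the method names of PyBrain's Environment.

    Hypothetical class: a real implementation would extend
    pybrain.rl.environments.environment.Environment.
    """

    def __init__(self, payoffs):
        # payoffs maps a joint action (one action per agent) to a reward tuple.
        self.payoffs = payoffs
        self.last_joint_action = None

    def getSensors(self):
        # A static game has a single state, encoded here as [0].
        return [0]

    def performAction(self, joint_action):
        self.last_joint_action = tuple(joint_action)

    def reset(self):
        self.last_joint_action = None


class MatrixGameTask(object):
    """Stand-in for a task class; a real one would extend EpisodicTaskSG.

    Assumption: the real class may use different method signatures.
    """

    def __init__(self, environment):
        self.env = environment

    def getObservation(self):
        return self.env.getSensors()

    def performAction(self, joint_action):
        self.env.performAction(joint_action)

    def getReward(self):
        # One reward per agent for the most recent joint action.
        return self.env.payoffs[self.env.last_joint_action]

    def isFinished(self):
        # A static game ends after a single joint action.
        return self.env.last_joint_action is not None

    def reset(self):
        self.env.reset()


# Matching pennies: agent 0 wins when the actions match, agent 1 otherwise.
MATCHING_PENNIES = {
    (0, 0): (1, -1),
    (0, 1): (-1, 1),
    (1, 0): (-1, 1),
    (1, 1): (1, -1),
}

task = MatrixGameTask(MatrixGameEnvironment(MATCHING_PENNIES))
started_finished = task.isFinished()
task.performAction((0, 1))
joint_reward = task.getReward()
```

The environment holds the game state and the task turns it into observations and per-agent rewards, which mirrors the split between the two classes described above.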

To apply MARL to the implemented GSSGs, we need to construct an agent set and an experiment. Examples of this construction are in the following folder:

- pybrainSG.rl.examples

For example, "example_gridgames.py" in the "ceq" package shows how to use one of the Correlated-Q learning implementations in the grid game domain.
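To show what an agent set and an experiment loop amount to, here is a minimal self-contained sketch. It deliberately uses plain independent Q-learning on a single-state game, not the package's Correlated-Q learners, and it does not use the pybrainSG API: `IndependentQAgent`, `run_experiment`, and the payoff table are hypothetical names made up for this example.

```python
import random

# Illustrative prisoner's dilemma: action 0 = cooperate, action 1 = defect.
PAYOFFS = {
    (0, 0): (3, 3),
    (0, 1): (0, 5),
    (1, 0): (5, 0),
    (1, 1): (1, 1),
}


class IndependentQAgent(object):
    """Epsilon-greedy Q-learner that ignores the other agents (hypothetical)."""

    def __init__(self, n_actions, alpha=0.1, epsilon=0.1, rng=None):
        self.q = [0.0] * n_actions
        self.alpha = alpha
        self.epsilon = epsilon
        self.rng = rng or random.Random()

    def act(self):
        # Explore with probability epsilon, otherwise pick the greedy action.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.q))
        return max(range(len(self.q)), key=lambda a: self.q[a])

    def learn(self, action, reward):
        # Single-state, one-step episodes: there is no next-state term.
        self.q[action] += self.alpha * (reward - self.q[action])


def run_experiment(agents, payoffs, n_episodes):
    """Let all agents act simultaneously, then give each its own reward."""
    for _ in range(n_episodes):
        joint_action = tuple(agent.act() for agent in agents)
        rewards = payoffs[joint_action]
        for agent, action, reward in zip(agents, joint_action, rewards):
            agent.learn(action, reward)
    return agents


# The agent set: one learner per agent, each with its own seeded generator.
agents = [IndependentQAgent(2, rng=random.Random(seed)) for seed in (0, 1)]
run_experiment(agents, PAYOFFS, n_episodes=2000)
```

Since defecting is the dominant action in this payoff table, one would expect both learners to drift toward it, although that is not guaranteed for a particular seed. Algorithms such as Correlated-Q differ in that each update takes the other agents' values into account instead of treating them as part of the environment.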

Refactoring and cleaning up the source code. Introducing inverse reinforcement learning to estimate other agents' reward structures.