This document outlines the batch-challenge project, a Go application designed to parse CSV data, process transactions, and generate summaries.

The application leverages concurrency to process large CSV files efficiently. It uses a modular design, with distinct components handling parsing, writing, stream relaying, and summarizing. The project is configured to run in a Docker environment, with PostgreSQL as the database backend.

This guide covers the essentials to get the Batch Processing Application up and running using Docker and Docker Compose.

- Go 1.21

- Docker

- Docker Compose

Set the following environment variables for the docker-compose file, or put them in a `.env` file:

- Database credentials: `DB_HOST`, `DB_PORT`, `DB_USER`, `DB_PASS`, `DB_NAME`

- Data paths: `DATA_PATH`, the path to the directory containing the CSV file to be processed (`data.csv` by default)

- SMTP settings: `SMTP_HOST`, `SMTP_PORT`, `SMTP_USER`, `SMTP_PASS`

Default values are provided for all of these except `TARGET_MAIL`, which is required.

`/gen/csv_gen.go` generates a random CSV file with 100,000 rows in a simple, ready-to-use format.

Execute the following script to build and start the application:

```bash
#!/bin/bash

# Build the Docker image
echo "Building Docker image..."
docker build -t demo_app:latest .

# Start the entire stack using Docker Compose
echo "Starting services with Docker Compose..."
docker-compose up -d

# Tail the application logs
echo "Tailing application logs..."
docker-compose logs -f app
```

Save this script as `start.sh`, make it executable with `chmod +x start.sh`, and run it with `./start.sh`.

This guide and script provide a streamlined process to build and run the application.

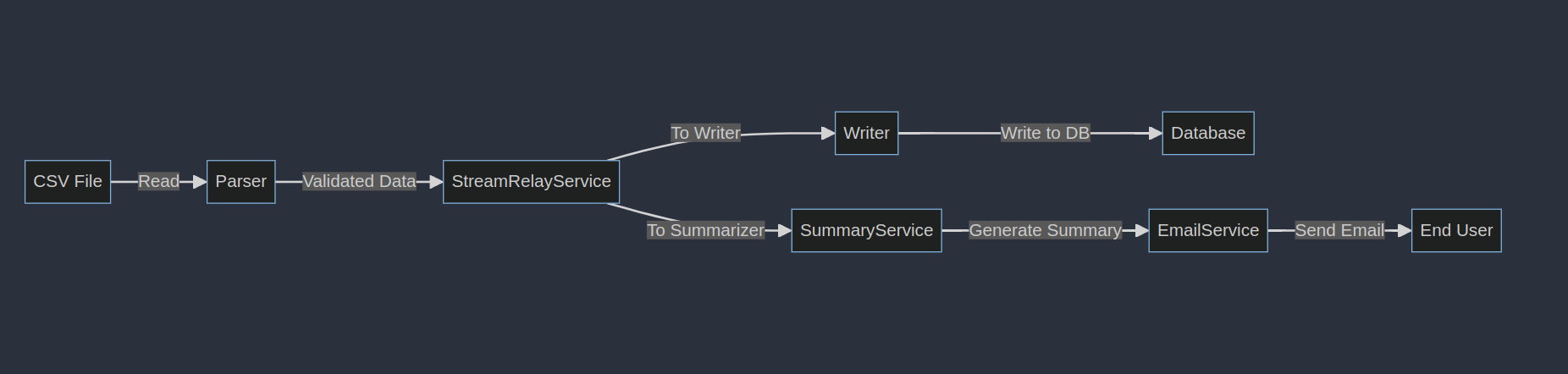

- Parser: Reads and validates CSV files according to a defined schema.

- Writer: Buffers and writes the parsed transactions to the database.

- StreamRelayService: Manages the propagation of data to the writer and summarizer.

- SummaryService: Aggregates transaction data and sends a summary report via email.

- EmailService: Configures and sends emails using provided SMTP settings.


Application: The central orchestrator that initializes all services and triggers the processing flow.

Parser: Consumes CSV files, validates and transforms the data based on a schema definition, and sends the result to the StreamRelayService.

StreamRelayService: Acts as a conduit, taking parsed data from the Parser and distributing it to both the Writer and the SummaryService through a subscription system.
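A subscription-based fan-out like this maps naturally onto Go channels. The generic `Relay` type below is a hypothetical sketch of the pattern, not the project's StreamRelayService API: each subscriber gets its own channel, and every incoming value is copied to all of them.

```go
package main

import (
	"fmt"
	"sync"
)

// Relay fans each incoming value out to every subscriber channel.
type Relay[T any] struct {
	subs []chan T
}

// Subscribe registers a consumer and returns its receive-only channel.
// All subscriptions must be made before Run is called.
func (r *Relay[T]) Subscribe(buffer int) <-chan T {
	ch := make(chan T, buffer)
	r.subs = append(r.subs, ch)
	return ch
}

// Run copies every value from in to all subscribers, then closes
// the subscriber channels once in is closed.
func (r *Relay[T]) Run(in <-chan T) {
	for v := range in {
		for _, ch := range r.subs {
			ch <- v
		}
	}
	for _, ch := range r.subs {
		close(ch)
	}
}

func main() {
	in := make(chan int)
	var relay Relay[int]
	writer := relay.Subscribe(16)
	summary := relay.Subscribe(16)

	var wg sync.WaitGroup
	wg.Add(2)
	go func() { // stand-in for the Writer
		defer wg.Done()
		n := 0
		for range writer {
			n++
		}
		fmt.Println("writer received", n)
	}()
	go func() { // stand-in for the SummaryService
		defer wg.Done()
		sum := 0
		for v := range summary {
			sum += v
		}
		fmt.Println("summary total", sum)
	}()

	go func() { // stand-in for the Parser
		for i := 1; i <= 5; i++ {
			in <- i
		}
		close(in)
	}()
	relay.Run(in)
	wg.Wait()
}
```

Because `Run` sends to each subscriber in turn, a slow consumer holds back the others; buffered subscriber channels soften this without removing the backpressure entirely.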

Writer: Buffers the data it receives from the StreamRelayService and periodically flushes the buffer to the Database.

SummaryService: Aggregates the data it receives from the StreamRelayService into a summary and uses the EmailService to send the report.

Database: Persistently stores transaction records. The Writer accesses it to insert records.
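One common way to insert a buffered batch into PostgreSQL is a single multi-row `INSERT` with `$n` placeholders. The sketch below only builds the statement and its arguments; the `transactions` table, its column names, and the `buildInsert` helper are assumptions, and the actual write would go through `database/sql` with a PostgreSQL driver.

```go
package main

import (
	"fmt"
	"strings"
	"time"
)

// Transaction is a hypothetical record type.
type Transaction struct {
	ID     int64
	Date   time.Time
	Amount float64
}

// buildInsert returns one multi-row INSERT with PostgreSQL-style $n
// placeholders plus the matching arguments. An empty batch yields "".
func buildInsert(batch []Transaction) (string, []any) {
	if len(batch) == 0 {
		return "", nil
	}
	const cols = 3
	var sb strings.Builder
	sb.WriteString("INSERT INTO transactions (id, date, amount) VALUES ")
	args := make([]any, 0, len(batch)*cols)
	for i, t := range batch {
		if i > 0 {
			sb.WriteString(", ")
		}
		n := i * cols // placeholders are numbered across the whole statement
		fmt.Fprintf(&sb, "($%d, $%d, $%d)", n+1, n+2, n+3)
		args = append(args, t.ID, t.Date, t.Amount)
	}
	return sb.String(), args
}

func main() {
	q, args := buildInsert([]Transaction{
		{1, time.Date(2023, 1, 5, 0, 0, 0, 0, time.UTC), 100},
		{2, time.Date(2023, 1, 9, 0, 0, 0, 0, time.UTC), -40},
	})
	fmt.Println(q)
	fmt.Println(len(args), "arguments")
	// With a *sql.DB opened through a PostgreSQL driver:
	// _, err := db.Exec(q, args...)
}
```

PostgreSQL's extended protocol allows at most 65,535 bind parameters per statement, so with three columns a single statement can carry at most 21,845 rows; larger buffers would need to be split.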

EmailService: Configures and sends emails. The SummaryService uses it to deliver summary reports to users.

- Initialization: The Application starts and initializes all components, setting up their interconnections.

- Parsing: The Parser reads the CSV file, then validates and transforms the data.

- Relaying: The StreamRelayService receives the parsed data and relays it to both the Writer and the SummaryService.

- Writing: The Writer buffers transactions and writes them to the Database whenever a set interval elapses or the buffer reaches a set size.

- Summarizing: Concurrently, the SummaryService aggregates the data into a summary report.

- Emailing: Once the summary is ready, the SummaryService sends it via the EmailService.
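This data flow can be sketched end to end with goroutines and channels. The model is hypothetical and heavily simplified: `runPipeline` and its two-column input are invented for illustration, each stage is an inline stand-in for the named service, and the "email" is just printed.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
	"sync"
)

// runPipeline wires parse -> relay -> (writer, summary) and returns the
// batches "written" plus the summary totals.
func runPipeline(input string, batchSize int) (batches [][]float64, count int, total float64) {
	parsed := make(chan float64)
	toWriter := make(chan float64)
	toSummary := make(chan float64)

	// Parsing: skip the header, keep rows whose amount column parses.
	go func() {
		defer close(parsed)
		for i, line := range strings.Split(strings.TrimSpace(input), "\n") {
			fields := strings.Split(line, ",")
			if i == 0 || len(fields) != 2 {
				continue
			}
			if v, err := strconv.ParseFloat(fields[1], 64); err == nil {
				parsed <- v
			}
		}
	}()

	// Relaying: every parsed value goes to both consumers.
	go func() {
		defer close(toWriter)
		defer close(toSummary)
		for v := range parsed {
			toWriter <- v
			toSummary <- v
		}
	}()

	var wg sync.WaitGroup
	wg.Add(2)

	// Writing: buffer values, record full batches, then the remainder.
	go func() {
		defer wg.Done()
		var buf []float64
		for v := range toWriter {
			buf = append(buf, v)
			if len(buf) == batchSize {
				batches = append(batches, buf)
				buf = nil
			}
		}
		if len(buf) > 0 {
			batches = append(batches, buf)
		}
	}()

	// Summarizing: aggregate concurrently with the writer.
	go func() {
		defer wg.Done()
		for v := range toSummary {
			count++
			total += v
		}
	}()

	wg.Wait() // both consumers have drained their channels
	return batches, count, total
}

func main() {
	batches, count, total := runPipeline("id,amount\n1,10\n2,-3\n3,oops\n4,7\n", 2)
	fmt.Println("written batches:", batches)
	// Emailing: the summary is complete only after the summarizer returns.
	fmt.Printf("summary email: %d transactions, total %.2f\n", count, total)
}
```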