This is the original PyTorch implementation of the paper "Learning Music Sequence Representation From Text Supervision". Special thanks to Yuan Xie (@xy980523) for building this repo.

```
python demo.py
```

- For CLIP, we provide the BPE vocabulary `bpe_simple_vocab_16e6.txt.gz` and the weights `CLIP.pt`.
- We also provide the other weights pre-trained on AudioSet: `wavelet_encoder.pt` and `MUSER.pt`.
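Before running `demo.py`, it can help to confirm that all four released files are in place. The snippet below is a minimal sketch, not part of this repo. The `weights` directory name and the helpers `missing_files` and `read_bpe_merges` are hypothetical, and `demo.py` may expect the files somewhere else. The BPE reader assumes the CLIP vocabulary format: a version header on the first line, then one merge rule per line.

```python
import gzip
from pathlib import Path

# Hypothetical layout: all four released files in one local directory.
# demo.py may expect a different location; adjust the directory accordingly.
REQUIRED_FILES = (
    "bpe_simple_vocab_16e6.txt.gz",  # CLIP BPE vocabulary (merge rules)
    "CLIP.pt",                       # CLIP weights
    "wavelet_encoder.pt",            # wavelet encoder pre-trained on AudioSet
    "MUSER.pt",                      # MUSER weights pre-trained on AudioSet
)


def missing_files(weights_dir):
    """Return the names of required files that are not present in weights_dir."""
    root = Path(weights_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


def read_bpe_merges(path):
    """Read BPE merge rules from a gzipped vocabulary file.

    Assumes the CLIP format: the first line is a version header and each
    following non-empty line holds one merge as two space-separated symbols.
    CLIP's own tokenizer keeps only a leading subset of these merges; this
    sketch returns all of them.
    """
    with gzip.open(path, "rt", encoding="utf-8") as f:
        lines = f.read().split("\n")
    return [tuple(line.split()) for line in lines[1:] if line.strip()]


if __name__ == "__main__":
    weights_dir = Path("weights")  # hypothetical directory name
    missing = missing_files(weights_dir)
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        merges = read_bpe_merges(weights_dir / "bpe_simple_vocab_16e6.txt.gz")
        print(f"All weights present; {len(merges)} BPE merges read.")
```

The check only tests for file presence. It does not validate the checkpoints' contents, which `demo.py` loads itself.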

If you find this repository useful in your research, please consider citing the following paper:

@inproceedings{chen2022learning,

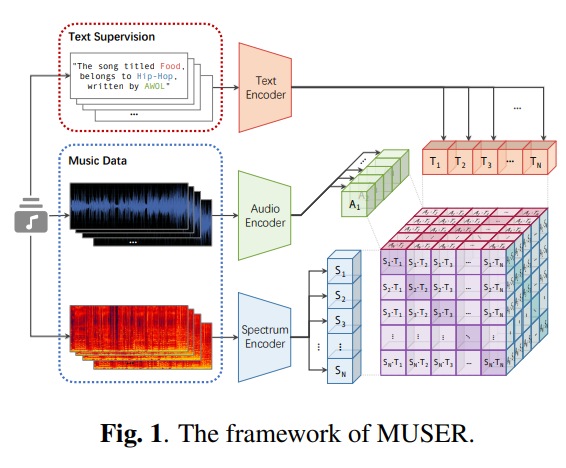

title={Learning Music Sequence Representation From Text Supervision},

author={Chen, Tianyu and Xie, Yuan and Zhang, Shuai and Huang, Shaohan and Zhou, Haoyi and Li, Jianxin},

booktitle={ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={4583--4587},

year={2022},

organization={IEEE}

}