| title | emoji | colorFrom | colorTo | sdk | sdk_version | app_file | pinned | license |
|---|---|---|---|---|---|---|---|---|
| Stable Diffusion XL 0.9 | 🔥 | yellow | gray | gradio | 3.11.0 | app.py | true | mit |

This is a Gradio demo of Stable Diffusion XL 0.9. It loads both the base and the refiner models.

This demo is forked from the Stable Diffusion v2.1 demo. Refer to the git commits to see the changes.

Update: It seems that Reddit users have released the weights publicly (see the Reddit post on the leaked weights). If downloaded as the full folder, these weights may be loaded with Option 1. However, I have not tried these weights, nor do I encourage using leaked weights.

Update: Colab is supported! You can run this demo on Colab for free, even on a T4 GPU.

Update: See a more comprehensive comparison with 1,200+ images here. Both SDXL and SD v2.1 are benchmarked on prompts from StableStudio.

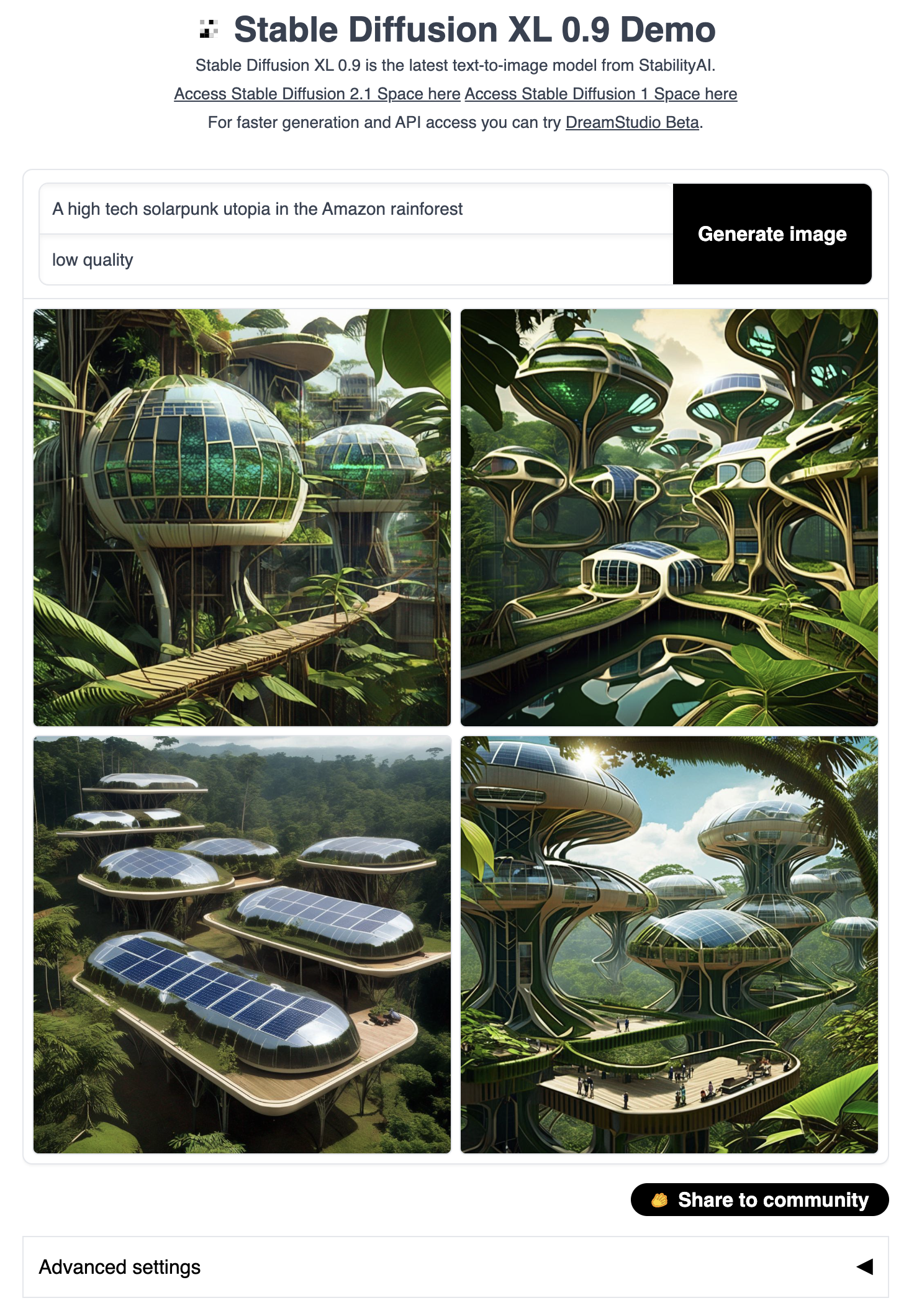

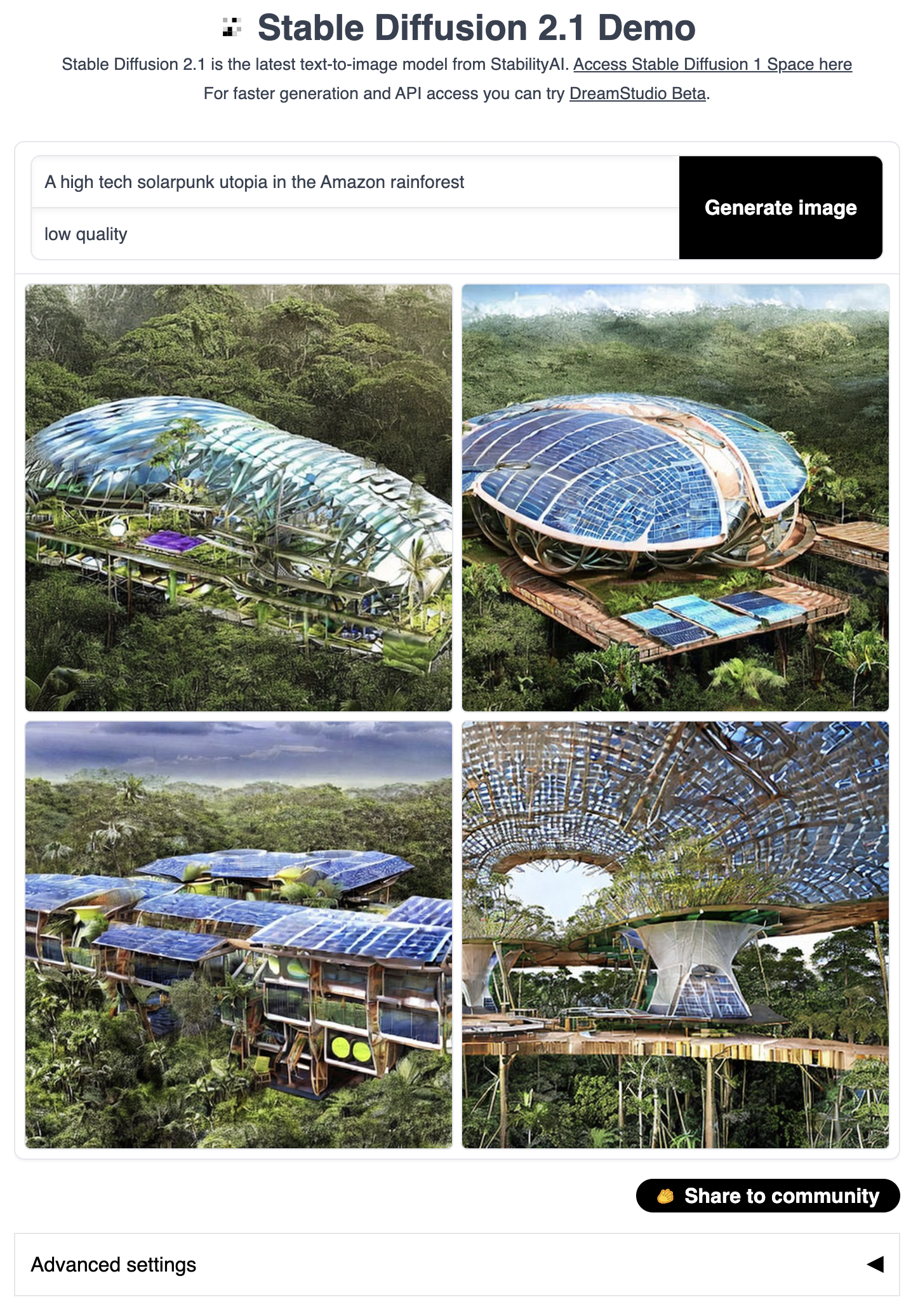

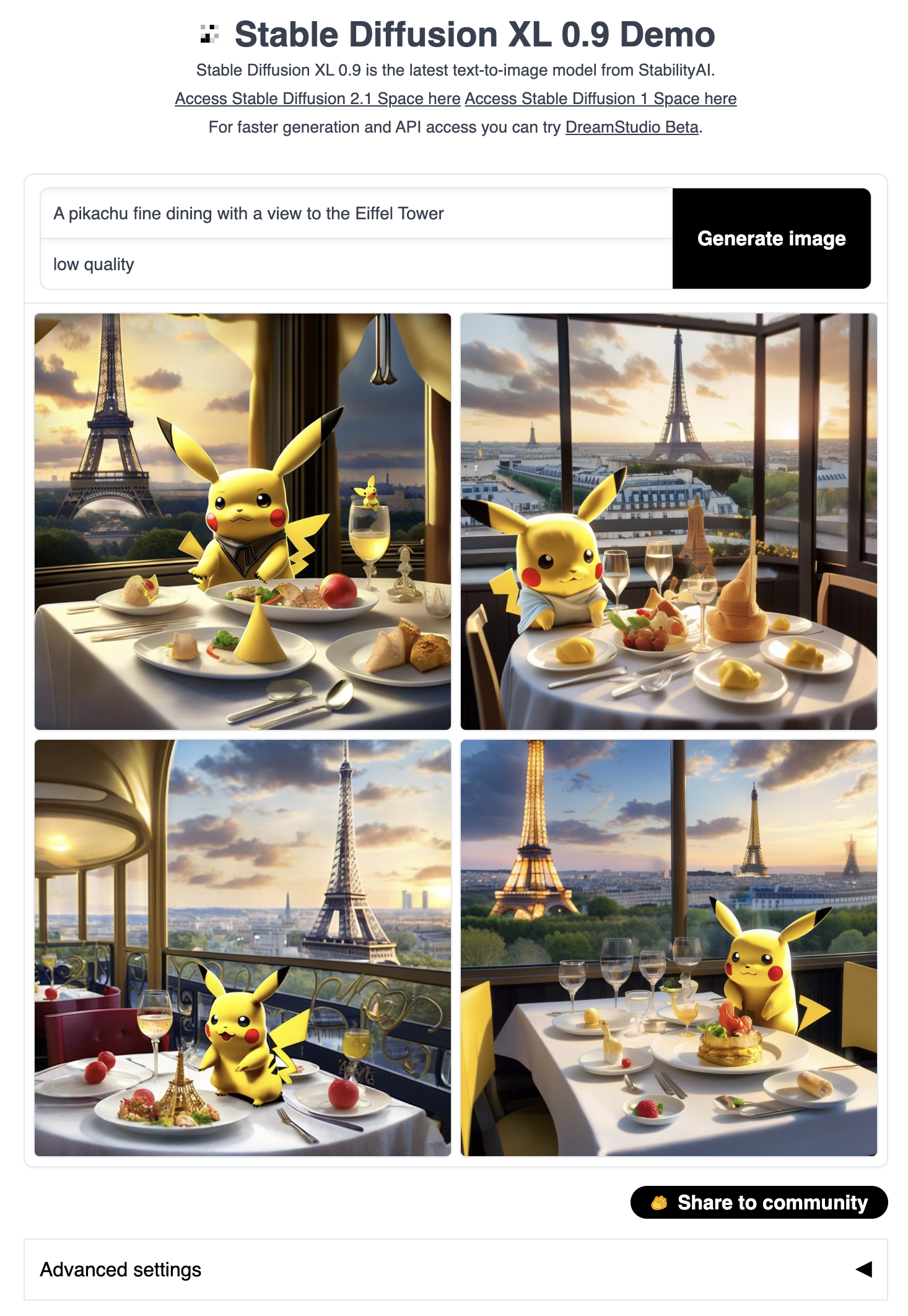

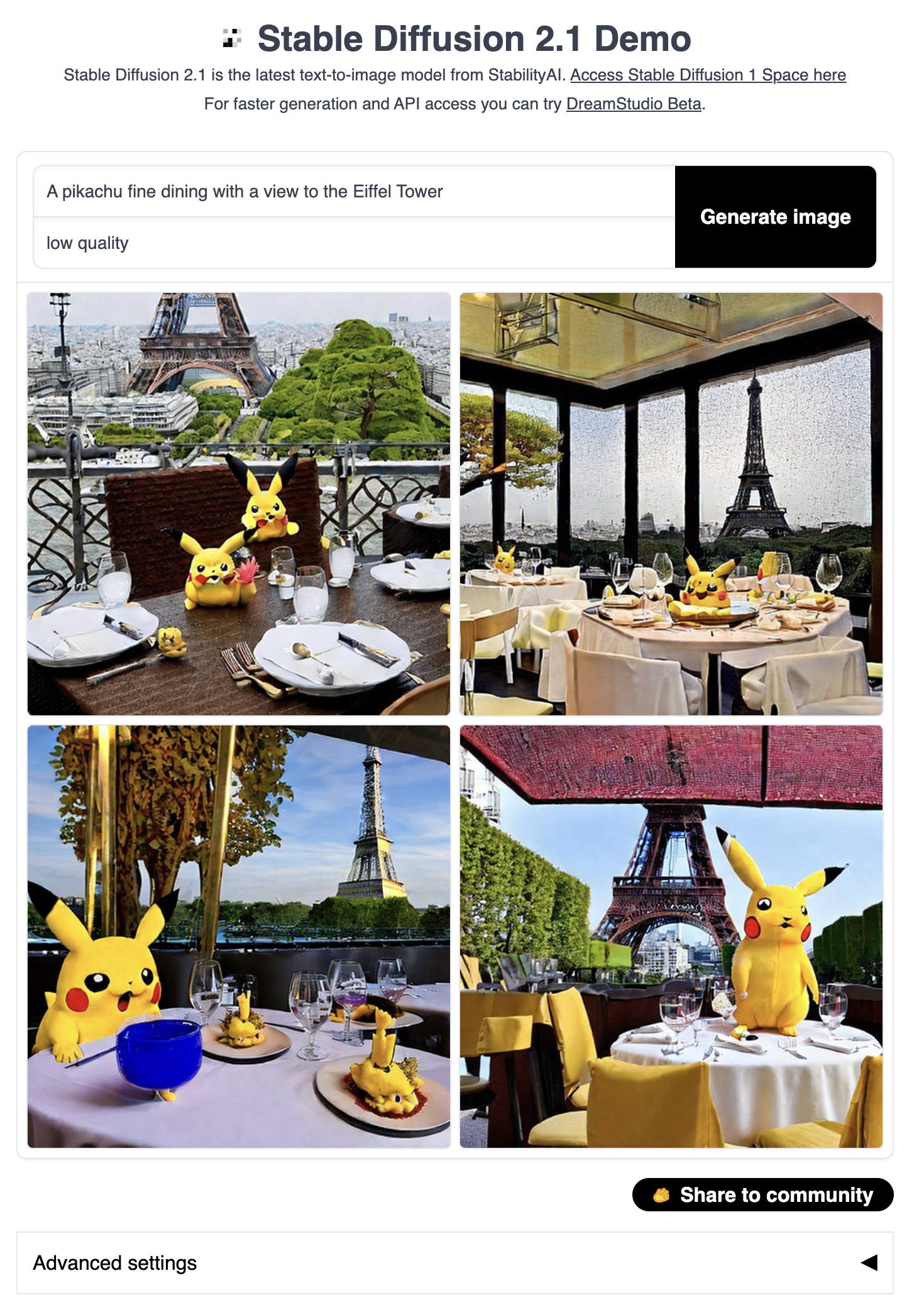

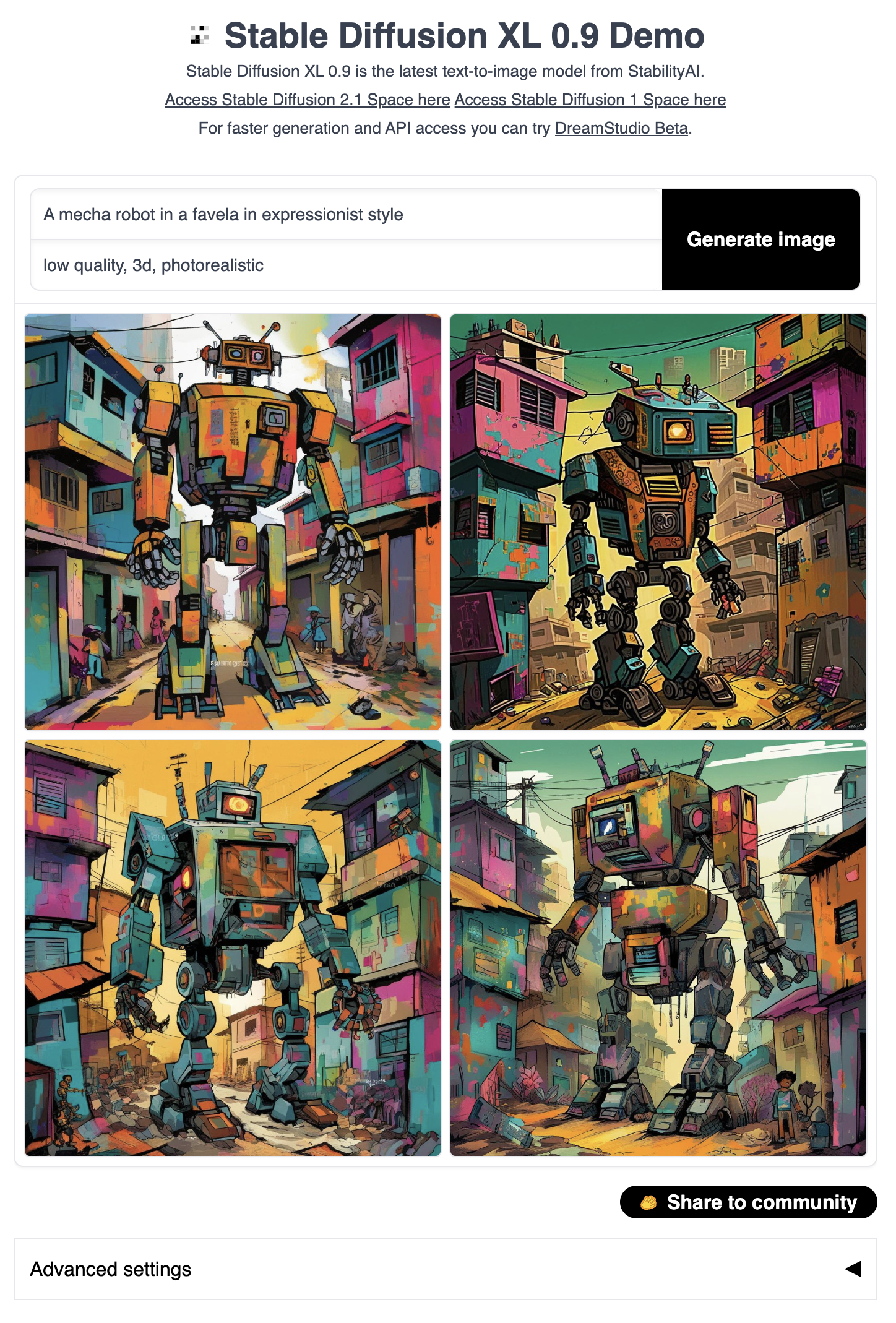

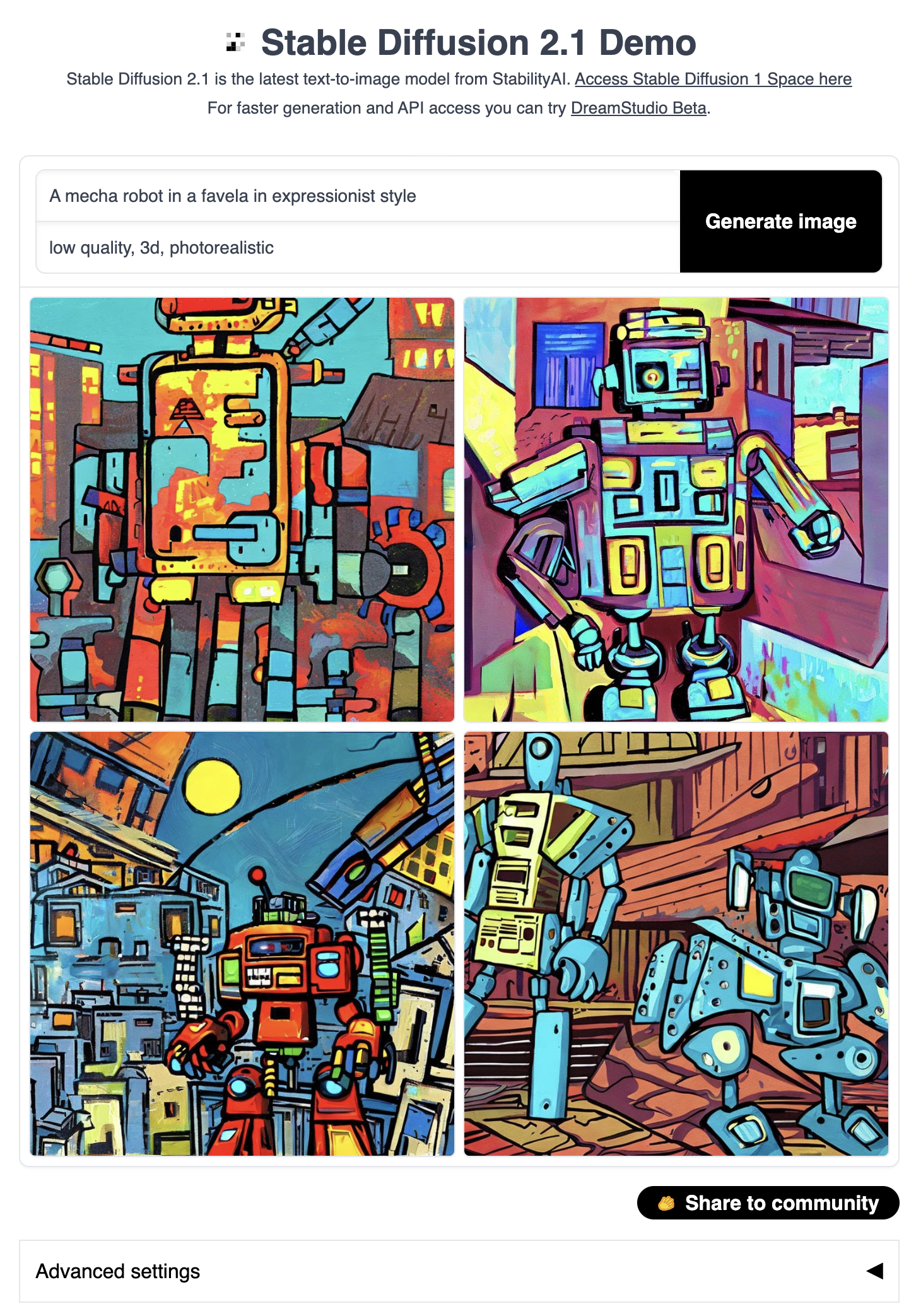

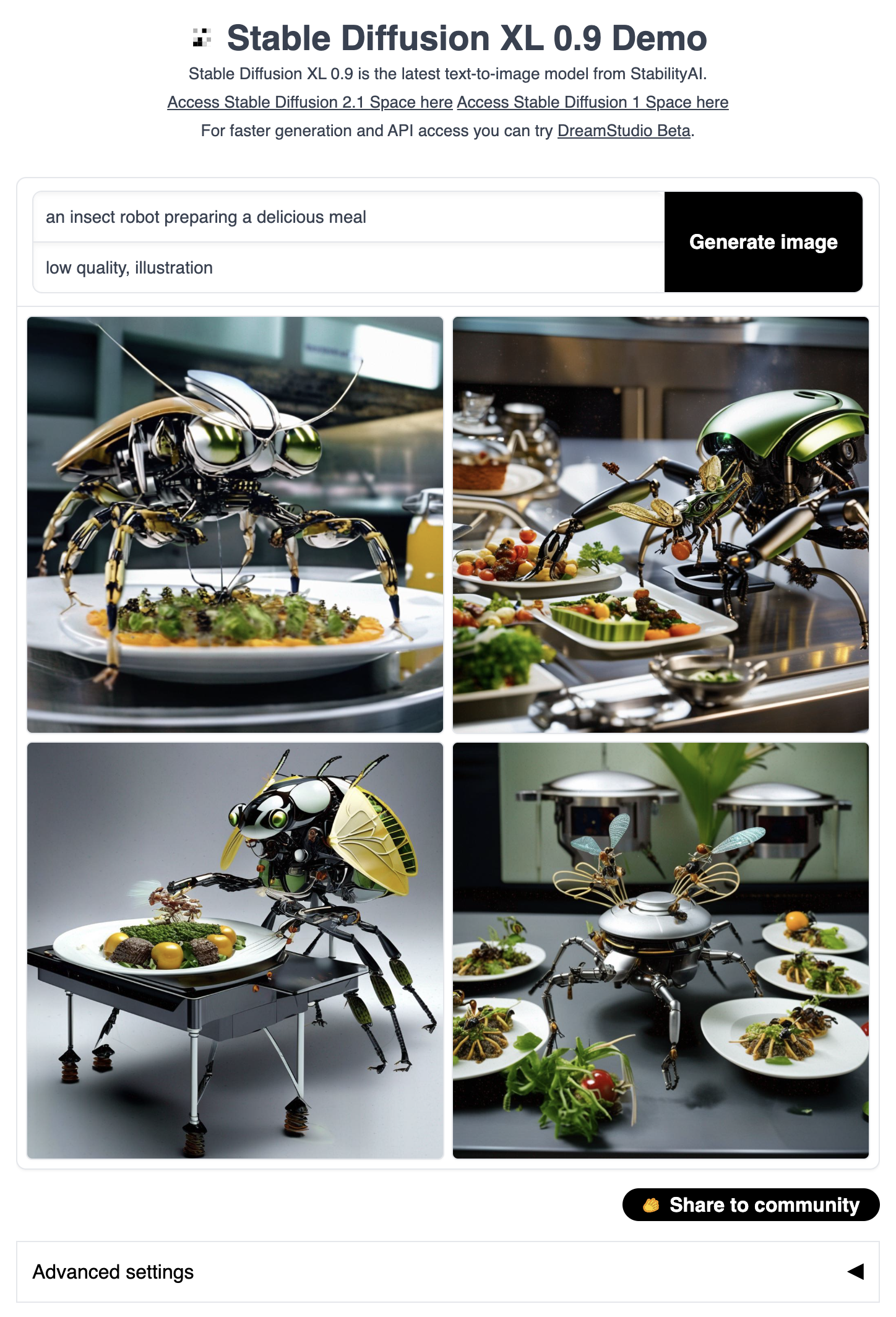

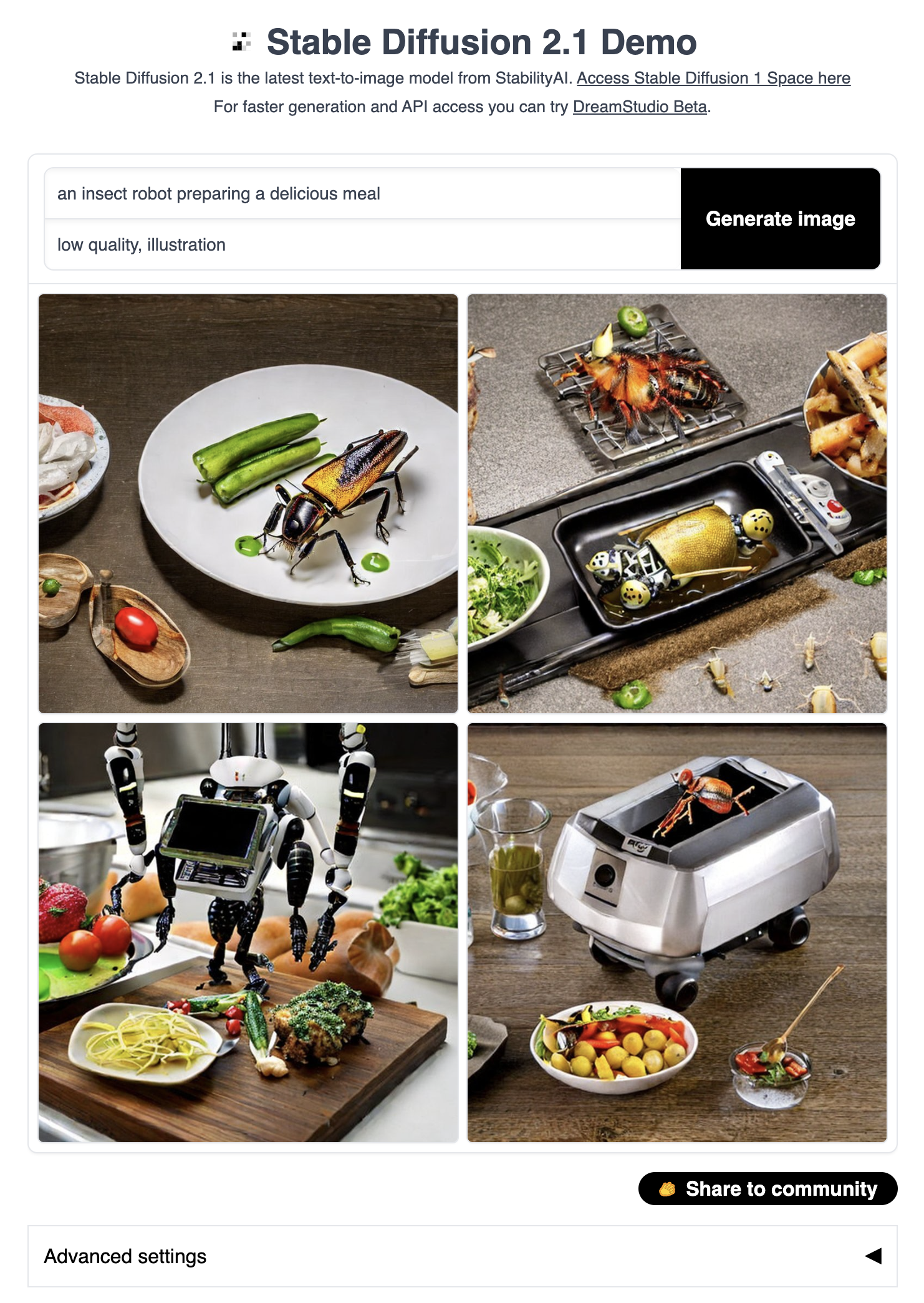

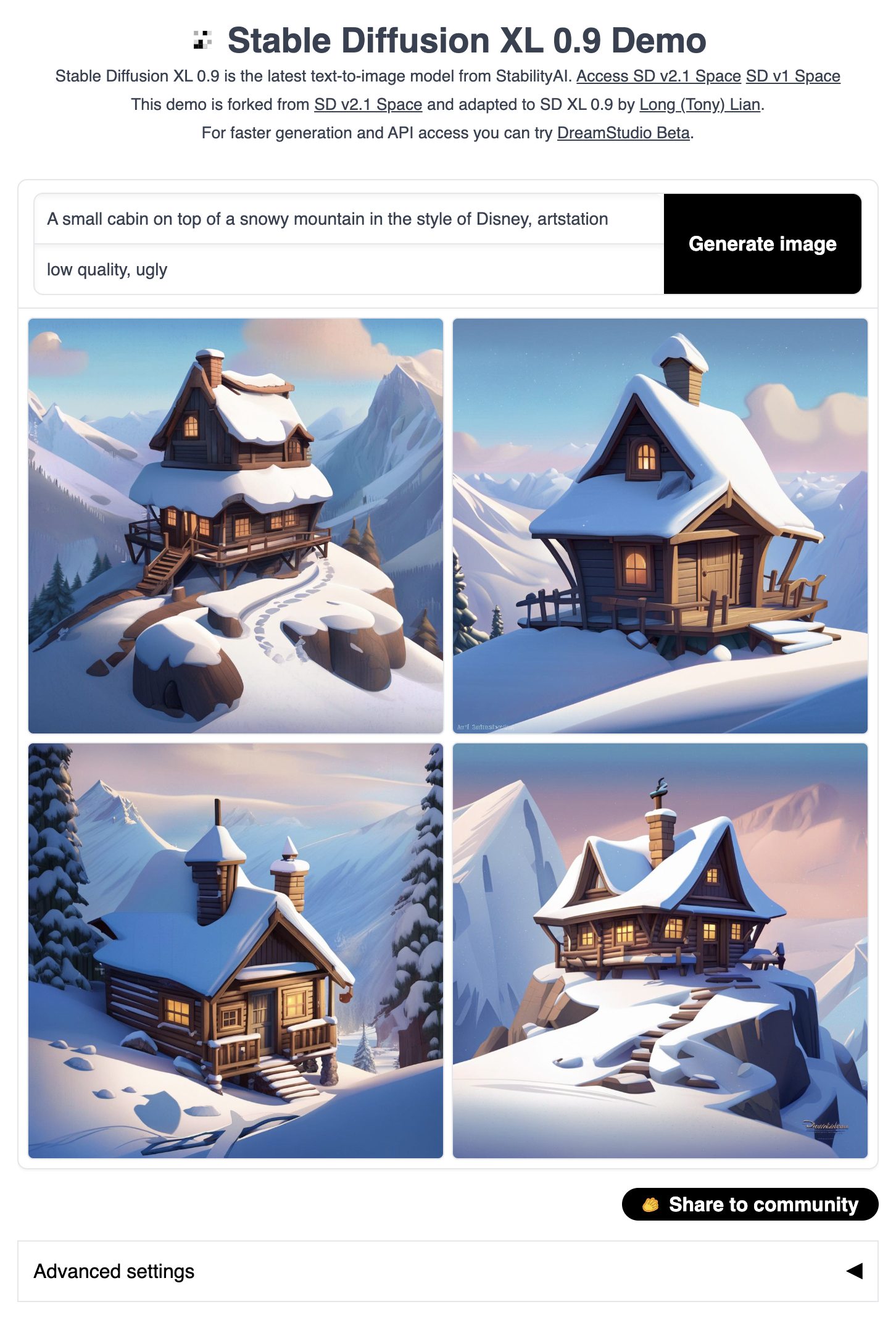

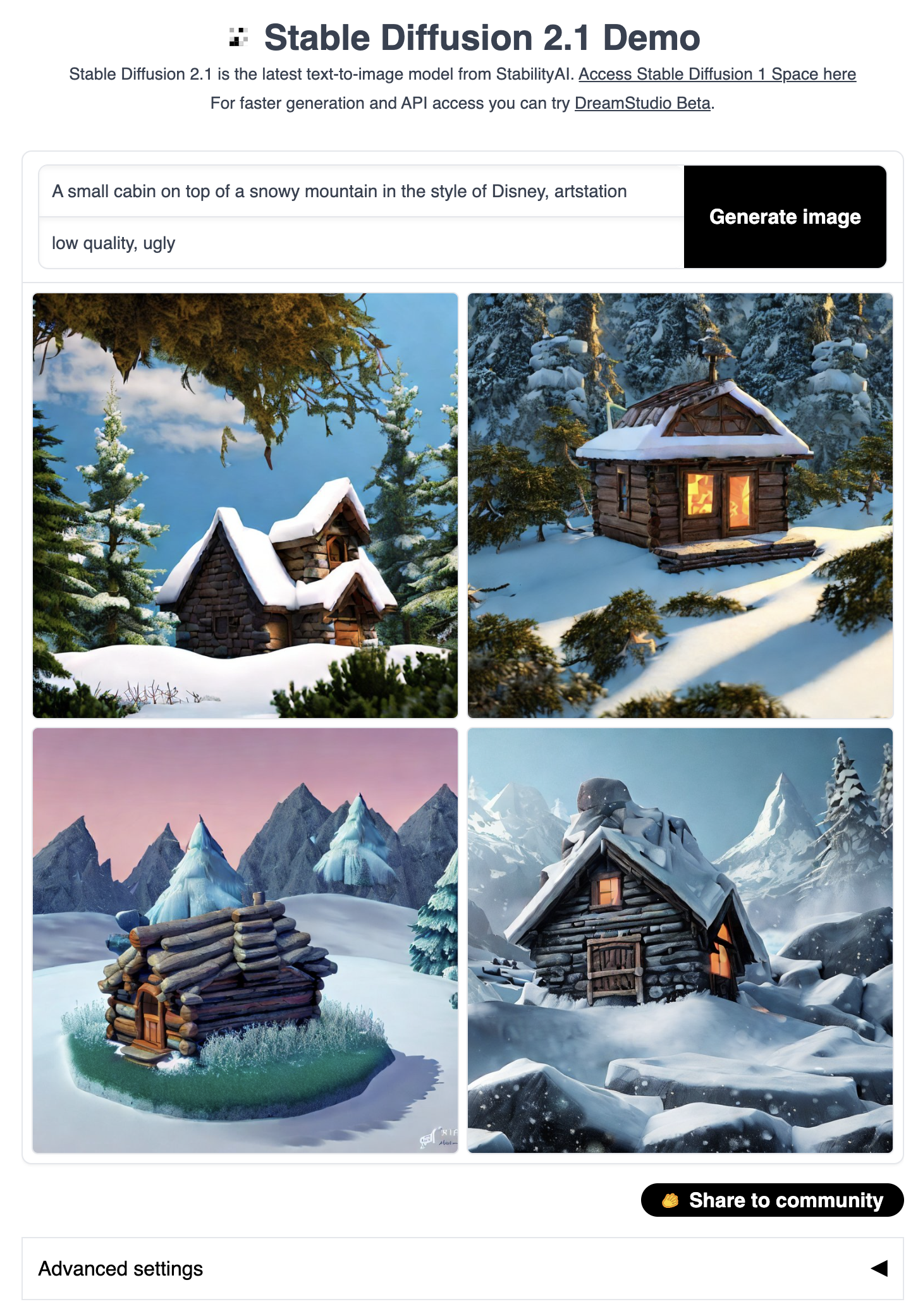

Left: SDXL 0.9. Right: SD v2.1.

Without any tuning, SDXL generates much better images than SD v2.1!

With PyTorch 2.0.1 installed, we also need to install:

```shell
pip install accelerate transformers invisible-watermark "numpy>=1.17" "PyWavelets>=1.1.1" "opencv-python>=4.1.0.25" safetensors "gradio==3.11.0"
pip install git+https://github.com/huggingface/diffusers.git@sd_xl
```

Access to the weights is free, but you need to submit a quick form to get it. Leaked weights seem to be available on Reddit, but I have not used or tested them.

There are two ways to load the weights. After getting access, you can either clone them locally or let this repo load them for you from the Hugging Face Hub.

Option 1: If you have cloned both repos (base and refiner) locally, run the following (change `/path_to_sdxl` to your local path):

```shell
PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:512 SDXL_MODEL_DIR=/path_to_sdxl python app.py
```

Option 2: If you want to load the weights from the Hugging Face Hub, set up a Hugging Face access token and run:

```shell
PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:512 ACCESS_TOKEN=YOUR_HF_ACCESS_TOKEN python app.py
```
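The choice between the two options above boils down to checking which environment variable is set. The sketch below shows one way to express that; `resolve_model_source`, `ModelSource`, the Hub repo IDs, and the assumption that both repos are cloned side by side under `SDXL_MODEL_DIR` with their original folder names are all illustrative assumptions, not necessarily what `app.py` does.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Assumed Hub repo IDs for SDXL 0.9; check the model pages you were granted access to.
BASE_REPO_ID = "stabilityai/stable-diffusion-xl-base-0.9"
REFINER_REPO_ID = "stabilityai/stable-diffusion-xl-refiner-0.9"


@dataclass
class ModelSource:
    base: str             # local folder or Hub repo ID for the base model
    refiner: str          # local folder or Hub repo ID for the refiner model
    token: Optional[str]  # HF access token, only needed for Hub downloads


def resolve_model_source(env: Mapping[str, str] = os.environ) -> ModelSource:
    """Hypothetical helper: pick local folders (Option 1) or Hub repos (Option 2)."""
    model_dir = env.get("SDXL_MODEL_DIR")
    if model_dir:
        # Option 1: assumes both repos were cloned under SDXL_MODEL_DIR
        # with their original folder names.
        return ModelSource(
            base=os.path.join(model_dir, "stable-diffusion-xl-base-0.9"),
            refiner=os.path.join(model_dir, "stable-diffusion-xl-refiner-0.9"),
            token=None,
        )
    token = env.get("ACCESS_TOKEN")
    if not token:
        raise RuntimeError("Set SDXL_MODEL_DIR (local weights) or ACCESS_TOKEN (HF Hub).")
    # Option 2: download from the Hub, authenticating with the access token.
    return ModelSource(base=BASE_REPO_ID, refiner=REFINER_REPO_ID, token=token)
```

The resolved paths or repo IDs would then be passed to diffusers' `DiffusionPipeline.from_pretrained` (together with the token when loading from the Hub). Local folders take precedence here simply because they need no network access.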

Turning on `torch.compile` makes overall inference faster. However, it adds overhead to the first run, because you have to wait for compilation before the first generation finishes.
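A minimal sketch of what turning on `torch.compile` can look like, assuming the pipeline exposes its denoising network as `pipe.unet` (as diffusers SDXL pipelines do). The helper name `maybe_compile_unet` and the `mode`/`fullgraph` settings are illustrative assumptions; `app.py` may wire this up differently.

```python
from typing import Any


def maybe_compile_unet(pipe: Any, enabled: bool) -> Any:
    """Hypothetical helper: optionally wrap the pipeline's UNet with torch.compile.

    torch.compile is lazy: the actual compilation happens on the first forward
    pass, which is why the first generation is slow and later ones are faster.
    """
    if not enabled:
        return pipe
    import torch  # imported lazily so the helper also works where torch is absent and compile is off

    # Settings commonly used in diffusers examples; treat them as a starting point.
    pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)
    return pipe
```

Since the cost is paid once per process, compiling is most worthwhile for a long-running demo server rather than a one-off generation.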

To save GPU memory:

- Turn on `pipe.enable_model_cpu_offload()` and turn off `pipe.to("cuda")` in `app.py`.
- Turn off the refiner by setting `enable_refiner` to `False`.
- See more ways to save memory and make things faster.
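The first tip above is an either/or choice: offloading manages device placement itself, so it replaces the `pipe.to("cuda")` call rather than adding to it. A minimal sketch, where `place_pipeline` and its `cpu_offload` flag are hypothetical names and `pipe` is assumed to be a diffusers pipeline:

```python
from typing import Any


def place_pipeline(pipe: Any, cpu_offload: bool) -> Any:
    """Hypothetical helper: keep the whole pipeline on the GPU, or use model
    CPU offloading, which keeps sub-models on the CPU and moves each one to
    the GPU only when it is needed."""
    if cpu_offload:
        # Do NOT also call pipe.to("cuda"); offloading handles placement itself.
        pipe.enable_model_cpu_offload()
    else:
        # Fastest option when the GPU has enough memory for all sub-models.
        pipe.to("cuda")
    return pipe
```

Offloading trades some speed (weights move between CPU and GPU on every generation) for a lower peak GPU memory footprint.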

The following environment variables are supported:

- `SDXL_MODEL_DIR` and `ACCESS_TOKEN`: load SDXL locally or from the HF Hub.
- `ENABLE_REFINER=true/false`: turn the refiner on or off (the refiner refines the generation).
- `OUTPUT_IMAGES_BEFORE_REFINER=true/false`: useful if the refiner is enabled; outputs images both before and after the refiner stage.
- `SHARE=true/false`: creates a public link (useful for sharing and on Colab).
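The `true/false` switches above can be read with a small parser. This is a sketch under assumptions: `env_flag`, `DemoOptions`, `load_options`, and the default values are hypothetical and may not match `app.py`.

```python
import os
from dataclasses import dataclass
from typing import Mapping


def env_flag(env: Mapping[str, str], name: str, default: bool) -> bool:
    """Read a true/false variable; anything other than 'true' (any case) is False."""
    value = env.get(name)
    if value is None:
        return default
    return value.strip().lower() == "true"


@dataclass
class DemoOptions:
    enable_refiner: bool
    output_images_before_refiner: bool
    share: bool


def load_options(env: Mapping[str, str] = os.environ) -> DemoOptions:
    # Defaults are assumptions made for this sketch.
    enable_refiner = env_flag(env, "ENABLE_REFINER", True)
    return DemoOptions(
        enable_refiner=enable_refiner,
        # Only meaningful when the refiner runs, so it is ignored otherwise.
        output_images_before_refiner=enable_refiner
        and env_flag(env, "OUTPUT_IMAGES_BEFORE_REFINER", False),
        share=env_flag(env, "SHARE", False),
    )
```

For example, `SHARE=true` would yield `share=True`, which could then be forwarded to Gradio via `demo.launch(share=opts.share)`.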