Technica previously implemented an anomaly detection solution for Federated Learning on low-SWaP (size, weight, and power) devices that allows models to continuously learn and train locally, then share new knowledge with other devices. However, we continually strive to find solutions that use fewer resources and provide more functionality. We have replaced our previous Federated Learning solution with IPLS to decentralize the processes of model training and convergence, weight aggregation, and weight redistribution. The result is improved data privacy and performance, and the elimination of single points of failure. This repository contains a new Anomaly Detection solution utilizing IPLS.

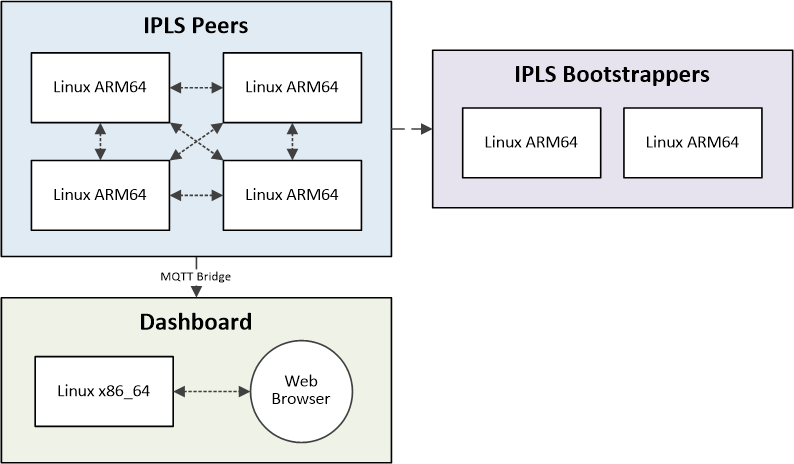

Below is the architecture for this solution:

- IPLS Bootstrappers:
  - You need at least one device, but can use as many as you want
  - Having more than one will eliminate a single point of failure
  - Containers are Docker or Singularity
  - Tested on Jetson Nano (Linux ARM64)

- IPLS Peers:
  - Four devices are recommended, but you should have at least two
  - Containers are Docker or Singularity
  - Tested on Raspberry Pi 4 (Linux ARM64)

- Data Generators:
  - One for each IPLS Peer
  - Setup is simpler if data generators are on the same device as their Peers, but this is not required
  - Containers are Docker
  - Tested on Raspberry Pi 4 (Linux ARM64)

- Dashboard:
  - You need one device separate from the Peers
  - Container is Docker
  - Tested on Linux x86_64

The following steps will walk through building each of the containers used for IPLS Anomaly Detection.

On each of the devices, pull down the repository:

git clone https://github.com/Technica-Corporation/ipls_anomaly_detection.git

cd ipls_anomaly_detection

This step compiles the IPLS Java API that was edited by Technica. The original GitHub repository is IPLS-Java-API by ChristodoulosPappas. You may want to read the presentation PDF included in that repository to get a better understanding of how IPLS itself works.

The repository is included here as we have made a few changes:

- Everything in the 'default' package was moved into a new package called 'originalDefault'
- AnomalyDetectionDriver.java was added to the 'default' package
- An 'anomalydetection' package was added which includes all our code for IPLS Anomaly Detection
- In the pom.xml the version for deeplearning4j and nd4j was upgraded to 1.0.0-M1.1

NOTE: The previous version pulled in a specific version of OpenBLAS that has a bug on Arm64 platforms causing calculations to sporadically result in NaN.

This step must be completed on all devices that will be running the Bootstrapper or Peer containers.

From ipls_anomaly_detection, navigate to compile_container:

cd compile_container/

sh build_container.sh

sh run_docker.sh -l

NOTE: run_docker.sh -l will copy the .jar file and a directory of libs/ to ipls_anomaly_detection/resources.

Running it without the -l flag skips copying the libs. The libs are required, but copying them can take a while on small devices, so you may want to run it with the flag once to generate the libs and then omit the flag for subsequent builds of the IPLS jar.

Recommendation: Once compiled, look into ipls_anomaly_detection/resources/libs. The Maven build will include dependencies for multiple platforms; manually delete the ones you don't need to reduce the size of the containers.
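The manual cleanup recommended above can be previewed with a small shell helper. This is only a sketch: it assumes the platform-specific jars end in a classifier of the form -<os>-<arch>.jar (for example -linux-arm64.jar), which this repository does not confirm, and the function name list_foreign_platform_jars is hypothetical. It only lists candidates, so review the output before deleting anything.

```shell
# Print jars in directory $1 whose file name ends in a platform classifier
# other than $2 (for example "linux-arm64").
# Assumption: native jars are named "<artifact>-<version>-<os>-<arch>.jar".
list_foreign_platform_jars() {
    libs_dir=$1
    keep=$2
    for jar in "$libs_dir"/*.jar; do
        [ -e "$jar" ] || continue          # directory had no jars at all
        name=${jar##*/}                    # strip the directory part
        case "$name" in
            *-"$keep".jar)
                ;;                         # platform we want to keep
            *-linux-*.jar|*-windows-*.jar|*-macosx-*.jar|*-android-*.jar|*-ios-*.jar)
                printf '%s\n' "$jar"       # tagged for some other platform
                ;;
        esac
    done
}
```

Run from ipls_anomaly_detection, a call such as list_foreign_platform_jars resources/libs linux-arm64 would print the jars tagged for other platforms. Jars without a platform tag are never listed.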

This step builds the base container for the IPLS Bootstrapper and IPLS Peer containers. It is built on openjdk:8u302-jre-slim-buster and contains go and go-IPFS along with a few other required system libraries.

This step must be completed on all devices that will be running the Bootstrapper or Peer containers.

From ipls_anomaly_detection, navigate to base_container:

cd base_container/

sh build_container.sh

This step builds the IPLS Bootstrapper Container and must be run on all Bootstrapper Devices.

NOTE: You only need to build this on the Bootstrapper Devices; it does not need to be built on the Peer Devices.

There is only one difference between the Bootstrapper and Peer containers, but it is a significant one. The Bootstrapper Container initializes IPFS as part of the Docker build, so that the Bootstrapper's IPFS Peer ID remains constant for the Peer configurations. The Peers, on the other hand, initialize IPFS when their containers are run, getting a new IPFS Peer ID each time.

From ipls_anomaly_detection, navigate to bootstrapper_container:

cd bootstrapper_container/

sh build_container.sh

After the container is built, get the container's IPFS Peer ID:

sh get_peerid.sh

This will result in console output that looks something like this:

----------

BOOTSTRAPPER_PEERID:

12D3KooWHtpV9iqWJQrXCr95Foe5tpgiVih5YFXNeyVPUknVvAw8

----------

BOOTSTRAPPER_ADDRESS:

/ip4/192.168.56.55/tcp/4001/ipfs/12D3KooWHtpV9iqWJQrXCr95Foe5tpgiVih5YFXNeyVPUknVvAw8

----------

NOTE: The IDs above are just examples; it is very unlikely that yours will be the same.

Copy the BOOTSTRAPPER_PEERID and BOOTSTRAPPER_ADDRESS; you will need these to configure the Peers.
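In the example output above, the BOOTSTRAPPER_ADDRESS ends with the BOOTSTRAPPER_PEERID. Assuming your output follows the same layout, a small check like the following can catch copy-and-paste mistakes before the values go into the Peer configuration. The function names are hypothetical.

```shell
# Print the last path component of a multiaddress, which in the sample
# output above is the IPFS Peer ID.
peer_id_from_address() {
    printf '%s\n' "${1##*/}"
}

# Succeed only if the address ends with the given Peer ID.
address_matches_peer_id() {
    [ "$(peer_id_from_address "$1")" = "$2" ]
}

# Example values copied from the sample output above.
address=/ip4/192.168.56.55/tcp/4001/ipfs/12D3KooWHtpV9iqWJQrXCr95Foe5tpgiVih5YFXNeyVPUknVvAw8
peer_id=12D3KooWHtpV9iqWJQrXCr95Foe5tpgiVih5YFXNeyVPUknVvAw8

if address_matches_peer_id "$address" "$peer_id"; then
    echo "address and Peer ID agree"
else
    echo "address and Peer ID differ"
fi
```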

NOTE: The configuration file at ipls_anomaly_detection/bootstrapper_container/resources/adConfig.json is used when the bootstrapper container is run and should not need to be changed.

This is an optional step that copies the Docker container and converts it to Singularity; it requires the Docker container step above to be complete before proceeding.

From ipls_anomaly_detection, navigate to bootstrapper_container/singularity:

cd bootstrapper_container/singularity

sh convert_to_singularity.sh

NOTE: This will take a long time. The conversion saves the Docker container to a tar, converts it to a .sif file, and then uses that as a base to build the final Singularity container.

This puts bootstrapper.sif into the directory ipls_anomaly_detection/bootstrapper_container/singularity.

This container will have the same Peer ID as the container built in the Docker step; use the same ID when configuring Peers. This container will also use the adConfig.json from the Docker Version step.

This step builds the IPLS Peer Container and must be run on all Peer Devices.

NOTE: You only need to build this on the Peer Devices; it does not need to be built on the Bootstrapper Devices.

From ipls_anomaly_detection, navigate to peer_container:

cd peer_container/

sh build_container.sh

After the container is built, you will have to adjust the configurations.

From ipls_anomaly_detection, navigate to peer_container/resources:

cd peer_container/resources

Recall the values returned by get_peerid.sh on the Bootstrapper Devices and use them here for ${BOOTSTRAPPER_ADDRESS} and ${BOOTSTRAPPER_PEERID}.

These are lists, so you should add a value for each bootstrapper node you are using.

Select a ${NODE_NUMBER} for each Peer device; this number will be used by the Dashboard to identify each Peer. The Dashboard is configured to listen for 1, 2, 3 and 4.
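The placeholder values described above can also be filled in with a script rather than by hand. The sketch below assumes a single bootstrapper and a template file that still contains the literal placeholders ${NODE_NUMBER}, ${BOOTSTRAPPER_ADDRESS} and ${BOOTSTRAPPER_PEERID}. The function name render_peer_config and the template file name in the usage comment are hypothetical. With more than one bootstrapper, the extra list entries still need to be added manually.

```shell
# Write a copy of template $1 to standard output with the placeholders
# replaced: $2 = node number, $3 = bootstrapper address, $4 = bootstrapper Peer ID.
# "|" is used as the sed delimiter because multiaddresses contain "/".
render_peer_config() {
    sed -e 's|\${NODE_NUMBER}|'"$2"'|g' \
        -e 's|\${BOOTSTRAPPER_ADDRESS}|'"$3"'|g' \
        -e 's|\${BOOTSTRAPPER_PEERID}|'"$4"'|g' \
        "$1"
}

# Hypothetical usage, keeping an untouched copy of the file as a template:
#   cp adConfig.json adConfig.template.json
#   render_peer_config adConfig.template.json 1 "$address" "$peer_id" > adConfig.json
```

Writing to standard output rather than editing in place keeps the template reusable for the other Peer Devices, each with its own node number.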

Edit the file 'adConfig.json':

{

"modelPath" : "/workspace/ad_ipls_params",

"scalerPath" : "/workspace/scaler",

"mqttProtocol" : "tcp://",

"mqttBrokerip" : "0.0.0.0",

"mqttPort" : "7883",

"mqttSubTopic" : "vehicle/${NODE_NUMBER}",

"mqttPubTopic" : "v${NODE_NUMBER}/anomaly",

"ipfsAddress" : "/ip4/127.0.0.1/tcp/5001",

"ipfsBootstrapperAddresses": [

"${BOOTSTRAPPER_ADDRESS}"

],

"ipfsBootstrapperIds" : [

"${BOOTSTRAPPER_PEERID}"

],

"iplsIsBootstrapper" : "false",

"peerDataisSynchronous" : "false",

"epochs" : "3",

"learningRate" : "0.001",

"iplsModelPartitions" : "8",

"iplsMinPartitions" : "2",

"iplsMinPeers" : "1",

"updateFrequency" : 32,

"stabilityCount" : 5

}

This is an optional step that copies the Docker container and converts it to Singularity; it requires the Docker container step above to be complete before proceeding.

From ipls_anomaly_detection, navigate to peer_container/singularity:

cd peer_container/singularity

sh convert_to_singularity.sh

NOTE: This will take a long time. The conversion saves the Docker container to a tar, converts it to a .sif file, and then uses that as a base to build the final Singularity container.

This puts the resulting .sif file into the directory ipls_anomaly_detection/peer_container/singularity.

This container will also use the adConfig.json from the Docker Version step.

This step builds the Dashboard container; this build is only for Docker on Linux x86_64.

The Dashboard listens for error scores that the Peer containers publish and displays them. The Dashboard itself does not interact with the Peer containers; instead, Peer containers are configured to bridge to the Dashboard's MQTT Broker.

This step should be completed on the device designated for the Dashboard.

From ipls_anomaly_detection, navigate to frontend:

cd frontend/

sh build_container.sh

NOTE: If you need to adjust anything for MQTT, the configs are at frontend/resources/mosquitto.conf and frontend/config.js. This should only be necessary if there are port conflicts.

This step builds a container that will continuously publish either "normal" or "anomaly" data to the IPLS Peer Containers. It also sets up an MQTT container to act as a broker for each IPLS Peer. You need to build this on each of the Peer Devices. This build is for Docker on Linux ARM64 only.

From ipls_anomaly_detection, navigate to data_generator:

cd data_generator/

sh build_container.sh

From ipls_anomaly_detection, navigate to data_generator/resources:

cd data_generator/resources

Edit "mosquitto.conf":

####### MQTT Insecure

port 7883

bind_address 0.0.0.0

allow_anonymous true

#######

max_queued_messages 20000

connection cloud-bridge_0

address ${DASHBOARD_IP}:1883

topic # out 1 "" ""

Change ${DASHBOARD_IP} to the IP of the Dashboard.

Edit "generators.conf":

#Local MQTT broker configuration

[mqtt]

host=0.0.0.0

port=7883

pub_topic=vehicle/${NODE_NUMBER}

[service]

publish_interval=.25

total_runtime=0

normal_file=/workspace/data/${NORMAL_DATA}

anomaly_file=/workspace/data/${ANOMALY_DATA}

Change ${NODE_NUMBER} to the one used in the previous step on each Peer Device.

Change ${NORMAL_DATA} and ${ANOMALY_DATA} to the file names in ipls_anomaly_detection/resources/data.

NOTE: There are four files each for "normal" and "anomaly" data; you may use any data on any device so long as you use normal for normal and anomaly for anomaly.

With four of each type, each Peer device can use different normal/anomaly files from each other.
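Each data generator publishes on vehicle/${NODE_NUMBER}, and the Peer's adConfig.json subscribes to the same topic, so the two files must agree on the node number. A quick consistency check might look like the following. It is a sketch that assumes the file layouts shown above, and the function names are hypothetical.

```shell
# Print the pub_topic value from a generators.conf file.
generator_topic() {
    sed -n 's/^pub_topic=//p' "$1"
}

# Print the mqttSubTopic value from an adConfig.json file.
peer_sub_topic() {
    sed -n 's/.*"mqttSubTopic"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Succeed only if both files name the same, non-empty topic.
topics_match() {
    gen=$(generator_topic "$1")
    peer=$(peer_sub_topic "$2")
    [ -n "$gen" ] && [ "$gen" = "$peer" ]
}

# Usage, from ipls_anomaly_detection on a Peer Device:
#   topics_match data_generator/resources/generators.conf \
#                peer_container/resources/adConfig.json || echo "topic mismatch"
```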

The following steps will walk through running the IPLS Anomaly Detection Solution.

- From ipls_anomaly_detection, navigate to frontend on the Dashboard Device:

sh run_docker.sh

NOTE: This will also start an MQTT container.

If you need to restart MQTT:

docker stop ipls_mqtt

docker stop ad_gui

and then start the Dashboard again:

sh run_docker.sh

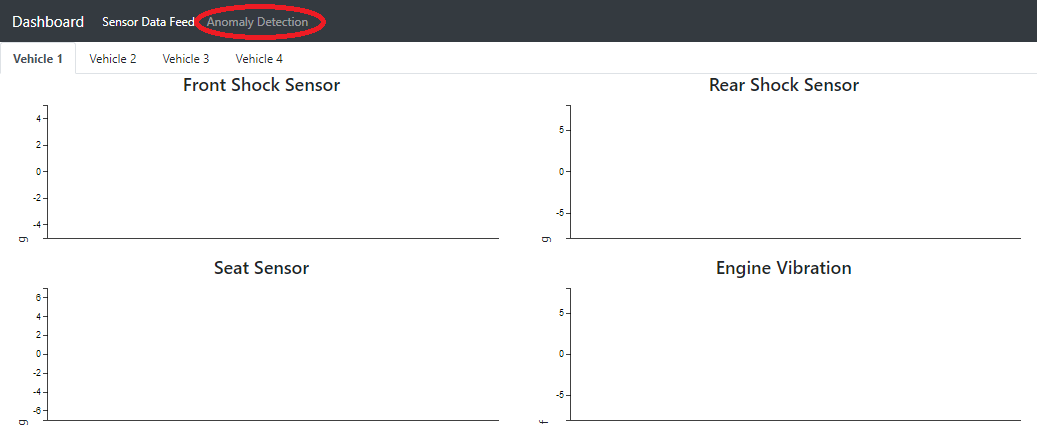

Open a browser and navigate to

http://${DASHBOARD_IP}:5601/

At the top of the page, click the tab titled "Anomaly Detection".

- Start the Bootstrapper Containers:

  - Docker Version:

From ipls_anomaly_detection, navigate to bootstrapper_container on the Bootstrapper Devices

sh run_docker.sh

NOTE: To detach without killing the container: CTRL+p then CTRL+q

To view the running container logs:

docker container logs -f ipls_test_peer

  - Singularity Version:

From ipls_anomaly_detection, navigate to bootstrapper_container/singularity on the Bootstrapper Devices

sh run_singularity.sh

NOTE: You may also run the container in the background as an instance via:

sh start_instance.sh

and

sh stop_instance.sh

NOTE: The terminal for this container should read 'Daemon is ready' before it runs the Java code, and then several lines of 'Updater Started...' and 'java.util.concurrent.ForkJoinPool'

This will signal that the Bootstrapper is properly running and waiting for Peers to join

- From ipls_anomaly_detection, navigate to data_generator on the Peer Devices:

sh mqtt.sh

NOTE:

Running the script when an MQTT container is not running will start the container.

Running the script when an MQTT container is running will stop the container.
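The start/stop behaviour described in the NOTE can be pictured as a simple toggle. The following is not the contents of mqtt.sh, only a sketch of the same idea. The function name, the DOCKER override variable, and the container name and image in the usage comment are all assumptions.

```shell
# Stop the named container if it is running; otherwise run the start
# command given after the name. DOCKER can be overridden, for example
# to dry-run the logic without a Docker daemon.
toggle_container() {
    name=$1
    shift
    docker_cmd=${DOCKER:-docker}
    # "ps -q -f name=..." prints the IDs of running containers whose name
    # matches; empty output means no such container is running.
    if [ -n "$("$docker_cmd" ps -q -f "name=$name")" ]; then
        "$docker_cmd" stop "$name"
    else
        "$@"
    fi
}

# Hypothetical usage:
#   toggle_container my_mqtt docker run -d --rm --name my_mqtt eclipse-mosquitto
```

Note that Docker's name filter matches substrings, so a real script would want a container name that is not a prefix of another one.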

- Start the Peer Containers:

  - Docker Version:

From ipls_anomaly_detection, navigate to peer_container on the Peer Devices

sh run_docker.sh

NOTE: To detach without killing the container: CTRL+p then CTRL+q

To view the running container logs:

docker container logs -f ipls_test_peer

  - Singularity Version:

From ipls_anomaly_detection, navigate to peer_container/singularity on the Peer Devices

sh run_singularity.sh

NOTE: You may also run the container in the background as an instance via:

sh start_instance.sh

and

sh stop_instance.sh

NOTE: The terminal for this container should read 'Daemon is ready' before it runs the Java code, and then 'Connected to tcp://0.0.0.0:7883 with client ID' and 'Subscribing to topic "vehicle/1" qos 1'.

At that point the Peers have joined IPLS and are waiting for data.
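The log lines mentioned in the NOTE above can be checked automatically. This sketch reads log text on standard input and succeeds only if both markers appear. The function name is hypothetical, and the marker wording is taken from the NOTE above rather than from the IPLS source.

```shell
# Succeed if the log text on standard input contains both the IPFS daemon
# marker and the MQTT subscription marker quoted in the NOTE above.
peer_log_is_ready() {
    awk '
        /Daemon is ready/      { daemon = 1 }
        /Subscribing to topic/ { subscribed = 1 }
        END { exit !(daemon && subscribed) }
    '
}

# Example (Docker version), using the container name shown above:
#   docker container logs ipls_test_peer 2>&1 | peer_log_is_ready && echo "Peer is ready"
```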

- From ipls_anomaly_detection, navigate to data_generator on the Peer Devices:

sh run_docker.sh -n

NOTE: The "-n" flag will publish normal data, while the "-a" flag will publish anomaly data

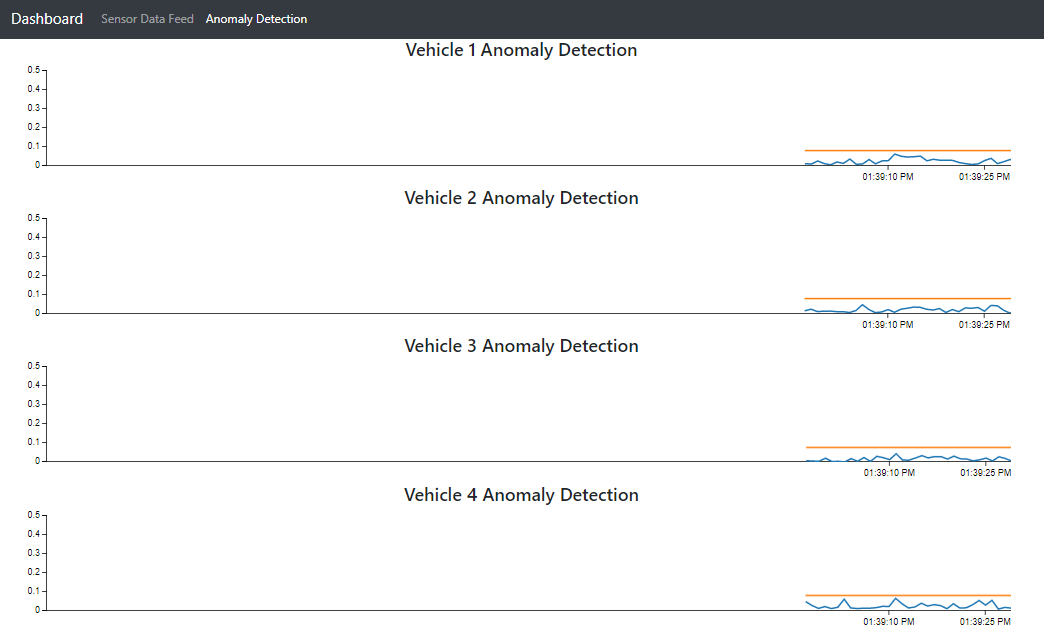

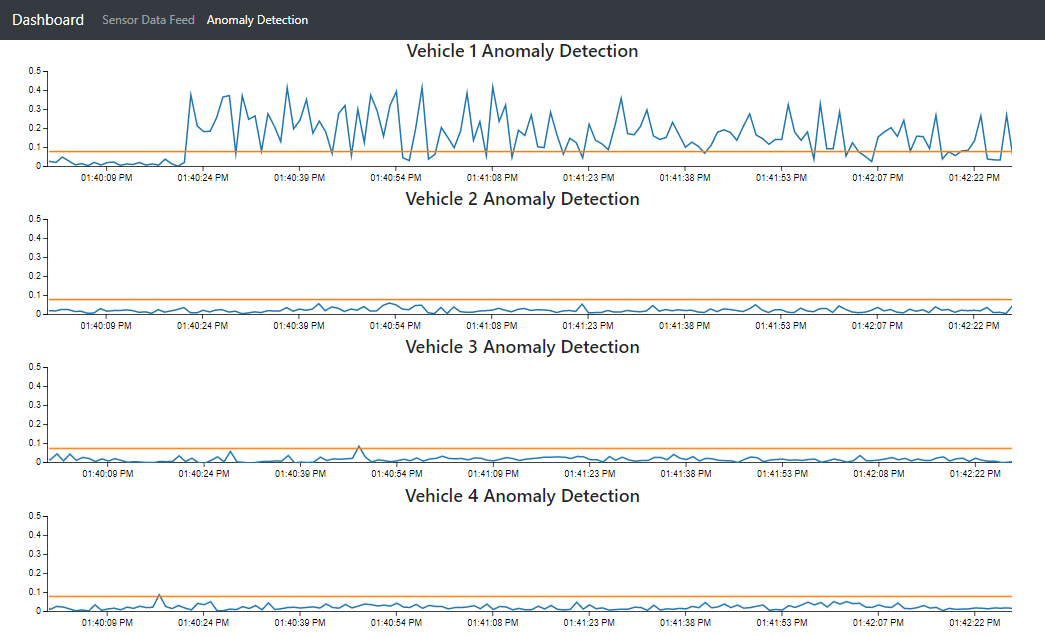

Once the data is flowing you will see the error scores being published back to the Dashboard.

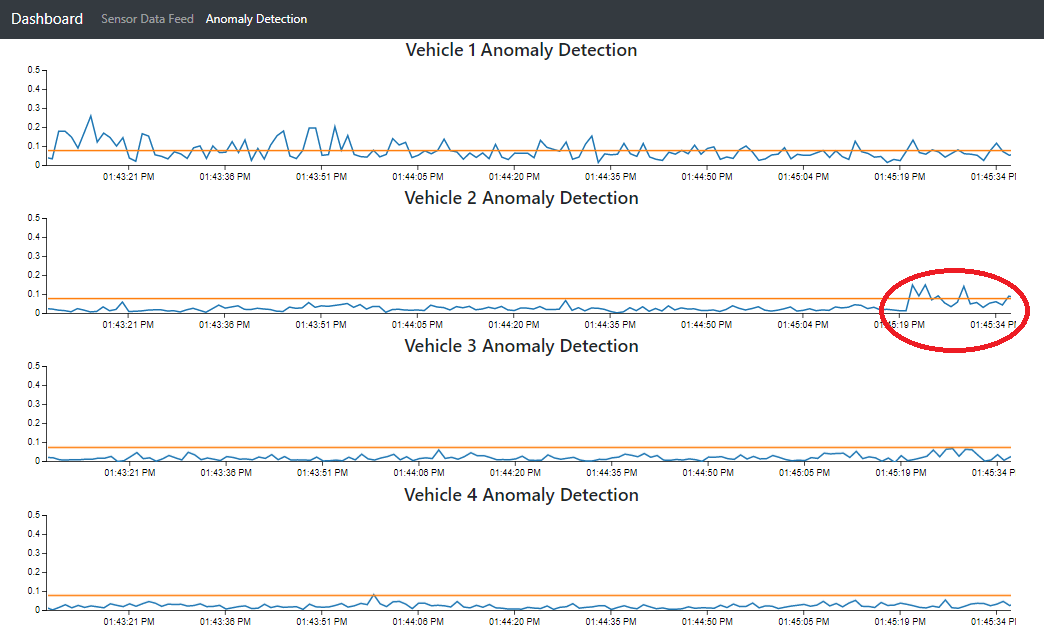

As shown here, Vehicle 4 is using "normal" data, so the blue line (the error scores) stays below the yellow line. The other three vehicles are using "anomaly" data.

The general "flow" for the demonstration is usually to start all four devices on normal data then swap one to anomaly data and let it train for several minutes.

After the blue error score line dips back below the yellow line, swap one or more of the other vehicles to anomaly data. You will notice that their error score lines do not spike as the first one did, because of the training already done on the first device and the sharing of model gradients via IPLS.
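As a rough intuition for why the other devices benefit from the first device's training, the sketch below averages per-Peer update vectors element by element. This is a simplified stand-in, not IPLS's actual aggregation protocol. The function name and the input format (one Peer per line, the same number of whitespace-separated values on each line) are assumptions made for illustration.

```shell
# Read one update vector per line and print their element-wise mean.
# Assumes every line has the same number of fields.
average_updates() {
    awk '
        { for (i = 1; i <= NF; i++) sum[i] += $i; if (NF > n) n = NF }
        END {
            for (i = 1; i <= n; i++)
                printf "%g%s", sum[i] / NR, (i < n ? " " : "\n")
        }
    '
}

# Two Peers, two parameters each:
printf '1 2\n3 4\n' | average_updates    # prints "2 3"
```

After such a step every participant holds the same averaged values, so what one Peer learned from anomaly data is already reflected in the others' models.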

NOTE: If you terminate a Peer container, it will break the training of the other Peers; this is due to a bug in the IPLS code. You will need to terminate the rest of the containers, then start them again to proceed.

If a new Peer joins after some initial Peers have been training for a while, you might observe an error score spike on the initial Peers; this is also due to a bug in the IPLS code. The spike is not persistent, and the scores will return to normal after the models update again.