Note: Supporting article available here.

This repository presents yet another extension to the Stable Diffusion model for image generation: a simple and fast strategy for prompting Stable Diffusion with an arbitrary composition of image and text tokens.


Clone this repository recursively (`git clone --recursive`) so that the original Stable Diffusion repository is also cloned as a submodule. Before proceeding to the next steps, set up text-to-image generation inside the `stable-diffusion` folder by following the instructions from its repository. This includes downloading their model into the appropriate place inside `stable-diffusion` and installing the requirements of `stable-diffusion`.
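Because `stable-diffusion` is a git submodule, a clone made without `--recursive` leaves that folder empty; running `git submodule update --init --recursive` from the repository root fetches it. A minimal pre-flight sketch, assuming it is run from the root of this repository (`SD_DIR` and `submodule_ready` are hypothetical helpers, not part of the repository):

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: is the stable-diffusion submodule checked out?
# SD_DIR defaults to the submodule folder named in this README.
SD_DIR="${SD_DIR:-stable-diffusion}"

# Succeeds only if the directory exists and contains at least one entry.
submodule_ready() {
  [ -d "$1" ] && [ -n "$(ls -A "$1")" ]
}

if submodule_ready "$SD_DIR"; then
  echo "$SD_DIR is present"
else
  echo "$SD_DIR is missing or empty; run: git submodule update --init --recursive" >&2
fi
```

This only checks that the folder is populated; it does not verify that the model has been downloaded or the requirements installed.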

This repository contains the n-gram prompt data inside `data`. Additionally, the preprocessed CLIP embeddings of these prompts need to be downloaded into `data` from here.

Design a multimodal prompt by replacing each image with the `[img]` token in the prompt string. For example, the prompt in the Figure above is `prompt = "A tiger taking a walk on [img]"`.
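Since each image in the prompt is represented by one `[img]` token, it can help to count the tokens before choosing the images. A minimal bash sketch; `count_img_tokens` is a hypothetical helper, not part of the repository:

```bash
#!/usr/bin/env bash
# Hypothetical helper: count literal "[img]" tokens in a prompt string.
count_img_tokens() {
  local prompt="$1" stripped
  # Remove every "[img]"; the brackets are escaped so they match literally
  # instead of acting as a glob character class.
  stripped=${prompt//\[img\]/}
  # Each removed token is 5 characters long.
  echo $(( (${#prompt} - ${#stripped}) / 5 ))
}

prompt="A tiger taking a walk on [img]"
count_img_tokens "$prompt"   # prints 1
```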

Let the comma separated paths to all images in the prompt be

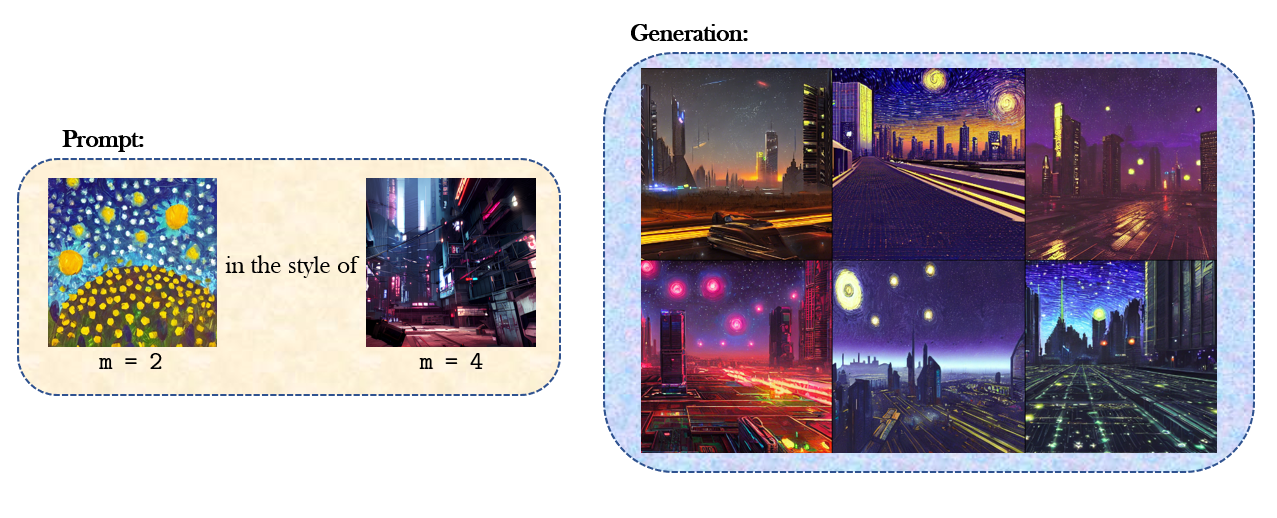

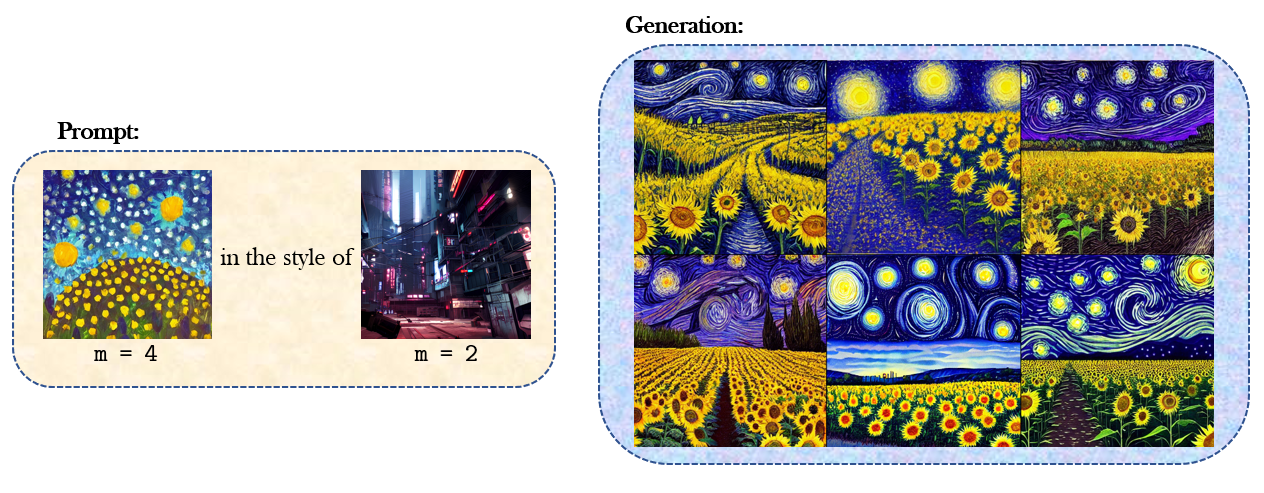

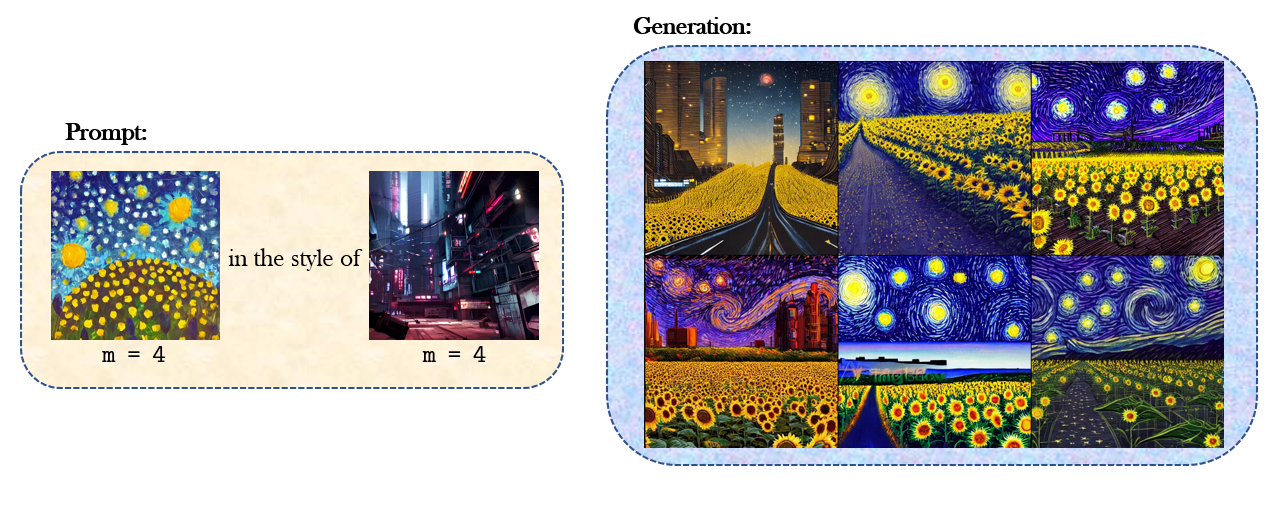

image_paths. Each image can optionally be assigned absolute weights (denoted as$m$ , see this for more details) ideally in the integer range 1-10. Let the comma separated weights beimage_weights. -
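When a prompt contains several images, building `image_paths` and `image_weights` from bash arrays keeps the two lists aligned. A minimal sketch; the file names are hypothetical placeholders, `join_by_comma` and `valid_weight` are hypothetical helpers, and it assumes the paths are listed in the same order as the `[img]` tokens in the prompt:

```bash
#!/usr/bin/env bash
# Hypothetical placeholder images for a prompt with two [img] tokens,
# listed in the (assumed) order of the tokens.
images=("tiger.png" "street.png")
# Optional absolute weights m, one per image, ideally integers in 1-10.
weights=(4 6)

# Join the arguments with commas (uses the first character of IFS).
join_by_comma() {
  local IFS=,
  echo "$*"
}

# Succeeds if $1 is an integer in the recommended range 1-10.
valid_weight() {
  [[ "$1" =~ ^[0-9]+$ ]] && [ "$1" -ge 1 ] && [ "$1" -le 10 ]
}

image_paths=$(join_by_comma "${images[@]}")
image_weights=$(join_by_comma "${weights[@]}")

if [ "${#images[@]}" -ne "${#weights[@]}" ]; then
  echo "warning: ${#images[@]} images but ${#weights[@]} weights" >&2
fi
for w in "${weights[@]}"; do
  valid_weight "$w" || echo "warning: weight '$w' is not an integer in 1-10" >&2
done

echo "$image_paths"    # tiger.png,street.png
echo "$image_weights"  # 4,6
```

Since the lists are comma-separated, image paths that themselves contain commas cannot be expressed this way.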

Run the following command:

    python mp2img.py \
        --prompt "$prompt" \
        --plms \
        --prompt-images "$image_paths" \
        --image-weights "$image_weights"

Some sample commands are shown in `commands.sh`.
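Because the prompt contains spaces, it has to reach `mp2img.py` as a single quoted argument. A minimal bash sketch of a wrapper that does this and also checks that the number of `[img]` tokens matches the number of image paths. `run_mp2img` is a hypothetical helper; it uses only the flags shown in the command above and assumes `--image-weights` can be omitted when no weights are given:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the mp2img.py command above.
# Usage: run_mp2img PROMPT IMAGE_PATHS [IMAGE_WEIGHTS]
run_mp2img() {
  local prompt="$1" image_paths="$2" image_weights="${3:-}"
  local stripped n_tokens
  local -a paths args

  # Count literal [img] tokens (5 characters each).
  stripped=${prompt//\[img\]/}
  n_tokens=$(( (${#prompt} - ${#stripped}) / 5 ))

  # Split the comma-separated paths to count them.
  IFS=, read -r -a paths <<< "$image_paths"
  if [ "$n_tokens" -ne "${#paths[@]}" ]; then
    echo "error: $n_tokens [img] token(s) but ${#paths[@]} image path(s)" >&2
    return 1
  fi

  # An array keeps the prompt as one argument despite its spaces.
  args=(--prompt "$prompt" --plms --prompt-images "$image_paths")
  # Assumption: --image-weights is optional and may be left out.
  if [ -n "$image_weights" ]; then
    args+=(--image-weights "$image_weights")
  fi
  python mp2img.py "${args[@]}"
}
```

For example, `run_mp2img "A tiger taking a walk on [img]" "$image_paths" "$image_weights"` runs the command above with the prompt passed as one argument.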

We see the effect of varying