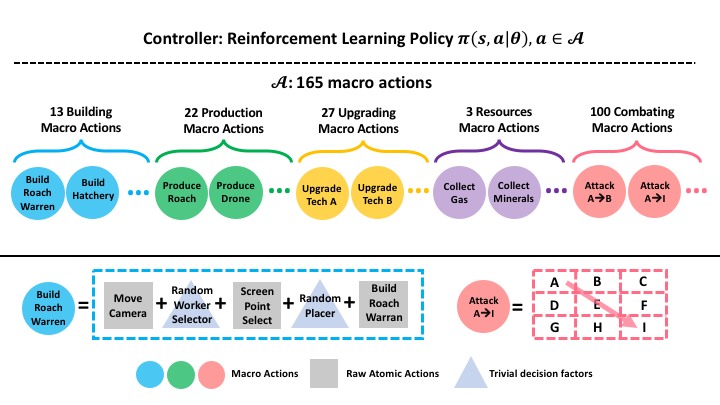

SC2Learner is a macro-action-based StarCraft-II reinforcement learning research platform. It exposes a re-designed StarCraft-II action space with more than one hundred discrete macro actions, built on the raw APIs exposed by DeepMind and Blizzard's PySC2. The macro action space relieves learning algorithms of the burden of directly handling a massive number of atomic keyboard and mouse operations, making learning more tractable. The environments and wrappers strictly follow the OpenAI Gym interface, so they are easy to adapt to many off-the-shelf reinforcement learning algorithms and implementations.

TStarBot1, a reinforcement learning agent, is also released, with two off-the-shelf reinforcement learning algorithms, Dueling Double Deep Q Network (DDQN) and Proximal Policy Optimization (PPO), as examples. Distributed versions of both algorithms are released, enabling learners to scale up rollout experience collection across thousands of CPU cores on a cluster of machines. TStarBot1 is able to beat the level-9 built-in AI (cheating resources) with a 97% win-rate and the level-10 built-in AI (cheating insane) with an 81% win-rate.

A whitepaper of TStarBots is available here.

- Python >= 3.5 required.

- PySC2 Extension required.

Git clone this repository and then install it with

    pip3 install -e sc2learner

Run a random agent playing against a built-in AI of difficulty level 1.

    python3 -m sc2learner.bin.evaluate --agent random --difficulty '1'

To train an agent with the PPO algorithm, actor workers and a learner worker must be started separately. They can run either locally or across separate machines (e.g. actors usually run on a CPU cluster consisting of hundreds of machines with tens of thousands of CPU cores, while the learner runs on a GPU machine). Given the designated ports and the learner's IP, rollout trajectories and model parameters are exchanged between the actors and the learner.

- Start 48 actor workers (run the same script on all actor machines)

      for i in $(seq 0 47); do
        CUDA_VISIBLE_DEVICES= python3 -m sc2learner.bin.train_ppo --job_name=actor --learner_ip localhost &
      done;

- Start a learner worker

      CUDA_VISIBLE_DEVICES=0 python3 -m sc2learner.bin.train_ppo --job_name learner

Similarly, the DQN algorithm can be tried with sc2learner.bin.train_dqn.
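The division of labor between actors and the learner can be pictured with a small in-process sketch. This is not the platform's implementation: it assumes threads and a `queue.Queue` in place of the network ports used across machines, and `run_actor`, `run_learner`, `train`, and the `params` dictionary are hypothetical names chosen for illustration.

```python
import queue
import threading


def run_actor(actor_id, trajectories, params, num_rollouts):
    """Produce stand-in rollouts tagged with the parameter version seen at the time."""
    for step in range(num_rollouts):
        version = params["version"]  # read the most recently published version
        # Placeholder rollout; a real actor would play a game episode here.
        trajectories.put({"actor": actor_id, "step": step, "version": version})


def run_learner(trajectories, params, total, batch_size):
    """Consume rollouts in batches and publish one new version per batch."""
    consumed = 0
    while consumed < total:
        size = min(batch_size, total - consumed)  # last batch may be smaller
        batch = [trajectories.get() for _ in range(size)]
        consumed += len(batch)
        params["version"] += 1  # placeholder for a gradient update
    return consumed


def train(num_actors=4, num_rollouts=6, batch_size=8):
    trajectories = queue.Queue()  # hypothetical stand-in for the rollout channel
    params = {"version": 0}       # hypothetical stand-in for model parameters
    actors = [
        threading.Thread(target=run_actor,
                         args=(i, trajectories, params, num_rollouts))
        for i in range(num_actors)
    ]
    for actor in actors:
        actor.start()
    consumed = run_learner(trajectories, params,
                           num_actors * num_rollouts, batch_size)
    for actor in actors:
        actor.join()
    return consumed, params["version"]


consumed, version = train()
```

Because actors only read the parameters and the learner only writes them, adding actors increases rollout throughput without changing the learner loop, which is the property the distributed setup relies on.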

After training, the agent's in-game performance can be observed by letting it play against a built-in AI of a given difficulty level. The win-rate is estimated at the same time from multiple such games initialized with different game seeds.

    python3 -m sc2learner.bin.evaluate --agent ppo --difficulty 1 --model_path REPLACE_WITH_YOUR_OWN_MODEL_PATH

We can also play against the learned agent ourselves by first starting a human player client and then a learned agent.

They can run either locally or remotely.

When run across two machines, the --remote argument needs to be set on the human player side to create an SSH tunnel to the remote agent's machine, and SSH keys must be used for authentication.

- Start a human player client

      CUDA_VISIBLE_DEVICES= python3 -m pysc2.bin.play_vs_agent --human --map AbyssalReef --user_race zerg

- Start a PPO agent

      python3 -m sc2learner.bin.play_vs_ppo_agent --model_path REPLACE_WITH_YOUR_OWN_MODEL_PATH

In addition, self-play training (playing against past versions of the agent) is provided, making it possible to learn more diverse strategies.
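One way to picture playing against past versions is a bounded pool of policy snapshots from which an opponent is drawn for each game. The sketch below is an assumption for illustration only: how the self-play trainer actually stores and samples past versions is not described here, and `OpponentPool`, its uniform sampling, and the `policy_v*` labels are hypothetical.

```python
import random


class OpponentPool:
    """Keeps the most recent policy snapshots and samples one as the opponent."""

    def __init__(self, max_size=10, seed=None):
        self.max_size = max_size
        self.snapshots = []
        self.rng = random.Random(seed)

    def add(self, snapshot):
        """Store a new snapshot, discarding the oldest one when the pool is full."""
        self.snapshots.append(snapshot)
        if len(self.snapshots) > self.max_size:
            self.snapshots.pop(0)

    def sample(self):
        """Pick an opponent uniformly at random (an assumed sampling rule)."""
        if not self.snapshots:
            raise ValueError("opponent pool is empty")
        return self.rng.choice(self.snapshots)


pool = OpponentPool(max_size=3, seed=0)
for version in range(5):
    pool.add("policy_v%d" % version)  # placeholder for saved model parameters
opponent = pool.sample()
```

Facing a mix of older and newer snapshots, rather than only the latest one, is a common way to keep the agent from overfitting to a single opponent strategy.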

- Start Actors

      for i in $(seq 0 48); do
        CUDA_VISIBLE_DEVICES= python3 -m sc2learner.bin.train_ppo_selfplay --job_name=actor --learner_ip localhost &
      done;

- Start Learner

      CUDA_VISIBLE_DEVICES=0 python3 -m sc2learner.bin.train_ppo_selfplay --job_name learner

The environments and wrappers strictly follow the interface of OpenAI Gym.

The macro action space is defined in ZergActionWrapper and the observation space in ZergObservationWrapper. Users can easily modify either wrapper and restart training to see the effect.
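The idea of a macro action wrapper behind a Gym-style interface can be sketched without any dependencies. This is not the code of ZergActionWrapper: `ToyEnv`, `MacroActionWrapper`, and the action labels are hypothetical, and the sketch assumes the classic Gym convention in which `step()` returns `(observation, reward, done, info)`.

```python
class ToyEnv:
    """Stand-in environment exposing a Gym-style reset()/step() interface."""

    def __init__(self):
        self.log = []

    def reset(self):
        self.log = []
        return len(self.log)

    def step(self, atomic_action):
        self.log.append(atomic_action)
        observation = len(self.log)  # toy observation: number of actions taken
        reward, done, info = 0.0, False, {}
        return observation, reward, done, info


class MacroActionWrapper:
    """Maps a discrete macro-action index to a sequence of atomic actions."""

    def __init__(self, env, macros):
        # Each macro is assumed to be a non-empty list of atomic actions.
        self.env = env
        self.macros = macros
        self.num_actions = len(macros)

    def reset(self):
        return self.env.reset()

    def step(self, macro_index):
        total_reward = 0.0
        for atomic_action in self.macros[macro_index]:
            observation, reward, done, info = self.env.step(atomic_action)
            total_reward += reward
            if done:  # stop early if the episode ends mid-macro
                break
        return observation, total_reward, done, info


# Hypothetical macro definitions, used only to exercise the wrapper.
macros = [
    ["select_larva", "train_drone"],
    ["select_drone", "build_spawning_pool"],
]
env = MacroActionWrapper(ToyEnv(), macros)
env.reset()
observation, reward, done, info = env.step(1)
```

The learning algorithm only sees `num_actions` discrete choices, while the wrapper expands each choice into the underlying atomic operations, so editing the macro list changes the action space without touching the algorithm.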

You are welcome to submit questions and bug reports in GitHub Issues. You are also welcome to contribute to this project.