This project is designed to help you select and analyse your research interests in a visually appealing way. With this project, you will be able to carry out the following tasks:

- Examine a research area that interests you and follow its trend over time and across conferences.

- View topics a particular researcher has been researching, based on his or her history of published research.

- Target a particular institution and see the latest and most popular research being conducted there.

Quick links to the Research Trends documentation

- Data Collection

- Data Processing

- Accessing the Application

- Running the Application

- System Diagram

- Extension

- Documentation

- Directory: ./collect

- Example outputs:

- Step1 outputs:

- raw xml: Scrapy's output in XML format. This file contains only papers published in 2010.

- papers.xml: Scrapy's output reformatted for readability, without affiliation and index-term information. This file contains only 100 papers.

- Step2 output:

- papers.xml: a 100-paper sample of Scrapy's output combined with information extracted from the PDFs, in XML format.

The aim of data collection was to build a corpus of all the publicly available research publications from research conference websites. To achieve this goal, we used the following flow:

From each research publication, we collected the following data-points:

- Title of the paper

- Abstract of the paper

- List of authors

- URL

- List of affiliated institutions

- Keywords/Index terms
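As a rough illustration of the record collected per publication, the data points above could be held in a structure like the following. This is only a sketch: the class name `Paper`, its field names, and the helper `missing_fields` are assumptions made for this example and are not taken from the project code.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Paper:
    """One collected publication (field names are illustrative assumptions)."""
    title: str
    abstract: str
    url: str
    authors: List[str] = field(default_factory=list)
    affiliations: List[str] = field(default_factory=list)
    keywords: List[str] = field(default_factory=list)

    def missing_fields(self) -> List[str]:
        """Return the names of data points that are still empty."""
        return [name for name, value in vars(self).items() if not value]


# A record as it might look before affiliations and index terms are added.
paper = Paper(
    title="An Example Paper",
    abstract="A placeholder abstract.",
    url="https://example.org/paper/1",
    authors=["A. Author"],
)
print(paper.missing_fields())
```

A check such as `missing_fields` makes it easy to see which records still lack affiliation or index-term information after the first collection step.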

- Documentation

- Directory: ./process

- Example outputs:

- papers.xml: 100 example papers with augmented keywords.

- XML to JSON results: 100 example papers. We later use Django commands to load these JSON files into the MySQL database.

In addition to extracting information with the techniques described above, we applied several techniques to enlarge and broaden our collection of keywords. We used the following keyword extraction techniques:

- TF-IDF-based keyword extraction

- Capitalized words extraction

- Topic Modelling

- Clustering

Because the index-term dataset is sparse and long-tailed (most index terms occur in only a few papers), these techniques were needed to widen the keyword coverage of the dataset.
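To make the first technique concrete, here is a minimal TF-IDF keyword sketch in plain Python. It is not the project's implementation: the function names, the simple lowercase tokenizer, the unsmoothed `log(N / df)` weighting, and the sample abstracts are all assumptions chosen for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and keep alphabetic tokens only."""
    return re.findall(r"[a-z]+", text.lower())


def tfidf_keywords(docs: List[str], top_k: int = 3) -> List[List[str]]:
    """Return the top_k highest-scoring terms for each document."""
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    results = []
    for tokens in tokenized:
        tf = Counter(tokens)
        scores = {
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in tf.items()
        }
        # Sort by descending score; break ties alphabetically.
        ranked = sorted(scores, key=lambda term: (-scores[term], term))
        results.append(ranked[:top_k])
    return results


# Made-up abstracts, used only to exercise the function.
docs = [
    "graph neural networks for graph classification",
    "neural networks for image classification",
    "image segmentation with neural networks",
]
keywords = tfidf_keywords(docs, top_k=1)
print(keywords)
```

Terms that appear in every document (here "neural" and "networks") receive a score of zero, which is why TF-IDF tends to surface terms specific to a paper.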

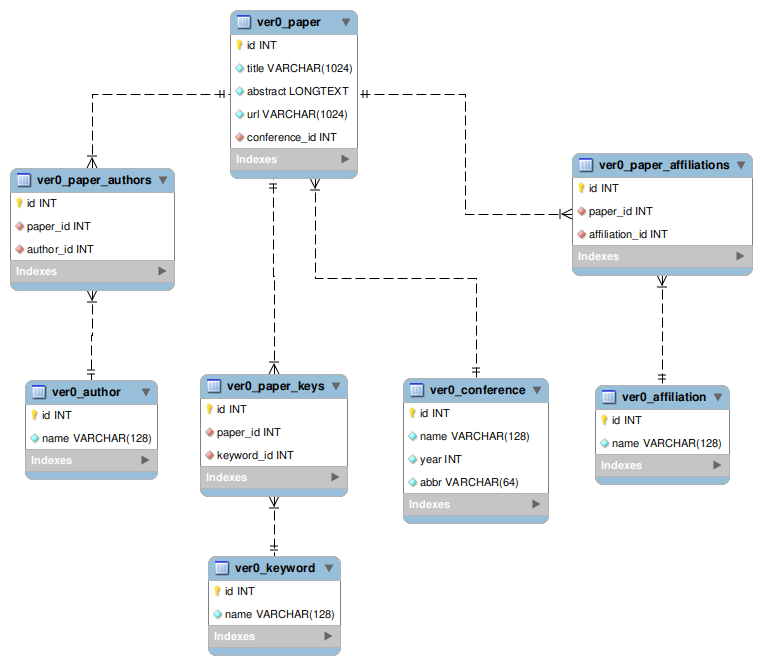

We use a relational database and Django to serve the application, so we needed to decouple the XML files into 5 tables: conference, author, affiliations, keywords, and paper. Because Django can load JSON data into the database through its own models, we used JSON to represent these 5 tables.
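The decoupling step could look roughly like the sketch below, which splits one XML record into per-table rows and prints the paper rows as JSON. The XML tag names, the row layout, and the function `split_into_tables` are hypothetical; the real papers.xml and JSON files may be organised differently.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical XML layout; the real papers.xml may use different tags.
SAMPLE = """
<papers>
  <paper>
    <title>An Example Paper</title>
    <conference>ExampleConf</conference>
    <author>A. Author</author>
    <author>B. Author</author>
    <affiliation>Example University</affiliation>
    <keyword>clustering</keyword>
  </paper>
</papers>
"""

LOOKUP_TABLES = ("conference", "author", "affiliation", "keyword")


def split_into_tables(xml_text):
    """Split nested paper records into one list of rows per table."""
    tables = {name: [] for name in LOOKUP_TABLES + ("paper",)}
    ids = {name: {} for name in LOOKUP_TABLES}

    def get_id(table, name):
        # Reuse the id of a value seen before, otherwise add a new row.
        if name not in ids[table]:
            ids[table][name] = len(ids[table]) + 1
            tables[table].append({"id": ids[table][name], "name": name})
        return ids[table][name]

    for i, node in enumerate(ET.fromstring(xml_text).iter("paper"), start=1):
        tables["paper"].append({
            "id": i,
            "title": node.findtext("title"),
            "conference_id": get_id("conference", node.findtext("conference")),
            "author_ids": [get_id("author", a.text) for a in node.findall("author")],
            "affiliation_ids": [get_id("affiliation", a.text) for a in node.findall("affiliation")],
            "keyword_ids": [get_id("keyword", k.text) for k in node.findall("keyword")],
        })
    return tables


tables = split_into_tables(SAMPLE)
print(json.dumps(tables["paper"], indent=2))
```

Giving each distinct author, affiliation, keyword, and conference a single id avoids duplicating the same entity across papers.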

There are three many-to-many relations:

- paper and author

- paper and keyword

- paper and affiliation

The conference_id column is a foreign key in the paper table.
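The relations described above can be sketched as a schema. The example below uses Python's built-in `sqlite3` module purely so that it runs without a database server; the project itself uses MySQL through Django, and every table and column name other than `conference_id` is an assumption.

```python
import sqlite3

# Illustrative schema: five main tables plus three junction tables.
SCHEMA = """
CREATE TABLE conference  (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE author      (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE keyword     (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE affiliation (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE paper (
    id INTEGER PRIMARY KEY,
    title TEXT NOT NULL,
    conference_id INTEGER NOT NULL REFERENCES conference(id)
);
CREATE TABLE paper_author (
    paper_id INTEGER REFERENCES paper(id),
    author_id INTEGER REFERENCES author(id),
    PRIMARY KEY (paper_id, author_id)
);
CREATE TABLE paper_keyword (
    paper_id INTEGER REFERENCES paper(id),
    keyword_id INTEGER REFERENCES keyword(id),
    PRIMARY KEY (paper_id, keyword_id)
);
CREATE TABLE paper_affiliation (
    paper_id INTEGER REFERENCES paper(id),
    affiliation_id INTEGER REFERENCES affiliation(id),
    PRIMARY KEY (paper_id, affiliation_id)
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO conference VALUES (1, 'ExampleConf')")
conn.execute("INSERT INTO paper VALUES (1, 'An Example Paper', 1)")
conn.execute("INSERT INTO author VALUES (1, 'A. Author')")
conn.execute("INSERT INTO paper_author VALUES (1, 1)")

# Resolve the many-to-many relation between authors and papers.
rows = conn.execute(
    "SELECT author.name, paper.title FROM author "
    "JOIN paper_author ON paper_author.author_id = author.id "
    "JOIN paper ON paper.id = paper_author.paper_id"
).fetchall()
print(rows)
```

In Django, junction tables like these are normally generated automatically when a model declares a many-to-many field, so they would not need to be written by hand.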

- Documentation: screenshots and usage

- Directory: ./research_trends

- Example database:

- mini.sql: This mini-database is extracted from a larger one. It has 100 papers and contains 5 main tables and the many-to-many relations.

Finally, to make our entire pipeline accessible to the end user, we used the Python Django framework, which follows a Model-View-Controller (MVC) style architecture. To render the visual features, charts, and plots in our project, we also used Chart.js.

The application is built on the Django framework, so it runs in the same way as any other Django project. To install the project's dependencies, we advise you to create a virtual environment and install the packages inside it as follows:

`pip install -r requirements.txt`

The required file, requirements.txt, can be found in the project folder itself.

Once all the packages have been installed, navigate to the research_trends directory and follow the Usage section of the documentation to run the application.

This module compares the write and read efficiency of three database types: MySQL, MongoDB, and Elasticsearch. The comparison gives us insight into which database backend we should plug into the Django framework.
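A comparison of this kind can be organised around a small timing harness such as the sketch below. It is not the module's actual code: the function `time_backend` and the in-memory dictionary backend are assumptions, and a real run would pass functions that wrap MySQL, MongoDB, or Elasticsearch clients.

```python
import time
from typing import Callable, Dict, Iterable, Tuple


def time_backend(
    write: Callable[[dict], None],
    read: Callable[[int], dict],
    records: Iterable[dict],
) -> Tuple[float, float]:
    """Return (write_seconds, read_seconds) for one backend."""
    records = list(records)

    start = time.perf_counter()
    for record in records:
        write(record)
    write_seconds = time.perf_counter() - start

    start = time.perf_counter()
    for record in records:
        read(record["id"])
    read_seconds = time.perf_counter() - start

    return write_seconds, read_seconds


# In-memory stand-in backend, used only so that the sketch runs on its own.
store: Dict[int, dict] = {}
records = [{"id": i, "title": f"paper {i}"} for i in range(1000)]
write_s, read_s = time_backend(
    lambda record: store.__setitem__(record["id"], record),
    store.__getitem__,
    records,
)
print(f"write: {write_s:.6f}s, read: {read_s:.6f}s")
```

Passing the write and read operations as plain functions keeps the timing logic identical for every backend, so differences in the results come from the databases rather than from the harness.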

A detailed report can be found here