We welcome contributions from the community to help enhance GraphRAG Local Ollama! Please see our Contributing Guidelines for more details on how to get involved.

Need support for llama integration.

Welcome to GraphRAG Local Ollama! This repository is an exciting adaptation of Microsoft's GraphRAG, tailored to support local models downloaded using Ollama. Say goodbye to costly OpenAPI models and hello to efficient, cost-effective local inference using Ollama!

For more details on the GraphRAG implementation, please refer to the GraphRAG paper.

Paper Abstract

The use of retrieval-augmented generation (RAG) to retrieve relevant information from an external knowledge source enables large language models (LLMs)to answer questions over private and/or previously unseen document collections.However, RAG fails on global questions directed at an entire text corpus, suchas “What are the main themes in the dataset?”, since this is inherently a queryfocused summarization (QFS) task, rather than an explicit retrieval task. PriorQFS methods, meanwhile, fail to scale to the quantities of text indexed by typicalRAG systems. To combine the strengths of these contrasting methods, we proposea Graph RAG approach to question answering over private text corpora that scaleswith both the generality of user questions and the quantity of source text to be indexed. Our approach uses an LLM to build a graph-based text index in two stages:first to derive an entity knowledge graph from the source documents, then to pregenerate community summaries for all groups of closely-related entities. Given aquestion, each community summary is used to generate a partial response, beforeall partial responses are again summarized in a final response to the user. For aclass of global sensemaking questions over datasets in the 1 million token range,we show that Graph RAG leads to substantial improvements over a na¨ıve RAGbaseline for both the comprehensiveness and diversity of generated answers.

- Local Model Support: Leverage local models with Ollama for LLM and embeddings.

- Cost-Effective: Eliminate dependency on costly OpenAPI models.

- Easy Setup: Simple and straightforward setup process.

Follow these steps to set up this repository and use GraphRag with local models provided by Ollama :

-

Create and activate a new conda environment: (please stick to the given python version 3.10 for no errors)

conda create -n graphrag-ollama-local python=3.10 conda activate graphrag-ollama-local

-

Install Ollama:

- Visit Ollama's website for installation instructions.

- Or, run:

curl -fsSL https://ollama.com/install.sh | sh #ollama for linux pip install ollama

-

Download the required models using Ollama, we can choose from (mistral,gemma2, qwen2) for llm and any embedding model provided under Ollama:

ollama pull mistral #llm ollama pull nomic-embed-text #embedding

-

Clone the repository:

git clone https://github.com/TheAiSingularity/graphrag-local-ollama.git

-

Navigate to the repository directory:

cd graphrag-local-ollama/ -

Install the graphrag package ** This is the most important step :

pip install -e . -

Create the required input directory: This is where the experiments data and results will be stored - ./ragtest

mkdir -p ./ragtest/input

-

Copy sample data folder input/ to ./ragtest. Input/ has the sample data to run the setup. You can add your own data here in .txt format.

cp input/* ./ragtest/input -

Initialize the ./ragtest folder to create the required files:

python -m graphrag.index --init --root ./ragtest

-

Move the settings.yaml file, this is the main predefined config file configured with ollama local models :

cp settings.yaml ./ragtest

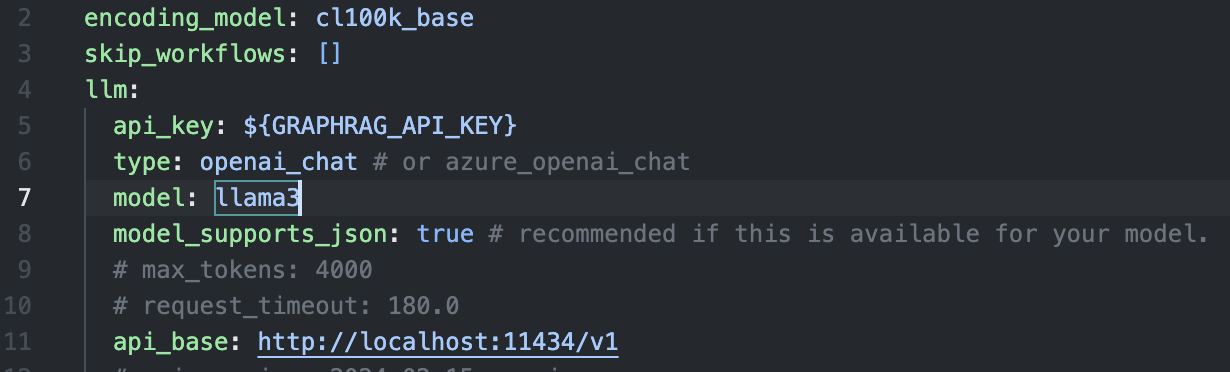

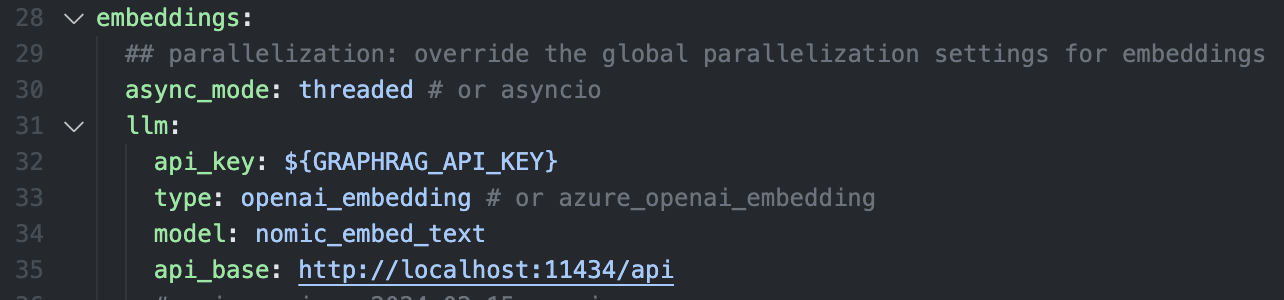

Users can experiment by changing the models. The llm model expects language models like llama3, mistral, phi3, etc., and the embedding model section expects embedding models like mxbai-embed-large, nomic-embed-text, etc., which are provided by Ollama. You can find the complete list of models provided by Ollama here https://ollama.com/library, which can be deployed locally. The default API base URLs are http://localhost:11434/v1 for LLM and http://localhost:11434/api for embeddings, hence they are added to the respective sections.

-

Run the indexing, which creates a graph:

python -m graphrag.index --root ./ragtest

-

Run a query: Only supports Global method

python -m graphrag.query --root ./ragtest --method global "What is machine learning?"

Graphs can be saved which further can be used for visualization by changing the graphml to "true" in the settings.yaml :

snapshots:

graphml: true

To visualize the generated graphml files, you can use : https://gephi.org/users/download/ or the script provided in the repo visualize-graphml.py :

Pass the path to the .graphml file to the below line in visualize-graphml.py:

graph = nx.read_graphml('output/20240708-161630/artifacts/summarized_graph.graphml')

-

Visualize .graphml :

python visualize-graphml.py

By following the above steps, you can set up and use local models with GraphRAG, making the process more cost-effective and efficient.