This approach lets you use ControlNet diffusion models for video-to-video processing.

It does not require any training: all models can be used out of the box, as is.

This repo uses the ControlNet pipeline from diffusers.

You can also use the UNet from any other fine-tuned model. I used Deliberate to create the GIF examples.

P.S. Empirically, Deliberate with the SoftEdge (and Depth) ControlNet gives the most stable results.

It takes ~7 GB of GPU memory with the default model and ~8.5 GB with an additional UNet.

git clone https://github.com/TheDenk/Attention-Interpolation.git

cd Attention-Interpolation

pip install -r requirements.txt

The run.py script reads a config file from the configs folder; by default this is default.yaml. The config file contains all generation parameters, such as the prompt, seed, etc.

python run.py

Or override the prompt from the config:

python run.py --prompt="Iron man in helmet, high quality, 4k"

Or pass a config file explicitly:

python run.py --config=./configs/default.yaml

Or select a GPU:

CUDA_VISIBLE_DEVICES=0 python run.py --config=./configs/default.yaml

Or set the input video path, output video path and prompt:

python run.py --prompt="Iron man in helmet, high quality, 4k" \
    --input_video_path="./video/input/man.mp4" \
    --output_video_path="./video/output/default.mp4"

Any ControlNet model can be used for image generation.
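The command-line flags in the usage examples take precedence over values from the config file. The sketch below illustrates that "config defaults, CLI overrides" pattern with the standard argparse module. It is not the repo's run.py: DEFAULT_CONFIG, OVERRIDABLE_KEYS, build_parser and merge_config are hypothetical names, and a plain dict with placeholder values stands in for the parsed default.yaml.

```python
import argparse
from typing import Any, Dict

# Hypothetical stand-in for configs/default.yaml. The real file is YAML,
# holds more parameters, and its actual values are not reproduced here.
DEFAULT_CONFIG: Dict[str, Any] = {
    "prompt": "<prompt from the config>",
    "seed": 0,
    "input_video_path": "<input path from the config>",
    "output_video_path": "<output path from the config>",
}

# Config keys that this sketch allows to be overridden from the command line
# (only the flags that appear in the usage examples).
OVERRIDABLE_KEYS = ("prompt", "input_video_path", "output_video_path")


def build_parser() -> argparse.ArgumentParser:
    """Build a parser mirroring the flags shown in the usage examples."""
    parser = argparse.ArgumentParser(description="Sketch of run.py-style overrides")
    parser.add_argument("--config", default="./configs/default.yaml")
    for key in OVERRIDABLE_KEYS:
        # Default None means "flag not given, keep the config value".
        parser.add_argument(f"--{key}", default=None)
    return parser


def merge_config(config: Dict[str, Any], args: argparse.Namespace) -> Dict[str, Any]:
    """Return a copy of config with every explicitly passed flag applied on top."""
    merged = dict(config)
    for key in OVERRIDABLE_KEYS:
        value = getattr(args, key)
        if value is not None:
            merged[key] = value
    return merged
```

For example, merge_config(DEFAULT_CONFIG, build_parser().parse_args(["--prompt=Iron man in helmet, high quality, 4k"])) replaces only the prompt and keeps every other config value. Using None as the argparse default is what lets the merge distinguish "flag omitted" from "flag set".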

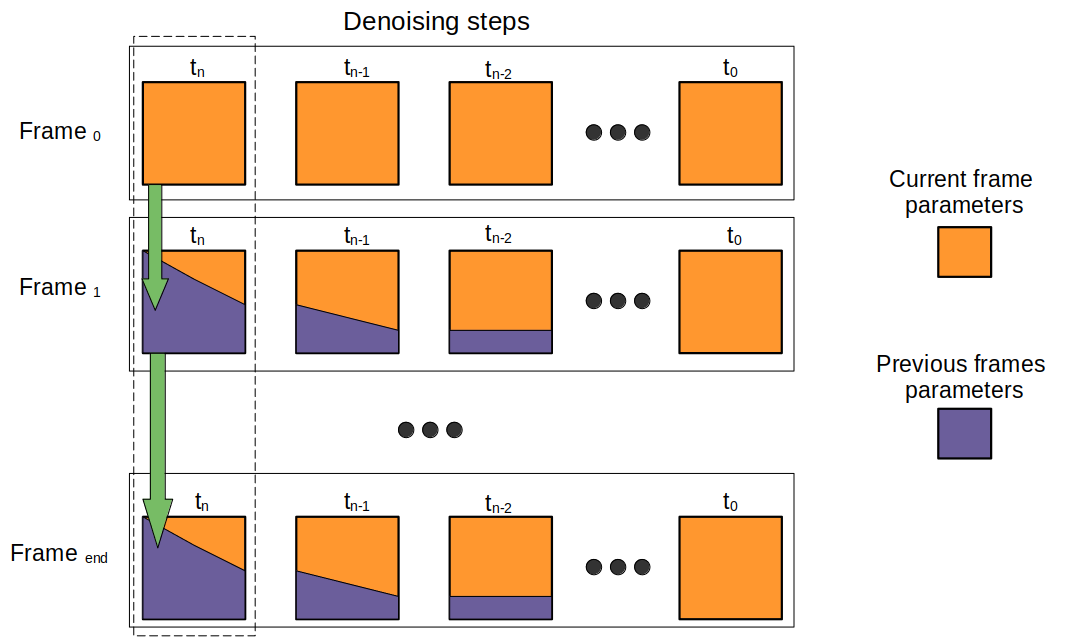

Attention interpolation can be applied to different layers of the attention mechanism, for example: Query, Key, Value, Attention Map and Output Linear Layer.

In practice, interpolating the Attention Map and the Output Linear Layer gives the best results.
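A minimal sketch of the interpolation idea, assuming that each selected layer's output for the current frame is blended with a running average of the same layer's output from previous frames, weighted by the ema parameter from the config. The blend direction (ema weighting the previous frames) is an assumption, plain Python lists stand in for the PyTorch tensors the real code works with, and lerp and FrameEMA are hypothetical names rather than the repo's API.

```python
from typing import List, Optional

Vector = List[float]  # flattened stand-in for an attention map or layer output


def lerp(previous: Vector, current: Vector, ema: float) -> Vector:
    """Blend two same-shaped tensors: ema * previous + (1 - ema) * current."""
    if len(previous) != len(current):
        raise ValueError("tensors must have the same shape")
    return [ema * p + (1.0 - ema) * c for p, c in zip(previous, current)]


class FrameEMA:
    """Keep an exponential moving average of one layer's output across frames."""

    def __init__(self) -> None:
        self.state: Optional[Vector] = None

    def update(self, current: Vector, ema: float) -> Vector:
        if self.state is None:
            # First frame: there is nothing to interpolate with yet.
            self.state = list(current)
        else:
            # Later frames: pull the current output toward the previous frames.
            self.state = lerp(self.state, current, ema)
        return list(self.state)
```

With PyTorch tensors the same blend can be written as torch.lerp(current, previous, ema), which computes current + ema * (previous - current). Keeping one FrameEMA per layer means, for example, the Attention Map and Output Linear Layer can be smoothed independently.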

interpolation_scheduler: ema  # <-- rule for changing the ema parameter (ema, cos or linear)
ema: 0.625  # <-- interpolation point between the previous frames and the current frame
eta: 0.875  # <-- with the ema scheduler, ema is updated at each denoising step as ema = ema * eta (not used by cos and linear)
...
use_interpolation:  # <-- layers for which interpolation is applied
  key: false
  ...
  out_linear: true
attention_res: 32  # <-- maximum attention map resolution for which interpolation is applied
allow_names:  # <-- parts of the UNet for which interpolation is applied
  - down
  ...

Issues should be raised directly in the repository. For professional support and recommendations, please contact welcomedenk@gmail.com.
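The scheduler and layer-selection settings in the config can be sketched as follows. ema_schedule follows the rule stated for the ema scheduler (ema = ema * eta at each denoising step), assuming the first step uses the initial value unchanged; the cos and linear schedulers are not covered because their formulas are not described here. should_interpolate is a hypothetical filter that assumes allow_names entries are matched as substrings of diffusers-style layer names (such as down_blocks.1.attentions.0...) and that attention_res is compared against the side length of a layer's spatial attention map.

```python
from typing import Iterable, List


def ema_schedule(ema: float, eta: float, num_steps: int) -> List[float]:
    """Per-step ema values for the "ema" scheduler: ema = ema * eta after each step.

    Assumption: the first denoising step uses the initial ema value unchanged.
    """
    values: List[float] = []
    for _ in range(num_steps):
        values.append(ema)
        ema *= eta
    return values


def should_interpolate(
    layer_name: str,
    layer_res: int,
    attention_res: int,
    allow_names: Iterable[str],
) -> bool:
    """Hypothetical filter: interpolate only attention maps no larger than
    attention_res, in UNet parts whose name contains an allow_names entry."""
    if layer_res > attention_res:
        return False
    return any(part in layer_name for part in allow_names)
```

With the default values, ema_schedule(0.625, 0.875, 3) returns [0.625, 0.546875, 0.478515625], so ema shrinks geometrically over the denoising steps. With attention_res: 32 and allow_names: [down], a 32-pixel map in down_blocks.1 passes the filter, while a 64-pixel map or any layer in up_blocks does not.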