Experimentation setup for the "Lottery Ticket" hypothesis for neural networks.

Used for our report titled "An Analysis of Neural Network Pruning in Relation to the Lottery Ticket Hypothesis" for a course at the University of Groningen.

The lottery ticket hypothesis refers to an idea relating to neural network pruning, as presented by Frankle and Carbin (J. Frankle and M. Carbin. The lottery ticket hypothesis: Training pruned neural networks. CoRR, abs/1803.03635, 2018. URL http://arxiv.org/abs/1803.03635. Accessed: February 2020.). With the code in this repository, we experimented on this hypothesis with a LeNet model on the MNIST dataset.

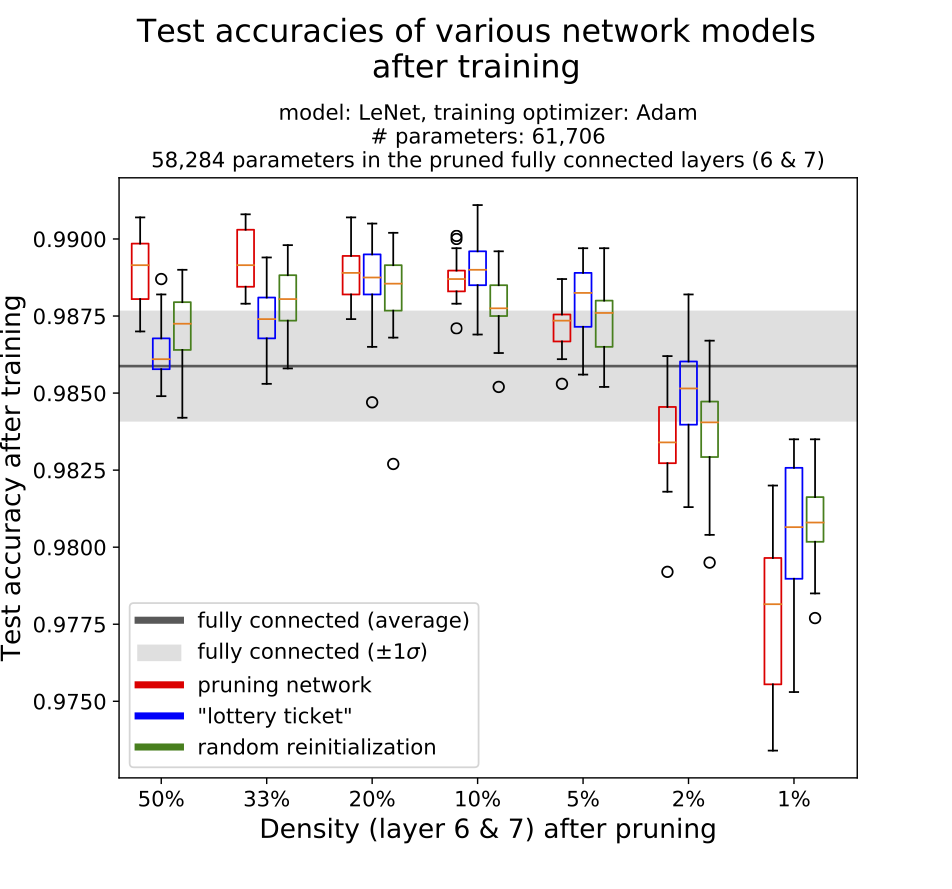

Consider the following figure.

We can interpret the figure above as follows: when the selected neural network model (LeNet) is pruned down to, say, 5% density using the "lottery ticket" principle, it achieves a higher test accuracy on MNIST than the original, fully dense model or a model pruned with another approach.
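To make the "lottery ticket" principle a bit more concrete, here is a minimal NumPy sketch of its core step: keep only the largest-magnitude weights of a trained layer and reset the survivors to their initial values. It is an illustration under stated assumptions, not the code used in this repository. The function names (`magnitude_mask`, `lottery_ticket_reset`) are hypothetical, and the "trained" weights are simulated by adding random noise instead of actually training a model.

```python
import numpy as np


def magnitude_mask(weights, density):
    """Return a 0/1 mask that keeps the `density` fraction of largest-magnitude weights."""
    flat = np.abs(weights).ravel()
    keep = max(1, int(round(density * flat.size)))
    # The threshold is the magnitude of the keep-th largest weight.
    threshold = np.partition(flat, flat.size - keep)[flat.size - keep]
    return (np.abs(weights) >= threshold).astype(weights.dtype)


def lottery_ticket_reset(initial_weights, trained_weights, density):
    """Prune by trained magnitude, then rewind the surviving weights to their initial values."""
    mask = magnitude_mask(trained_weights, density)
    return initial_weights * mask, mask


rng = np.random.default_rng(0)
initial = rng.normal(size=(300, 100))  # hypothetical dense layer at initialization
# Stand-in for training: perturb the initial weights with noise (assumption, no real training).
trained = initial + rng.normal(scale=0.5, size=initial.shape)

ticket, mask = lottery_ticket_reset(initial, trained, density=0.05)
print("fraction of weights kept:", mask.mean())
```

In a real experiment, the masked network would then be retrained from `ticket` with the mask held fixed, and its test accuracy compared against the dense baseline.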

Check out our complete report for more information.

All the experiment code can be found in the code folder. Currently, this consists of two un-refactored scripts for running baseline and lottery-ticket experiments, respectively: LotteryTicket_baseline_lr.py and LotteryTicket_lr.py.

To run the code you need a Python 3 installation (any version will probably do) and an installation of TensorFlow (tensorflow.org). You will also need at least the Python packages keras, tensorflow-model-optimization and matplotlib, and possibly more.

You will also need the MNIST dataset in the same folder as the code. It consists of four files, which can be found at yann.lecun.com/exdb/mnist:

- train-images-idx3-ubyte.gz
- train-labels-idx1-ubyte.gz
- t10k-images-idx3-ubyte.gz
- t10k-labels-idx1-ubyte.gz
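For reference, these files use the gzip-compressed IDX format: a 4-byte header (two zero bytes, a data-type code, and the number of dimensions), followed by one big-endian 32-bit size per dimension and then the raw bytes. The sketch below shows one way such a file could be read into a NumPy array. The helper name `read_idx` is hypothetical, and the experiment scripts may well load the data differently.

```python
import gzip
import struct

import numpy as np


def read_idx(path):
    """Read a gzip-compressed, unsigned-byte IDX file into a NumPy uint8 array."""
    with gzip.open(path, "rb") as f:
        # Header: two zero bytes, a data-type code, and the number of dimensions.
        zero, dtype_code, ndim = struct.unpack(">HBB", f.read(4))
        if zero != 0 or dtype_code != 0x08:
            raise ValueError(f"{path}: not an unsigned-byte IDX file")
        # Each dimension size is a big-endian 32-bit unsigned integer.
        shape = struct.unpack(">" + "I" * ndim, f.read(4 * ndim))
        data = np.frombuffer(f.read(), dtype=np.uint8)
    return data.reshape(shape)


# Example usage, assuming the four files sit next to this script:
# x_train = read_idx("train-images-idx3-ubyte.gz")  # shape (60000, 28, 28)
# y_train = read_idx("train-labels-idx1-ubyte.gz")  # shape (60000,)
```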

The LotteryTicket_lr.py script takes two arguments:

- PRUNING_FACTOR: The factor to which the dense layers of the neural network will be pruned. For example, a value of 0.1 will result in a final model with only 10% of the neurons active in the dense layers.
- EPOCHS: The number of training epochs. The training procedure is equipped with an early-stopping mechanism, but you still have to choose an adequate number of epochs yourself. For our experiments, we used 500-1000 epochs, depending on the pruning factor. Aggressive pruning, i.e. a small pruning factor, generally requires more epochs.

The LotteryTicket_baseline_lr.py script only takes the EPOCHS argument as it does not prune. We used a value of 500 here for our experiments.
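As an illustration of how the two arguments relate, the following sketch parses and validates them with `argparse`. It is hypothetical: the positional order (PRUNING_FACTOR first, then EPOCHS), the function name `parse_args`, and the validation rules are assumptions, and the actual scripts may read their arguments differently.

```python
import argparse


def parse_args(argv=None, with_pruning=True):
    """Parse EPOCHS and, for the lottery-ticket case, PRUNING_FACTOR (hypothetical layout)."""
    parser = argparse.ArgumentParser(description="Argument parsing sketch")
    if with_pruning:
        # Assumed to be the fraction of the dense layers that remains after pruning.
        parser.add_argument("pruning_factor", type=float,
                            help="target density of the dense layers, in (0, 1]")
    parser.add_argument("epochs", type=int, help="maximum number of training epochs")
    args = parser.parse_args(argv)
    if with_pruning and not 0.0 < args.pruning_factor <= 1.0:
        parser.error("PRUNING_FACTOR must be in (0, 1]")
    if args.epochs < 1:
        parser.error("EPOCHS must be at least 1")
    return args


# Lottery-ticket style call: prune to 10% density, train for at most 500 epochs.
args = parse_args(["0.1", "500"])
print(args.pruning_factor, args.epochs)

# Baseline style call: only EPOCHS is given.
baseline_args = parse_args(["500"], with_pruning=False)
print(baseline_args.epochs)
```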

On a good GPU, the experiments should run quite fast (<10 µs/sample for 60,000 samples).

The figure at the top of this README was generated by another Python script, which might be added to this repository later.