I am reading these papers:

✅ LLaMA: Open and Efficient Foundation Language Models

✅ Llama 2: Open Foundation and Fine-Tuned Chat Models

☑️ OPT: Open Pre-trained Transformer Language Models

✅ Attention Is All You Need

✅ Root Mean Square Layer Normalization

✅ GLU Variants Improve Transformer

✅ RoFormer: Enhanced Transformer with Rotary Position Embedding

✅ Self-Attention with Relative Position Representations

☑️ BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

☑️ To Fold or Not to Fold: a Necessary and Sufficient Condition on Batch-Normalization Layers Folding

✅ Fast Transformer Decoding: One Write-Head is All You Need

✅ GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints

☑️ PaLM: Scaling Language Modeling with Pathways

✅ Understand the concept of the dot product of two matrices.

✅ Understand the concept of autoregressive language models.

✅ Understand the concept of attention computation.

✅ Understand the workings of the Byte-Pair Encoding (BPE) algorithm and tokenizer.

✅ Read about the SentencePiece library and tokenizer and implement their workings.

✅ Understand the concept of tokenization, input ids and embedding vectors.

✅ Understand & implement the concept of positional encoding.

✅ Understand the concept of single head self-attention.

✅ Understand the concept of scaled dot-product attention.

✅ Understand & implement the concept of multi-head attention.

✅ Understand & implement the concept of layer normalization.

✅ Understand the concept of masked multi-head attention & softmax layer.

✅ Understand and implement RMSNorm and how it differs from LayerNorm.

✅ Understand the concept of internal covariate shift.

✅ Understand the concept and implementation of a feed-forward network with ReLU activation.

✅ Understand the concept and implementation of a feed-forward network with SwiGLU activation.

✅ Understand the concept of absolute positional encoding.

✅ Understand the concept of relative positional encoding.

✅ Understand and implement the rotary positional embedding.

✅ Understand and implement the transformer architecture.

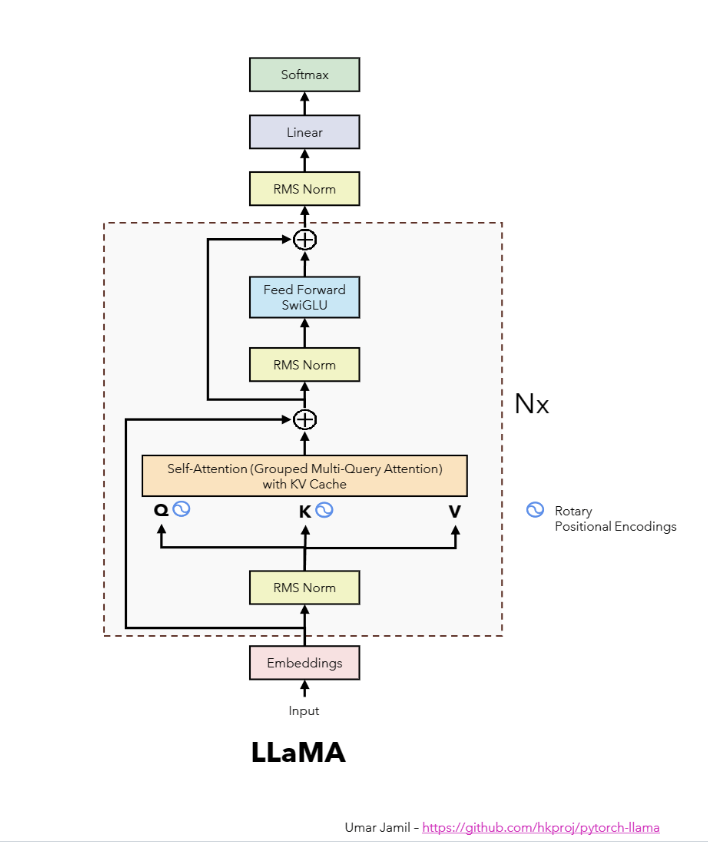

✅ Understand and implement the original Llama (1) architecture.

✅ Understand the concept of multi-query attention with a single KV projection.

✅ Understand and implement grouped query attention from scratch.

✅ Understand and implement the concept of KV cache.

✅ Understand and implement the Llama2 architecture.

✅ Test the Llama2 implementation using the checkpoints from Meta.

✅ Download the Llama2 checkpoints and inspect how the inference code works.

☑️ Document the Llama2 implementation and repo.

✅ Implement enabling and disabling of the KV cache.

✅ Add the attention mask when disabling the KV cache in Llama2.

✅ LLAMA: OPEN AND EFFICIENT LLM NOTES

✅ UNDERSTANDING KV CACHE

✅ GROUPED QUERY ATTENTION (GQA)

🌐 pytorch-llama - PyTorch implementation of LLaMA by Umar Jamil.

🌐 pytorch-transformer - PyTorch implementation of Transformer by Umar Jamil.

🌐 llama - Facebook's LLaMA implementation.

🌐 tensor2tensor - Google's transformer implementation.

🌐 rmsnorm - RMSNorm implementation.

🌐 roformer - Rotary Transformer implementation.

🌐 xformers - Facebook's library of Transformer building blocks.

✅ Understanding SentencePiece ([Under][Standing][_Sentence][Piece])

✅ SwiGLU: GLU Variants Improve Transformer (2020)