What Is AKG

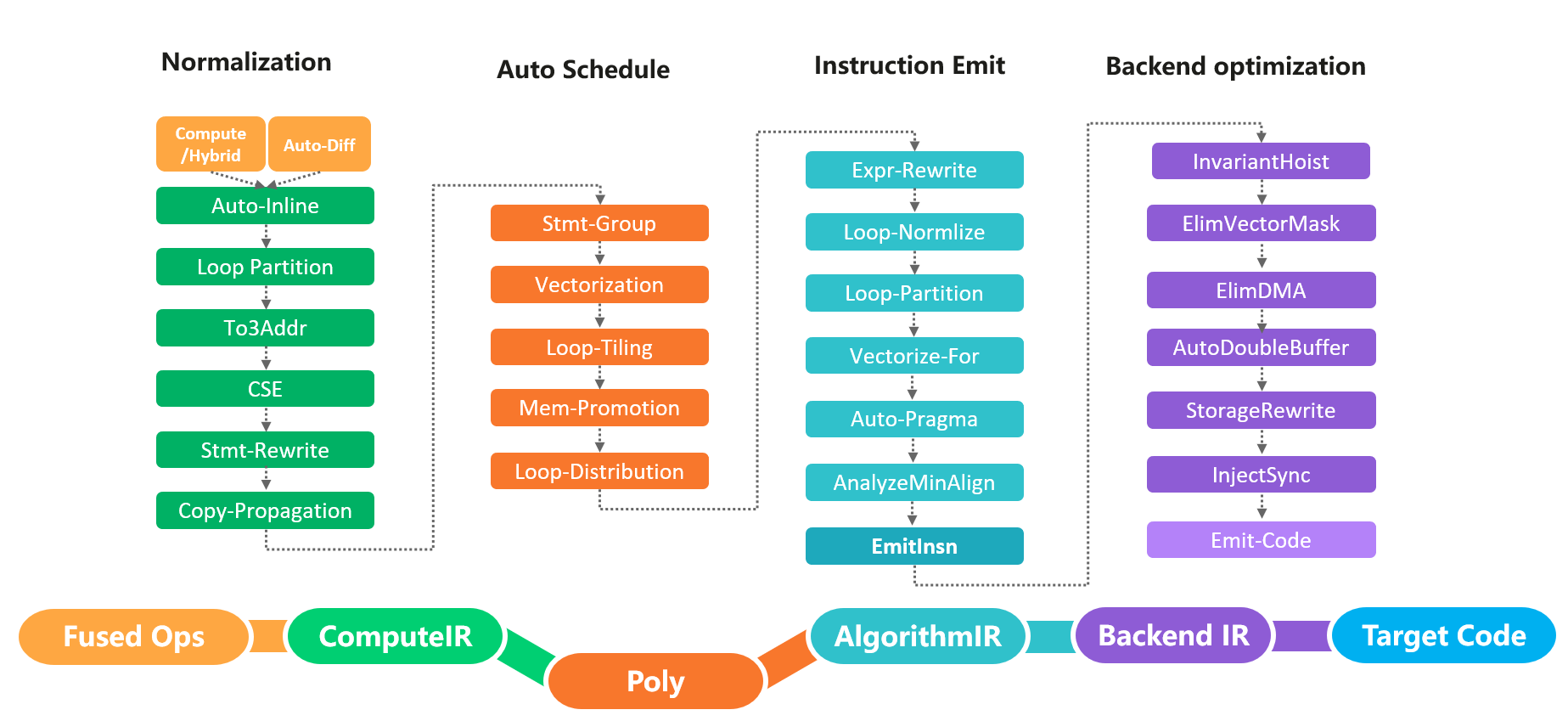

AKG (Auto Kernel Generator) is an optimizer for operators in deep learning networks. It can automatically fuse operators that match specific patterns. AKG works with MindSpore-GraphKernel to improve the performance of networks running on different hardware backends.

AKG is composed of four basic optimization modules: normalization, auto schedule, instruction emit, and backend optimization.

- Normalization. The main optimizations in normalization include three-address transform, common subexpression elimination, copy propagation, and so on.
- Auto schedule. The auto schedule module mainly performs vectorization, loop tiling, memory promotion, and loop distribution.
- Instruction emit. The instruction emit module performs loop normalization, auto pragma, and instruction emission.
- Backend optimization. The backend optimization module consists of double buffer optimization, storage rewrite optimization, and inject sync optimization.
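As a rough illustration of two of the normalization passes named above, the sketch below runs local common subexpression elimination and copy propagation over a toy three-address IR. The instruction format, the function name `cse_and_copy_prop`, and the single-assignment assumption are hypothetical choices for this example; this is not AKG's IR or its implementation.

```python
from typing import Dict, List, Optional, Tuple

# Toy three-address instruction: dst = op(a, b).
# op == "copy" means dst = a; unary ops leave b as None.
# (Hypothetical format for illustration, not AKG's IR.)
Instr = Tuple[str, str, str, Optional[str]]

COMMUTATIVE = {"add", "mul"}


def cse_and_copy_prop(code: List[Instr]) -> List[Instr]:
    """Local CSE and copy propagation over straight-line code.

    Assumes every name is assigned exactly once (SSA-like), so a name
    never changes value after its definition.
    """
    alias: Dict[str, str] = {}   # name -> original name it copies
    seen: Dict[tuple, str] = {}  # (op, operands) -> name holding that value
    out: List[Instr] = []
    for dst, op, a, b in code:
        # Copy propagation: read operands through any recorded copies.
        a = alias.get(a, a)
        b = alias.get(b, b) if b is not None else None
        if op == "copy":
            alias[dst] = a
            out.append((dst, "copy", a, None))
            continue
        # Canonical key; operand order is irrelevant for commutative ops.
        key = (op, *sorted((a, b))) if op in COMMUTATIVE else (op, a, b)
        if key in seen:
            # CSE: reuse the earlier result instead of recomputing it.
            alias[dst] = seen[key]
            out.append((dst, "copy", seen[key], None))
        else:
            seen[key] = dst
            out.append((dst, op, a, b))
    return out


code = [
    ("t1", "mul", "x", "y"),
    ("t2", "copy", "t1", None),
    ("t3", "mul", "y", "x"),   # same value as t1
    ("t4", "add", "t2", "t3"),
]
for instr in cse_and_copy_prop(code):
    print(instr)
# ('t1', 'mul', 'x', 'y')
# ('t2', 'copy', 't1', None)
# ('t3', 'copy', 't1', None)
# ('t4', 'add', 't1', 't1')
```

After the pass, `t4` reads `t1` directly and the second multiplication is gone. The copies to `t2` and `t3` are now unused, so a dead-code elimination pass (not shown) could remove them.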
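Loop tiling, one of the auto schedule transformations listed above, splits a loop nest into blocks so that each block touches only a small slice of the data; memory promotion typically then places such slices in faster local memory. The Python sketch below shows the tiling transformation itself on a plain matrix multiply. The function names and the fixed `tile` parameter are hypothetical; this does not reflect how AKG represents or chooses tiles.

```python
from typing import List

Matrix = List[List[float]]


def matmul_naive(A: Matrix, B: Matrix) -> Matrix:
    """Reference result: C[i][j] = sum over p of A[i][p] * B[p][j]."""
    n, k, m = len(A), len(B), len(B[0])
    return [[sum(A[i][p] * B[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def matmul_tiled(A: Matrix, B: Matrix, tile: int = 2) -> Matrix:
    """Same result as matmul_naive, but the i/j/p loops are split into
    tile-sized blocks (hypothetical fixed tile size)."""
    n, k, m = len(A), len(B), len(B[0])
    C = [[0.0] * m for _ in range(n)]
    # Outer loops step from one tile to the next.
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for p0 in range(0, k, tile):
                # Inner loops stay inside one tile; min() handles edge tiles
                # when a dimension is not a multiple of the tile size.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = C[i][j]
                        for p in range(p0, min(p0 + tile, k)):
                            acc += A[i][p] * B[p][j]
                        C[i][j] = acc
    return C


A = [[float(i + j) for j in range(5)] for i in range(3)]   # 3 x 5
B = [[float(i * j) for j in range(4)] for i in range(5)]   # 5 x 4
assert matmul_tiled(A, B, tile=2) == matmul_naive(A, B)
```

Tiling changes only the iteration order, not the result: for each `C[i][j]` the products are still accumulated in ascending `p`, which is why the tiled and naive versions agree exactly here.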

Hardware Backends Support

At present, only Ascend910 is supported. More backends are planned.

Build

Build With MindSpore

See the MindSpore README.md for details.

Build Standalone

We suggest building and running AKG together with MindSpore. For convenience, we also provide a way to run test cases in standalone mode. Building in this mode requires the Ascend platform. Refer to MindSpore Installation for more information about compilation dependencies.

git submodule update --init

bash build.sh

Run Standalone

- Set Environment

cd tests

source ./test_env.sh amd64

export RUNTIME_MODE='air_cloud'

export PATH=${PATH}:${YOUR_CCEC_COMPILER_PATH}

- Run test

cd operators/vector # i.e. tests/operators/vector, since the previous step left you in tests

pytest -s test_abs_001.py -m "level0" # run level0 test cases
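If the tests fail early, a quick check of the environment from the Set Environment step can help. The script below is a hypothetical helper, not part of AKG: it verifies that `RUNTIME_MODE` is `'air_cloud'` and that a compiler binary is reachable on `PATH`. The binary name `ccec` is an assumption inferred from the `YOUR_CCEC_COMPILER_PATH` placeholder; adjust it to your toolchain. Because `test_env.sh` is sourced, run the check from the same shell session.

```python
import os
import shutil
from typing import List, Mapping, Optional


def check_standalone_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems with the standalone test environment.

    Hypothetical helper: assumes the compiler added to PATH is named "ccec".
    """
    env = os.environ if env is None else env
    problems = []
    if env.get("RUNTIME_MODE") != "air_cloud":
        problems.append("RUNTIME_MODE is not set to 'air_cloud'")
    # shutil.which() returns None when the program is not found on the given PATH.
    if shutil.which("ccec", path=env.get("PATH", "")) is None:
        problems.append("ccec compiler not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_standalone_env():
        print("warning:", problem)
```

The helper takes an optional mapping instead of always reading `os.environ`, so it can also be exercised with a hand-built environment.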

Contributing

Contributions are welcome. See the MindSpore Contributor Wiki for more details.

Release Notes

For release notes, see our RELEASE.