2 Department of Computer Science, City University of Hong Kong

3 OPPO Research Institute

4 Department of Computing, The Hong Kong Polytechnic University

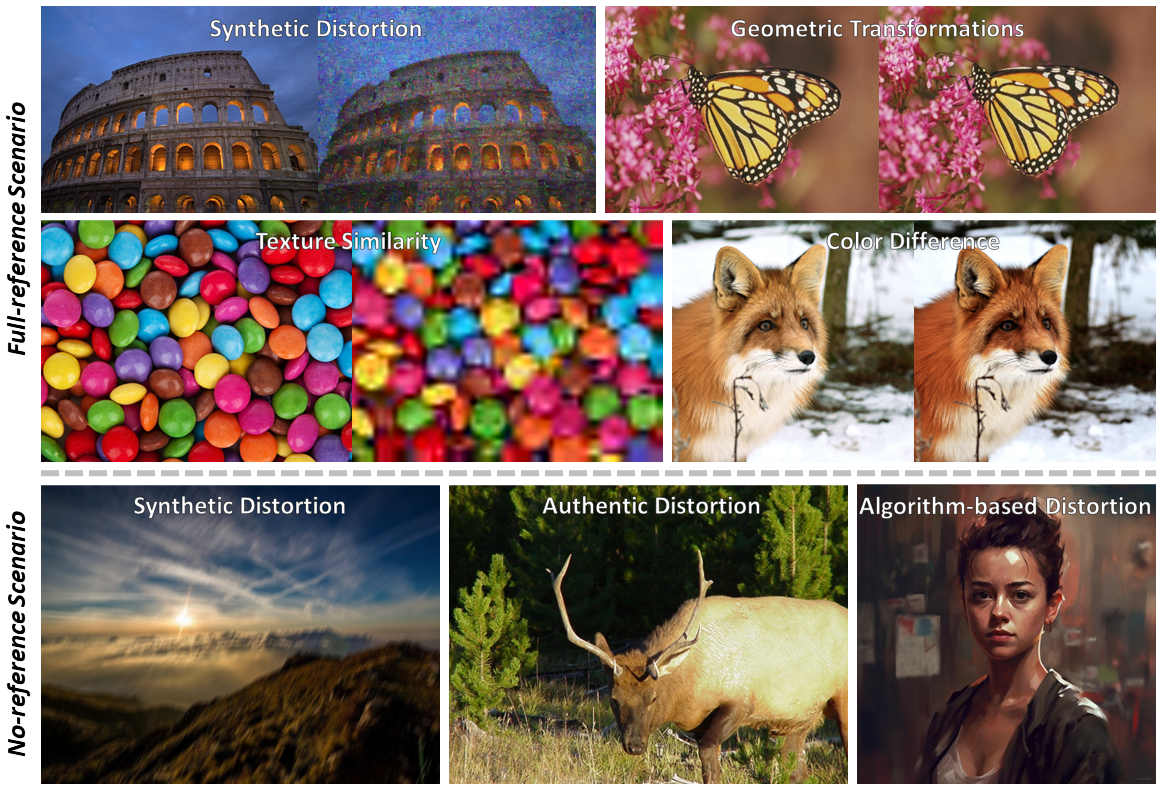

While Multimodal Large Language Models (MLLMs) have experienced significant advancement in visual understanding and reasoning, their potential to serve as powerful, flexible, interpretable, and text-driven models for Image Quality Assessment (IQA) remains largely unexplored. In this paper, we conduct a comprehensive and systematic study of prompting MLLMs for IQA. We first investigate nine prompting systems for MLLMs as the combinations of three standardized testing procedures in psychophysics (i.e., the single-stimulus, double-stimulus, and multiple-stimulus methods) and three popular prompting strategies in natural language processing (i.e., the standard, in-context, and chain-of-thought prompting). We then present a difficult sample selection procedure, taking into account sample diversity and uncertainty, to further challenge MLLMs equipped with the respective optimal prompting systems. We assess three open-source and one closed-source MLLMs on several visual attributes of image quality (e.g., structural and textural distortions, geometric transformations, and color differences) in both full-reference and no-reference scenarios. Experimental results show that only the closed-source GPT-4V provides a reasonable account for human perception of image quality, but is weak at discriminating fine-grained quality variations (e.g., color differences) and at comparing visual quality of multiple images, tasks humans can perform effortlessly.

We assess three open-source and one closed-source MLLMs on several visual attributes of image quality (e.g., structural and textural distortions, color differences, and geometric transformations) in both full-reference and no-reference scenarios, using the datasets listed below.

Full-reference scenario:

- Structural and textural distortions (synthetic distortion): FR-KADID

- Geometric transformations: Aug-KADID

- Texture similarity: TQD

- Color difference: SPCD

No-reference scenario:

- Structural and textural distortions (synthetic distortion): NR-KADID

- Structural and textural distortions (authentic distortion): SPAQ

- Structural and textural distortions (algorithm-based distortion): AGIQA-3K
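The grouping above can be written down as a small lookup table, which is handy for checking whether a dataset needs reference images. This is only an illustrative sketch: the dictionary layout and the helper name `scenario_of` are assumptions and are not part of the repository.

```python
# Dataset names exactly as listed above, grouped by scenario.
# DATASETS and scenario_of are hypothetical names used for illustration only.
DATASETS = {
    "full-reference": {
        "FR-KADID": "structural and textural distortions (synthetic)",
        "Aug-KADID": "geometric transformations",
        "TQD": "texture similarity",
        "SPCD": "color difference",
    },
    "no-reference": {
        "NR-KADID": "structural and textural distortions (synthetic)",
        "SPAQ": "structural and textural distortions (authentic)",
        "AGIQA-3K": "structural and textural distortions (algorithm-based)",
    },
}


def scenario_of(dataset: str) -> str:
    """Return the scenario ("full-reference" or "no-reference") of a dataset."""
    for scenario, entries in DATASETS.items():
        if dataset in entries:
            return scenario
    raise KeyError(f"unknown dataset: {dataset}")
```

For example, `scenario_of("TQD")` identifies a full-reference dataset, so a reference image path would be needed for it.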

To run the computational sample selection procedure that picks difficult samples, use the following command.

    python sample_selection.py

Before running inference with the MLLMs, please modify settings.yaml. Here is an example.

    # FR_KADID, AUG_KADID, TQD, NR_KADID, SPAQ, AGIQA3K
    DATASET_NAME: FR_KADID
    # GPT-4V api-key
    KEY: You need to input your GPT-4V API key
    # single, double, multiple
    PSYCHOPHYSICAL_PATTERN: single
    # standard, cot (chain-of-thought), ic (in-context)
    NLP_PATTERN: standard
    # distorted image dataset path
    DIS_DATA_PATH: C:/wutianhe/sigs/research/IQA_dataset/kadid10k/images
    # reference image dataset path
    REF_DATA_PATH: C:/wutianhe/sigs/research/IQA_dataset/kadid10k/images
    # IC path (image paths)
    IC_PATH: []
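The `PSYCHOPHYSICAL_PATTERN` and `NLP_PATTERN` fields together select one of the nine prompting systems described in the abstract (three psychophysical methods times three prompting strategies). A minimal sketch of that combination, using only the option names from the comments in the example settings, might look like the following. The function names are hypothetical, and the settings are passed in as a plain dictionary rather than read from settings.yaml.

```python
from itertools import product

# Option names taken from the comments in the example settings.
PSYCHOPHYSICAL_PATTERNS = ("single", "double", "multiple")
NLP_PATTERNS = ("standard", "cot", "ic")


def prompting_systems():
    """Enumerate every (psychophysical, NLP) pair: 3 x 3 = 9 systems."""
    return list(product(PSYCHOPHYSICAL_PATTERNS, NLP_PATTERNS))


def check_patterns(settings: dict) -> tuple:
    """Validate the two pattern fields of an already-loaded settings dict.

    Hypothetical helper for illustration; the repository may validate
    its settings differently or not at all.
    """
    pair = (settings["PSYCHOPHYSICAL_PATTERN"], settings["NLP_PATTERN"])
    if pair not in prompting_systems():
        raise ValueError(f"unsupported prompting system: {pair}")
    return pair
```

With the example settings above, `check_patterns` would return the pair `("single", "standard")`, i.e., single-stimulus testing with standard prompting.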

Run inference with the following command.

    python test_gpt4v.py

    @article{wu2024comprehensive,
      title={A Comprehensive Study of Multimodal Large Language Models for Image Quality Assessment},
      author={Wu, Tianhe and Ma, Kede and Liang, Jie and Yang, Yujiu and Zhang, Lei},
      journal={arXiv preprint arXiv:2403.10854v3},
      year={2024}
    }

I would like to thank my two friends, Xinzhe Ni and Yifan Wang, from our Tsinghua 1919 group for sharing valuable NLP and MLLM knowledge during a personally difficult period.

If you have any questions, please email wth22@mails.tsinghua.edu.cn.