This work was published in the 2022 IEEE 32nd International Workshop on Machine Learning for Signal Processing (MLSP).

- Release 1.0 (22.06.2022)

- Git tag: MLSP-v1.0

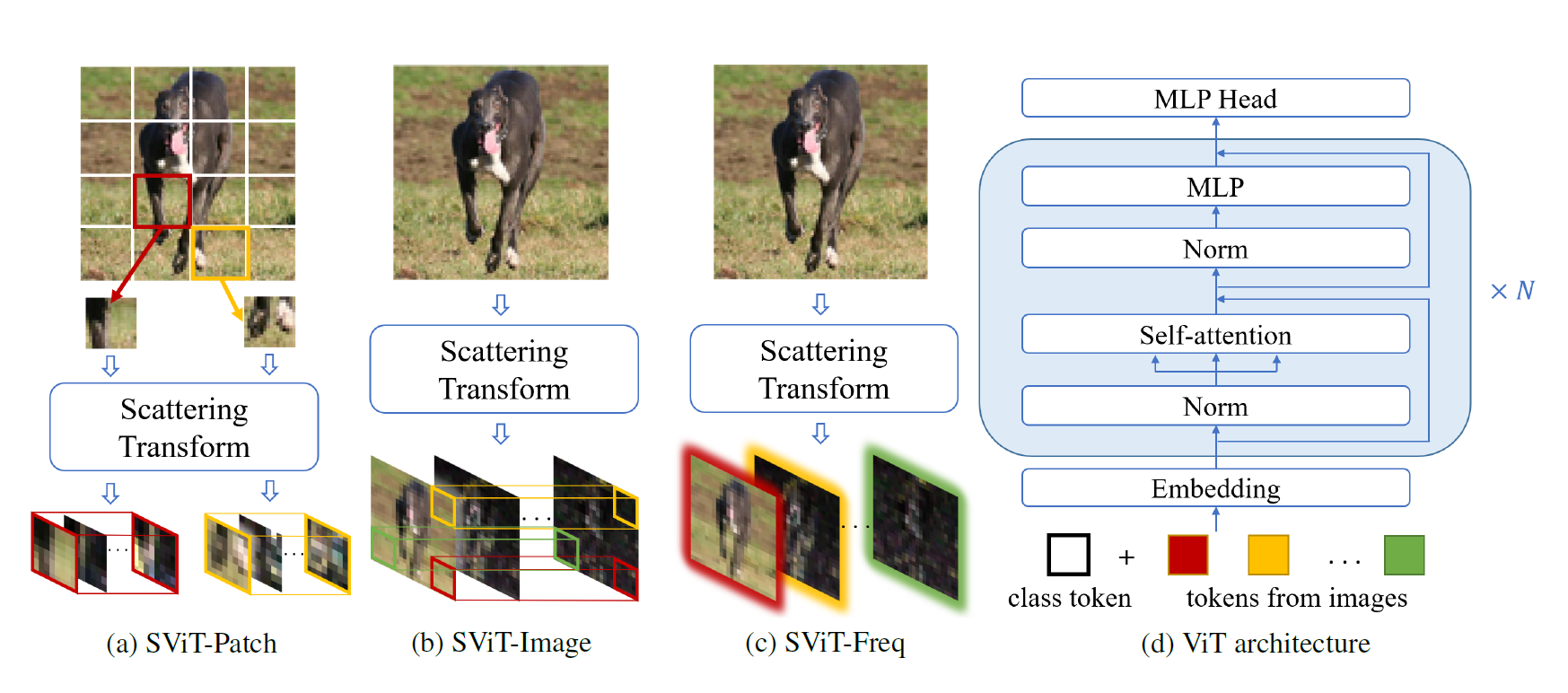

Overview of the model: we propose Scattering Vision Transformer (SViT), a family of hybrid ViT models built on the scattering transform. Specifically, we investigate three scattering-based tokenizations for ViT: patch-wise scattering tokens (SViT-Patch), scattering image feature tokens (SViT-Image), and scattering frequency sub-band response tokens (SViT-Freq).
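To make the three tokenizations concrete, here is a minimal NumPy sketch of how scattering coefficients could be rearranged into token sequences. It reflects one possible reading of the descriptions above, not the repository's code: the function names are hypothetical, and it assumes second-order 2D scattering coefficients laid out as (B, C, K, H', W') with K = 1 + LJ + L²J(J−1)/2 and H' = H/2^J, as produced by, e.g., kymatio's `Scattering2D`. `placeholder_scatter` is **not** a scattering transform. It is a shape-only stand-in so the sketch runs without extra dependencies. In practice you would swap in a real scattering implementation.

```python
import numpy as np


def num_scattering_channels(J: int, L: int) -> int:
    """Order-0 + order-1 + order-2 coefficients per input channel."""
    return 1 + L * J + L * L * J * (J - 1) // 2


def placeholder_scatter(x: np.ndarray, J: int = 2, L: int = 8) -> np.ndarray:
    """Shape-only stand-in (NOT a scattering transform, hypothetical helper):
    average-pools by 2**J and repeats the result K times -> (N, C, K, H', W')."""
    N, C, H, W = x.shape
    f = 2 ** J
    pooled = x.reshape(N, C, H // f, f, W // f, f).mean(axis=(3, 5))
    K = num_scattering_channels(J, L)
    return np.repeat(pooled[:, :, None], K, axis=2)


def image_tokens(S: np.ndarray) -> np.ndarray:
    """SViT-Image (assumed reading): one token per spatial position of the
    scattering map, holding all C*K coefficients at that position."""
    B, C, K, Hs, Ws = S.shape
    # (B, C, K, H', W') -> (B, H', W', C, K) -> (B, H'*W', C*K)
    return S.transpose(0, 3, 4, 1, 2).reshape(B, Hs * Ws, C * K)


def freq_tokens(S: np.ndarray) -> np.ndarray:
    """SViT-Freq (assumed reading): one token per (channel, sub-band)
    response map, holding its flattened H'*W' values."""
    B, C, K, Hs, Ws = S.shape
    return S.reshape(B, C * K, Hs * Ws)


def patch_tokens(x: np.ndarray, patch: int, scatter) -> np.ndarray:
    """SViT-Patch (assumed reading): split the image into non-overlapping
    patches, scatter each patch independently, flatten each into a token."""
    B, C, H, W = x.shape
    nh, nw = H // patch, W // patch
    # (B, C, nh, p, nw, p) -> (B, nh, nw, C, p, p) -> (B*nh*nw, C, p, p)
    patches = (x[:, :, :nh * patch, :nw * patch]
               .reshape(B, C, nh, patch, nw, patch)
               .transpose(0, 2, 4, 1, 3, 5)
               .reshape(B * nh * nw, C, patch, patch))
    coeffs = scatter(patches)  # (B*nh*nw, C, K, p', p')
    return coeffs.reshape(B, nh * nw, -1)


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 3, 32, 32))  # batch of two RGB 32x32 images
S = placeholder_scatter(x, J=2, L=8)      # (2, 3, 81, 8, 8)
print(image_tokens(S).shape)              # (2, 64, 243)
print(freq_tokens(S).shape)               # (2, 243, 64)
print(patch_tokens(x, 16, placeholder_scatter).shape)  # (2, 4, 3888)
```

The variants differ only in which axes form the sequence and which form the feature vector: spatial positions (SViT-Image), sub-bands (SViT-Freq), or image patches (SViT-Patch). In a ViT, each token would then typically be linearly projected to the embedding dimension, which is not shown here.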


- Clone the repository and install the Python dependencies

$ git clone https://github.com/TianmingQiu/scattering_transformer

$ cd scattering_transformer

$ pip install -r requirements.txt

- Create local folders for saving checkpoints and logs


$ mkdir checkpoint

$ mkdir log

- Download the dataset

$ cd input/dataset

- Configure the model parameters in "custom_dataset.py" and "transforms.py" (if needed)

- In the main function, set the variable "DATA_TYPE" to the dataset you want to test on