A simple benchmark for Python-based data processing libraries.

Guiding Principles:

- Python Only: I have no intention of using another language to process data. That said, non-Python implementations with Python bindings are perfectly fine (e.g. Polars).

- Single Node: I'm not interested in benchmarking performance on clusters of multiple machines.

- Simple Implementation: I'm not interested in heavily optimizing the benchmarking implementations. My target user is myself: somebody who largely uses pandas but would like to either speed up their computations or work with larger datasets than fit in memory on their machine.

Libraries:

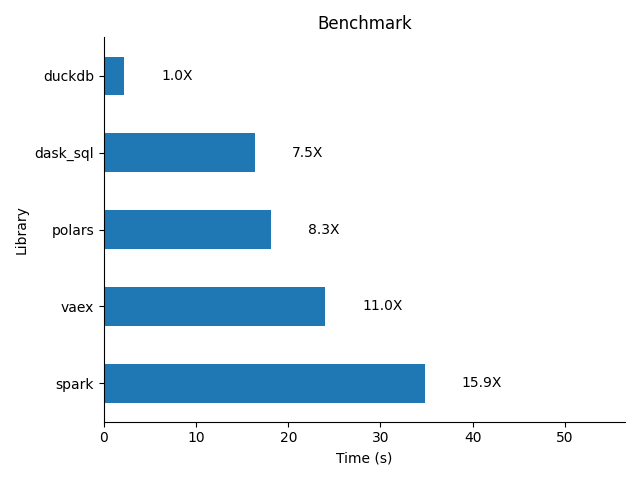

Results:

The following results are from running the benchmark locally on my desktop that has:

- Intel Core i7-7820X 3.6 GHz 8-core Processor (16 virtual cores, I think)

- 32 GB DDR4-3200 RAM.

- Ubuntu 16.04 😱

The New York City bikeshare, Citi Bike, has a real-time, public API. This API conforms to the General Bikeshare Feed Specification. As such, it reports the number of bikes and docks available at every station in NYC.

Since 2016, I have been pinging the public API every 2 minutes and storing the results. The benchmark dataset contains all of these results, from 8/15/2016 to 12/8/2021. The dataset consists of a collection of 50 CSVs stored here on Kaggle. The CSVs total almost 27 GB in size. The files are partitioned by station.

For the benchmark, I first convert the CSVs to snappy-compressed parquet files. This reduces the dataset to ~4 GB on disk.

To start, the bakeoff computation is extremely simple. It's almost "word count". I calculate the average number of bikes available at each station across the full time period of the dataset. In SQL, this is basically:

    SELECT
      station_id
      , AVG(num_bikes_available)
    FROM citi_bike_dataset
    GROUP BY 1

Since the files are partitioned by station, predicate pushdown likely provides a large benefit for this computation.
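To make the query concrete, here is a small sketch that runs it against an in-memory SQLite table. SQLite is used only because it ships with Python; the toy rows are made up for illustration, and the function name `average_bikes_per_station` is hypothetical.

```python
import sqlite3
from typing import Iterable, List, Tuple


def average_bikes_per_station(
    rows: Iterable[Tuple[int, int]],
) -> List[Tuple[int, float]]:
    """Run the bakeoff query on (station_id, num_bikes_available) rows."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE citi_bike_dataset "
            "(station_id INTEGER, num_bikes_available INTEGER)"
        )
        conn.executemany("INSERT INTO citi_bike_dataset VALUES (?, ?)", rows)
        # Same query as above; ORDER BY is added only to make output stable.
        query = """
            SELECT
              station_id
              , AVG(num_bikes_available)
            FROM citi_bike_dataset
            GROUP BY 1
            ORDER BY 1
        """
        return conn.execute(query).fetchall()
    finally:
        conn.close()


# Made-up sample data: two stations with a handful of readings each.
toy_rows = [(72, 4), (72, 8), (79, 10), (79, 20), (79, 30)]
print(average_bikes_per_station(toy_rows))  # [(72, 6.0), (79, 20.0)]
```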

The benchmark data is stored here on Kaggle. To access it, you must create a Kaggle account and obtain an API key. You can obtain a key by clicking on your icon on the upper right of the homepage, clicking Account, and then clicking to create a new API token. This downloads a kaggle.json credentials file which contains the API key.

Move the kaggle.json file that you downloaded during the Kaggle setup to ~/.kaggle/kaggle.json.
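If you would rather script this step, something like the following should work. It is a sketch under a few assumptions: the function name `install_kaggle_credentials` is hypothetical, it copies the file rather than moving it, and it restricts the file permissions to the owner because the Kaggle client is known to warn when the key is readable by other users.

```python
import shutil
from pathlib import Path
from typing import Optional


def install_kaggle_credentials(source: Path, home: Optional[Path] = None) -> Path:
    """Copy a downloaded kaggle.json to <home>/.kaggle/kaggle.json.

    Hypothetical helper for illustration. `home` defaults to the current
    user's home directory and is a parameter mainly to ease testing.
    """
    home = home or Path.home()
    target_dir = home / ".kaggle"
    target_dir.mkdir(parents=True, exist_ok=True)

    target = target_dir / "kaggle.json"
    shutil.copyfile(source, target)

    # Owner read/write only, so other users cannot read the API key.
    target.chmod(0o600)
    return target
```

For example, `install_kaggle_credentials(Path.home() / "Downloads" / "kaggle.json")` would cover the common case where the browser saved the file to a Downloads folder; that location is an assumption.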

Install poetry, and then use poetry to install the medium-data-bakeoff library:

    poetry install

medium-data-bakeoff comes with a CLI that you can explore. Run the following for more info about the CLI.

    python -m medium_data_bakeoff --help

Run the following to run the full benchmark:

    python -m medium_data_bakeoff make-dataset bakeoff

Alternatively, you can run the benchmark in Docker. Note: I haven't fully tested this!

Copy your kaggle.json file that you created during the Kaggle setup to the root directory of this repo.

Build the docker container and then run the benchmark:

    docker build -t medium-data-bakeoff .

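    docker run --name medium-data-bakeoff -t medium-data-bakeoff python -m medium_data_bakeoff bakeoff

Copy the results out of the container. `docker cp` expects a container name rather than an image name, which is why the run command names the container, and it does not expand wildcards, so copy the whole data directory:

    docker cp medium-data-bakeoff:/app/data ./data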

TODO:

- Run benchmarks on AWS for reproducibility.

- Run benchmarks as a function of number of CPUs.

- Run benchmarks as a function of number of parquet files.

- Add some harder benchmark computations.

- Add more libraries (e.g. cudf).

- Benchmark memory usage.