This is the official code of "Self-supervised Character-to-Character Distillation for Text Recognition". For more details, please refer to our paper, the Chinese-language write-up, or the poster. If you have any questions, please contact me by email (gtk0615@sjtu.edu.cn).

We have also released our CVPR 2023 work on scene text recognition:

# Environment: RTX 3090, Ubuntu 16.04, CUDA 11

conda create -n CCD python==3.7

source activate CCD

conda install pytorch==1.10.0 torchvision==0.11.0 torchaudio==0.10.0 cudatoolkit=11.3 -c pytorch -c conda-forge
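After installing PyTorch, a quick sanity check can confirm that the pinned versions were picked up and that the GPUs are visible. The snippet below is a minimal sketch: `environment_report` is a hypothetical helper, not part of this repository, and it only relies on the packages' `__version__` attributes plus `torch.cuda` and `torch.version.cuda`.

```python
import importlib

# Versions pinned by the conda command above.
PINNED = {"torch": "1.10.0", "torchvision": "0.11.0", "torchaudio": "0.10.0"}


def environment_report():
    """Compare installed package versions with the pins and report GPU visibility."""
    report = {}
    for name, expected in PINNED.items():
        try:
            installed = str(importlib.import_module(name).__version__)
        except Exception:  # not installed, or a binary build that fails to load
            installed = None
        # Builds may carry a local tag such as "1.10.0+cu113"; compare the base version.
        base = installed.split("+")[0] if installed else None
        report[name] = {"expected": expected, "installed": installed, "ok": base == expected}
    try:
        import torch

        report["cuda_available"] = bool(torch.cuda.is_available())
        report["gpu_count"] = torch.cuda.device_count()
        report["cuda_build"] = torch.version.cuda  # None for CPU-only builds
    except Exception:
        report["cuda_available"] = False
        report["gpu_count"] = 0
        report["cuda_build"] = None
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```

On a correctly configured machine every `ok` entry should be `True` and `gpu_count` should match the number of GPUs you plan to pass in `CUDA_VISIBLE_DEVICES`.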

# The following additional dependencies are required (please refer to requirement.txt)

pip install tensorboard==1.15.0

pip install tensorboardX==2.2

# We recommend using multiple RTX 3090 GPUs for training.
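With `--nproc_per_node=4`, `torch.distributed.launch` starts one worker process per GPU, so that value should match the number of devices listed in `CUDA_VISIBLE_DEVICES`. The sketch below shows one way a training script can pick up its local rank under the pinned PyTorch 1.10. `parse_launch_args` and `world_size` are hypothetical helpers, and the argument handling in this repository's `train.py` may differ.

```python
import argparse
import os


def parse_launch_args(argv=None):
    """Parse the arguments a worker receives from torch.distributed.launch."""
    parser = argparse.ArgumentParser(description="distributed training entry point (sketch)")
    parser.add_argument("--config", required=True, help="path to a YAML config file")
    # Without --use_env, the launcher appends --local_rank=<i> to every worker's argv
    # (recent PyTorch releases spell it --local-rank); with --use_env it exports
    # LOCAL_RANK instead, which serves as the fallback default here.
    parser.add_argument(
        "--local_rank",
        "--local-rank",
        type=int,
        default=int(os.environ.get("LOCAL_RANK", 0)),
    )
    return parser.parse_args(argv)


def world_size():
    """Total number of workers; the launcher exports WORLD_SIZE (nnodes * nproc_per_node)."""
    return int(os.environ.get("WORLD_SIZE", 1))
```

Each worker would then typically call `torch.cuda.set_device(args.local_rank)` before building its model, so that the four processes land on the four visible GPUs.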

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node=4 train.py --config ./Dino/configs/CCD_pretrain_ViT_xxx.yaml

# Update model.pretrain_checkpoint in CCD_vision_model_xxx.yaml
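`model.pretrain_checkpoint` is a dotted path into the YAML file, i.e. the `pretrain_checkpoint` key nested under `model`. Editing the file by hand is enough; the sketch below does the same programmatically. It assumes PyYAML is installed, and `set_dotted` / `update_yaml` are hypothetical helpers. Note that rewriting the file with `yaml.safe_dump` drops any comments it contained.

```python
def set_dotted(config, dotted_key, value):
    """Set a nested key, e.g. "model.pretrain_checkpoint" -> config["model"]["pretrain_checkpoint"]."""
    *parents, leaf = dotted_key.split(".")
    node = config
    for key in parents:
        node = node.setdefault(key, {})  # create missing intermediate sections
    node[leaf] = value
    return config


def update_yaml(path, dotted_key, value):
    """Rewrite a YAML config with one key changed (comments in the file are not preserved)."""
    import yaml  # PyYAML, assumed to be installed

    with open(path) as f:
        config = yaml.safe_load(f) or {}
    set_dotted(config, dotted_key, value)
    with open(path, "w") as f:
        yaml.safe_dump(config, f, sort_keys=False)
```

For example, `update_yaml("./Dino/configs/CCD_vision_model_xxx.yaml", "model.pretrain_checkpoint", "path/to/pretrain_checkpoint.pth")`, where the checkpoint path is a placeholder for wherever pretraining saved its weights.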

CUDA_VISIBLE_DEVICES=0,1 python train_finetune.py --config ./Dino/configs/CCD_vision_model_xxx.yaml

# Update model.checkpoint in CCD_vision_model_xxx.yaml
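Before running `test.py`, it can save time to confirm that `model.checkpoint` points at an existing file. A minimal sketch, operating on the config after it has been loaded into a dict (for example with `yaml.safe_load`); `get_dotted` and `check_checkpoint` are hypothetical helpers, not part of the repository.

```python
import os


def get_dotted(config, dotted_key):
    """Read a nested key such as "model.checkpoint" from a loaded config dict."""
    node = config
    for key in dotted_key.split("."):
        if not isinstance(node, dict) or key not in node:
            raise KeyError(f"{dotted_key!r} is not set in the config")
        node = node[key]
    return node


def check_checkpoint(config, dotted_key="model.checkpoint"):
    """Fail early if the configured checkpoint file does not exist."""
    path = get_dotted(config, dotted_key)
    if not os.path.isfile(path):
        raise FileNotFoundError(f"{dotted_key} points to a missing file: {path}")
    return path
```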

CUDA_VISIBLE_DEVICES=0 python test.py --config ./Dino/configs/CCD_vision_model_xxx.yaml

- Pretrain: CCD-ViT-Small (3090); Finetune: ARD (3090) and STD (3090)

- Pretrain: CCD-ViT-Base (3090); Finetune: ARD (V100) and STD (3090)

Data (please refer to DiG)

OCR-CC link (Baidu Netdisk): https://pan.baidu.com/s/1PW7ef17AkwG27H_RaP0pDQ

Extraction code: C2CD

data_lmdb
├── charset_36.txt
├── Mask
├── TextSeg
├── Super_Resolution
├── training
│   ├── label
│   │   └── synth
│   │       ├── MJ
│   │       │   ├── MJ_train
│   │       │   ├── MJ_valid
│   │       │   └── MJ_test
│   │       └── ST
│   ├── URD
│   │   └── OCR-CC
│   └── ARD
│       ├── Openimages
│       │   ├── train_1
│       │   ├── train_2
│       │   ├── train_5
│       │   ├── train_f
│       │   └── validation
│       └── TextOCR
├── validation
│   ├── 1.SVT
│   ├── 2.IIIT
│   ├── 3.IC13
│   ├── 4.IC15
│   ├── 5.COCO
│   ├── 6.RCTW17
│   ├── 7.Uber
│   ├── 8.ArT
│   ├── 9.LSVT
│   ├── 10.MLT19
│   └── 11.ReCTS
└── evaluation
    └── benchmark
        ├── SVT
        ├── IIIT5k_3000
        ├── IC13_1015
        ├── IC15_2077
        ├── SVTP
        ├── CUTE80
        ├── COCOText
        ├── CTW
        ├── TotalText
        ├── HOST
        ├── WOST
        ├── MPSC
        └── WordArt
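To catch path mistakes before a long run, the layout above can be checked with a short script. This is a sketch: `EXPECTED_DIRS` lists only a subset of the tree, transcribed from the layout above, and `missing_entries` is a hypothetical helper rather than part of the repository. Each LMDB dataset is a directory (holding `data.mdb` and `lock.mdb`), so directories are checked with `is_dir`.

```python
from pathlib import Path

# A subset of the tree above, relative to data_lmdb; extend it to match what you downloaded.
EXPECTED_DIRS = [
    "training/label/synth/MJ/MJ_train",
    "training/label/synth/ST",
    "training/URD/OCR-CC",
    "training/ARD/Openimages",
    "training/ARD/TextOCR",
    "validation",
    "evaluation/benchmark/SVT",
    "evaluation/benchmark/IIIT5k_3000",
    "evaluation/benchmark/IC13_1015",
    "evaluation/benchmark/IC15_2077",
    "evaluation/benchmark/SVTP",
    "evaluation/benchmark/CUTE80",
]
EXPECTED_FILES = ["charset_36.txt"]


def missing_entries(root):
    """Return expected paths absent under root; an empty list means the layout looks complete."""
    root = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing
```

Calling `missing_entries("data_lmdb")` from the directory that contains `data_lmdb` lists whatever still needs to be downloaded or moved.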

- Optional: k-means results for Synth and URD

- If you don't want to generate the masks offline, you can generate the mask results online instead; in that case, please rewrite code1 and code2.
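The mask bullets above mention k-means results. As an illustration only, and assuming the masks are foreground/background splits obtained by clustering pixel intensities into two groups (k = 2), the idea can be sketched in pure Python on a grayscale image given as nested lists. Treating the smaller cluster as text is a heuristic assumed here; the actual code in `mask_create` may cluster differently (for example on RGB values) and may choose the foreground another way. `kmeans2_mask` is a hypothetical name.

```python
def kmeans2_mask(gray, iters=10):
    """Binary mask from 2-means clustering of grayscale values.

    gray: list of rows of ints in 0..255. Returns rows of 0/1, with 1 marking
    the cluster assumed to be text (the smaller one, a heuristic).
    """
    pixels = [p for row in gray for p in row]
    c0, c1 = float(min(pixels)), float(max(pixels))  # initialise at the extremes

    def nearest(p):
        return 0 if abs(p - c0) <= abs(p - c1) else 1

    for _ in range(iters):
        groups = ([], [])
        for p in pixels:
            groups[nearest(p)].append(p)
        if not groups[0] or not groups[1]:
            break  # image is uniform; nothing to separate
        new0 = sum(groups[0]) / len(groups[0])
        new1 = sum(groups[1]) / len(groups[1])
        if (new0, new1) == (c0, c1):
            break  # assignments no longer change the centres
        c0, c1 = new0, new1

    labels = [[nearest(p) for p in row] for row in gray]
    ones = sum(map(sum, labels))
    text = 1 if ones <= len(pixels) - ones else 0
    return [[1 if label == text else 0 for label in row] for row in labels]
```

Because the foreground is chosen by cluster size rather than brightness, the same function handles light-on-dark and dark-on-light text.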

cd ./mask_create

run generate_mask.py  # process masks in parallel and write them to LMDB files
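`generate_mask.py` splits the work across processes and writes several LMDB files, which `merge.py` then combines into one. The pattern is sketched below with plain dicts standing in for LMDB databases; the 1-based indices, the `mask-%09d` key format, and the helper names are assumptions for illustration, not the repository's actual format. Keeping each sample's original index in its key keeps every mask aligned with its image, so the merge step only has to check that shards do not overlap.

```python
def split_range(num_samples, num_workers):
    """Split sample indices 1..num_samples into contiguous, near-equal chunks."""
    base, extra = divmod(num_samples, num_workers)
    chunks, start = [], 1
    for worker in range(num_workers):
        size = base + (1 if worker < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


def process_shard(indices, make_mask):
    """Stand-in for one worker: compute masks and key them by their global index."""
    return {"mask-%09d" % i: make_mask(i) for i in indices}


def merge_shards(shards):
    """Union the shards; global indices mean no renumbering, only an overlap check."""
    merged = {}
    for shard in shards:
        overlap = merged.keys() & shard.keys()
        if overlap:
            raise ValueError(f"duplicate keys across shards: {sorted(overlap)[:3]}")
        merged.update(shard)
    return merged
```

In a real run each `process_shard` call would execute in its own process (for example via `multiprocessing.Pool`) and write its own LMDB file instead of returning a dict.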

run merge.py  # merge the multiple LMDB files into a single file

If you find our method useful for your research, please cite:

@InProceedings{Guan_2023_ICCV,
  author = {Guan, Tongkun and Shen, Wei and Yang, Xue and Feng, Qi and Jiang, Zekun and Yang, Xiaokang},
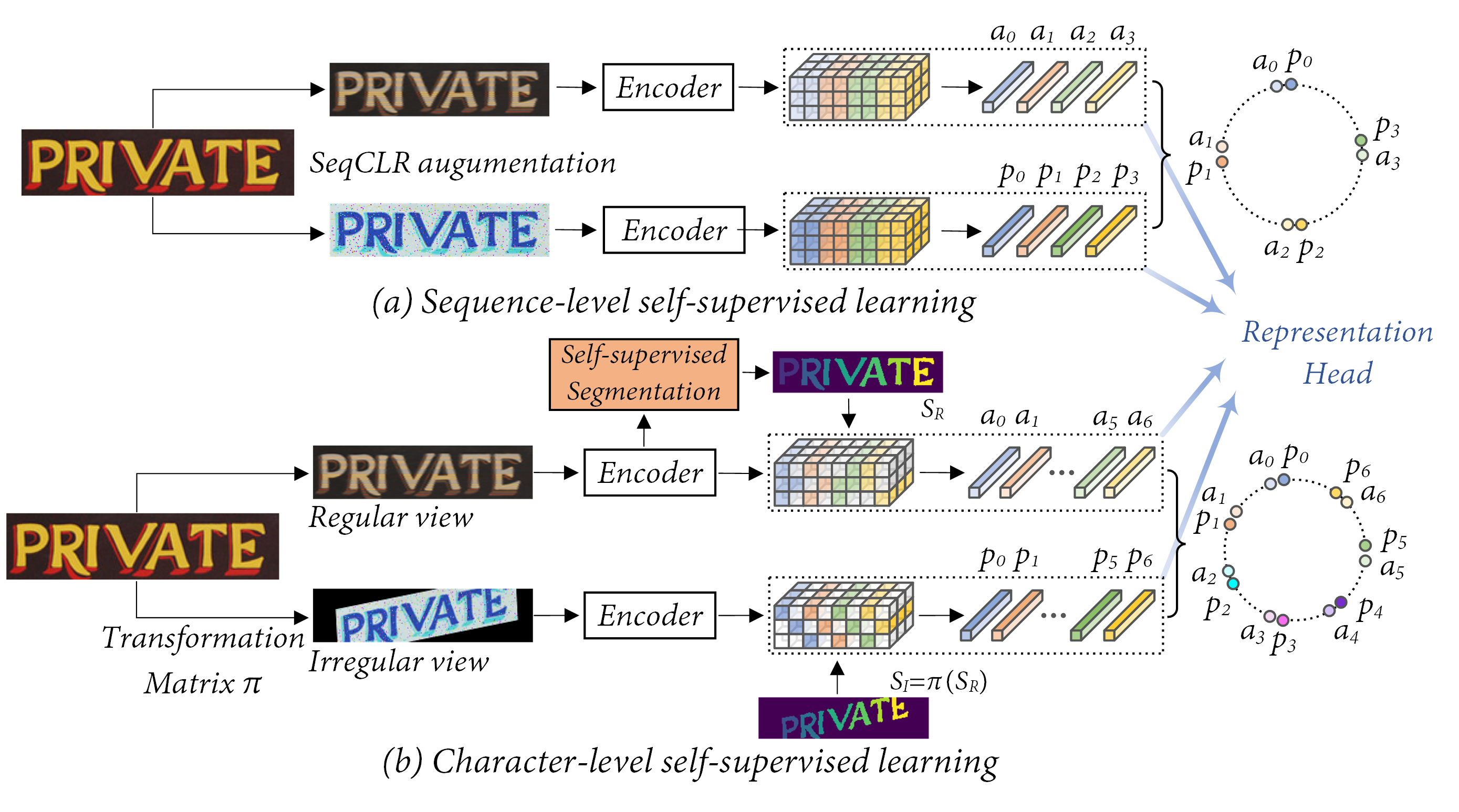

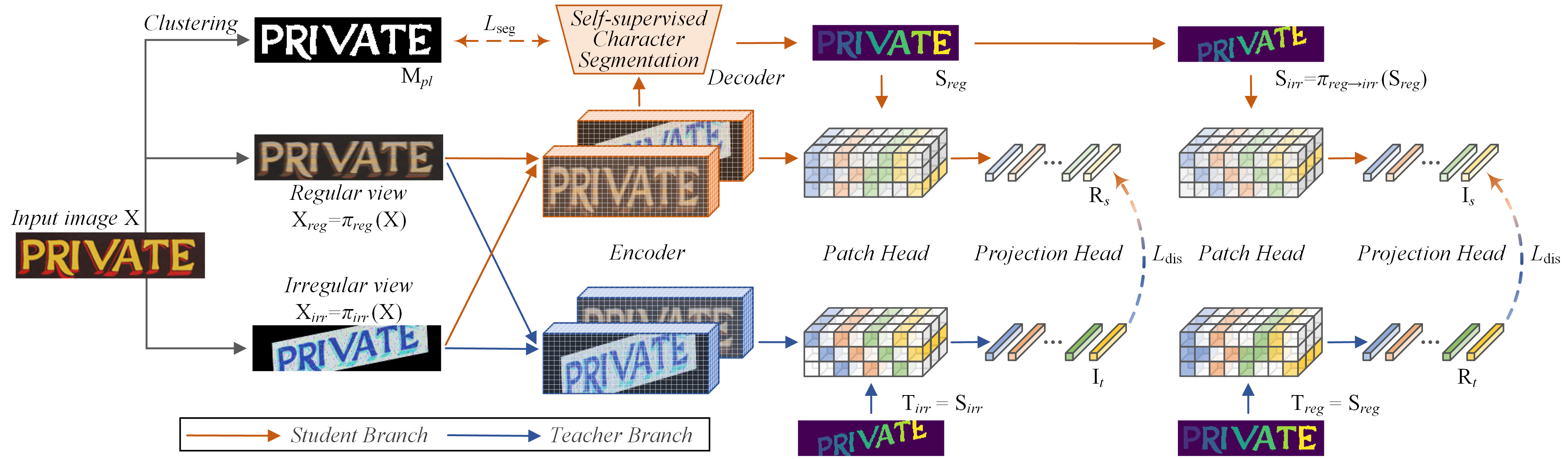

  title = {Self-Supervised Character-to-Character Distillation for Text Recognition},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2023},
  pages = {19473-19484}
}

- This code is free for academic research purposes only and is licensed under the 2-clause BSD License; see the LICENSE file for details.