The official implementation of Mask-aware IoU and maYOLACT detector. Our implementation is based on mmdetection.

Mask-aware IoU for Anchor Assignment in Real-time Instance Segmentation,

Kemal Oksuz, Baris Can Cam, Fehmi Kahraman, Zeynep Sonat Baltaci, Emre Akbas, Sinan Kalkan, BMVC 2021. (arXiv pre-print)

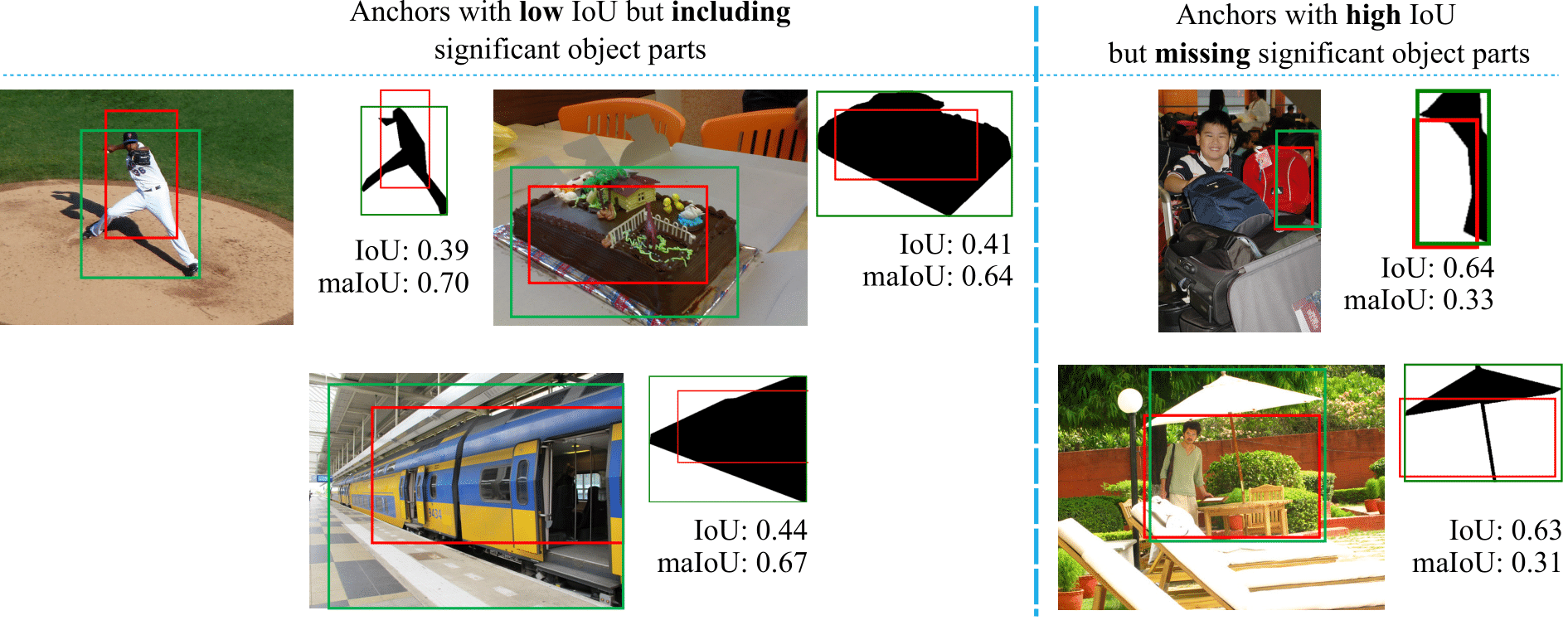

Mask-aware IoU: Mask-aware IoU (maIoU) is an IoU variant for better anchor assignment when supervising instance segmentation methods. Unlike the standard IoU, maIoU also considers the ground-truth mask when assigning a proximity score to an anchor. For example, if an anchor box overlaps a ground-truth box but not the mask inside it (e.g. due to occlusion), its maIoU is lower than its IoU. Please check out the examples below for more insight. Replacing IoU with maIoU in the state-of-the-art ATSS assigner improves both accuracy and efficiency (i.e. faster inference) compared to the standard YOLACT method.

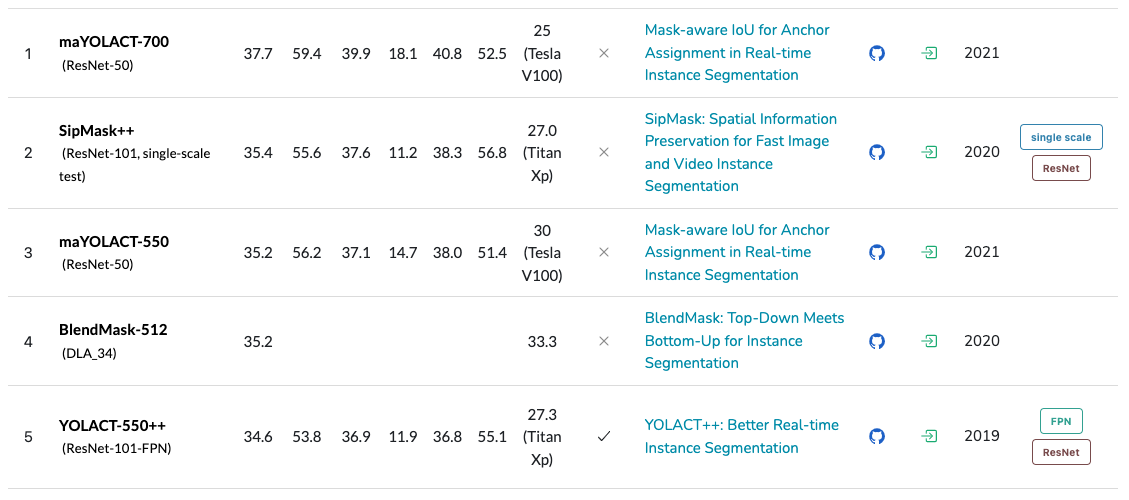
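To make the idea concrete, here is a minimal NumPy sketch of a mask-aware score. It is an illustration only, not the repository's implementation: the function names (`iou`, `mask_aware_iou`), the half-open integer box format `(x1, y1, x2, y2)`, and the exact weighting (mask pixels covered by the anchor, scaled by the box-to-mask area ratio and divided by the box union) are assumptions based on our reading of the description above. Please refer to the paper and the code for the actual definition.

```python
import numpy as np


def box_area(box):
    """Area of a box given as (x1, y1, x2, y2) with half-open pixel bounds."""
    x1, y1, x2, y2 = box
    return max(0, x2 - x1) * max(0, y2 - y1)


def box_intersection(a, b):
    """Intersection area of two boxes."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    return iw * ih


def iou(anchor, gt_box):
    """Standard box IoU."""
    inter = box_intersection(anchor, gt_box)
    union = box_area(anchor) + box_area(gt_box) - inter
    return inter / union if union > 0 else 0.0


def mask_aware_iou(anchor, gt_box, gt_mask):
    """Illustrative mask-aware score (assumed form, see the text above).

    Only ground-truth mask pixels inside the anchor count towards the
    intersection; each is weighted by area(gt_box) / area(mask), so an
    anchor equal to gt_box scores 1. Assumes gt_mask lies inside gt_box.
    """
    mask_area = int(gt_mask.sum())
    if mask_area == 0:
        return 0.0
    h, w = gt_mask.shape
    # Clip the anchor to the image before slicing the mask.
    x1, y1 = max(anchor[0], 0), max(anchor[1], 0)
    x2, y2 = min(anchor[2], w), min(anchor[3], h)
    mask_in_anchor = int(gt_mask[y1:y2, x1:x2].sum()) if x2 > x1 and y2 > y1 else 0
    union = box_area(anchor) + box_area(gt_box) - box_intersection(anchor, gt_box)
    return (box_area(gt_box) / mask_area) * mask_in_anchor / union


# Toy example: a triangular mask whose tight bounding box is the full 10x10 image.
gt_mask = np.tril(np.ones((10, 10), dtype=bool))
gt_box = (0, 0, 10, 10)
on_mask = (0, 5, 5, 10)   # anchor fully covered by mask pixels
off_mask = (5, 0, 10, 5)  # anchor inside the box but containing no mask pixels

for name, anchor in [("on_mask", on_mask), ("off_mask", off_mask)]:
    print(name, iou(anchor, gt_box), round(mask_aware_iou(anchor, gt_box, gt_mask), 3))
```

Both toy anchors have the same IoU (0.25) with the ground-truth box, but the mask-aware score separates them: the anchor lying on the object scores higher than its IoU, while the anchor covering only background inside the box scores 0.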

maYOLACT Detector: Thanks to the efficiency gained from the ATSS with maIoU assigner, we incorporate more training tricks into YOLACT and build the maYOLACT detector, which is still real-time but significantly more powerful (by around 6 AP) than YOLACT. Our best maYOLACT model reaches SOTA performance with 37.7 mask AP on COCO test-dev at 25 fps.

Please cite the paper if you benefit from our paper or the repository:

@inproceedings{maIoU,
  title = {Mask-aware IoU for Anchor Assignment in Real-time Instance Segmentation},
  author = {Kemal Oksuz and Baris Can Cam and Fehmi Kahraman and Zeynep Sonat Baltaci and Sinan Kalkan and Emre Akbas},
  booktitle = {The British Machine Vision Conference (BMVC)},
  year = {2021}
}

- Please see get_started.md for requirements and installation of mmdetection.

- Please refer to introduction.md for dataset preparation and basic usage of mmdetection.

Here, we report results in terms of AP (higher is better) and oLRP (lower is better).

| Scale | Assigner | mask AP | mask oLRP | Log | Config | Model |
|---|---|---|---|---|---|---|
| 400 | Fixed IoU | 24.8 | 78.3 | log | config | model |
| 400 | ATSS w. IoU | 25.3 | 77.7 | log | config | model |
| 400 | ATSS w. maIoU | 26.1 | 77.1 | log | config | model |
| 550 | Fixed IoU | 28.5 | 75.2 | log | config | model |
| 550 | ATSS w. IoU | 29.3 | 74.5 | log | config | model |
| 550 | ATSS w. maIoU | 30.4 | 73.7 | log | config | model |
| 700 | Fixed IoU | 29.7 | 74.3 | log | config | model |
| 700 | ATSS w. IoU | 30.8 | 73.3 | log | config | model |
| 700 | ATSS w. maIoU | 31.8 | 72.5 | log | config | model |

| Method | Backbone | mask AP | fps | Log | Config | Model |
|---|---|---|---|---|---|---|
| maYOLACT-550 | ResNet-50 | 35.2 | 30 | log | config | model |
| maYOLACT-700 | ResNet-50 | 37.7 | 25 | Coming Soon | | |

Our method ranked 1st and 3rd among real-time instance segmentation methods on Papers with Code.

The configuration files of all models listed above can be found in the configs/mayolact folder. See get_started.md for training instructions. For example, to train maYOLACT at scale 550 on 4 GPUs, as we did, use the following command:

./tools/dist_train.sh configs/mayolact/mayolact_r50_4x8_coco_scale550.py 4

To test a model, again use the configuration files in the configs/mayolact folder and see get_started.md for testing instructions. For example, first download a trained model using the links in the tables above (or train one yourself), then run the following command to test it on multiple GPUs:

./tools/dist_test.sh configs/mayolact/mayolact_r50_4x8_coco_scale550.py ${CHECKPOINT_FILE} 4 --eval bbox segm

You can also test a model on a single GPU with the following example command:

python tools/test.py configs/mayolact/mayolact_r50_4x8_coco_scale550.py ${CHECKPOINT_FILE} --eval bbox segm