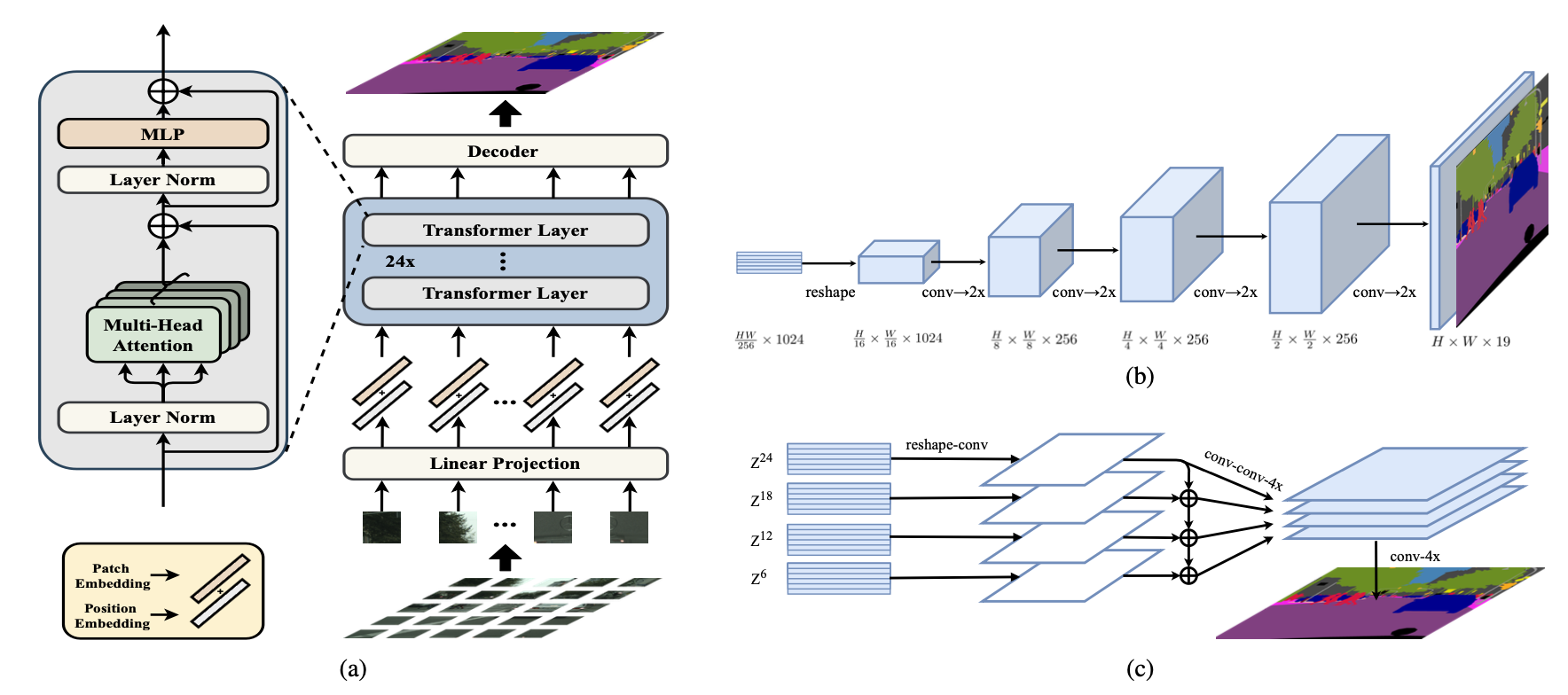

Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers, Sixiao Zheng, Jiachen Lu, Hengshuang Zhao, Xiatian Zhu, Zekun Luo, Yabiao Wang, Yanwei Fu, Jianfeng Feng, Tao Xiang, Philip HS Torr, Li Zhang, CVPR 2021

This project is built on MMSegmentation. Please follow the official MMSegmentation INSTALL.md and getting_started.md for installation and dataset preparation.

🔥🔥 SETR is on MMsegmentation. 🔥🔥

Here is a full script for setting up SETR with conda and linking the dataset path on Linux (assuming your dataset path is $DATA_ROOT).

    conda create -n open-mmlab python=3.7 -y
    conda activate open-mmlab
    conda install pytorch=1.6.0 torchvision cudatoolkit=10.1 -c pytorch -y
    pip install mmcv-full==1.2.2 -f https://download.openmmlab.com/mmcv/dist/cu101/torch1.6.0/index.html
    git clone https://github.com/fudan-zvg/SETR.git
    cd SETR
    pip install -e .  # or "python setup.py develop"
    pip install -r requirements/optional.txt
    mkdir data
    ln -s $DATA_ROOT data

Here is a full script for setting up SETR with conda and linking the dataset path on Windows (assuming your dataset path is %DATA_ROOT%; note that it must be an absolute path).

    conda create -n open-mmlab python=3.7 -y
    conda activate open-mmlab
    conda install pytorch=1.6.0 torchvision cudatoolkit=10.1 -c pytorch
    set PATH=full\path\to\your\cpp\compiler;%PATH%
    pip install mmcv
    git clone https://github.com/fudan-zvg/SETR.git
    cd SETR
    REM Alternatively: python setup.py develop
    pip install -e .
    pip install -r requirements/optional.txt
    mklink /D data %DATA_ROOT%

Cityscapes

| Method | Crop size | Batch size | Iterations | Set | mIoU | Model | Config |
|---|---|---|---|---|---|---|---|
| SETR-Naive | 768x768 | 8 | 40k | val | 77.37 | google drive | config |
| SETR-Naive | 768x768 | 8 | 80k | val | 77.90 | google drive | config |
| SETR-MLA | 768x768 | 8 | 40k | val | 76.65 | google drive | config |
| SETR-MLA | 768x768 | 8 | 80k | val | 77.24 | google drive | config |
| SETR-PUP | 768x768 | 8 | 40k | val | 78.39 | google drive | config |
| SETR-PUP | 768x768 | 8 | 80k | val | 79.34 | google drive | config |
| SETR-Naive-Base | 768x768 | 8 | 40k | val | 75.54 | google drive | config |
| SETR-Naive-Base | 768x768 | 8 | 80k | val | 76.25 | google drive | config |
| SETR-Naive-DeiT | 768x768 | 8 | 40k | val | 77.85 | | config |
| SETR-Naive-DeiT | 768x768 | 8 | 80k | val | 78.66 | | config |
| SETR-MLA-DeiT | 768x768 | 8 | 40k | val | 78.04 | | config |
| SETR-MLA-DeiT | 768x768 | 8 | 80k | val | 78.98 | | config |
| SETR-PUP-DeiT | 768x768 | 8 | 40k | val | 78.79 | | config |
| SETR-PUP-DeiT | 768x768 | 8 | 80k | val | 79.45 | | config |

ADE20K

| Method | Crop size | Batch size | Iterations | Set | mIoU | mIoU (ms+flip) | Model | Config |
|---|---|---|---|---|---|---|---|---|
| SETR-Naive | 512x512 | 16 | 160k | val | 48.06 | 48.80 | google drive | config |
| SETR-MLA | 512x512 | 8 | 160k | val | 47.79 | 50.03 | google drive | config |
| SETR-MLA | 512x512 | 16 | 160k | val | 48.64 | 50.28 | | config |
| SETR-MLA-DeiT | 512x512 | 16 | 160k | val | 46.15 | 47.71 | | config |
| SETR-PUP | 512x512 | 16 | 160k | val | 48.62 | 50.09 | google drive | config |
| SETR-PUP-DeiT | 512x512 | 16 | 160k | val | 46.34 | 47.30 | | config |

Pascal Context

| Method | Crop size | Batch size | Iterations | Set | mIoU | mIoU (ms+flip) | Model | Config |
|---|---|---|---|---|---|---|---|---|
| SETR-Naive | 480x480 | 16 | 80k | val | 52.89 | 53.61 | google drive | config |
| SETR-MLA | 480x480 | 8 | 80k | val | 54.39 | 55.39 | google drive | config |
| SETR-MLA | 480x480 | 16 | 80k | val | 55.01 | 55.83 | google drive | config |
| SETR-MLA-DeiT | 480x480 | 16 | 80k | val | 52.91 | 53.74 | | config |
| SETR-PUP | 480x480 | 16 | 80k | val | 54.37 | 55.27 | google drive | config |
| SETR-PUP-DeiT | 480x480 | 16 | 80k | val | 52.00 | 52.50 | | config |
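For readers unfamiliar with the metric in the tables, mIoU is the mean over classes of intersection over union between predicted and ground-truth masks. The following is a minimal pure-Python sketch of that definition on flat label lists. It is not the MMSegmentation implementation, which accumulates statistics over the whole dataset and handles an ignore label; the function name `mean_iou` and the toy labels are illustrative assumptions.

```python
from typing import List, Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Mean IoU over the classes that occur in the prediction or the target."""
    # conf[t][p] counts pixels whose ground truth is t and whose prediction is p.
    conf = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, target):
        conf[t][p] += 1

    ious: List[float] = []
    for c in range(num_classes):
        tp = conf[c][c]
        fp = sum(conf[t][c] for t in range(num_classes)) - tp
        fn = sum(conf[c]) - tp
        union = tp + fp + fn
        if union > 0:  # skip classes absent from both prediction and target
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with two classes and four pixels.
pred = [0, 0, 1, 1]
target = [0, 1, 1, 1]
score = mean_iou(pred, target, num_classes=2)
print(f"mIoU = {100 * score:.2f}")  # the tables report mIoU in percent
```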

The pre-trained model is downloaded automatically and placed in a suitable location when you run the training command. If the download fails because of network issues, you can download the pre-trained model manually from here (ViT) and here (DeiT).

    ./tools/dist_train.sh ${CONFIG_FILE} ${GPU_NUM}
    # For example, train a SETR-PUP on Cityscapes dataset with 8 GPUs
    ./tools/dist_train.sh configs/SETR/SETR_PUP_768x768_40k_cityscapes_bs_8.py 8
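If you train with a different number of GPUs, the per-GPU batch size may need adjusting to reproduce the batch sizes in the results tables. The sketch below assumes the "Batch size" column is the total across GPUs and that the config sets the per-GPU value through `samples_per_gpu`, the usual MMSegmentation 0.x convention; whether each SETR config follows this is an assumption you should verify in the config itself. The helper name is hypothetical.

```python
def samples_per_gpu(total_batch_size: int, num_gpus: int) -> int:
    """Per-GPU batch size that keeps the total batch size unchanged."""
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    if total_batch_size % num_gpus != 0:
        raise ValueError("total batch size must be divisible by the number of GPUs")
    return total_batch_size // num_gpus


# Batch size 8 on 8 GPUs versus the same total batch on 4 GPUs.
print(samples_per_gpu(8, 8))
print(samples_per_gpu(8, 4))
```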

TensorBoard

To use TensorBoard, run `pip install tensorboard` and uncomment line 6, `dict(type='TensorboardLoggerHook')`, of `SETR/configs/_base_/default_runtime.py`.
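As a rough illustration, the logging section of `default_runtime.py` might look like the following once the hook is uncommented. The `interval` value and the `TextLoggerHook` entry are assumptions taken from the stock MMSegmentation runtime config; only the `TensorboardLoggerHook` line comes from the instructions here, so check the actual file before editing.

```python
# Sketch of the logging section after uncommenting the TensorBoard hook.
# `interval` and the TextLoggerHook entry are assumed, not copied from SETR.
log_config = dict(
    interval=50,
    hooks=[
        dict(type='TextLoggerHook', by_epoch=False),
        dict(type='TensorboardLoggerHook'),  # previously commented out
    ])

# Quick check that the hook is now registered in the config.
hook_types = [hook['type'] for hook in log_config['hooks']]
print(hook_types)
```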

    ./tools/dist_test.sh ${CONFIG_FILE} ${CHECKPOINT_FILE} ${GPU_NUM} [--eval ${EVAL_METRICS}]
    # For example, test a SETR-PUP on Cityscapes dataset with 8 GPUs
    ./tools/dist_test.sh configs/SETR/SETR_PUP_768x768_40k_cityscapes_bs_8.py \
        work_dirs/SETR_PUP_768x768_40k_cityscapes_bs_8/iter_40000.pth \
        8 --eval mIoU

For multi-scale testing, use the config file ending in _MS.py in configs/SETR.

    ./tools/dist_test.sh ${CONFIG_FILE} ${CHECKPOINT_FILE} ${GPU_NUM} [--eval ${EVAL_METRICS}]
    # For example, test a SETR-PUP on Cityscapes dataset with 8 GPUs
    ./tools/dist_test.sh configs/SETR/SETR_PUP_768x768_40k_cityscapes_bs_8_MS.py \
        work_dirs/SETR_PUP_768x768_40k_cityscapes_bs_8/iter_40000.pth \
        8 --eval mIoU
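The two example commands follow a naming pattern: the checkpoint sits in `work_dirs/<config name>/iter_<N>.pth`, and the `_MS.py` config variant reuses the same checkpoint. A small sketch of that pattern is shown below. The helper `build_test_command` is hypothetical, and the pattern is inferred from the examples rather than guaranteed for every config.

```python
from pathlib import PurePosixPath
from typing import List


def build_test_command(config: str, iters: int, gpus: int,
                       multi_scale: bool = False) -> List[str]:
    """Assemble the dist_test.sh arguments for a given training config."""
    cfg = PurePosixPath(config)
    # Assumed layout: work_dirs/<config stem>/iter_<N>.pth
    checkpoint = PurePosixPath("work_dirs") / cfg.stem / f"iter_{iters}.pth"
    if multi_scale:
        # The multi-scale config shares the name, with an _MS suffix.
        cfg = cfg.with_name(cfg.stem + "_MS.py")
    return ["./tools/dist_test.sh", str(cfg), str(checkpoint), str(gpus),
            "--eval", "mIoU"]


cmd = build_test_command(
    "configs/SETR/SETR_PUP_768x768_40k_cityscapes_bs_8.py",
    iters=40000, gpus=8, multi_scale=True)
print(" ".join(cmd))
```

The list form can be passed directly to `subprocess.run` if you prefer to launch the test from Python.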

Cityscapes

First, add the following to the config file configs/SETR/SETR_PUP_768x768_40k_cityscapes_bs_8.py:

    data = dict(
        test=dict(
            img_dir='leftImg8bit/test',
            ann_dir='gtFine/test'))

Then run the test:

    ./tools/dist_test.sh configs/SETR/SETR_PUP_768x768_40k_cityscapes_bs_8.py \
        work_dirs/SETR_PUP_768x768_40k_cityscapes_bs_8/iter_40000.pth \
        8 --format-only --eval-options "imgfile_prefix=./SETR_PUP_768x768_40k_cityscapes_bs_8_test_results"

You will get PNG files under the directory ./SETR_PUP_768x768_40k_cityscapes_bs_8_test_results. Run `zip -r SETR_PUP_768x768_40k_cityscapes_bs_8_test_results.zip SETR_PUP_768x768_40k_cityscapes_bs_8_test_results/` and submit the zip file to the evaluation server.
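The config edit in the first step only names the keys that change, which works when the config inherits its dataset settings from a base file and nested dicts are merged recursively, as MMSegmentation configs usually do. The sketch below imitates that merge in plain Python to show the effect. It is not mmcv's implementation, and the base values (`type`, `data_root`, the `val` paths) are hypothetical placeholders.

```python
import copy
from typing import Any, Dict


def merge_config(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively merge `override` into a copy of `base`."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)  # descend into nested dicts
        else:
            merged[key] = copy.deepcopy(value)  # replace or add leaf values
    return merged


# Hypothetical inherited settings; only the two test paths are overridden.
base = dict(data=dict(
    samples_per_gpu=1,
    test=dict(type='CityscapesDataset', data_root='data/cityscapes/',
              img_dir='leftImg8bit/val', ann_dir='gtFine/val')))
override = dict(data=dict(
    test=dict(img_dir='leftImg8bit/test', ann_dir='gtFine/test')))

cfg = merge_config(base, override)
print(cfg['data']['test'])
```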

ADE20K

The ADE20K dataset can be downloaded from this link.

First, add the following to the config file configs/SETR/SETR_PUP_512x512_160k_ade20k_bs_16.py:

    data = dict(
        test=dict(
            img_dir='images/testing',
            ann_dir='annotations/testing'))

Then run the test:

    ./tools/dist_test.sh configs/SETR/SETR_PUP_512x512_160k_ade20k_bs_16.py \
        work_dirs/SETR_PUP_512x512_160k_ade20k_bs_16/iter_160000.pth \
        8 --format-only --eval-options "imgfile_prefix=./SETR_PUP_512x512_160k_ade20k_bs_16_test_results"

You will get PNG files under the directory ./SETR_PUP_512x512_160k_ade20k_bs_16_test_results. Run `zip -r SETR_PUP_512x512_160k_ade20k_bs_16_test_results.zip SETR_PUP_512x512_160k_ade20k_bs_16_test_results/` and submit the zip file to the evaluation server.
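On systems without the `zip` command (for example a default Windows setup), the archive can also be created from Python. This is a minimal sketch using the standard library's `shutil.make_archive`; the helper name `zip_results` is an assumption, and the demo runs on a temporary directory with a dummy file rather than on real predictions.

```python
import shutil
import tempfile
from pathlib import Path


def zip_results(results_dir: str) -> str:
    """Create <results_dir>.zip next to the directory and return its path."""
    src = Path(results_dir).resolve()
    if not src.is_dir():
        raise NotADirectoryError(str(src))
    # base_name has no ".zip" suffix; make_archive appends it.
    # root_dir/base_dir keep the directory name as a prefix inside the archive,
    # matching `zip -r <name>.zip <name>/`.
    return shutil.make_archive(str(src), "zip",
                               root_dir=str(src.parent), base_dir=src.name)


# Demo on a throwaway directory standing in for the real results folder.
with tempfile.TemporaryDirectory() as tmp:
    results = Path(tmp) / "SETR_PUP_512x512_160k_ade20k_bs_16_test_results"
    results.mkdir()
    (results / "dummy.png").write_bytes(b"")
    archive = zip_results(str(results))
    print(Path(archive).name)
```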

Please see getting_started.md for more basic usage of training and testing.

    @inproceedings{SETR,
      title={Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers},
      author={Zheng, Sixiao and Lu, Jiachen and Zhao, Hengshuang and Zhu, Xiatian and Luo, Zekun and Wang, Yabiao and Fu, Yanwei and Feng, Jianfeng and Xiang, Tao and Torr, Philip H.S. and Zhang, Li},
      booktitle={CVPR},
      year={2021}
    }

License: MIT

Thanks to the previous open-source repos MMSegmentation and pytorch-image-models.