The discrete normalizing flow code was originally taken and modified from https://github.com/google/edward2/blob/master/edward2/tensorflow/layers/discrete_flows.py and https://github.com/google/edward2/blob/master/edward2/tensorflow/layers/utils.py. The method was introduced in the paper: https://arxiv.org/abs/1905.10347

The demo file, MADE, and MLP were taken and modified from: https://github.com/karpathy/pytorch-normalizing-flows

To my knowledge, as of July 3rd, 2020, this is the only functional demo of discrete normalizing flows in PyTorch. The code in edward2 (implemented in TF2 and Keras) lacks any tutorials. Since the release of this repo, and following correspondence with the authors of the original paper, demo code for reproducing Figure 2 using Edward2 has been shared here.

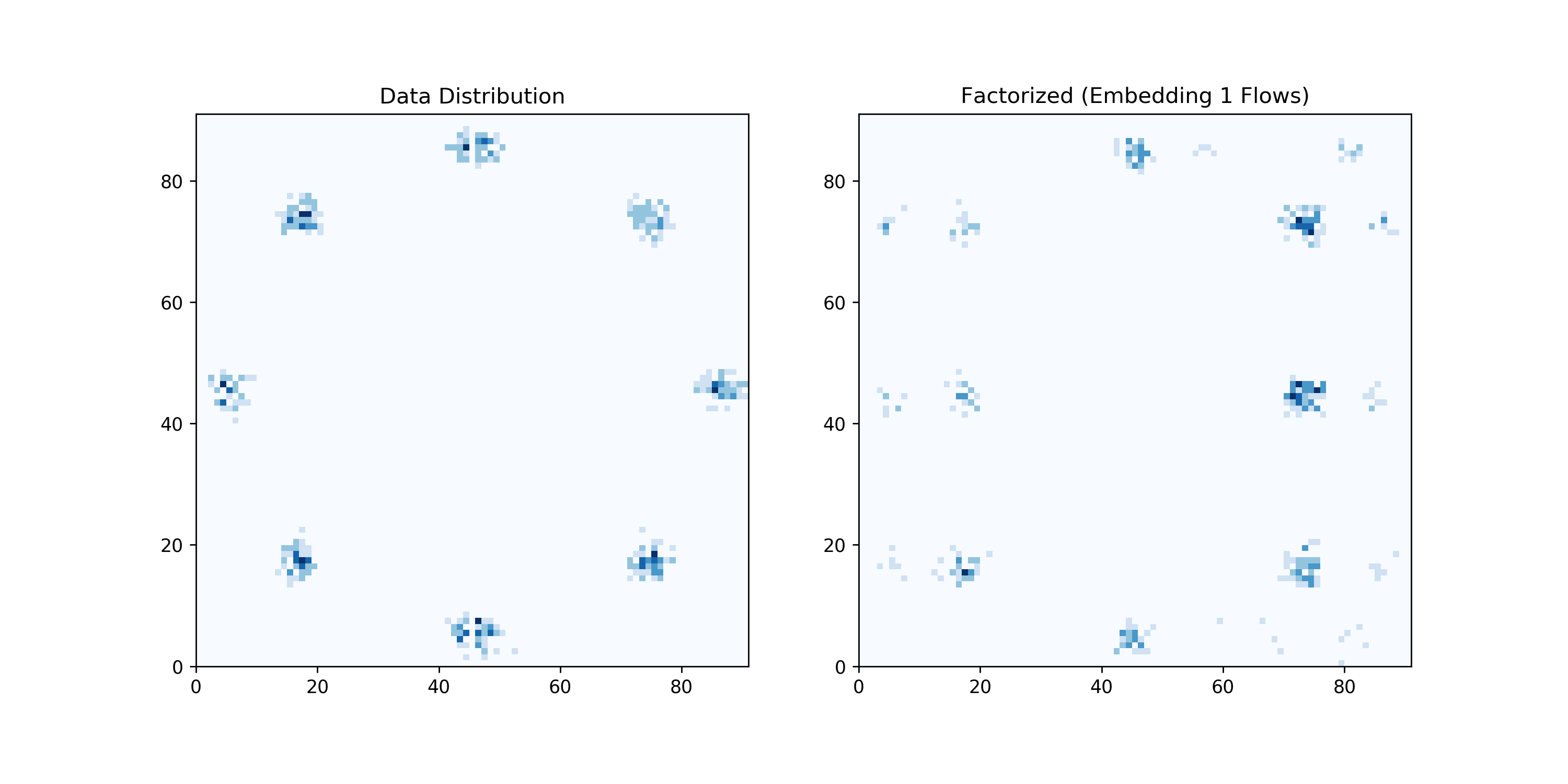

With collaboration from Yashas Annadani and Jan Francu, I have been able to reproduce the paper's Figure 2 discretized mixture of Gaussians with this library.

To use this package, clone the repo, satisfy the package requirements below, then run Figure2Replication.ipynb.

Requirements:

- Python 3.0+
- PyTorch 1.2.0+ and < 1.9.0 (if you are using 1.9.0 you will have issues with the Fast Fourier Transform; see this issue and solution #6 (comment))
- NumPy 1.17.2+

NB. Following Andrej Karpathy's notation, flow.reverse() goes from the latent space to the data and flow.forward() goes from the data to the latent space. This is the inverse of some other implementations, including the original TensorFlow one.

This library implements Bipartite and Autoregressive discrete normalizing flows. It also has an implementation of MADE and a simple MLP.
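The forward/reverse convention can be illustrated with a toy modular-shift flow. This is a minimal sketch in plain Python, not this library's classes: `ToyShiftFlow`, `shift`, and `num_classes` are hypothetical names, and the fixed shift stands in for the location a real flow would predict with a network. Which direction adds the shift is an arbitrary choice made here for illustration.

```python
class ToyShiftFlow:
    """Hypothetical toy flow over symbols {0, ..., num_classes - 1}."""

    def __init__(self, shift, num_classes):
        self.shift = shift              # fixed stand-in for a learned location
        self.num_classes = num_classes  # size K of the discrete alphabet

    def forward(self, data):
        # Data -> latent space (the convention used in this README).
        return [(x + self.shift) % self.num_classes for x in data]

    def reverse(self, latent):
        # Latent space -> data: undo the shift modulo K.
        return [(z - self.shift) % self.num_classes for z in latent]


flow = ToyShiftFlow(shift=3, num_classes=5)
data = [0, 1, 4, 2]
latent = flow.forward(data)       # [3, 4, 2, 0]
recovered = flow.reverse(latent)  # [0, 1, 4, 2]
```

Because the shift is taken modulo the number of classes, every symbol maps to exactly one other symbol, so `reverse` undoes `forward` exactly.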

- Write testing script to ensure all of the models are indeed invertible.

- Reproduce the remaining figures/results from the original paper, starting with the Potts models.

- Implement the Sinkhorn autoregressive flow: https://github.com/google/edward2/blob/master/edward2/tensorflow/layers/discrete_flows.py#L373

- Add non-block splitting for bipartite.

- Ensure that the scaling functionality works (this should not matter for being able to reproduce the first few figures).
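The invertibility test in the first item above could start from an exhaustive round-trip check like the sketch below. It assumes only that a flow exposes `forward` and `reverse`; `ToyBipartiteFlow` and `check_invertible` are hypothetical names written in plain Python for illustration, and the sum-based shift is a stand-in for a learned network. The library's actual models operate on tensors, so the check would need adapting before use there.

```python
import itertools


class ToyBipartiteFlow:
    """Hypothetical bipartite flow: the first half conditions a shift of the second."""

    def __init__(self, num_classes):
        self.num_classes = num_classes

    def _shift(self, first_half):
        # Stand-in for a network that predicts a location from the first half.
        return sum(first_half) % self.num_classes

    def forward(self, data):
        half = len(data) // 2
        first, second = data[:half], data[half:]
        shift = self._shift(first)
        return first + [(x + shift) % self.num_classes for x in second]

    def reverse(self, latent):
        half = len(latent) // 2
        first, second = latent[:half], latent[half:]
        shift = self._shift(first)  # first half is unchanged, so the shift is recoverable
        return first + [(z - shift) % self.num_classes for z in second]


def check_invertible(flow, num_classes, length):
    """Return every input for which a round trip in either direction fails."""
    failures = []
    for values in itertools.product(range(num_classes), repeat=length):
        data = list(values)
        round_trip_a = flow.reverse(flow.forward(data))
        round_trip_b = flow.forward(flow.reverse(data))
        if round_trip_a != data or round_trip_b != data:
            failures.append(data)
    return failures


failures = check_invertible(ToyBipartiteFlow(num_classes=3), num_classes=3, length=4)
```

Exhaustive enumeration is only feasible for small alphabets and short sequences; for larger settings, random sampling of inputs would be the natural substitute.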

Figure 2 in the paper looks like this:

This library's replication is: