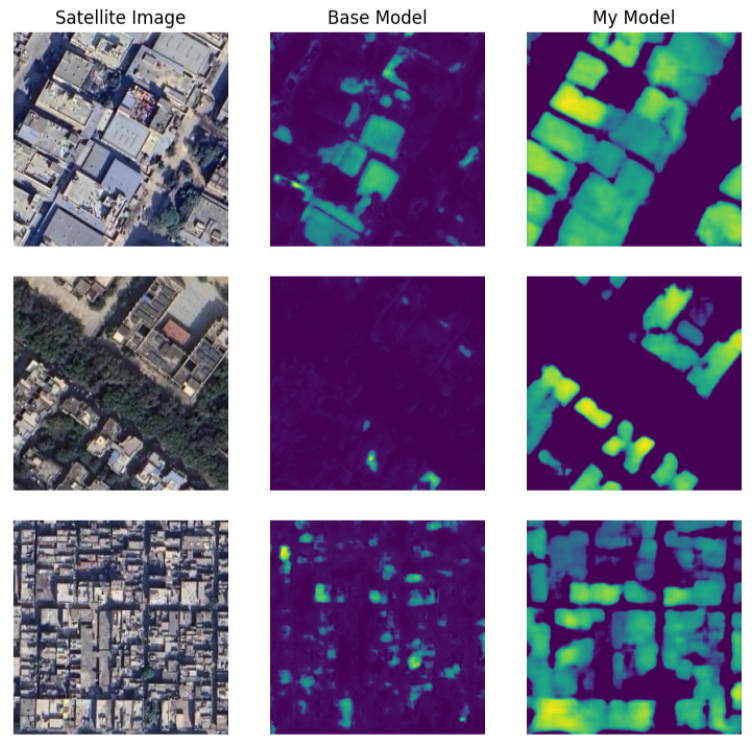

A multitask model for building height estimation and shadow and footprint mask generation, trained on the unbalanced GRSS-2023 dataset and domain-adapted for India with Google Open Buildings data. The project introduces a novel seam-carving-based augmentation technique. The model is a UNet with an ASPP-based encoder and two decoders: a regression decoder for height estimation and a segmentation decoder for shadow mask and footprint generation. The regression decoder uses windowed cross-attention to query shadow and footprint information from the segmentation decoder.

Report

The project focuses on seam carving, a content-aware image resizing technique that adjusts image dimensions by removing or inserting seams while preserving important content. The report explains an implementation of seam carving that uses the Discrete Cosine Transform (DCT) and dynamic programming to find the minimum-energy seams. Removing multiple seams simultaneously reduced running time by 68%. The report also covers a deep-learning-based seam carving approach using a VGG16-based UNet model and perceptual loss, showing promising results with a structural similarity index (SSIM) of 0.8186.
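The dynamic-programming step can be sketched as follows. This is a minimal illustration, not the report's implementation: it assumes the energy map has already been computed (the DCT-based energy is not reproduced here), and the function names `find_vertical_seam` and `remove_vertical_seam` are hypothetical.

```python
def find_vertical_seam(energy):
    """Return one column index per row for the minimum-energy vertical seam."""
    rows, cols = len(energy), len(energy[0])
    # cost[r][c] = cheapest cumulative energy of any seam ending at (r, c)
    cost = [list(energy[0])] + [[0.0] * cols for _ in range(rows - 1)]
    for r in range(1, rows):
        for c in range(cols):
            lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
            cost[r][c] = energy[r][c] + min(cost[r - 1][lo:hi + 1])
    # Backtrack from the cheapest cell in the last row.
    c = min(range(cols), key=lambda j: cost[-1][j])
    seam = [c]
    for r in range(rows - 1, 0, -1):
        lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
        c = min(range(lo, hi + 1), key=lambda j: cost[r - 1][j])
        seam.append(c)
    seam.reverse()
    return seam


def remove_vertical_seam(image, seam):
    """Drop the seam pixel from every row, shrinking the width by one."""
    return [row[:c] + row[c + 1:] for row, c in zip(image, seam)]
```

Each seam pixel may shift by at most one column between adjacent rows, which is what keeps the seam connected.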

Report

In this project, a Generative Adversarial Network (GAN) was implemented from scratch to generate images using the CIFAR-10 dataset. The architecture featured a generator that produces images from random noise and a discriminator that classifies them as real or fake. The model used batch normalization and LeakyReLU layers and achieved an Inception Score of 3.5, indicating moderate image quality and diversity. While the model performed reasonably well, there is room for improvement in image sharpness and variety across classes.
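For context, the Inception Score is the exponential of the average KL divergence between each image's predicted class distribution and the marginal class distribution. The sketch below assumes the per-image class probabilities are already available (in practice they come from a pretrained Inception classifier, which is not included here); the function name is illustrative.

```python
import math


def inception_score(probs):
    """IS = exp(mean over images of KL(p(y|x) || p(y))).

    probs: list of per-image class-probability lists, each summing to 1.
    """
    n, k = len(probs), len(probs[0])
    # Marginal class distribution p(y), averaged over all images.
    marginal = [sum(p[j] for p in probs) / n for j in range(k)]
    kl_sum = 0.0
    for p in probs:
        # Terms with zero probability contribute nothing to the KL sum.
        kl_sum += sum(pj * math.log(pj / marginal[j])
                      for j, pj in enumerate(p) if pj > 0)
    return math.exp(kl_sum / n)
```

The score ranges from 1 (every image gets the same prediction) up to the number of classes (confident predictions spread evenly over all classes).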

Report

In this project, a Super Resolution Generative Adversarial Network (SRGAN) was implemented to upsample satellite images. The model enhances image resolution while preserving texture and detail. The architecture consists of a generator using convolutional layers and residual blocks for upsampling and a discriminator that classifies images as real or fake. Loss functions like adversarial, perceptual, and total variation losses were combined to improve image quality. The SRGAN effectively generates high-resolution satellite images, enabling better interpretation of geographical features and improving the utility of satellite data.
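The combined objective can be sketched as a weighted sum of the three terms. This is only an illustration: the weights `w_adv` and `w_tv` are placeholder values rather than the ones used in the project, and the adversarial and perceptual terms are passed in as precomputed numbers.

```python
def total_variation(img):
    """Anisotropic total variation of a 2-D grid: sum of absolute
    differences between vertically and horizontally adjacent pixels."""
    rows, cols = len(img), len(img[0])
    tv = 0.0
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:
                tv += abs(img[r + 1][c] - img[r][c])
            if c + 1 < cols:
                tv += abs(img[r][c + 1] - img[r][c])
    return tv


def generator_loss(adversarial, perceptual, tv, w_adv=1e-3, w_tv=2e-8):
    """Weighted sum of the three loss terms (weights are assumptions)."""
    return perceptual + w_adv * adversarial + w_tv * tv
```

The total variation term penalizes pixel-to-pixel jumps, which discourages high-frequency noise in the upsampled output.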

Report

This project demonstrates neural network inversion and adversarial attacks on the MNIST dataset. It utilizes a simple sigmoid-based convolutional neural network (CNN) model trained on this dataset. The project employs image inversion through gradient descent to reconstruct input images that yield desired outputs from the model. Additionally, it showcases a targeted adversarial attack, where images are manipulated to resemble a specific target class, fooling the model into consistently predicting this class. The effectiveness of the attack is evaluated using a confusion matrix, highlighting the model's vulnerabilities.
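The inversion idea can be shown on a toy model. The sketch below replaces the CNN with a single sigmoid unit (an assumption made to keep the example self-contained) and runs gradient descent on the input, not the weights, until the output reaches a desired value; `invert_input` and its parameters are hypothetical names.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def invert_input(weights, bias, target, steps=500, lr=1.0):
    """Find an input x such that sigmoid(w . x + b) is close to `target`.

    The model weights stay fixed; only the input is updated.
    """
    x = [0.0] * len(weights)
    for _ in range(steps):
        y = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        # Gradient of (y - target)^2 with respect to the pre-activation.
        scale = 2.0 * (y - target) * y * (1.0 - y)
        # Chain rule: the gradient with respect to x_i is scale * w_i.
        x = [xi - lr * scale * w for xi, w in zip(x, weights)]
    return x
```

A targeted attack follows the same pattern, except that the optimization would typically start from a real image and push the output toward the target class.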

Report

| True \ Predicted | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | 858 | 0 | 4629 | 188 | 4 | 142 | 48 | 0 | 53 | 1 |
| 1 | 1 | 0 | 6692 | 37 | 0 | 0 | 0 | 6 | 6 | 0 |
| 2 | 36 | 53 | 5405 | 81 | 91 | 16 | 60 | 85 | 100 | 31 |
| 3 | 232 | 3 | 5009 | 385 | 12 | 102 | 236 | 7 | 135 | 10 |
| 4 | 16 | 4 | 5193 | 217 | 144 | 18 | 13 | 3 | 161 | 73 |
| 5 | 52 | 0 | 4173 | 963 | 7 | 53 | 53 | 8 | 89 | 23 |
| 6 | 31 | 0 | 5755 | 45 | 4 | 18 | 52 | 0 | 13 | 0 |
| 7 | 144 | 7 | 5686 | 95 | 8 | 58 | 47 | 21 | 63 | 136 |
| 8 | 35 | 3 | 5494 | 138 | 10 | 39 | 37 | 2 | 87 | 6 |
| 9 | 28 | 3 | 5362 | 390 | 108 | 6 | 0 | 33 | 12 | 7 |

The confusion matrix above illustrates the effectiveness of the targeted attack: the model predicts the target class 2 for the large majority of inputs, regardless of their true class.
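One way to quantify this is the fraction of samples predicted as class 2. The sketch below hard-codes the matrix from the table and assumes rows are true classes and columns are predicted classes (the row sums match the MNIST training-set class counts, which supports this reading). The function name `attack_success_rate` is illustrative.

```python
CONFUSION = [
    [858, 0, 4629, 188, 4, 142, 48, 0, 53, 1],
    [1, 0, 6692, 37, 0, 0, 0, 6, 6, 0],
    [36, 53, 5405, 81, 91, 16, 60, 85, 100, 31],
    [232, 3, 5009, 385, 12, 102, 236, 7, 135, 10],
    [16, 4, 5193, 217, 144, 18, 13, 3, 161, 73],
    [52, 0, 4173, 963, 7, 53, 53, 8, 89, 23],
    [31, 0, 5755, 45, 4, 18, 52, 0, 13, 0],
    [144, 7, 5686, 95, 8, 58, 47, 21, 63, 136],
    [35, 3, 5494, 138, 10, 39, 37, 2, 87, 6],
    [28, 3, 5362, 390, 108, 6, 0, 33, 12, 7],
]
TARGET = 2


def attack_success_rate(matrix, target, exclude_target_row=True):
    """Fraction of samples predicted as `target`.

    With exclude_target_row=True, samples whose true class is already
    `target` are left out, since predicting them as `target` is correct.
    """
    hits = total = 0
    for true_label, row in enumerate(matrix):
        if exclude_target_row and true_label == target:
            continue
        hits += row[target]
        total += sum(row)
    return hits / total


print(round(attack_success_rate(CONFUSION, TARGET), 3))
print(round(attack_success_rate(CONFUSION, TARGET, exclude_target_row=False), 3))
```

On this matrix both variants come out at roughly 0.89, so about nine in ten inputs are pushed to the target class.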

The project develops a multi-modal model that predicts book genres from a book cover dataset, combining BERT for text and ResNet for images. Textual and visual information is integrated in the latent space before final classification. The model achieved a training accuracy of 54%, with Class 15 performing best (precision 0.87, recall 0.95), while Class 21 struggled significantly (precision 0.35, recall 0.02). On test data, overall accuracy was 48%, with an average precision and recall of 49%.

Report

This assignment had three parts.

Report

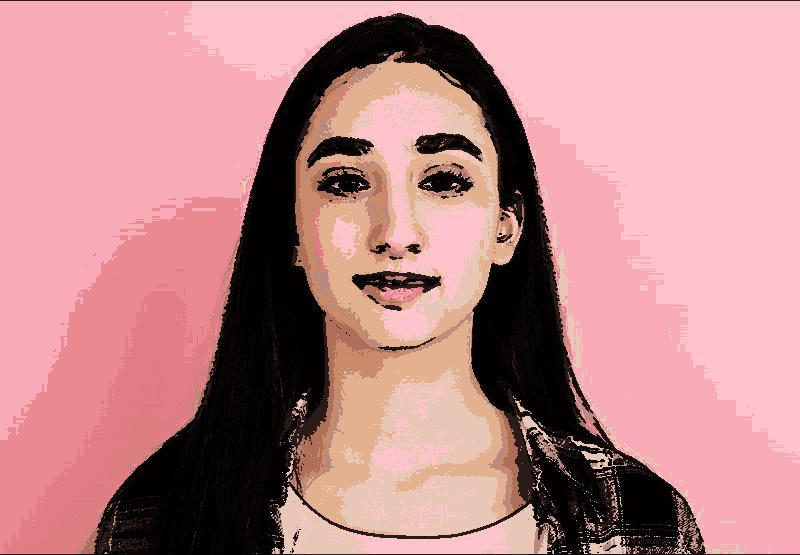

The goal was to transform real photos into non-photorealistic, painting-like images by enhancing light-shadow contrast, emphasizing lines, and using vivid colors. This process consists of two main steps: Artistic Enhancement and Color Adjustment.

- Artistic Enhancement Step:

  - Shadow Map Generation: Convert the input image from RGB to HSI color space and create a shadow map by assigning flags based on light or shadow areas. Merge this shadow map with the original image.

  - Line Draft Generation: Convert the original image to grayscale, apply bilateral filtering to smooth it while preserving edges, and use Sobel filters to detect edges. Produce a binary line draft image through thresholding to emphasize important lines.

- Color Adjustment Step: Create a chromatic map by decomposing the image in LAB color space to remove lightness influence and enhance the RGB components with this map. Apply linear saturation correction to address brightness changes.

- Final Output: Combine the enhanced image and the line draft to create the final artistic rendered image, effectively balancing color and line emphasis.
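The line draft step can be sketched as Sobel filtering followed by thresholding. This is a simplified illustration on a plain 2-D list of gray values: the bilateral filtering pass is omitted, border pixels are left at 0, and the function name and `threshold` parameter are assumptions.

```python
def sobel_line_draft(gray, threshold):
    """Return a binary edge map (1 = line) for a 2-D grayscale grid."""
    rows, cols = len(gray), len(gray[0])
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient kernel
    out = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0
            for dr in range(3):
                for dc in range(3):
                    p = gray[r + dr - 1][c + dc - 1]
                    gx += kx[dr][dc] * p
                    gy += ky[dr][dc] * p
            # Threshold the gradient magnitude to get a binary line image.
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                out[r][c] = 1
    return out
```

A higher threshold keeps only the strongest edges, which is what makes the draft emphasize the important lines.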


This part focuses on image quantization, specifically color quantization with the median-cut method. The input is a 24-bit image with 8 bits each for the red, green, and blue components. For comparison, results are generated both with and without Floyd-Steinberg dithering.
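Floyd-Steinberg dithering diffuses each pixel's quantization error to its unprocessed neighbors with weights 7/16, 3/16, 5/16, and 1/16. The sketch below is a simplification of the assignment's setting: it works on a single grayscale channel and uses a uniform quantizer, not a median-cut palette, and the function names are illustrative.

```python
def quantize_channel(value, levels):
    """Snap a 0-255 value to the nearest of `levels` evenly spaced values."""
    step = 255 / (levels - 1)
    return round(value / step) * step


def floyd_steinberg(gray, levels=2):
    """Dither a 2-D grayscale grid, scanning left to right, top to bottom."""
    rows, cols = len(gray), len(gray[0])
    buf = [[float(v) for v in row] for row in gray]
    for r in range(rows):
        for c in range(cols):
            old = buf[r][c]
            new = quantize_channel(min(max(old, 0.0), 255.0), levels)
            buf[r][c] = new
            err = old - new
            # Push the error onto neighbors that have not been visited yet.
            if c + 1 < cols:
                buf[r][c + 1] += err * 7 / 16
            if r + 1 < rows:
                if c > 0:
                    buf[r + 1][c - 1] += err * 3 / 16
                buf[r + 1][c] += err * 5 / 16
                if c + 1 < cols:
                    buf[r + 1][c + 1] += err * 1 / 16
    return [[int(v) for v in row] for row in buf]
```

Without dithering, a flat mid-gray region would collapse to a single level; with it, the region becomes a pattern of levels whose local average approximates the original tone.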

The assignment requires implementing both the median-cut algorithm and Floyd-Steinberg dithering from scratch, avoiding the use of direct built-in functions. The median-cut quantization is approached using a divide-and-conquer strategy.
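The divide-and-conquer structure of median cut can be sketched as a short recursion. This is a minimal illustration, not the assignment's code: it splits along the channel with the widest range, represents each box by its average color, and the names `median_cut` and `nearest_color` are hypothetical.

```python
def median_cut(pixels, depth):
    """Split a list of (r, g, b) pixels into up to 2**depth boxes and
    return one average color per box."""
    if not pixels:
        return []
    if depth == 0 or len(pixels) == 1:
        n = len(pixels)
        return [tuple(sum(p[ch] for p in pixels) // n for ch in range(3))]
    # Choose the channel with the largest value range in this box.
    ranges = [max(p[ch] for p in pixels) - min(p[ch] for p in pixels)
              for ch in range(3)]
    ch = ranges.index(max(ranges))
    # Sort along that channel and cut at the median.
    ordered = sorted(pixels, key=lambda p: p[ch])
    mid = len(ordered) // 2
    return median_cut(ordered[:mid], depth - 1) + median_cut(ordered[mid:], depth - 1)


def nearest_color(pixel, palette):
    """Map a pixel to the closest palette entry (squared Euclidean distance)."""
    return min(palette, key=lambda q: sum((a - b) ** 2 for a, b in zip(pixel, q)))
```

Under this sketch, a depth of 12 would yield up to 4096 palette entries, which is one way to read a 12-bit quantization.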

The result below shows 12-bit quantization of the original 24-bit image.


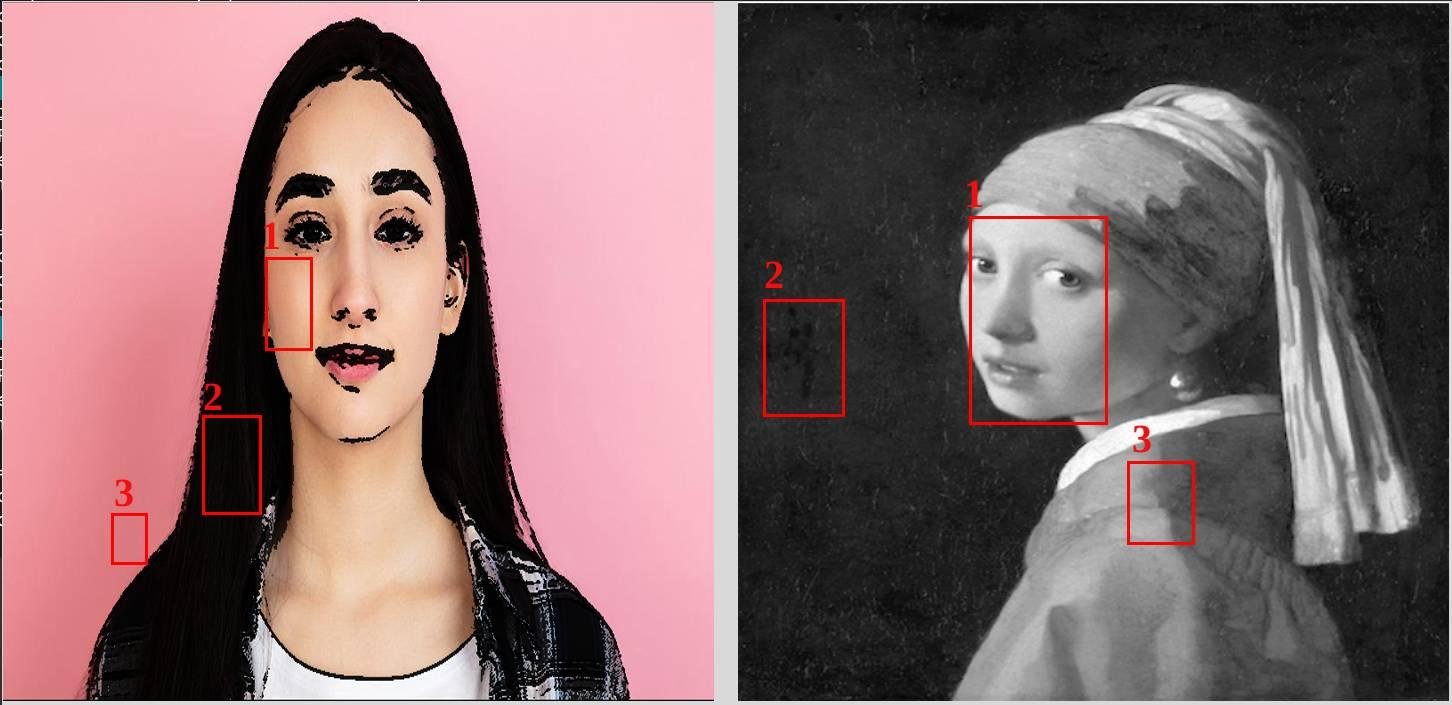

This part focuses on transferring color from a source image to a grayscale image using swatches. The objective is to utilize the similar intensity values between the source and target images within the respective swatches to effectively paint the entire target image.
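The intensity-matching idea can be sketched for a single pair of swatches. This is a simplified stand-in for the actual method: it matches on luminance alone, uses Rec. 601 luminance weights, and borrows chroma as RGB offsets instead of working in a decorrelated color space. The function names are illustrative.

```python
def luminance(rgb):
    """Rec. 601 luma of an (r, g, b) pixel."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def colorize_swatch(target_gray, source_rgb):
    """Color each gray value in a target swatch using the source-swatch
    pixel whose luminance is closest."""
    lum_src = [(luminance(p), p) for p in source_rgb]
    out = []
    for y in target_gray:
        y_src, (r, g, b) = min(lum_src, key=lambda item: abs(item[0] - y))
        # Keep the target's luminance and borrow the source's chroma offsets.
        out.append(tuple(min(255, max(0, round(y + ch - y_src)))
                         for ch in (r, g, b)))
    return out
```

Because the luminance weights sum to 1, adding the source's offsets leaves the target's luminance essentially unchanged (up to rounding and clamping).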

A basic GUI application was developed to effectively select n swatches from both the source and the target image as shown below.

Neural Style Transfer (NST) is a technique in computer vision that combines the content of one image with the artistic style of another, producing a new image that retains the original content's structure while adopting stylistic elements. In this part, NST was also implemented from scratch using a pre-trained convolutional neural network (CNN), specifically VGG16. Content features were extracted from the higher layers of the network and style features from the lower layers. The process involved optimizing a randomly initialized image by minimizing a loss function that balances content and style differences.
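The balance between content and style is commonly expressed with Gram matrices of feature maps. The sketch below assumes the VGG16 features have already been extracted and flattened to one list of activations per channel; the weights `alpha` and `beta` are placeholder values, and all function names are illustrative.

```python
def gram_matrix(features):
    """features: list of C channels, each a flat list of N activations.
    Returns the C x C matrix of channel-to-channel correlations."""
    n = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
            for fi in features]


def style_loss(feat_generated, feat_style):
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(feat_generated), gram_matrix(feat_style)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2
               for i in range(c) for j in range(c)) / (c * c)


def content_loss(feat_generated, feat_content):
    """Mean squared difference between the raw feature maps."""
    diffs = [(a - b) ** 2
             for fg, fc in zip(feat_generated, feat_content)
             for a, b in zip(fg, fc)]
    return sum(diffs) / len(diffs)


def total_loss(content, style, alpha=1.0, beta=1e3):
    """Weighted combination minimized with respect to the generated image."""
    return alpha * content + beta * style
```

The Gram matrix discards spatial layout, so shuffling activations across positions changes the content loss but not the style loss. That is why it captures texture without capturing structure.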

Below is the result of performing the Iterative SGD version of Neural Style Transfer.

Implementing Linear Regression, Logistic Regression, GDA. Report

Classification using Naive Bayes, SVM. Report

Exploring Decision Trees, Random Forests, Gradient Boosted Trees and Implementing Neural Network. Report

Exploring Activation Functions, Transfer Learning, Optimisers. Report

UC Merced Classifier. Report

Implementing Bilinear Interpolation. Report

Face Expression Transfer. Report