This is a PyTorch implementation of the DMAE paper.

Install PyTorch and prepare the ImageNet dataset following the official PyTorch ImageNet training code. Please refer to the official MAE codebase for other environment requirements.

This implementation only supports multi-gpu, DistributedDataParallel training, which is faster and simpler; single-gpu or DataParallel training is not supported.
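Because only distributed launches are supported, it can help to fail early when a script is started as a plain single process. The following is a minimal sketch, not code from this repository: `DistEnv` and `read_dist_env` are hypothetical names, and the only assumption is that the job is started with a launcher such as `torchrun`, which exports `RANK`, `LOCAL_RANK`, and `WORLD_SIZE` to every worker.

```python
import os
from typing import Mapping, NamedTuple


class DistEnv(NamedTuple):
    """Rank information for one worker process (hypothetical helper type)."""
    rank: int        # global index of this process across all nodes
    local_rank: int  # index of this process on its own node (usually the GPU id)
    world_size: int  # total number of processes in the job


def read_dist_env(env: Mapping[str, str] = os.environ) -> DistEnv:
    """Read the variables a distributed launcher exports, or raise if absent."""
    required = ("RANK", "LOCAL_RANK", "WORLD_SIZE")
    missing = [name for name in required if name not in env]
    if missing:
        # A plain `python script.py` launch lands here.
        raise RuntimeError(
            "Distributed launch required; missing: " + ", ".join(missing)
        )
    return DistEnv(
        rank=int(env["RANK"]),
        local_rank=int(env["LOCAL_RANK"]),
        world_size=int(env["WORLD_SIZE"]),
    )
```

On an 8-GPU machine, each of the eight workers would see `world_size == 8` and a distinct `local_rank` between 0 and 7, which is the value typically passed to `torch.cuda.set_device`.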

To pre-train models on an 8-GPU machine, first download the ViT-Large model to use as the teacher, then run:

bash pretrain.sh

To fine-tune models on an 8-GPU machine, run:

bash finetune.sh

The checkpoints of our pre-trained and fine-tuned ViT-Base models on ImageNet-1k can be downloaded as follows:

| Pretrained Model | Checkpoint | Epochs |
|---|---|---|
| ViT-Base | download link | 100 |

| Fine-tuned Model | Checkpoint | Acc (%) |
|---|---|---|
| ViT-Base | download link | 84.0 |
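After downloading a checkpoint, the weights usually need to be pulled out of the saved dictionary before they can be passed to `load_state_dict`. The sketch below is an illustration under stated assumptions, not the repository's loading code: it assumes the file follows the MAE convention of storing weights under a `"model"` key, and the helper names `extract_state_dict` and `strip_prefix` are hypothetical.

```python
from typing import Any, Dict, Mapping


def extract_state_dict(checkpoint: Mapping[str, Any], key: str = "model") -> Mapping[str, Any]:
    """Return the weights stored under `key` (assumed MAE-style layout).

    Falls back to the checkpoint itself when it is already a bare state dict.
    """
    if key in checkpoint and isinstance(checkpoint[key], Mapping):
        return checkpoint[key]
    return checkpoint


def strip_prefix(state_dict: Mapping[str, Any], prefix: str = "module.") -> Dict[str, Any]:
    """Drop a leading prefix from parameter names.

    Weights saved from a DistributedDataParallel wrapper carry a 'module.' prefix.
    """
    return {
        (name[len(prefix):] if name.startswith(prefix) else name): value
        for name, value in state_dict.items()
    }


# Typical use with PyTorch (path left as a placeholder):
#   checkpoint = torch.load("<path-to-checkpoint>", map_location="cpu")
#   weights = strip_prefix(extract_state_dict(checkpoint))
#   model.load_state_dict(weights, strict=False)
```

Passing `strict=False` is a choice made for this sketch so that keys without a counterpart in the target model are reported rather than raising; inspect the returned missing and unexpected keys before relying on the loaded model.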

This project is under the CC-BY-NC 4.0 license. See LICENSE for details.

This work is partially supported by the TPU Research Cloud (TRC) program and the Google Cloud Research Credits program.

@inproceedings{bai2022masked,

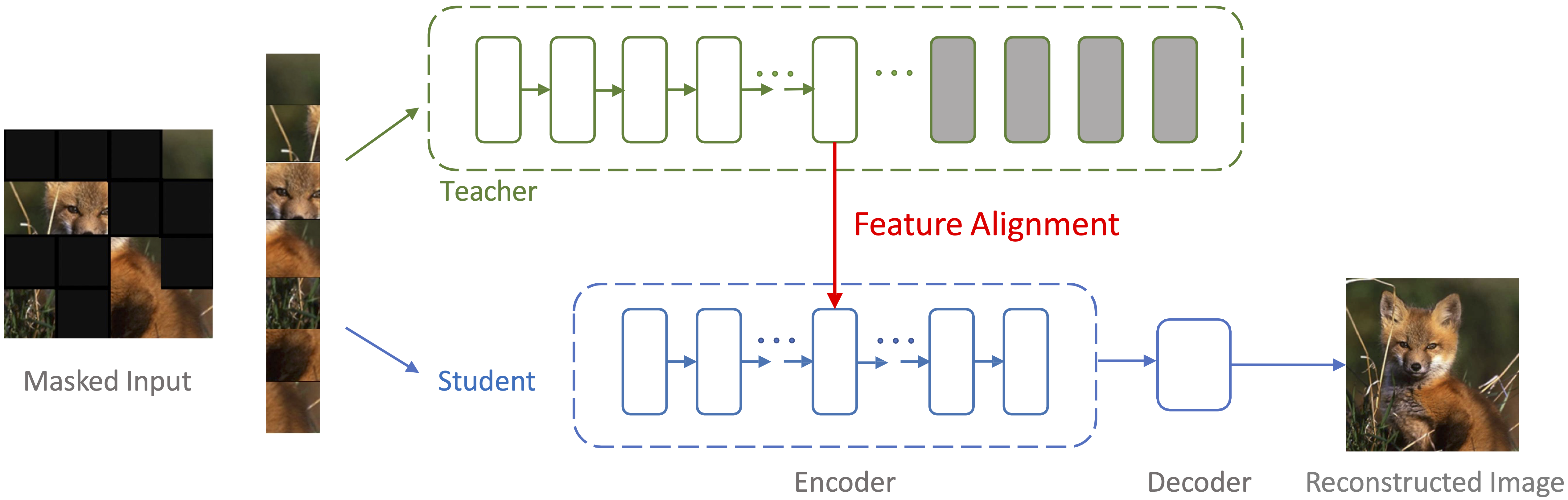

title = {Masked autoencoders enable efficient knowledge distillers},

author = {Bai, Yutong and Wang, Zeyu and Xiao, Junfei and Wei, Chen and Wang, Huiyu and Yuille, Alan and Zhou, Yuyin and Xie, Cihang},

booktitle = {CVPR},

year = {2023}

}