Official website for "Continual Learning on Graphs: Challenges, Solutions, and Opportunities"

This repository is actively maintained by Xikun ZHANG from The University of Sydney. As this research topic has recently gained significant popularity, with new articles emerging daily, we will update our repository and survey regularly.

If you find papers that we have missed, feel free to create pull requests, open issues, or email Xikun ZHANG.

Please consider citing our survey paper if you find it helpful :), and feel free to share this repository with others!

@article{zhang2024continual,
  title={Continual Learning on Graphs: Challenges, Solutions, and Opportunities},
  author={Zhang, Xikun and Song, Dongjin and Tao, Dacheng},
  journal={arXiv preprint arXiv:2402.11565},
  year={2024}
}

Continual learning on graph data has recently garnered significant attention for its aim to resolve the catastrophic forgetting problem on existing tasks while adapting the existing model to newly emerged graph tasks. While there have been efforts to summarize progress on continual learning research over Euclidean data, such as images and texts, a systematic review of continual graph learning (CGL) works is still absent. Graph data are far more complex in terms of data structures and application scenarios, making CGL task settings, model designs, and applications extremely complicated.

To address this gap, we provide a comprehensive review of existing CGL works by:

- Elucidating the different task settings and categorizing the existing works based on their adopted techniques.

- Reviewing the benchmark works that are crucial to CGL research.

- Discussing the remaining challenges and proposing several future directions.

|

|---|

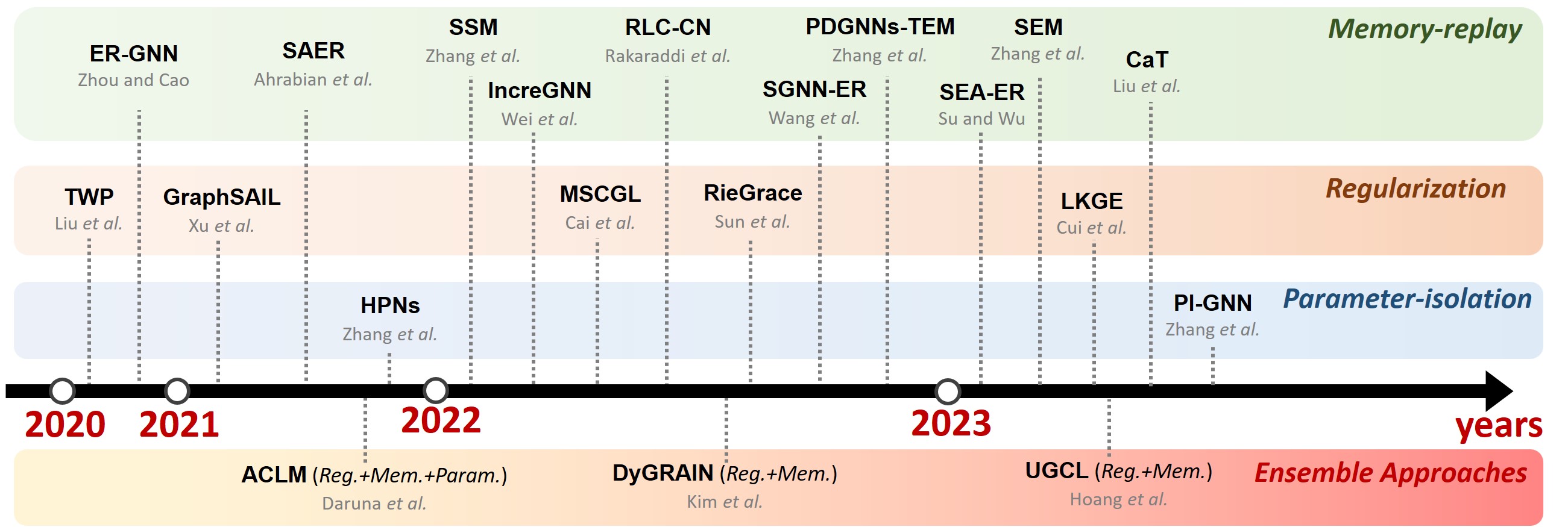

| Figure 1: Timeline of the Works Introduced in Our Survey |

- Continual Graph Learning (SDM2023) link project page

- Continual Graph Learning (WWW2023) link project page

- Continual Learning on Graphs: Challenges, Solutions, and Opportunities project page

- CGLB: Benchmark tasks for continual graph learning (2022) paper

- Catastrophic forgetting in deep graph networks: an introductory benchmark for graph classification (2021) paper

- GraphSAIL: Graph Structure Aware Incremental Learning for Recommender Systems arxiv ACM CIKM

- Overcoming Catastrophic Forgetting in Graph Neural Networks arxiv link AAAI link GitHub

- Self-Supervised Continual Graph Learning in Adaptive Riemannian Spaces arxiv AAAI

- Multimodal Continual Graph Learning with Neural Architecture Search link

- Lifelong Embedding Learning and Transfer for Growing Knowledge Graphs arxiv AAAI

- Overcoming Catastrophic Forgetting in Graph Neural Networks with Experience Replay arxiv link AAAI link

- Ricci Curvature-Based Graph Sparsification for Continual Graph Representation Learning IEEE TNNLS early access

- Towards Robust Inductive Graph Incremental Learning via Experience Replay arxiv

- Structure Aware Experience Replay for Incremental Learning in Graph-based Recommender Systems ACM CIKM

- Streaming Graph Neural Networks with Generative Replay pdf ACM KDD

- Sparsified Subgraph Memory for Continual Graph Representation Learning pdf IEEE ICDM

- Topology-aware Embedding Memory for Learning on Expanding Networks arxiv

- IncreGNN: Incremental Graph Neural Network Learning by Considering Node and Parameter Importance springer

- CaT: Balanced Continual Graph Learning with Graph Condensation arxiv

- Hierarchical prototype networks for continual graph representation learning arxiv IEEE TPAMI

- Continual Learning on Dynamic Graphs via Parameter Isolation arxiv

- Multimodal Continual Graph Learning with Neural Architecture Search pdf ACM WWW

- DyGRAIN: An Incremental Learning Framework for Dynamic Graphs pdf

- Continual Learning of Knowledge Graph Embeddings arxiv

- Disentangle-based Continual Graph Representation Learning pdf

- Lifelong Learning of Graph Neural Networks for Open-World Node Classification paper openreview link

- Graph Neural Networks with Continual Learning for Fake News Detection from Social Media paper. Combines GCN with existing continual learning techniques, such as regularization-based ones.

- Universal Graph Continual Learning TMLR

| Method | Applications | Task Granularity | Technique | Characteristics |
|---|---|---|---|---|
| TWP | General | Node/Graph | Reg. | Preserve the topology learnt from previous tasks |
| RieGrace | General | Node | Reg. | Maintain previous knowledge via knowledge distillation |
| GraphSAIL | Recommender Systems | Node | Reg. | Local and global structure preservation, node information preservation |
| MSCGL | General | Node | Reg. | Parameter changes orthogonal to previous parameters |
| LKGE | Knowledge Graph | Node | Reg. | Alleviate forgetting with L2 regularization |
| ER-GNN | General | Node | Mem. | Replay representative nodes |
| SSM | General | Node | Mem. | Replay representative sparsified computation subgraphs |
| SEM | General | Node | Mem. | Sparsify computation subgraphs based on information bottleneck |
| PDGNNs-TEM | General | Node | Mem. | Replay representative topology-aware embeddings |
| IncreGNN | General | Node | Mem. | Replay nodes according to their influence |
| RLC-CN | General | Node | Mem. | Model structure adaptation and dark experience replay |
| SGNN-ER | General | Node | Mem. | Model retraining with generated fake historical data |
| SAER | Recommender Systems | Node | Mem. | Buffer the representative user-item pairs based on reservoir sampling |
| SEA-ER | General | Node | Mem. | Minimize the structural difference between the memory buffer and the original graph |
| CaT | General | Node | Mem. | Train the model solely on balanced condensed graphs from all tasks |
| HPNs | General | Node | Para. | Extract and store basic features to encourage knowledge sharing across tasks; expand the model to accommodate new patterns |
| PI-GNN | General | Node | Para. | Separate parameters for encoding stable and changed graph parts |
| DyGRAIN | General | Node | Mem.+Reg. | Alleviate catastrophic forgetting and concept shift of previous task nodes via memory replay and knowledge distillation |
| ACLM | Knowledge Graph | Node | Mem.+Reg.+Para. | Adapt general CL techniques to CGL tasks |
| UGCL | General | Node/Graph | Mem.+Reg. | Local/global structure preservation |
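
Several of the memory-based (Mem.) methods above keep a small buffer of past examples and replay it while training on new tasks. As a rough illustration of the reservoir sampling idea mentioned in the SAER row, the following is a minimal sketch of a fixed-size replay buffer. It is not the SAER implementation: the `ReservoirBuffer` class, its method names, and the toy user-item pairs are all hypothetical and chosen only for this example.

```python
import random


class ReservoirBuffer:
    """Hypothetical fixed-size replay buffer filled by reservoir sampling.

    After n items have been offered, each of them has the same
    probability capacity / n of being in the buffer (for n >= capacity).
    """

    def __init__(self, capacity, seed=None):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.items = []   # currently stored examples
        self.seen = 0     # total number of examples offered so far
        self._rng = random.Random(seed)

    def add(self, item):
        """Offer one example from the data stream to the buffer."""
        self.seen += 1
        if len(self.items) < self.capacity:
            # Buffer not full yet: always keep the example.
            self.items.append(item)
        else:
            # Draw a slot index uniformly from [0, seen); the new example
            # replaces a stored one only if the index falls in the buffer.
            j = self._rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = item

    def sample(self, k):
        """Return up to k stored examples for replay, without replacement."""
        k = min(k, len(self.items))
        return self._rng.sample(self.items, k)


# Toy usage: stream made-up (user, item) pairs from two "tasks".
buffer = ReservoirBuffer(capacity=3, seed=0)
for task_id in range(2):
    for user in range(10):
        buffer.add((user, 100 * task_id + user))

replay_batch = buffer.sample(2)  # examples to mix into the next training step
```

A uniform reservoir keeps the buffer an unbiased sample of everything seen so far without storing the full history. The methods in the table generally select or weight examples in more informed ways, so this sketch only conveys the basic buffering mechanism.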