Accompanying repository for the paper Aspect-Controlled Neural Argument Generation.

We rely on arguments in our daily lives to deliver our opinions and base them on evidence, making them more convincing in turn. However, finding and formulating arguments can be challenging. To tackle this challenge, we trained a language model (based on the CTRL by Keskar et al. (2019)) for argument generation that can be controlled on a fine-grained level to generate sentence-level arguments for a given topic, stance, and aspect. We define argument aspect detection as a necessary method to allow this fine-granular control and crowdsource a dataset with 5,032 arguments annotated with aspects. We release this dataset, as well as the training data for the argument generation model, its weights, and the arguments generated with the model.

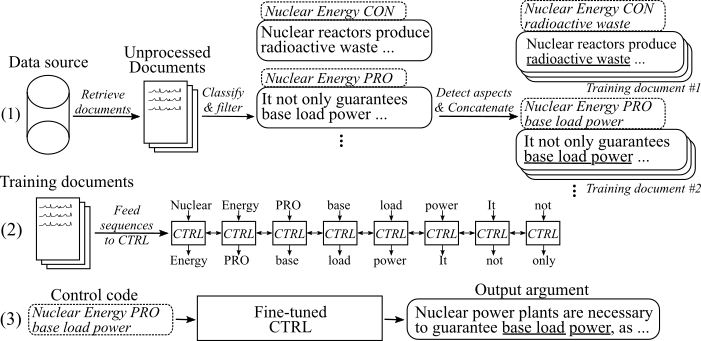

The following figure shows how the argument generation model was trained:

(1) We gather several million documents for eight different topics from two large data sources. All sentences are classified into pro-, con-, and non-arguments. We detect aspects of all arguments with a model trained on a novel dataset and concatenate arguments with the same topic, stance, and aspect into training documents. (2) We use the collected classified data to condition the CTRL model on the topics, stances, and aspects of all gathered arguments. (3) At inference, passing the control code [Topic] [Stance] [Aspect] will generate an argument that follows these commands.

15. May 2020

We have added the code for the aspect-controlled neural argument generation model and detailed descriptions of how to use it. The model and code modify the work by Keskar et al. (2019). The link to the fine-tuned model weights and training data can be found in the Downloads section.

8. May 2020

The Argument Aspect Detection dataset can be downloaded from here (argument_aspect_detection_v1.0.7z). From there, you can also download the arguments generated with the argument generation models (generated_arguments.7z) and the data to reproduce the fine-tuning of the argument generation model (reddit_training_data.7z, cc_training_data.7z).

Note: For licensing reasons, these files cannot be distributed freely. Clicking on any of the files will redirect you to a form, where you have to leave your name and email. After submitting the form, you will receive a download link shortly.

- Download datasets from here. You can download the following files:

  - argument_aspect_detection_v1.0.7z: The argument aspect detection dataset

  - generated_arguments.7z: All arguments generated with our fine-tuned models

  - reddit_training_data.7z: Holds classified samples used to fine-tune our model based on Reddit-comments data

  - cc_training_data.7z: Holds classified samples used to fine-tune our model based on Common-Crawl data

Note: For licensing reasons, these files cannot be distributed freely. Clicking on any of the files will redirect you to a form, where you have to leave your name and email. After submitting the form, you will receive a download link shortly.

- Use scripts/download_weights.sh to download the model weights. The script downloads the weights for the models fine-tuned on Reddit-comments and Common-Crawl data and unzips them into the main project folder.

The code was tested with Python 3.6. Install all requirements with

pip install -r requirements.txt

and follow the instructions in the original Readme at Usage, Step 1 and 2.

In the following, we describe three approaches to use the aspect-controlled neural argument generation model:

A. Use model for generation only

B. Use available training data to reproduce/fine-tune the model

C. Use your own data to fine-tune a new aspect-controlled neural argument generation model

In order to generate arguments, please first download the weights for the models (download script at scripts/download_weights.sh).

Run the model via python generation.py --model_dir reddit_seqlen256_v1 for the model trained on Reddit-comments data or python generation.py --model_dir cc_seqlen256_v1 for the model trained on Common-Crawl data.

After loading is complete, type in a control code, for example nuclear energy CON waste, to generate arguments that follow this control code. To get better results for the first generated argument, you can end the control code with a period or colon ("." or ":"). For more details, please refer to the paper.

Note: Allowed control codes for each topic and data source can be found in the training_data folder.
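As a minimal sketch of how such a control code could be assembled before typing it into the prompt, the helper below joins topic, stance, and aspect in the [Topic] [Stance] [Aspect] order. The function name build_control_code is hypothetical and not part of the repository; the stance token PRO is assumed by analogy with the CON example above, and appending the period or colon without a preceding space is also an assumption.

```python
def build_control_code(topic: str, stance: str, aspect: str, terminator: str = ".") -> str:
    """Assemble a '[Topic] [Stance] [Aspect]' control code (hypothetical helper)."""
    stance = stance.strip().upper()
    # "CON" appears in the example above; "PRO" is assumed by analogy.
    if stance not in {"PRO", "CON"}:
        raise ValueError("stance must be 'PRO' or 'CON'")
    # The text above suggests ending the code with a period or colon.
    if terminator not in {"", ".", ":"}:
        raise ValueError("terminator must be '', '.' or ':'")
    return " ".join([topic.strip(), stance, aspect.strip()]) + terminator


if __name__ == "__main__":
    print(build_control_code("nuclear energy", "con", "waste"))
```

Only topic and aspect combinations listed in the training_data folder are expected to work, so the helper does not replace checking the allowed control codes.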

In order to fine-tune the model as we have done in our work, please follow these steps:

- Download the pre-trained weights from the original paper (original Readme at Usage, Step 3).

- Download the training data (see Downloads section). You need the file reddit_training_data.7z or cc_training_data.7z. Depending on the source (cc or reddit), put the archives either into folder training_data/common-crawl-en/ or training_data/redditcomments-en/ and unzip via:

  7za x [FILENAME].7z

- To reproduce the same training documents from the training data as we used for fine-tuning, please use the script at training_utils/pipeline/prepare_documents_all.sh and adapt the INDEX parameter. Depending on your hardware, the training document generation can take an hour or more to compute.

- Lastly, TFRecords need to be generated from all training documents. To do so, please run:

  python make_tf_records_multitag.py --files_folder [FOLDER] --sequence_len 256

  [FOLDER] needs to point to the folder of the training documents, e.g. training_data/common-crawl-en/abortion/final/. After generating, the number of training sequences generated for this specific topic is printed. Use this to determine the number of steps the model should be trained on. The TFRecords are stored in folder training_utils.

- Train the model:

  python training.py --model_dir [WEIGHTS FOLDER] --iterations [NUMBER OF TRAINING STEPS]

  The model takes the generated TFRecords automatically from the training_utils folder. Please note that the weights in [WEIGHTS FOLDER] will be overwritten. For generation with the newly fine-tuned model, follow the instructions in "A. Use model for generation only".
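The instructions above leave open how the printed number of training sequences maps to a value for --iterations. One common way to do this arithmetic is sketched below. The batch size and the number of epochs are assumptions that this document does not specify; please check training.py for the batch size actually used. The function name training_steps is hypothetical.

```python
import math


def training_steps(num_sequences: int, batch_size: int, epochs: int = 1) -> int:
    """Estimate training steps as ceil(sequences * epochs / batch size).

    batch_size and epochs are assumptions; neither is stated in this README.
    """
    if num_sequences < 0 or batch_size < 1 or epochs < 1:
        raise ValueError("num_sequences must be >= 0, batch_size and epochs >= 1")
    return math.ceil(num_sequences * epochs / batch_size)


if __name__ == "__main__":
    # Example values only; use the count printed by make_tf_records_multitag.py.
    print(training_steps(num_sequences=1000, batch_size=4, epochs=1))
```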

To ease the process of gathering your own training data, we add our implementation of the pipeline described in our publication (see I. Use our pipeline (with ArgumenText API)). To label sentences as arguments and to identify their stances and aspects, we use the ArgumenText-API. Alternatively, you can also train your own models (see II. Create your own pipeline (without ArgumenText API)).

Please request a userID and apiKey for the ArgumenText-API. Write both id and key in the respective constants at training_utils/pipeline/credentials.py.

As a first step, training documents for a topic of interest need to be gathered. (Note: This step is not part of the code and has to be implemented by yourself.) We did so by downloading dumps from Common-Crawl and Reddit-comments and indexing them with ElasticSearch. The outcome needs to be documents that are stored at training_data/[INDEX_NAME]/[TOPIC_NAME]/unprocessed/, where [INDEX_NAME] is the name of the data source (e.g. common-crawl-en) and [TOPIC_NAME] is the search topic for which documents were gathered (replace whitespace in [INDEX_NAME] and [TOPIC_NAME] with underscores). Each document is a separate JSON file with at least the key "sents", which holds a list of sentences from this document:

{

"sents": ["sentence #1", "sentence #2", ...]

}
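A minimal sketch of writing documents in this layout is shown below. Because the gathering step is not part of the repository, both helper names (unprocessed_dir, write_document) and the file naming scheme [doc_id].json are assumptions made for illustration; only the folder layout, the underscore replacement, and the "sents" key come from the description above.

```python
import json
from pathlib import Path
from typing import List


def unprocessed_dir(root: str, index_name: str, topic_name: str) -> Path:
    """Build <root>/[INDEX_NAME]/[TOPIC_NAME]/unprocessed with spaces replaced by underscores."""
    index = index_name.replace(" ", "_")
    topic = topic_name.replace(" ", "_")
    return Path(root) / index / topic / "unprocessed"


def write_document(folder: Path, doc_id: str, sentences: List[str]) -> Path:
    """Store one document as its own JSON file with the required 'sents' key."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / "{}.json".format(doc_id)  # file name scheme is an assumption
    with path.open("w", encoding="utf-8") as handle:
        json.dump({"sents": sentences}, handle, ensure_ascii=False)
    return path


if __name__ == "__main__":
    target = unprocessed_dir("training_data", "common-crawl-en", "nuclear energy")
    print(write_document(target, "doc0", ["sentence #1", "sentence #2"]))
```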

The script argument_classification.py takes all documents gathered for a given topic and classifies their sentences into pro-, con-, and non-arguments. The following command starts the classification:

python argument_classification.py --topic [TOPIC_NAME] --index [INDEX_NAME]

Non-arguments are discarded and the final classified arguments are stored into files with a maximum of 200,000 arguments each at training_data/[INDEX_NAME]/[TOPIC_NAME]/processed/.

The script aspect_detection.py parses all previously classified arguments and detects their aspects. The following command starts the aspect detection:

python aspect_detection.py --topic [TOPIC_NAME] --index [INDEX_NAME]

All arguments with their aspects are then stored into a single file merged.jsonl at training_data/[INDEX_NAME]/[TOPIC_NAME]/processed/.

The prepare_documents.py appends all arguments that have the same topic, stance, and (stemmed) aspect to a training document:

python prepare_documents.py --max_sents [MAX_SENTS] --topic [TOPIC_NAME] --index [INDEX_NAME] --max_aspect_cluster_size [MAX_ASPECT_CLUSTER_SIZE] --min_aspect_cluster_size [MIN_ASPECT_CLUSTER_SIZE]

[MAX_SENTS] sets the maximum number of arguments to use (evenly divided between pro and con arguments if possible), and [MIN_ASPECT_CLUSTER_SIZE]/[MAX_ASPECT_CLUSTER_SIZE] set the minimum/maximum number of arguments allowed in a single training document. The final documents are stored in folder training_data/[INDEX_NAME]/[TOPIC_NAME]/final/. The script prepare_all_documents.sh can be used to automate the process.
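To illustrate the grouping idea, the sketch below clusters arguments by topic, stance, and stemmed aspect. It is not the code of prepare_documents.py. The naive_stem function is a placeholder for a real stemmer, and the handling of the cluster sizes (dropping clusters below the minimum, truncating at the maximum) is one possible reading of the parameters, stated here as an assumption. The sample fields stance, sent, and aspect_string follow the merged.jsonl format used in this README.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple


def naive_stem(word: str) -> str:
    """Placeholder stemmer (assumption): lowercase and strip a trailing 's'."""
    word = word.lower()
    return word[:-1] if word.endswith("s") and len(word) > 3 else word


def group_arguments(
    samples: Iterable[dict],
    topic: str,
    min_size: int,
    max_size: int,
    stem: Callable[[str], str] = naive_stem,
) -> Dict[Tuple[str, str, str], List[str]]:
    """Collect argument sentences that share topic, stance, and stemmed aspect."""
    clusters = defaultdict(list)
    for sample in samples:
        for aspect in sample["aspect_string"]:
            stemmed = " ".join(stem(token) for token in aspect.split())
            clusters[(topic, sample["stance"], stemmed)].append(sample["sent"])
    # Assumed semantics: drop clusters that are too small, cap the rest at max_size.
    return {
        key: sents[:max_size]
        for key, sents in clusters.items()
        if len(sents) >= min_size
    }


if __name__ == "__main__":
    demo = [
        {"stance": "Argument_against", "sent": "s1", "aspect_string": ["waste"]},
        {"stance": "Argument_against", "sent": "s2", "aspect_string": ["Wastes"]},
    ]
    print(group_arguments(demo, "nuclear energy", min_size=2, max_size=10))
```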

Finally, to create training sequences from the documents and start fine-tuning the model, please download our fine-tuned weights (see Downloads section) and follow B. Use available training data to reproduce/fine-tune the model, Steps 4-5.

IMPORTANT: In addition to the training documents, a file with all control codes based on the training documents is created at training_data/[INDEX_NAME]/[TOPIC_NAME]/generation_data/control_codes.jsonl. This file holds all control codes to generate arguments from after fine-tuning has finished.

To train an argument and stance classification model, you can use the UKP Corpus and the models described in the corresponding publication by Stab et al. (2018). For better results, however, we suggest using BERT (Devlin et al., 2019).

To train an aspect detection model, please download our Argument Aspect Detection dataset (for licensing reasons, it is necessary to fill in the form with your name and email). As a model, we suggest BERT from Huggingface for sequence tagging.

In order to prepare training documents and fine-tune the model, you can use the prepare_documents.py as described in I. Use our pipeline (with ArgumenText API), step d. if you keep your classified data in the following format:

- The file should be named merged.jsonl and located in the directory training_data/[INDEX_NAME]/[TOPIC_NAME]/processed/, where [INDEX_NAME] is the data source from where the samples were gathered and [TOPIC_NAME] the name of the respective search query for this data.

- Each line represents a training sample in the following format:

  {"id": id of the sample, starting with 0 (int), "stance": "Argument_against" or "Argument_for", depending on the stance (string), "sent": The argument sentence (string), "aspect_string": A list of aspects for this argument (list of string)}
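The format above could be produced with a few lines of Python, as in the following sketch. The helper names make_sample and to_jsonl are hypothetical and not part of the repository; the field names and the two allowed stance values are taken from the format description.

```python
import json
from typing import Iterable, List

ALLOWED_STANCES = {"Argument_against", "Argument_for"}


def make_sample(sample_id: int, stance: str, sent: str, aspects: List[str]) -> dict:
    """Build one training sample with the four documented fields."""
    if stance not in ALLOWED_STANCES:
        raise ValueError("stance must be 'Argument_against' or 'Argument_for'")
    return {
        "id": int(sample_id),
        "stance": stance,
        "sent": sent,
        "aspect_string": list(aspects),
    }


def to_jsonl(samples: Iterable[dict]) -> str:
    """Serialize samples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(sample, ensure_ascii=False) + "\n" for sample in samples)


if __name__ == "__main__":
    rows = [make_sample(0, "Argument_against", "Nuclear waste stays dangerous.", ["waste"])]
    # Write the result to training_data/[INDEX_NAME]/[TOPIC_NAME]/processed/merged.jsonl.
    print(to_jsonl(rows), end="")
```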

If you find this work helpful, please cite our publication Aspect-Controlled Neural Argument Generation:

@inproceedings{schiller-etal-2021-aspect,

title = "Aspect-Controlled Neural Argument Generation",

author = "Schiller, Benjamin and

Daxenberger, Johannes and

Gurevych, Iryna",

booktitle = "Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",

month = jun,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.naacl-main.34",

doi = "10.18653/v1/2021.naacl-main.34",

pages = "380--396",

abstract = "We rely on arguments in our daily lives to deliver our opinions and base them on evidence, making them more convincing in turn. However, finding and formulating arguments can be challenging. In this work, we present the Arg-CTRL - a language model for argument generation that can be controlled to generate sentence-level arguments for a given topic, stance, and aspect. We define argument aspect detection as a necessary method to allow this fine-granular control and crowdsource a dataset with 5,032 arguments annotated with aspects. Our evaluation shows that the Arg-CTRL is able to generate high-quality, aspect-specific arguments, applicable to automatic counter-argument generation. We publish the model weights and all datasets and code to train the Arg-CTRL.",

}

Contact person: Benjamin Schiller