Cheng-Chun Hsu, Zhenyu Jiang, Yuke Zhu

ICRA 2023

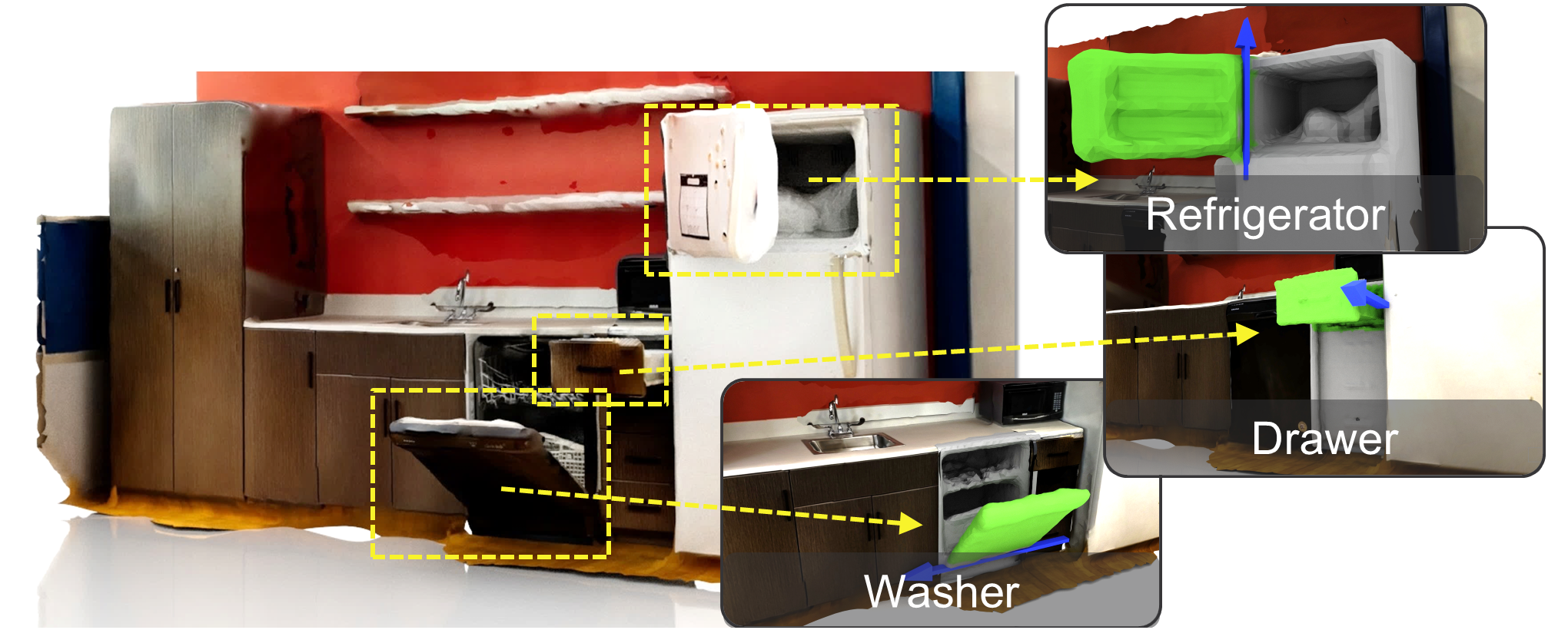

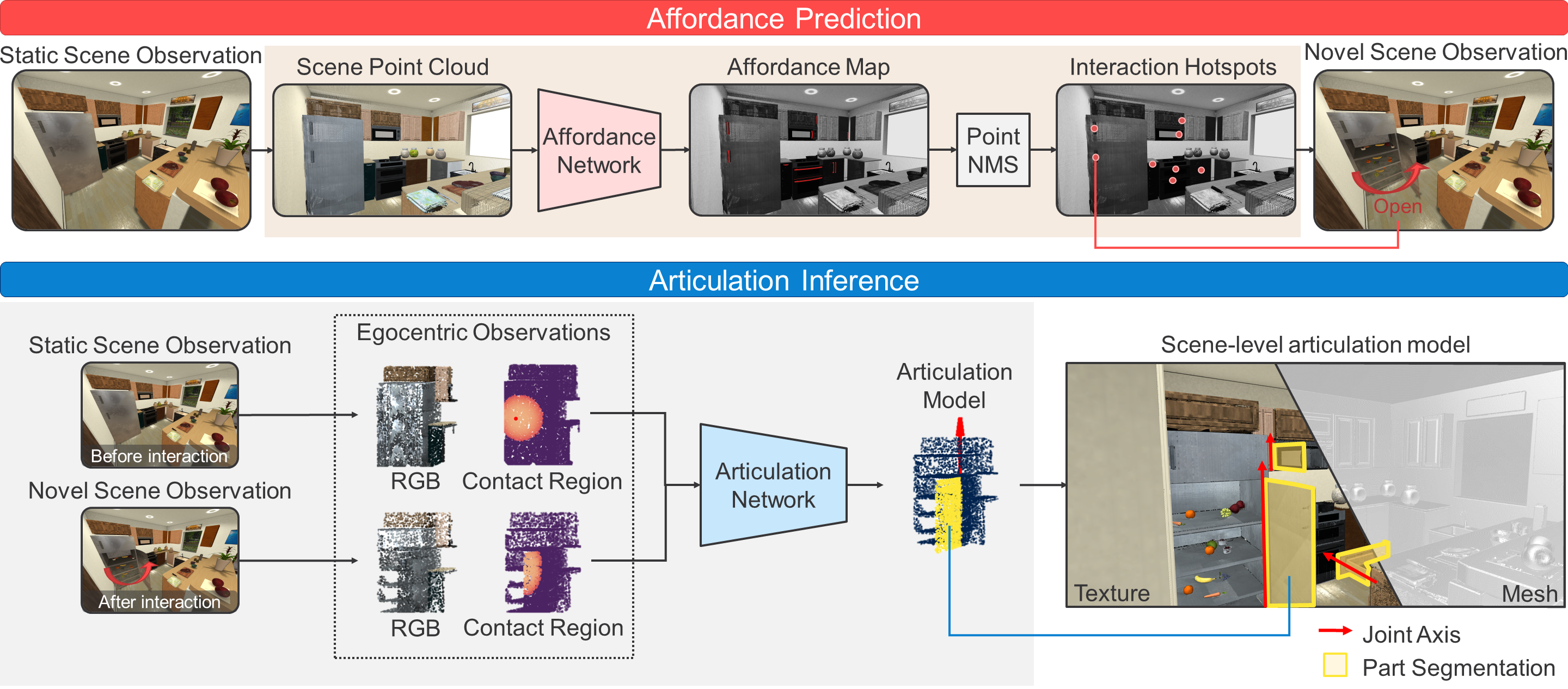

Our approach, named Ditto in the House, discovers possible articulated objects through affordance prediction, interacts with these objects to produce articulated motions, and infers the articulation properties from the visual observations before and after each interaction. The approach consists of two stages: affordance prediction and articulation inference. During affordance prediction, we pass the static scene point cloud into the affordance network and predict the scene-level affordance map. The robot then interacts with the objects at contact points derived from this map. During articulation inference, we feed the point cloud observations before and after each interaction into the articulation model network to obtain an articulation estimate. By aggregating the estimated articulation models, we build the articulation model of the entire scene.
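The two-stage flow described above can be sketched as a short Python skeleton. This is only an illustration under stated assumptions, not the repository's code: `Articulation`, `build_scene_model`, the three callables, and the score `threshold` used to pick contact points are all hypothetical names and choices introduced here.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]
PointCloud = Sequence[Point]


@dataclass
class Articulation:
    """Hypothetical container for one estimated articulation."""
    joint_type: str  # e.g. "revolute" or "prismatic"
    axis: Point      # joint axis direction
    contact: Point   # contact point whose interaction produced the motion


def build_scene_model(
    scene: PointCloud,
    predict_affordance: Callable[[PointCloud], List[float]],
    interact: Callable[[Point], Tuple[PointCloud, PointCloud]],
    infer_articulation: Callable[[PointCloud, PointCloud, Point], Articulation],
    threshold: float = 0.5,  # assumption: simple score cut-off for contact selection
) -> List[Articulation]:
    # Stage 1: affordance prediction on the static scene point cloud.
    scores = predict_affordance(scene)
    contacts = [p for p, s in zip(scene, scores) if s >= threshold]

    # Stage 2: interact at each contact point, then infer the articulation
    # from the observations before and after the interaction.
    scene_model = []
    for contact in contacts:
        before, after = interact(contact)
        scene_model.append(infer_articulation(before, after, contact))

    # Aggregating the per-interaction estimates gives the scene-level model.
    return scene_model


if __name__ == "__main__":
    # Stub components stand in for the networks and the robot.
    toy_scene = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    model = build_scene_model(
        toy_scene,
        predict_affordance=lambda pc: [0.9, 0.1, 0.7],
        interact=lambda c: (toy_scene, toy_scene),
        infer_articulation=lambda b, a, c: Articulation("revolute", (0.0, 0.0, 1.0), c),
    )
    print(len(model), "articulations estimated")
```

Passing the stages as callables keeps the sketch independent of any particular network or simulator; in the actual codebase these roles are split across the three modules.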

If you find our work useful in your research, please consider citing.

The codebase consists of three modules:

- DITH-igibson: interaction and observation collection in iGibson simulator
- DITH-pointnet: affordance prediction
- DITH-ditto: articulation inference

Create conda environments and install required packages by running

cd DITH-igibson

conda env create -f conda_env.yaml -n DITH-igibson

cd ../DITH-pointnet

conda env create -f conda_env.yaml -n DITH-pointnet

cd ../DITH-ditto

conda env create -f conda_env.yaml -n DITH-ditto

Build Ditto's dependencies by running

cd DITH-ditto && conda activate DITH-ditto

python scripts/convonet_setup.py build_ext --inplace

- Run

cd DITH-igibson && conda activate DITH-igibson

- Follow these instructions to import CubiCasa5k scenes into the iGibson simulator.

- Generate training and testing data by running

python room_dataset_generate.py

python room_dataset_split.py

python room_dataset_preprocess.py

The generated data can be found under dataset/cubicasa5k_rooms_processed.
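The three data generation steps above have to run in order, so a small driver that stops at the first failure can be convenient. The following is a minimal sketch, assuming it is launched from the DITH-igibson directory inside the activated DITH-igibson environment; `run_steps`, `build_commands`, and the `--run` flag are hypothetical helpers introduced here, and only the three script names come from this README.

```python
import subprocess
import sys
from typing import List, Sequence

# Script names taken from the steps above, in the order they must run.
ROOM_DATASET_STEPS = [
    "room_dataset_generate.py",
    "room_dataset_split.py",
    "room_dataset_preprocess.py",
]


def build_commands(scripts: Sequence[str], python: str = sys.executable) -> List[List[str]]:
    """Return one argv list per script, using the current interpreter."""
    return [[python, script] for script in scripts]


def run_steps(scripts: Sequence[str], dry_run: bool = False) -> List[List[str]]:
    """Run the scripts one after another; abort on the first non-zero exit."""
    commands = build_commands(scripts)
    for cmd in commands:
        print("running:", " ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if the script fails,
            # so later steps never run on incomplete data.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Hypothetical flag: only execute when --run is given, otherwise just print.
    run_steps(ROOM_DATASET_STEPS, dry_run="--run" not in sys.argv)
```

Using `sys.executable` keeps every step in the interpreter of the active conda environment rather than whichever `python` happens to be first on the path.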

- Run

cd DITH-pointnet && conda activate DITH-pointnet

- Set datadir in configs/train_pointnet2.yaml and configs/test_pointnet2.yaml.

- Train the model

python train.py

- Set ckpt_path in configs/test_pointnet2.yaml

- Test the model

python test.py

- Run

cd DITH-igibson && conda activate DITH-igibson

- Interact with the scene and save the results

python affordance_prediction_generate.py

- Collect novel scene observations

# generate articulation observation for training

python object_dataset_generate_train_set.py

# for testing

python object_dataset_generate_test_set.py

- Preprocess for further training

python object_dataset_preprocess.py

The generated data can be found under dataset/cubicasa5k_objects_processed.
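Before pointing the training configuration at the processed data, it can help to confirm that the output directory exists and is not empty. The helper below is a hedged sketch: `summarize_dataset` is a hypothetical function, and because this README does not describe the file layout inside the processed directories, it only counts files by extension and makes no claim about what those files contain.

```python
from collections import Counter
from pathlib import Path
from typing import Dict


def summarize_dataset(root: str) -> Dict[str, int]:
    """Count files under `root` (recursively), grouped by file extension."""
    root_path = Path(root)
    if not root_path.is_dir():
        raise FileNotFoundError(
            f"{root} does not exist; run the generation and preprocessing steps first"
        )
    counts = Counter(
        path.suffix or "<no extension>"
        for path in root_path.rglob("*")
        if path.is_file()
    )
    return dict(counts)


if __name__ == "__main__":
    # Path taken from the text above; adjust if the data lives elsewhere.
    try:
        print(summarize_dataset("dataset/cubicasa5k_objects_processed"))
    except FileNotFoundError as err:
        print(err)
```

An empty dictionary means the directory exists but contains no files, which usually indicates that an earlier step did not finish.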

- Run

cd DITH-ditto && conda activate DITH-ditto

- Set data_dir in configs/config.yaml.

- Train the model

python run.py experiment=Ditto

- Set resume_from_checkpoint in configs/experiment/Ditto_test.yaml

- Test the model

python run_test.py experiment=Ditto_test

- The codebase is based on the amazing Lightning-Hydra-Template.

- We use Ditto and PointNet++ as our backbone.

@inproceedings{Hsu2023DittoITH,

title={Ditto in the House: Building Articulation Models of Indoor Scenes through Interactive Perception},

author={Cheng-Chun Hsu and Zhenyu Jiang and Yuke Zhu},

booktitle={IEEE International Conference on Robotics and Automation (ICRA)},

year={2023}

}