Yifeng Zhu, Abhishek Joshi, Peter Stone, Yuke Zhu

Project | Paper | Simulation Datasets | Real-Robot Datasets | Real Robot Control

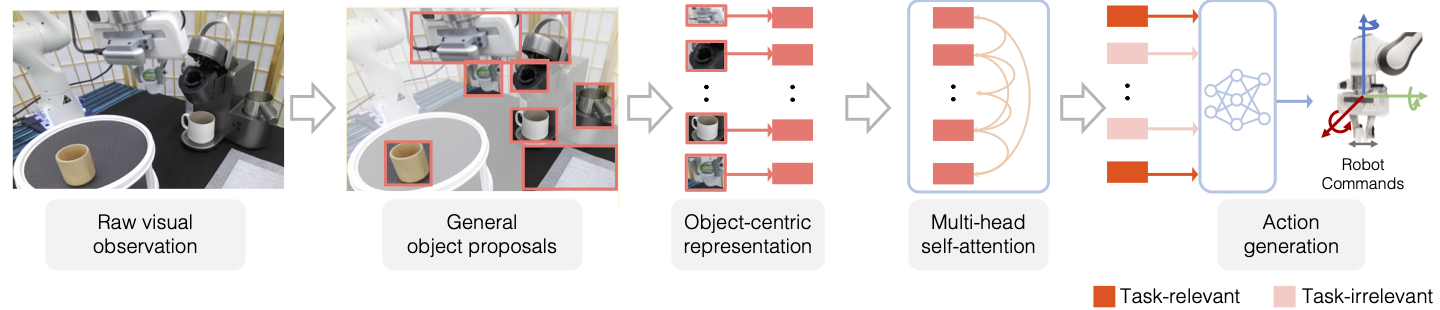

We introduce VIOLA, an object-centric imitation learning approach to learning closed-loop visuomotor policies for robot manipulation. Our approach constructs object-centric representations based on general object proposals from a pre-trained vision model. It uses a transformer-based policy to reason over these representations and attend to the task-relevant visual factors for action prediction. Such object-based structural priors improve the robustness of deep imitation learning algorithms against object variations and environmental perturbations. We quantitatively evaluate VIOLA in simulation and on real robots. VIOLA outperforms state-of-the-art imitation learning methods by 45.8% in success rate. It has also been deployed successfully on a physical robot to solve challenging long-horizon tasks, such as dining table arrangement and coffee making. More videos and model details can be found in the supplementary materials and on the project website: https://ut-austin-rpl.github.io/VIOLA.

This codebase does not include the real-robot experiment setup. If you are interested in the real-robot control infrastructure we use, please check out Deoxys. It comes with detailed documentation for getting started.

Clone the repo together with its submodules:

git clone --recurse-submodules git@github.com:UT-Austin-RPL/VIOLA.git

Then go into VIOLA/third_party and install each dependency according to its instructions: detectron2, Detic.

Then install all the other dependencies. The most important packages are torch, robosuite, and robomimic.

pip install -r requirements.txt

By default, we assume the dataset is collected through SpaceMouse teleoperation.

python data_generation/collect_demo.py --controller OSC_POSITION --num-demonstration 100 --environment stack-two-types --pos-sensitivity 1.5 --rot-sensitivity 1.5

Then create a dataset from the collected demonstration hdf5 file.

python data_generation/create_dataset.py --use-actions --use-camera-obs --dataset-name training_set --demo-file PATH_TO_DEMONSTRATION_DATA/demo.hdf5 --domain-name stack-two-types

Add color augmentation to the original dataset:

python data_generation/aug_post_processing.py --dataset-folder DATASET_FOLDER_NAME

Then we generate general object proposals using Detic models:

python data_generation/process_data_w_proposals.py --nms 0.05

To train a policy model with our generated dataset, run

python viola_bc/exp.py experiment=stack_viola ++hdf5_cache_mode="low_dim"
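The `experiment=stack_viola` and `++hdf5_cache_mode="low_dim"` arguments look like Hydra-style command-line overrides. That is an assumption based on the syntax, not something this README states. In that grammar, a plain `key=value` replaces an existing config entry, `+key=value` adds a new one, and `++key=value` adds the entry or overrides it if it already exists. The sketch below mimics those three rules on a flat dictionary. The `apply_override` helper and the default config values are hypothetical and are not part of this codebase.

```python
def apply_override(cfg: dict, override: str) -> dict:
    """Apply one Hydra-style override to a flat config dict (illustrative only)."""
    key, _, value = override.partition("=")
    value = value.strip('"')  # the shell usually strips these quotes already
    new_cfg = dict(cfg)
    if key.startswith("++"):
        # Add the key, or override it if it is already present.
        new_cfg[key[2:]] = value
    elif key.startswith("+"):
        # Add a new key; fail if it already exists.
        name = key[1:]
        if name in cfg:
            raise KeyError(f"{name} already exists; use ++ to override it")
        new_cfg[name] = value
    else:
        # Override an existing key; fail if it is missing.
        if key not in cfg:
            raise KeyError(f"{key} is not in the config; use + or ++ to add it")
        new_cfg[key] = value
    return new_cfg


if __name__ == "__main__":
    # Hypothetical defaults, chosen only for this illustration.
    cfg = {"experiment": "default"}
    for arg in ["experiment=stack_viola", '++hdf5_cache_mode="low_dim"']:
        cfg = apply_override(cfg, arg)
    print(cfg)
```

Under this reading, `++` is the safe choice when you are not sure whether `hdf5_cache_mode` is already defined in the selected experiment config.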

And for evaluation, run

python viola_bc/final_eval_script.py --state-dir checkpoints/stack --eval-horizon 1000 --hostname ./ --topk 20 --task-name normal
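For readers unfamiliar with the `--nms 0.05` option in the proposal-generation step above, here is a minimal sketch of greedy non-maximum suppression (NMS). It assumes the value is an IoU threshold applied to the Detic box proposals. This README does not confirm that, and the actual script may rely on a library routine instead. The `iou` and `nms` functions are illustrative and are not taken from this codebase.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any kept box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores, iou_threshold=0.05))
```

If the assumption holds, a threshold as low as 0.05 drops nearly every proposal that overlaps a higher-scoring one, which would leave a small set of mostly disjoint regions.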

We also make the datasets we used in our paper publicly available. You can download them:

Datasets:

Used datasets: datasets. Unzip the archive and rename the resulting folder to datasets. Note that our simulation datasets were collected with robosuite v1.3.0, so the textures of robots and floors in the datasets will not match robosuite v1.4.0.
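Because of the robosuite version note above, it can help to check which versions are installed before replaying the datasets. The sketch below uses only the Python standard library. It assumes the packages are installed under the distribution names `torch`, `robosuite`, and `robomimic`, and that any 1.3.x release matches the datasets. Both are assumptions, not statements from this README.

```python
from importlib import metadata
from typing import Optional

# The datasets were collected with robosuite v1.3.0 (major, minor).
EXPECTED_ROBOSUITE = (1, 3)


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def matches_dataset_version(version: str) -> bool:
    """True if the major.minor part of `version` equals EXPECTED_ROBOSUITE."""
    parts = version.split(".")
    try:
        return (int(parts[0]), int(parts[1])) == EXPECTED_ROBOSUITE
    except (IndexError, ValueError):
        return False


if __name__ == "__main__":
    for name in ("torch", "robosuite", "robomimic"):
        print(f"{name}: {installed_version(name) or 'not installed'}")
    rs = installed_version("robosuite")
    if rs is not None and not matches_dataset_version(rs):
        print(f"robosuite {rs} differs from v1.3.x; dataset textures may not match.")
```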

Checkpoints:

Best-performing checkpoints: checkpoints. Unzip the archive under the root folder of the repo and rename it to results.