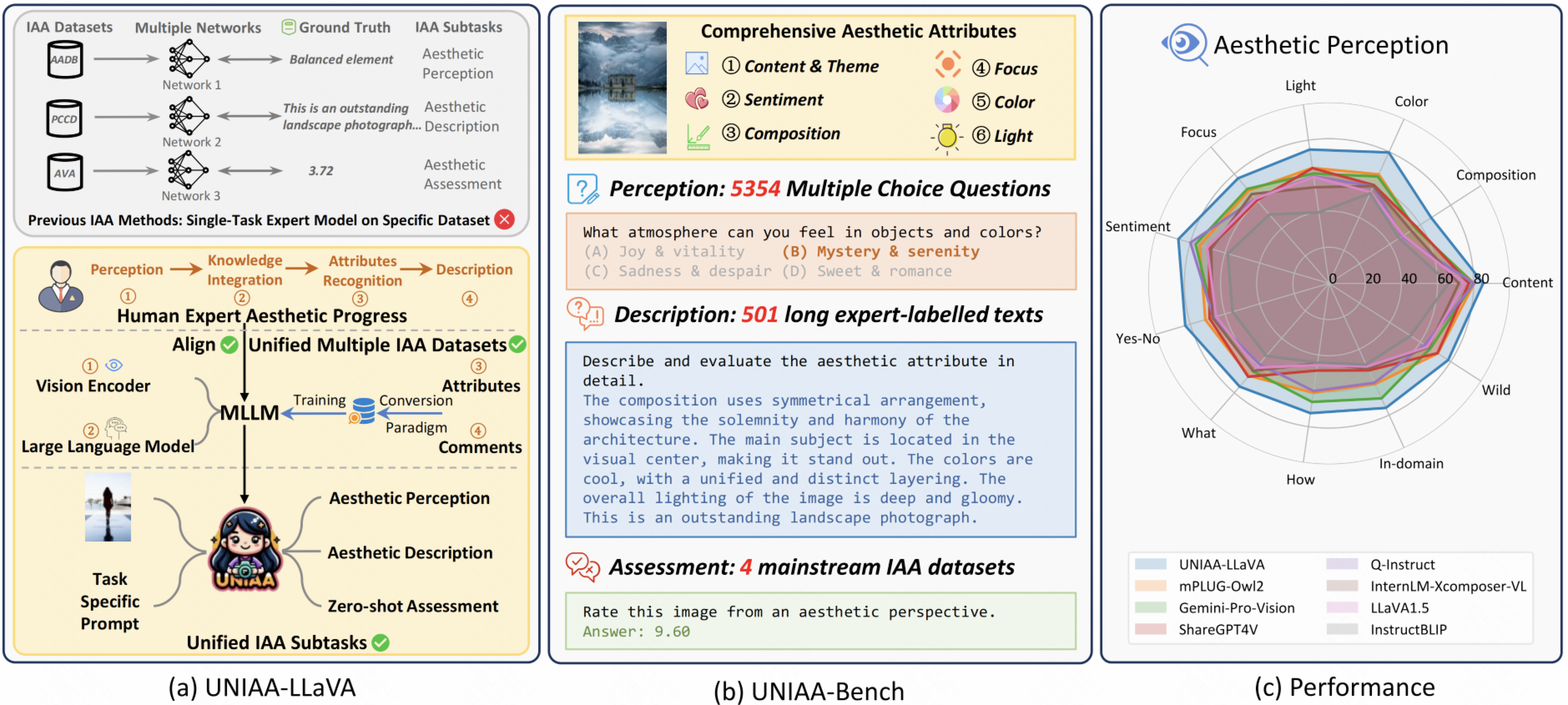

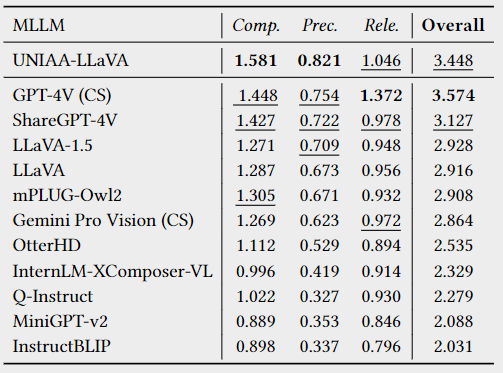

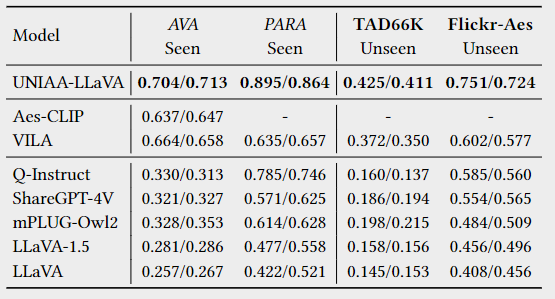

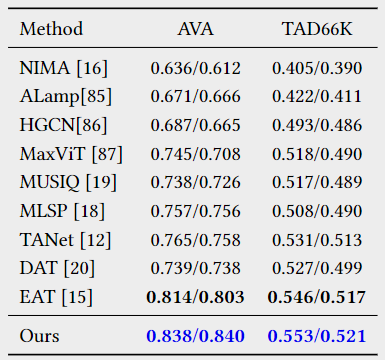

The Unified Multi-modal Image Aesthetic Assessment Framework, containing a baseline (a) and a benchmark (b). The aesthetic perception performance of UNIAA-LLaVA and other MLLMs is shown in (c).

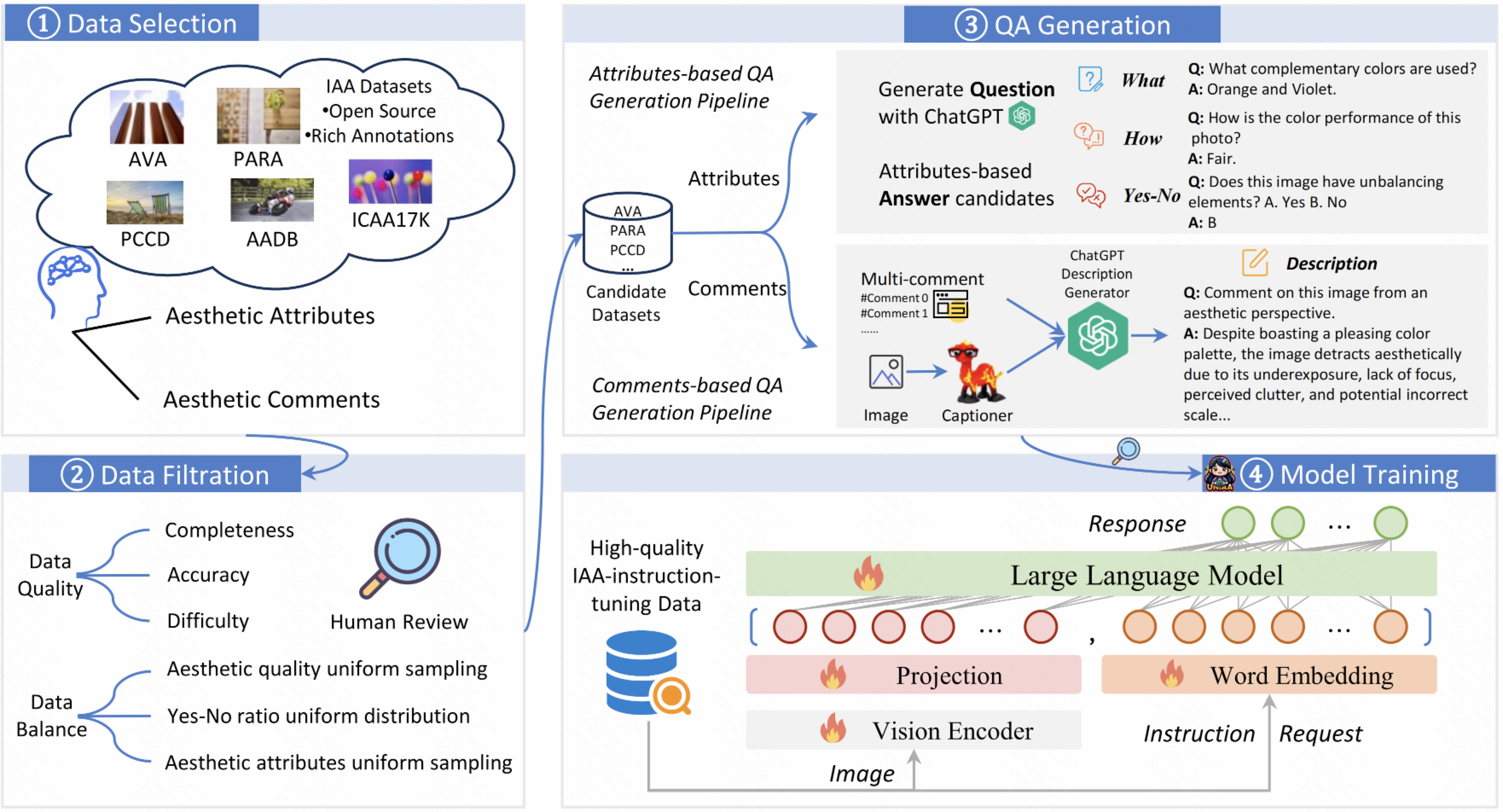

The IAA Datasets Conversion Paradigm for UNIAA-LLaVA.

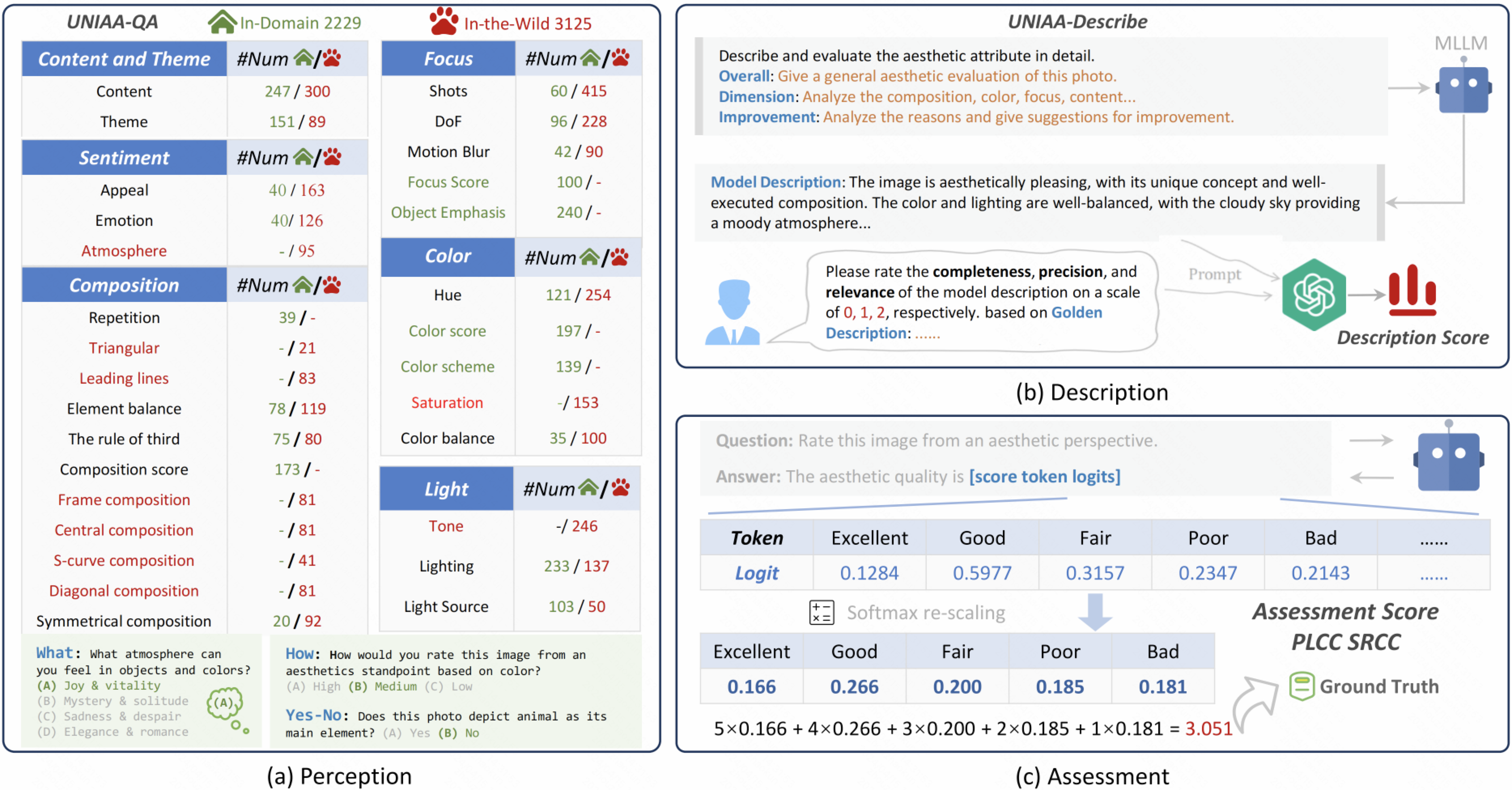

The UNIAA-Bench overview. (a) UNIAA-QA contains 5354 Image-Question-Answer samples and (b) UNIAA-Describe contains 501 Image-Description samples. (c) For open-source MLLMs, the output logits can be extracted to calculate the score.
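To make the logit-based scoring in (c) more concrete, here is a minimal sketch of one way a score could be derived from logits: take the model's logits for two opposing candidate tokens and apply a softmax over just that pair. The token pair (for example "good" versus "poor"), the function name `score_from_logits`, and the two-token formulation are assumptions for illustration; this page does not specify the exact procedure used in UNIAA-Bench.

```python
import math


def score_from_logits(logit_good: float, logit_poor: float) -> float:
    """Return a score in [0, 1] from two candidate-token logits.

    Hypothetical helper: assumes the score is the softmax probability of
    the positive token, computed over the (positive, negative) pair only.
    """
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(logit_good, logit_poor)
    e_good = math.exp(logit_good - m)
    e_poor = math.exp(logit_poor - m)
    return e_good / (e_good + e_poor)


if __name__ == "__main__":
    # Example logits are made up; real values would be read from the MLLM's
    # output distribution at the answer position.
    print(round(score_from_logits(2.0, 0.0), 4))
```

Under this assumption, equal logits give a neutral score of 0.5, and the score rises toward 1 as the positive token's logit grows relative to the negative one.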

- [4/15] 🔥 We built the UNIAA project page!

If you find UNIAA useful for your research and applications, please cite it using this BibTeX:

    @misc{zhou2024uniaa,
      title={UNIAA: A Unified Multi-modal Image Aesthetic Assessment Baseline and Benchmark},
      author={Zhaokun Zhou and Qiulin Wang and Bin Lin and Yiwei Su and Rui Chen and Xin Tao and Amin Zheng and Li Yuan and Pengfei Wan and Di Zhang},
      year={2024},
      eprint={2404.09619},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
    }

If you have any questions, please feel free to email wangqiulin@kuaishou.com and zhouzhaokun@stu.pku.edu.cn.