Dynamic-OFA

Official repository for the paper 'Dynamic-OFA: Runtime DNN Architecture Switching for Performance Scaling on Heterogeneous Embedded Platforms'.

Dynamic-OFA: Runtime DNN Architecture Switching for Performance Scaling on Heterogeneous Embedded Platforms,

Wei Lou*, Lei Xun*, Amin Sabet, Jia Bi, Jonathon Hare, Geoff V. Merrett

In Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2021

arXiv preprint (arXiv 2105.03596)

Please also check out our talk at the CVPR 2021 ECV workshop, and our recent invited seminars at the Arm Research ML Group on 28 July 2021 and at the University of Cambridge Machine Learning Systems Lab on 25 August 2021 (no recording).

About Dynamic-OFA

Motivation

Deep neural networks are typically compressed before being deployed on embedded platforms because they are computationally intensive for such platforms. However, the hardware resources assumed at design time (A & E) may not be available at runtime (B, C, D & F, G, H).

To solve this issue, we propose Dynamic-OFA, which contains a library of optimal sub-networks offering different accuracy-latency trade-offs. At runtime, Dynamic-OFA selects and switches to the optimal sub-network architecture to fit the time-varying available hardware resources.

Workflow of Dynamic-OFA

Dynamic-OFA uses a pre-trained Once-for-All (OFA) network, which contains 2 × 10^19 sub-network architectures, as its backbone. At the offline stage, sub-network architectures are sampled from OFA for both CPU and GPU. These architectures differ in performance (e.g. latency and accuracy) and are stored in a look-up table, building a dynamic version of OFA without any additional training. At the online stage, a runtime manager chooses the optimal sub-network so that performance requirements are constantly met as hardware availability changes.
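As a rough illustration of the offline/online split, the sketch below stores sub-networks in a look-up table and, at runtime, picks the most accurate entry that fits a latency budget. This is a minimal sketch, not the code in this repository: the `SubNet` fields, the "most accurate feasible entry" selection rule and all numbers are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SubNet:
    """One look-up table entry (hypothetical fields)."""
    level: int          # position in the library; higher = larger, more accurate
    latency_ms: float   # measured offline on the target device
    top1: float         # measured offline top-1 accuracy (%)


def select_subnet(lut: List[SubNet], latency_budget_ms: float) -> Optional[SubNet]:
    """Return the most accurate entry whose latency fits the budget, or None."""
    feasible = [s for s in lut if s.latency_ms <= latency_budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda s: s.top1)


# Hypothetical LUT for one device (placeholder numbers, not results from the paper).
GPU_LUT = [
    SubNet(level=1, latency_ms=10.0, top1=70.0),
    SubNet(level=2, latency_ms=14.0, top1=73.0),
    SubNet(level=3, latency_ms=19.0, top1=75.0),
    SubNet(level=4, latency_ms=25.0, top1=76.5),
]

print(select_subnet(GPU_LUT, 20.0))
# -> SubNet(level=3, latency_ms=19.0, top1=75.0)
```

Because the table is built offline, the online step is only a scan over a handful of entries, which keeps switching cheap.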

Comparison with SOTA

Runtime manager example

The sub-network architecture of Dynamic-OFA can be switched so that software performance constraints are constantly met even when fewer computing resources are available. The above figure shows results where GPU computing resources are shared between Dynamic-OFA and a DNN training task. The training task starts to run at 2500 ms (denoted by 'X'), and Dynamic-OFA becomes slower (see the separation in the green line) since fewer GPU cores are available to it. The sub-network architecture is gradually switched from sub-network level 4 to level 2 to meet the latency constraint, temporarily trading away 2.6% accuracy.
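The level switching described above can be sketched as a simple feedback loop. This is a hypothetical illustration, not the repository's runtime manager: it assumes the manager moves at most one level per control period, that levels are consecutive integers, that contention scales every level's latency by the same factor, and it uses made-up latencies.

```python
from typing import Dict, List


def step_level(level: int, measured_ms: float, constraint_ms: float,
               predicted_up_ms: float, min_level: int, max_level: int) -> int:
    """One control step: move at most one sub-network level per period."""
    if measured_ms > constraint_ms and level > min_level:
        return level - 1  # too slow: switch to a faster, less accurate sub-network
    if predicted_up_ms <= constraint_ms and level < max_level:
        return level + 1  # headroom: switch back to a more accurate sub-network
    return level


def simulate(nominal_ms: Dict[int, float], slowdown: List[float],
             constraint_ms: float, start_level: int) -> List[int]:
    """Return the level in use during each control period.

    slowdown[t] scales every level's nominal latency, mimicking GPU contention
    from a co-running task (a simplifying assumption).
    """
    lo, hi = min(nominal_ms), max(nominal_ms)
    level = start_level
    trace: List[int] = []
    for factor in slowdown:
        trace.append(level)
        measured = nominal_ms[level] * factor
        # Estimated latency of the next level up under the current contention.
        up = nominal_ms[level + 1] * factor if level < hi else float("inf")
        level = step_level(level, measured, constraint_ms, up, lo, hi)
    return trace


# Hypothetical nominal latencies (ms) for levels 1-4; not measurements from the paper.
NOMINAL = {1: 10.0, 2: 14.0, 3: 19.0, 4: 25.0}
SLOWDOWN = [1.0, 1.0, 1.8, 1.8, 1.8, 1.8, 1.0, 1.0, 1.0]  # contention in periods 2-5
print(simulate(NOMINAL, SLOWDOWN, constraint_ms=30.0, start_level=4))
# -> [4, 4, 4, 3, 2, 2, 2, 3, 4]
```

Stepping one level at a time mirrors the gradual 4 → 2 switch in the figure and avoids oscillating between distant levels on a single noisy measurement.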

How to use / evaluate Dynamic-OFA Network

Search for optimal sub-networks

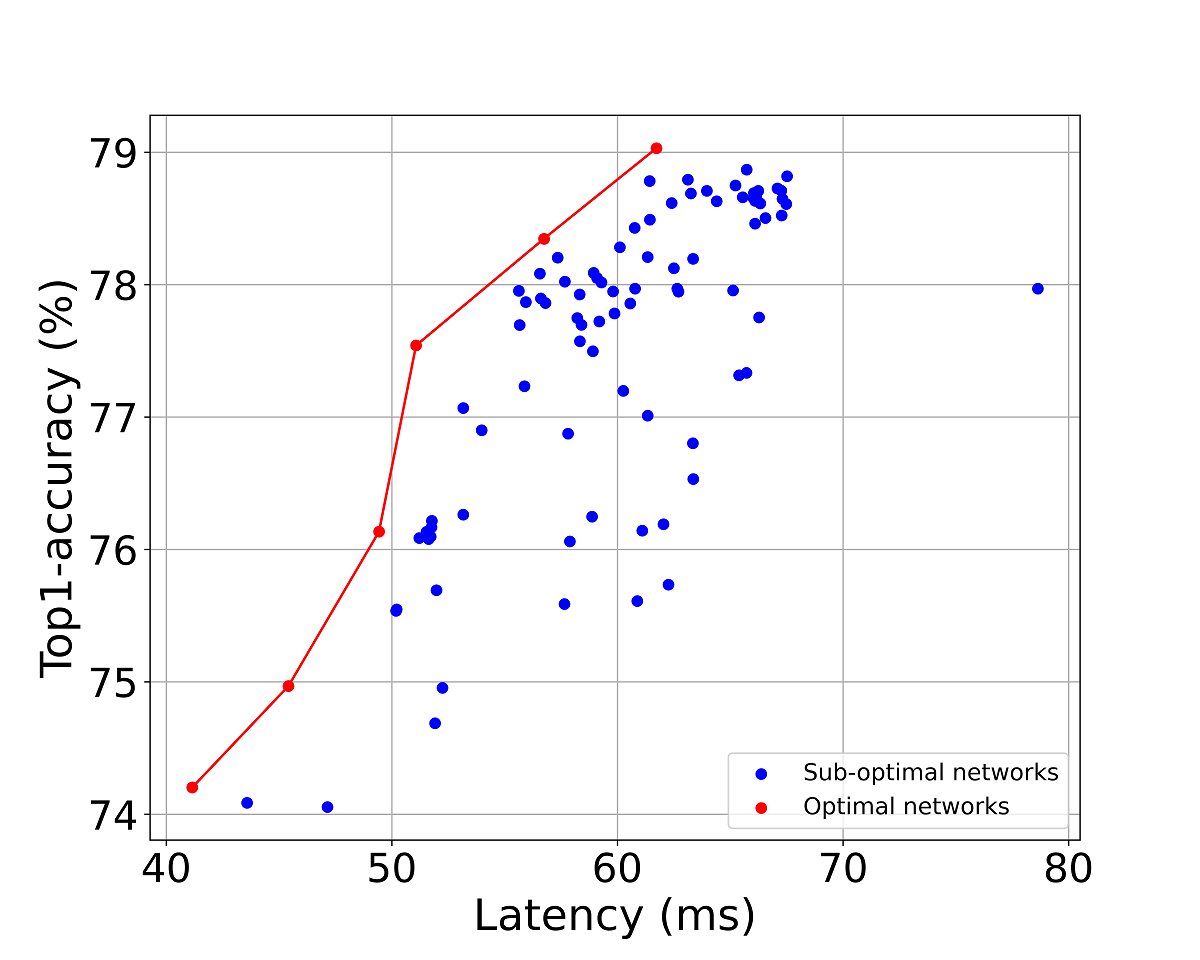

The search process aims to find the optimal sub-networks, i.e. those on the Pareto front, among all the sub-networks of the OFA model.

This code can be used for different mobile and embedded devices. The accuracy predictor and the FLOPs look-up table are the same for all devices and are stored in the optimal_search/flop&latency/checkpoints directory; however, a specialized latency look-up table needs to be built for each device.
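To see why the latency table must be rebuilt per device while the accuracy predictor is shared, consider a latency predictor that sums per-block entries. This is a hypothetical sketch: the key format (block index, kernel size, expand ratio), the additivity assumption and the numbers are illustrative and do not reflect this repository's table format.

```python
from typing import Dict, List, Tuple

BlockKey = Tuple[int, int, int]  # hypothetical: (block index, kernel size, expand ratio)


def predict_latency(lut: Dict[BlockKey, float], arch: List[BlockKey],
                    overhead_ms: float = 0.0) -> float:
    """Predict latency as the sum of per-block LUT entries (assumes additivity)."""
    missing = [key for key in arch if key not in lut]
    if missing:
        raise KeyError(f"no LUT entry for: {missing}")
    return overhead_ms + sum(lut[key] for key in arch)


# Placeholder per-device tables: same keys, different costs (ms).
CPU_LUT = {(0, 3, 3): 2.0, (0, 5, 6): 4.0, (1, 3, 3): 1.5, (1, 5, 6): 3.0}
GPU_LUT = {(0, 3, 3): 0.5, (0, 5, 6): 0.75, (1, 3, 3): 0.25, (1, 5, 6): 0.5}

arch = [(0, 5, 6), (1, 3, 3)]
print(predict_latency(CPU_LUT, arch), predict_latency(GPU_LUT, arch))  # -> 5.5 1.0
```

The same architecture gets very different predicted latencies on the two tables, whereas its predicted accuracy and FLOPs would not change, which is why only the latency table is device-specific.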

The search can be constrained either by latency or by FLOPs; the two modes differ only in which pre-calculated look-up table is used. After searching for a certain number of sub-networks, please re-measure their latency and accuracy on your device, since the predictor and look-up table are not 100% accurate, and then use the re-measured data to build a latency-accuracy scatter plot and find the points on the Pareto front.
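Once latency and accuracy have been re-measured, the Pareto-optimal points can be extracted without plotting. The sketch below is a generic non-dominated filter, not a script from this repository; the `(name, latency_ms, top1)` tuple layout and the sample values are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[str, float, float]  # (name, latency_ms, top1_accuracy), hypothetical layout


def pareto_front(points: List[Point]) -> List[Point]:
    """Return points not dominated by any other (lower latency and higher accuracy are better)."""
    # Sort by latency ascending; among equal latencies, the most accurate comes first.
    ordered = sorted(points, key=lambda p: (p[1], -p[2]))
    front: List[Point] = []
    best_acc = float("-inf")
    for point in ordered:
        # A point survives only if it beats every faster (or equally fast) point.
        if point[2] > best_acc:
            front.append(point)
            best_acc = point[2]
    return front


# Placeholder measurements, not results from the paper.
measured = [("a", 10.0, 70.0), ("b", 12.0, 69.0), ("c", 15.0, 74.0),
            ("d", 15.0, 72.0), ("e", 20.0, 76.0), ("f", 22.0, 75.5)]
print([p[0] for p in pareto_front(measured)])  # -> ['a', 'c', 'e']
```

Sorting once makes the filter O(n log n), which stays fast even when many candidate sub-networks are re-measured.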

Search

For latency-based search:

Search without accuracy constraint: python optimal_search/latency/search.py (the latency value corresponds to that in evolution_finder.py)

Search with accuracy constraint: python optimal_search/latency/search_accu.py (the latency value corresponds to that in evolution_finder_accu.py)

For FLOPs-based search:

python optimal_search/flop/flop_search.py
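The search scripts above rely on an evolutionary finder. The sketch below shows a generic evolutionary search (mutation plus crossover, keeping only candidates within the latency budget) over a hypothetical architecture encoding. `toy_accuracy` and `toy_latency` stand in for the accuracy predictor and latency look-up table, and none of the names or hyperparameters are taken from evolution_finder.py.

```python
import random
from typing import Callable, List, Optional, Tuple

KERNELS = (3, 5, 7)  # hypothetical per-block choices
EXPANDS = (3, 4, 6)
NUM_BLOCKS = 5

Arch = Tuple[Tuple[int, int], ...]  # one (kernel, expand) pair per block


def random_arch(rng: random.Random) -> Arch:
    return tuple((rng.choice(KERNELS), rng.choice(EXPANDS)) for _ in range(NUM_BLOCKS))


def mutate(arch: Arch, rng: random.Random, prob: float) -> Arch:
    # Resample each block independently with probability `prob`.
    return tuple((rng.choice(KERNELS), rng.choice(EXPANDS)) if rng.random() < prob else block
                 for block in arch)


def crossover(a: Arch, b: Arch, rng: random.Random) -> Arch:
    # Take each block from either parent with equal probability.
    return tuple(rng.choice(pair) for pair in zip(a, b))


def evolve(acc_fn: Callable[[Arch], float], lat_fn: Callable[[Arch], float],
           max_latency: float, population: int = 20, parents: int = 5,
           generations: int = 15, mutate_prob: float = 0.2,
           seed: int = 0) -> Optional[Arch]:
    """Return the most accurate architecture found within max_latency, or None."""
    rng = random.Random(seed)
    # Initial population: random feasible architectures (bounded number of attempts).
    pop: List[Arch] = []
    for _ in range(population * 100):
        if len(pop) == population:
            break
        cand = random_arch(rng)
        if lat_fn(cand) <= max_latency:
            pop.append(cand)
    if not pop:
        return None
    for _ in range(generations):
        pop.sort(key=acc_fn, reverse=True)
        elite = pop[:parents]
        children: List[Arch] = []
        # A mutation that changes nothing reproduces a feasible parent, so feasible
        # children keep appearing with non-zero probability and the loop ends.
        while len(children) < population - len(elite):
            p1, p2 = rng.choice(elite), rng.choice(elite)
            child = mutate(p1, rng, mutate_prob) if rng.random() < 0.5 else crossover(p1, p2, rng)
            if lat_fn(child) <= max_latency:
                children.append(child)
        pop = elite + children
    return max(pop, key=acc_fn)


def toy_latency(arch: Arch) -> float:
    # Stand-in for a latency look-up table (hypothetical costs in ms).
    return sum(0.5 * k + 1.0 * e for k, e in arch)


def toy_accuracy(arch: Arch) -> float:
    # Stand-in for the accuracy predictor: larger kernels/expansions score higher.
    return sum(1.0 * k + 2.0 * e for k, e in arch)


best = evolve(toy_accuracy, toy_latency, max_latency=30.0)
print(best, toy_latency(best), toy_accuracy(best))
```

Swapping `toy_latency` for a FLOPs function turns the same loop into a FLOPs-constrained search, which matches the idea that the two modes differ only in the look-up table used.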

Evaluation

python optimal_search/latency/evaluation.py (For GPU)

python optimal_search/latency/evaluation_cpu.py (For CPU)

Please change the device used by all the functions in imagenet_eval_helper.py to match your target (GPU or CPU).

Examples of optimal search results

Runtime management

Here we provide a simple runtime demo in /runtime_demo/runtime_adjustment. In this directory, nets_6.pickle and nets_7.pickle contain the selected optimal models. Users can run run.sh to see the runtime adjustment between different sub-networks under latency and accuracy constraints.

To run the demo, use "sh run.sh"; users can change parameters in this file, such as the initial model.

The code in /runtime_demo/time/test1.py measures the latency of each selected sub-network.
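For reference, a per-sub-network latency measurement usually discards warm-up runs and reports a robust statistic such as the median. The helper below is a generic sketch, not the contents of test1.py; `measure_latency_ms` and its parameters are hypothetical. For GPU inference, the callable should synchronize before returning (e.g. call `torch.cuda.synchronize()`), because CUDA kernels launch asynchronously and would otherwise be under-timed.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(run_once: Callable[[], object], warmup: int = 10,
                       repeats: int = 50) -> Dict[str, float]:
    """Time a zero-argument inference call and return summary statistics in ms."""
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    # Warm-up runs (lazy initialisation, caches, clock ramp-up) are not recorded.
    for _ in range(warmup):
        run_once()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "median": statistics.median(samples),
        "mean": statistics.mean(samples),
        "min": min(samples),
        "max": max(samples),
    }


# Usage with a dummy CPU workload standing in for a sub-network forward pass.
stats = measure_latency_ms(lambda: sum(range(10000)), warmup=5, repeats=20)
print(stats["median"])
```

The median is less sensitive than the mean to occasional spikes from background processes, which matters on shared embedded platforms.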

Requirement

- PyTorch and Torchvision (if you use an NVIDIA Jetson platform, please install from here)

- Python 3.6+

- ImageNet dataset

Q&A and feedback

We are continually improving the readability and usability of our codebase. Feedback and questions about our paper and code are welcome; please leave them as GitHub issues.

Related papers and talks

- [DATE 2020] Optimising Resource Management for Embedded Machine Learning (Paper)

- [TinyML EMEA] Runtime DNN Performance Scaling through Resource Management on Heterogeneous Embedded Platforms (Talk)

- [KTN & eFutures Online Webinar] Adapting AI to Available Resource in Mobile/Embedded Devices (Talk)

Acknowledgements

This work was supported in part by the Engineering and Physical Sciences Research Council (EPSRC) under Grant EP/S030069/1, and by the International Centre for Spatial Computational Learning.

Thanks to the amazing authors of the paper Once-for-All: Train One Network and Specialize it for Efficient Deployment for open-sourcing their code and models, on which this project is built. Please check out their work at MIT Han Lab.