I have added rename_model.py to the download link below.

Download link: here. The code is exactly the same as the code I used for my demo website. (Sorry, I have not had time to polish it...)

Simplified tutorial: use the functions getInputPhoto and processImg in TF.py.

So many people have asked me to release the code that I am putting it here, together with the data, even though it is unpolished and as ugly as I am. I also provide the supervised code. The code contains a lot of unnecessary parts, and I will refactor it ASAP. Regarding the data, I list the names of the images we used from the MIT-Adobe FiveK dataset. I used Lightroom to decode the images to TIF format and to resize them so that the long side is 512 pixels (the label images are from retoucher C). I am not sure whether I have the right to release the HDR dataset we collected from Flickr, so I provide the image IDs instead; you can download the images according to the IDs. (The code was run on TensorFlow 0.12. The A-WGAN part of the code does not implement decreasing the lambda, since the initial lambda was relatively small in that case.)
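For anyone preparing images without Lightroom, the arithmetic behind "resize the long side to 512" can be sketched as below. This is only an illustration of the size computation; the function name `resize_long_side` is hypothetical and not part of the released code, and the actual resampling was done in Lightroom.

```python
def resize_long_side(width, height, long_side=512):
    """Return (new_width, new_height) with the longer edge scaled to long_side.

    Hypothetical helper: it only computes the target size and keeps the
    aspect ratio; it does not touch any pixels.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    longest = max(width, height)
    # Scale both edges by the same factor and round to whole pixels,
    # never letting the short edge collapse to zero.
    new_w = max(1, round(width * long_side / longest))
    new_h = max(1, round(height * long_side / longest))
    return new_w, new_h


print(resize_long_side(6000, 4000))  # (512, 341)
```

The resulting size can then be passed to whatever image library you prefer for the actual resampling.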

Some useful issues: #6, #16, #18, #24, #27, #38, #39

| Method | Description |
|---|---|
| Label | Retouched by photographer from MIT-Adobe 5K dataset [1] |
| Our (HDR) | Our model trained on our HDR dataset with unpaired data |
| Our (SL) | Our model trained on MIT-Adobe 5K dataset with paired data (supervised learning) |
| Our (UL) | Our model trained on MIT-Adobe 5K dataset with unpaired data |
| CycleGAN (HDR) | CycleGAN's model [2] trained on our HDR dataset with unpaired data |
| DPED_device | DPED's model [3] trained on a specified device with paired data (supervised learning) |
| CLHE | Heuristic method from [4] |
| NPEA | Heuristic method from [5] |
| FLLF | Heuristic method from [6] |

| Input | Label | Our (HDR) |
|---|---|---|
| Our (SL) | Our (UL) | CycleGAN (HDR) |
| DPED_iPhone6 | DPED_iPhone7 | DPED_Nexus5x |
| CLHE | NPEA | FLLF |

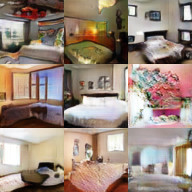

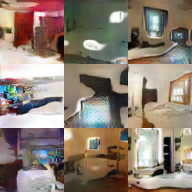

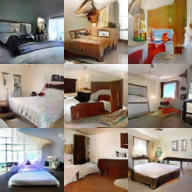

| Input (MIT-Adobe) | Our (HDR) | DPED_iPhone7 | CLHE |
|---|---|---|---|

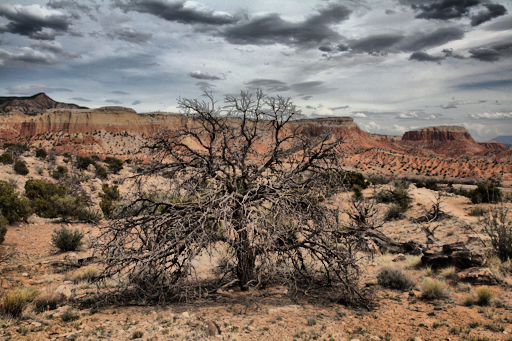

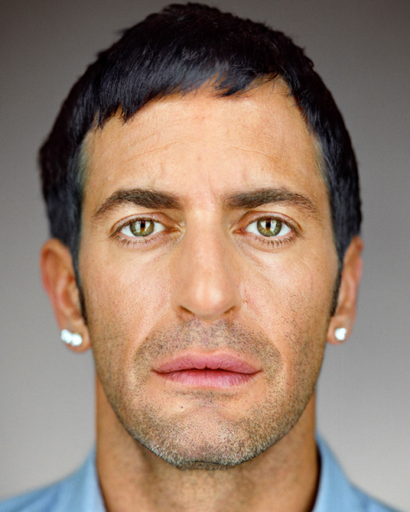

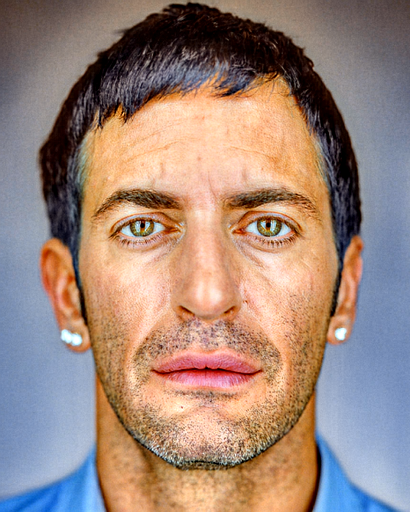

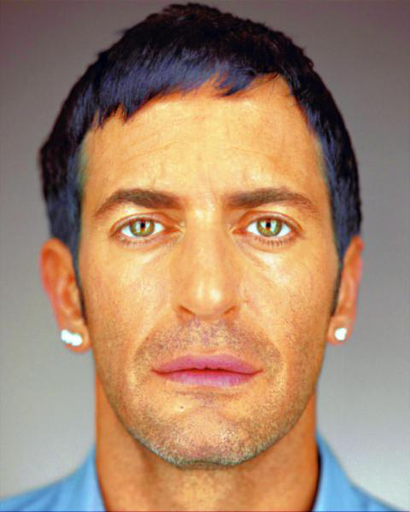

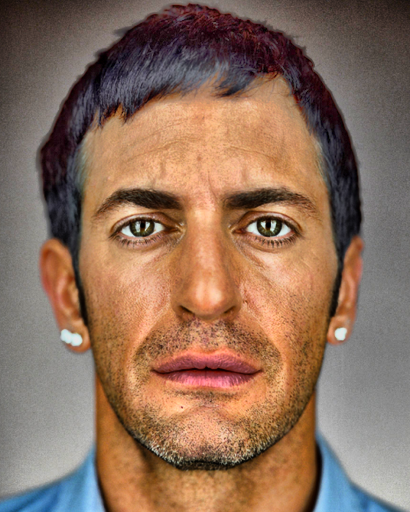

| Input (Internet) | Our (HDR) | DPED_iPhone7 | CLHE |
|---|---|---|---|

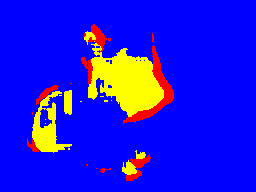

Preference matrix (20 participants and 20 images using pairwise comparisons). Each cell counts how often the row method was preferred over the column method.

| | CycleGAN | DPED | NPEA | CLHE | Ours | Total |
|---|---|---|---|---|---|---|
| CycleGAN | - | 32 | 27 | 23 | 11 | 93 |
| DPED | 368 | - | 141 | 119 | 29 | 657 |
| NPEA | 373 | 259 | - | 142 | 50 | 824 |
| CLHE | 377 | 281 | 258 | - | 77 | 993 |
| Ours | 389 | 371 | 350 | 323 | - | 1433 |

Our model trained on HDR images ranked first and CLHE was the runner-up. When comparing our model with CLHE, our results were preferred in 81% of the comparisons (323 of 400).
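The totals and the 81% figure can be re-derived from the matrix with a few lines of Python. This is just a sanity-check sketch: the variable and function names are made up for illustration, and the cell values are copied from the table (row method preferred over column method, 20 participants × 20 images = 400 comparisons per pair).

```python
methods = ["CycleGAN", "DPED", "NPEA", "CLHE", "Ours"]

# wins[i][j] = number of comparisons in which methods[i] beat methods[j].
wins = [
    [0, 32, 27, 23, 11],
    [368, 0, 141, 119, 29],
    [373, 259, 0, 142, 50],
    [377, 281, 258, 0, 77],
    [389, 371, 350, 323, 0],
]
COMPARISONS_PER_PAIR = 20 * 20  # participants x images

# Row sums give the "Total" column; sorting by them gives the ranking.
totals = {m: sum(row) for m, row in zip(methods, wins)}
ranking = sorted(methods, key=totals.get, reverse=True)


def preference_rate(a, b):
    """Fraction of comparisons in which method a was preferred over b."""
    i, j = methods.index(a), methods.index(b)
    return wins[i][j] / COMPARISONS_PER_PAIR


print(totals)
print(ranking)
print(f"Ours vs. CLHE: {preference_rate('Ours', 'CLHE'):.1%}")
```

Each pair of mirrored cells sums to 400, which is a quick way to confirm the table was transcribed correctly.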

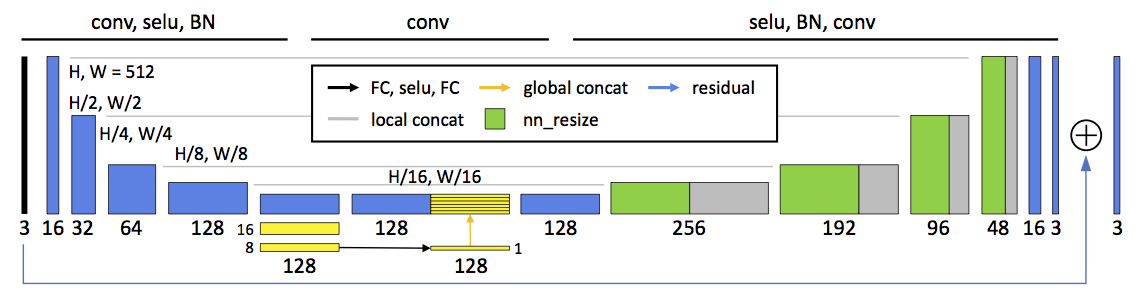

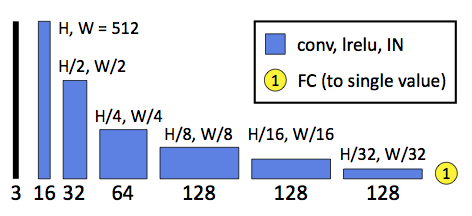

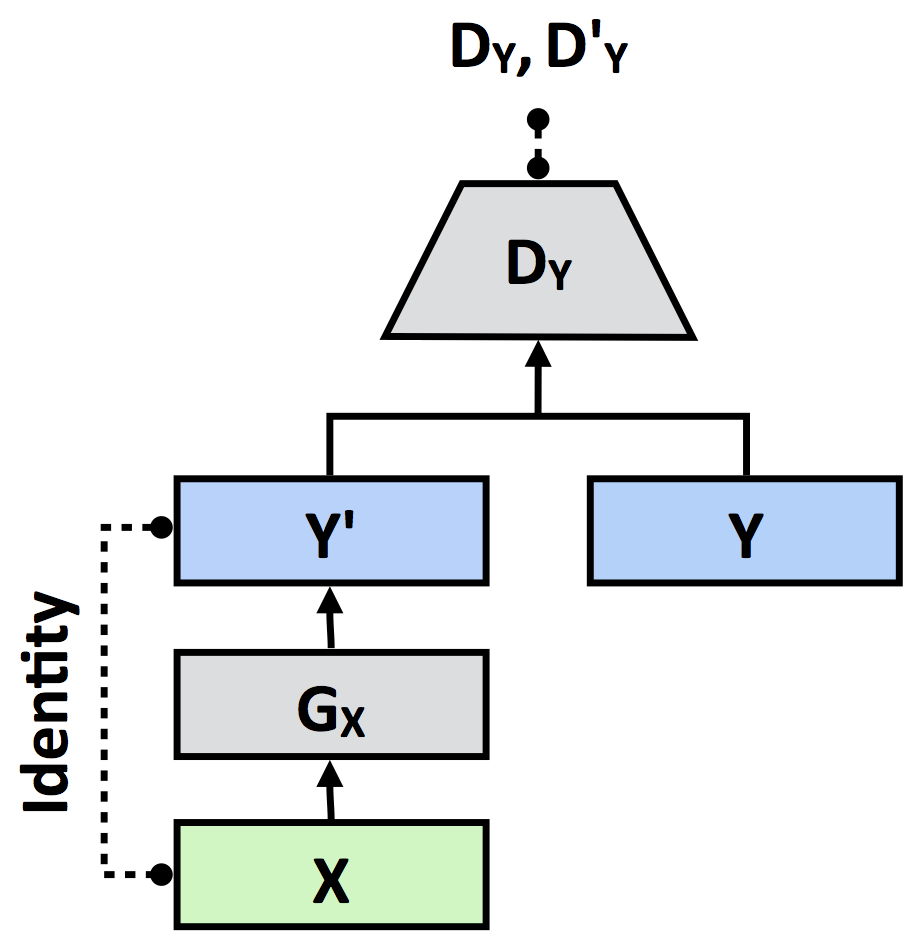

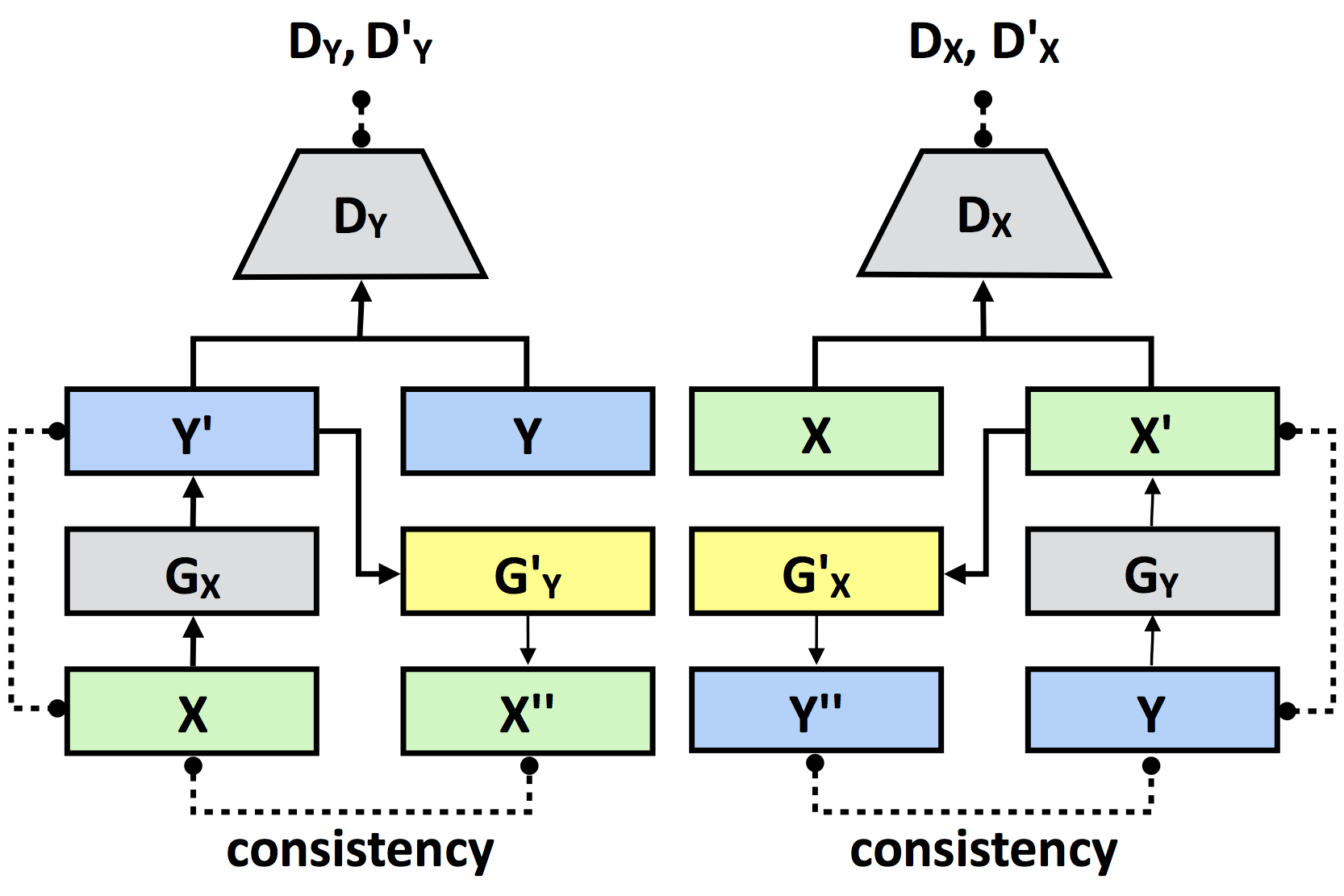

This paper proposes three improvements: global U-Net, adaptive WGAN (A-WGAN), and individual batch normalization (iBN). They generally improve results, and for some applications the improvement is enough to cross the bar and lead to success. We have also applied them to some other applications.

| | λ = 0.1 | λ = 10 | λ = 1000 |
|---|---|---|---|
| WGAN-GP | | | |
| A-WGAN | | | |

With different λ values, WGAN-GP could succeed or fail. The proposed A-WGAN is less dependent on λ and succeeded with all three λ values.
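To make the role of λ concrete, here is a rough, framework-free sketch of a gradient penalty weighted by λ. The two-sided form is the standard WGAN-GP penalty, λ · mean((‖g‖ − 1)²). The one-sided variant and the `adapt_lambda` rule (including its bounds) are assumptions made purely for illustration of how a penalty weight could adapt; they are not taken from the released code, which does not adjust λ during training.

```python
import math


def grad_norm(grad):
    """Euclidean norm of one gradient vector (a list of floats)."""
    return math.sqrt(sum(g * g for g in grad))


def wgan_gp_penalty(grads, lam):
    """Standard WGAN-GP penalty: lam * mean((||g|| - 1)^2)."""
    return lam * sum((grad_norm(g) - 1.0) ** 2 for g in grads) / len(grads)


def one_sided_penalty(grads, lam):
    """Assumed one-sided variant: only norms above 1 are penalized."""
    return lam * sum(max(0.0, grad_norm(g) - 1.0) for g in grads) / len(grads)


def adapt_lambda(lam, avg_norm, lower=1.001, upper=1.05):
    """Hypothetical update rule; the bounds are illustrative values only.

    If the running average of gradient norms is too large, the penalty is
    too weak, so lam is doubled; if it is too small, lam is halved.
    """
    if avg_norm > upper:
        return lam * 2.0
    if avg_norm < lower:
        return lam / 2.0
    return lam


# Two sample gradients with norms 5.0 and 0.5.
sample_grads = [[3.0, 4.0], [0.0, 0.5]]
print(wgan_gp_penalty(sample_grads, lam=10.0))
print(one_sided_penalty(sample_grads, lam=10.0))
print(adapt_lambda(10.0, avg_norm=2.0))
```

With a fixed λ, the penalty's strength relative to the critic loss depends entirely on the initial choice, which is one way to read the sensitivity shown in the table.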

| Generator | |
|---|---|
| Discriminator | |
| 1-way GAN | 2-way GAN |

Yu-Sheng Chen, Yu-Ching Wang, Man-Hsin Kao and Yung-Yu Chuang.

Deep Photo Enhancer: Unpaired Learning for Image Enhancement from Photographs with GANs. Proceedings of IEEE International Conference on Computer Vision and Pattern Recognition 2018 (CVPR 2018), to appear, June 2018, Salt Lake City, USA.

@INPROCEEDINGS{Chen:2018:DPE,
  AUTHOR = {Yu-Sheng Chen and Yu-Ching Wang and Man-Hsin Kao and Yung-Yu Chuang},
  TITLE = {Deep Photo Enhancer: Unpaired Learning for Image Enhancement from Photographs with GANs},
  YEAR = {2018},
  MONTH = {June},
  BOOKTITLE = {Proceedings of IEEE International Conference on Computer Vision and Pattern Recognition (CVPR 2018)},
  PAGES = {6306--6314},
  LOCATION = {Salt Lake City},
}

1. Bychkovsky, V., Paris, S., Chan, E., Durand, F.: Learning photographic global tonal adjustment with a database of input/output image pairs. In: Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition. pp. 97-104. CVPR'11 (2011)
2. Zhu, J. Y., Park, T., Isola, P., Efros, A. A.: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. pp. 2242-2251. ICCV'17 (2017)
3. Ignatov, A., Kobyshev, N., Vanhoey, K., Timofte, R., Van Gool, L.: DSLR-quality photos on mobile devices with deep convolutional networks. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. pp. 3277-3285. ICCV'17 (2017)
4. Wang, S., Cho, W., Jang, J., Abidi, M. A., Paik, J.: Contrast-dependent saturation adjustment for outdoor image enhancement. JOSA A. pp. 2532-2542. (2017)
5. Wang, S., Zheng, J., Hu, H. M., Li, B.: Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Transactions on Image Processing. pp. 3538-3548. TIP'13 (2013)
6. Aubry, M., Paris, S., Hasinoff, S. W., Kautz, J., Durand, F.: Fast local laplacian filters: Theory and applications. ACM Transactions on Graphics. Article 167. TOG'14 (2014)

Feel free to contact me if you have any questions (Yu-Sheng Chen, nothinglo@cmlab.csie.ntu.edu.tw).