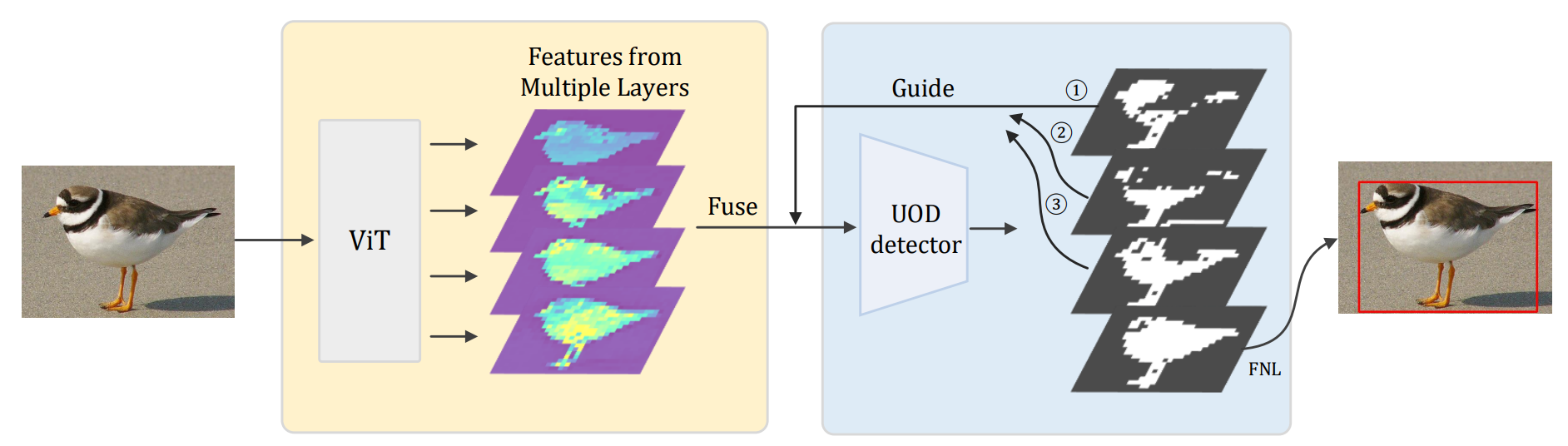

Foreground Guidance and Multi-Layer Feature Fusion for Unsupervised Object Discovery with Transformers

This is the official implementation of "Foreground Guidance and Multi-Layer Feature Fusion for Unsupervised Object Discovery with Transformers" (WACV 2023).

Step 1. Please install PyTorch.

Step 2. To install other dependencies, please launch the following command:

pip install -r requirements.txt
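After both steps, a quick check that the packages are importable can save a failed run later. The snippet below is a minimal sketch, not part of this repository: the helper name `missing_packages` is hypothetical, and the package list (`torch`, `torchvision`) is an assumption; adjust it to match `requirements.txt`.

```python
import importlib.util


def missing_packages(names):
    """Return the names from `names` that cannot be found in the current environment."""
    # find_spec returns None for a top-level module that is not installed.
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed package list; edit to match requirements.txt.
    missing = missing_packages(["torch", "torchvision"])
    if missing:
        print("Not installed:", ", ".join(missing))
    else:
        print("All checked packages are installed.")
```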

Please download the PASCAL VOC07 and PASCAL VOC12 datasets (link) and put the data in the folder datasets.

Please download the COCO dataset and put the data in datasets/COCO. Following previous work, we use COCO20k (a subset of COCO train2014).

The structure of the datasets folder will be like:

├── datasets

│ ├── VOCdevkit

│ │ ├── VOC2007

│ │ │ ├──ImageSets & Annotations & ...

│ │ ├── VOC2012

│ │ │ ├──ImageSets & Annotations & ...

│ ├── COCO

│ │ ├── annotations

│ │ ├── images

│ │ │ ├──train2014 & ...
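Before launching the experiments, it can help to confirm that the folders above are in place. The following is a minimal sketch, assuming the layout shown in the tree and that it is run from the repository root; the helper name `missing_dataset_dirs` and the list `EXPECTED_DIRS` are hypothetical and not part of this repository.

```python
from pathlib import Path

# Relative paths taken from the directory tree above.
EXPECTED_DIRS = [
    "VOCdevkit/VOC2007/ImageSets",
    "VOCdevkit/VOC2007/Annotations",
    "VOCdevkit/VOC2012/ImageSets",
    "VOCdevkit/VOC2012/Annotations",
    "COCO/annotations",
    "COCO/images/train2014",
]


def missing_dataset_dirs(root="datasets"):
    """Return the expected sub-directories that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in EXPECTED_DIRS if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_dataset_dirs()
    if missing:
        print("Missing directories:")
        for rel in missing:
            print("  datasets/" + rel)
    else:
        print("Dataset layout matches the expected tree.")
```

The check only looks at directory names; it does not verify that the image or annotation files inside them are complete.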

Follow the steps below to reproduce the results presented in the paper.

# for voc

python main_formula_LOST.py --dataset VOC07 --set trainval

python main_formula_LOST.py --dataset VOC12 --set trainval

# for coco

python main_formula_LOST.py --dataset COCO20k --set train

# for voc

python main_formula_TokenCut.py --dataset VOC07 --set trainval --arch vit_base

python main_formula_TokenCut.py --dataset VOC12 --set trainval --arch vit_base

# for coco

python main_formula_TokenCut.py --dataset COCO20k --set train --arch vit_base

The results of this repository:

| Method | Arch | VOC07 | VOC12 | COCO20k |
|---|---|---|---|---|
| FORMULA-L | ViT-S/16 | 64.28 | 67.65 | 54.04 |
| FORMULA-TC | ViT-B/16 | 69.13 | 73.08 | 59.57 |
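The table does not name the metric. Assuming the numbers are CorLoc percentages, the measure that LOST and TokenCut report for single-object discovery, the sketch below shows how such a score could be computed: an image counts as correct when its predicted box overlaps at least one ground-truth box with IoU of 0.5 or more. The function names, the `(x_min, y_min, x_max, y_max)` box format, and the inclusive threshold are assumptions of this sketch, not the evaluation code of this repository.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def corloc(predictions, ground_truths, threshold=0.5):
    """Percentage of images whose single predicted box matches any ground-truth box.

    predictions: one box per image.
    ground_truths: one list of boxes per image, in the same order.
    """
    hits = sum(
        any(box_iou(pred, gt) >= threshold for gt in gts)
        for pred, gts in zip(predictions, ground_truths)
    )
    return 100.0 * hits / len(predictions)


if __name__ == "__main__":
    preds = [(0, 0, 10, 10), (0, 0, 10, 10)]
    gts = [[(0, 0, 10, 10)], [(20, 20, 30, 30)]]
    print(corloc(preds, gts))  # one of the two images is localized correctly
```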

Please follow LOST to conduct the CAD and OD experiments.

This project is free for academic research purposes only; commercial use requires authorization. For commercial permission, please contact wyt@pku.edu.cn.

If you use our code/model/data, please cite our paper:

@InProceedings{Zhiwei_2023_WACV,

author = {Zhiwei Lin and Zengyu Yang and Yongtao Wang},

title = {Foreground Guidance and Multi-Layer Feature Fusion for Unsupervised Object Discovery with Transformers},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

year = {2023}

}

FORMULA is built on top of LOST, DINO and TokenCut. We sincerely thank the authors for their great work and code.