Authors: Yuyang Yin, Dejia Xu, Zhangyang Wang, Yao Zhao, Yunchao Wei

[Project Page] | [Video (narrated)] | [Video (results only)] | [Paper] | [Arxiv]

- 2024/5/26: Released our new work, Diffusion4D: Fast Spatial-temporal Consistent 4D Generation via Video Diffusion Models. See the project page for more details.
- 2024/3/17: Added a complete example script.
- 2024/2/14: Updated the text-to-4D and image-to-4D functions and cases.
- 2023/12/28: First release of the code and paper.

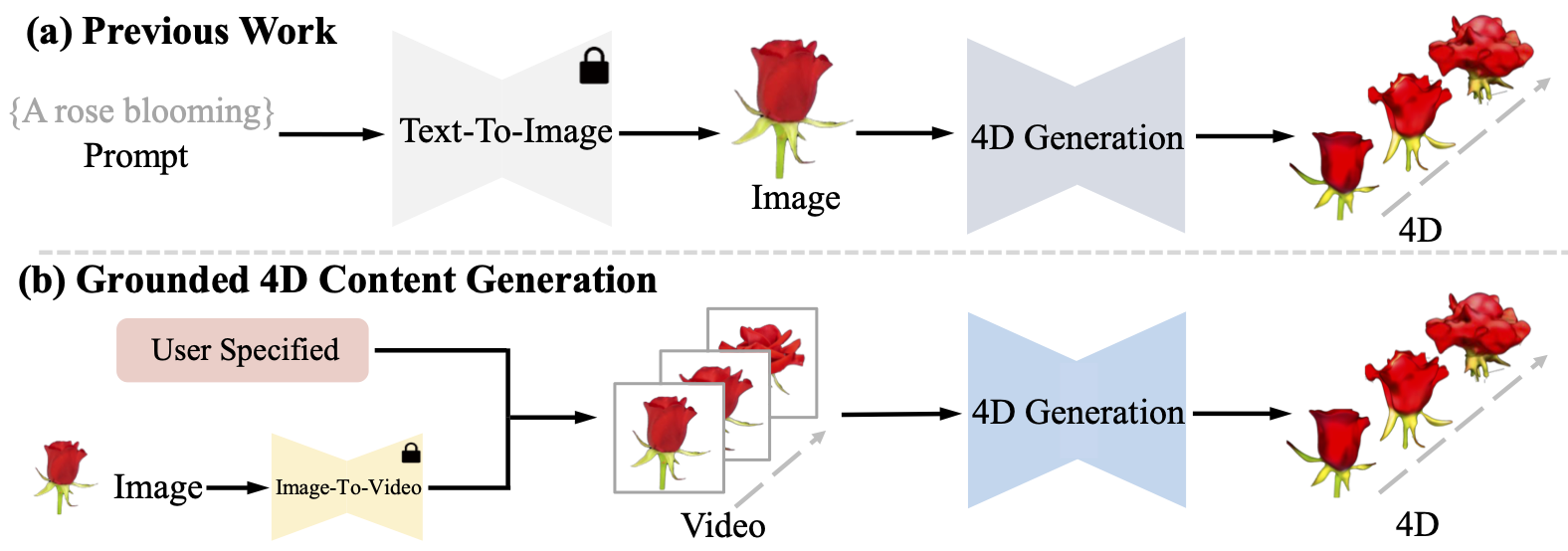

As shown in the figure above, we define grounded 4D generation, which focuses on video-to-4D generation. The input video does not have to be user-specified; it can also be generated by a video diffusion model. With the help of Stable Video Diffusion, we implement image-to-video-to-4D and text-to-image-to-video-to-4D. Because current text-to-video models perform unsatisfactorily, we use Stable Diffusion XL together with Stable Video Diffusion to implement text-to-image-to-video-to-4D. Our model therefore supports both text-to-4D and image-to-4D tasks.
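The chaining described above can be pictured as a sequence of modality conversions that always ends in the video-to-4D stage. The following is a minimal, hypothetical sketch of that planning logic; the `CONVERTERS` table, the `plan_stages` function, and the stage labels are illustrative and are not part of the 4DGen code base. Only the model names come from the text above.

```python
from __future__ import annotations

# Hypothetical table: each input modality maps to (next modality, model used).
# The model names follow the description above; the structure is illustrative.
CONVERTERS = {
    "text": ("image", "Stable Diffusion XL"),
    "image": ("video", "Stable Video Diffusion"),
    "video": ("4d", "4DGen (video-to-4D)"),
}


def plan_stages(kind: str) -> list[tuple[str, str, str]]:
    """Return the ordered (source, target, model) steps from `kind` to 4D."""
    steps = []
    while kind != "4d":
        if kind not in CONVERTERS:
            raise ValueError(f"unsupported input modality: {kind!r}")
        target, model = CONVERTERS[kind]
        steps.append((kind, target, model))
        kind = target
    return steps


for src, dst, model in plan_stages("text"):
    print(f"{src} -> {dst}: {model}")
```

Text input passes through all three stages, image input skips the first one, and a user-specified video goes straight to the video-to-4D stage.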

conda env create -f environment.yml

conda activate 4DGen

pip install -r requirements.txt

# 3D Gaussian Splatting modules, skip if you already installed them

# a modified gaussian splatting (+ depth, alpha rendering)

git clone --recursive https://github.com/ashawkey/diff-gaussian-rasterization

pip install ./diff-gaussian-rasterization

pip install ./simple-knn

# install kaolin for chamfer distance (optional)

# https://kaolin.readthedocs.io/en/latest/notes/installation.html

# CHANGE the torch and CUDA toolkit version if yours are different

# pip install kaolin -f https://nvidia-kaolin.s3.us-east-2.amazonaws.com/torch-1.12.1_cu116.html

We have organized a complete pipeline script in main.bash for your reference. You will need to modify the paths in it to match your setup.
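After installation, a quick sanity check is to confirm that Python can locate the compiled modules. This is a minimal sketch, assuming the top-level import names `diff_gaussian_rasterization` and `simple_knn` for the two rasterization packages installed above (the names are an assumption, not taken from this README); `check_modules` and `report` are hypothetical helpers.

```python
import importlib.util

# Assumed top-level import names for the packages installed above.
# kaolin is optional and only needed for the chamfer distance.
REQUIRED = ["torch", "diff_gaussian_rasterization", "simple_knn"]
OPTIONAL = ["kaolin"]


def check_modules(names):
    """Map each top-level module name to True if Python can locate it, without importing it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def report():
    """Print one status line per module and return the missing required ones."""
    status = {**check_modules(REQUIRED), **check_modules(OPTIONAL)}
    for name, found in status.items():
        tag = "ok" if found else ("MISSING" if name in REQUIRED else "missing (optional)")
        print(f"{name:<30} {tag}")
    return [name for name in REQUIRED if not status[name]]


missing = report()
```

An empty `missing` list means every required package was found; a locatable module can still fail at import time if it was built against a different CUDA or torch version.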

We release our collected data on Google Drive. Some of these data are user-specified, while others are generated.

Each test case contains two folders: {name}_pose0 and {name}_sync. The pose0 folder holds the monocular video sequence, and the sync folder holds the pseudo labels generated by SyncDreamer.
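If you organize your own data the same way, a small helper can check that each case has both folders before training. This is a hypothetical sketch (`list_cases`, `case_folders`, and the root directory you pass are illustrative, not part of the repository); it only relies on the `{name}_pose0` / `{name}_sync` naming stated above.

```python
from pathlib import Path


def list_cases(root):
    """Return names of test cases that have both {name}_pose0 and {name}_sync folders."""
    root = Path(root)
    names = []
    for pose0 in sorted(root.glob("*_pose0")):
        name = pose0.name[: -len("_pose0")]
        if pose0.is_dir() and (root / f"{name}_sync").is_dir():
            names.append(name)
    return names


def case_folders(root, name):
    """Return (pose0_dir, sync_dir) for one case, raising if either folder is missing."""
    root = Path(root)
    pose0, sync = root / f"{name}_pose0", root / f"{name}_sync"
    missing = [p.name for p in (pose0, sync) if not p.is_dir()]
    if missing:
        raise FileNotFoundError(f"missing under {root}: {', '.join(missing)}")
    return pose0, sync
```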

We recommend using Practical-RIFE if you need to interpolate additional frames into your video sequence.

Text-To-4D data preparation

Use Stable Diffusion XL to generate your own images, then use the image-to-video script below.

Image-To-4D data preparation

python image_to_video.py --data_path {your image.png} --name {file name} # You may need to try multiple seeds to obtain the desired results.

Preprocess data format for training

To preprocess your own images into RGBA format, you can use preprocess.py.
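RGBA here means the RGB frame plus an alpha channel that marks the foreground. The sketch below only shows that final packing step with NumPy, assuming you already have a per-pixel foreground mask in [0, 1] (for example from a background-removal tool); how preprocess.py actually obtains its mask is not described here, and `to_rgba` is a hypothetical helper.

```python
import numpy as np


def to_rgba(rgb, mask):
    """Pack an (H, W, 3) uint8 image and an (H, W) mask in [0, 1] into an (H, W, 4) RGBA array."""
    rgb = np.asarray(rgb, dtype=np.uint8)
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("rgb must have shape (H, W, 3)")
    mask = np.asarray(mask, dtype=np.float64)
    if mask.shape != rgb.shape[:2]:
        raise ValueError("mask must match the image height and width")
    # Soft alpha: scale the clipped mask to 0..255 and round to the nearest integer.
    alpha = np.rint(np.clip(mask, 0.0, 1.0) * 255.0).astype(np.uint8)
    return np.concatenate([rgb, alpha[..., None]], axis=2)
```

With Pillow, `Image.fromarray(rgba).save("frame.png")` writes the result as an RGBA PNG, since an (H, W, 4) uint8 array is interpreted as RGBA.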

To turn your own images into multi-view images, you can use the SyncDreamer script, then run preprocess_sync.py to convert its output into a uniform format.
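Assuming SyncDreamer's output stores the generated views tiled side by side in one image (equal-sized views concatenated along the width, 16 views by default in this sketch; check this against your own output), splitting it back into individual views looks roughly like the following. `split_views` is a hypothetical helper for illustration only; use preprocess_sync.py for the format this repository actually expects.

```python
import numpy as np


def split_views(grid, num_views=16):
    """Split an (H, num_views * W, C) image into num_views arrays of shape (H, W, C).

    Assumes the views are equal-sized and concatenated along the width axis.
    """
    grid = np.asarray(grid)
    width = grid.shape[1]
    if width % num_views != 0:
        raise ValueError(f"width {width} is not divisible by {num_views} views")
    return np.split(grid, num_views, axis=1)
```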

# for monocular image sequence

python preprocess.py --path xxx

# for images generated by SyncDreamer

python preprocess_sync.py --path xxx

# train

python train.py --configs arguments/i2v.py -e rose --name_override rose

# render

python render.py --skip_train --configs arguments/i2v.py --skip_test --model_path "./output/xxxx/"

Please see main.bash for a complete example.
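If you prefer to drive these steps from Python rather than editing main.bash, the commands above can be assembled as argument lists. This is a hypothetical sketch: `pipeline_commands`, `run_pipeline`, and their parameters are illustrative, only the flags shown above are used, and the paths you pass are placeholders for your own data. main.bash remains the reference pipeline.

```python
import shlex
import subprocess


def pipeline_commands(image_dir, sync_dir, config, exp_name, model_path, name_override=None):
    """Return the preprocess, train, and render commands as argument lists."""
    # The example above passes the same name to -e and --name_override.
    name_override = exp_name if name_override is None else name_override
    return [
        ["python", "preprocess.py", "--path", image_dir],
        ["python", "preprocess_sync.py", "--path", sync_dir],
        ["python", "train.py", "--configs", config, "-e", exp_name,
         "--name_override", name_override],
        ["python", "render.py", "--skip_train", "--configs", config,
         "--skip_test", "--model_path", model_path],
    ]


def run_pipeline(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False, stopping on the first failure."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Calling `run_pipeline(pipeline_commands(...))` with the default `dry_run=True` only prints the commands, which is a cheap way to check the paths before a long training run.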

We show some of our results on our project page.

Image-to-4D results:

| frontview_mario | multiview_mario |
|---|---|

Text-to-4D results:

We first use Stable Diffusion XL to generate a static image with the prompt 'an emoji of a baby panda, 3d model, front view'.

| frontview_panda | multiview-panda |
|---|---|

This work builds on many amazing research works and open-source projects; many thanks to all the authors for sharing!

- https://github.com/dreamgaussian/dreamgaussian

- https://github.com/hustvl/4DGaussians

- https://github.com/graphdeco-inria/gaussian-splatting

- https://github.com/graphdeco-inria/diff-gaussian-rasterization

- https://github.com/threestudio-project/threestudio

If you find this repository/work helpful in your research, please consider citing the paper and starring the repo ⭐.

@article{yin20234dgen,
  title={4DGen: Grounded 4D Content Generation with Spatial-temporal Consistency},
  author={Yin, Yuyang and Xu, Dejia and Wang, Zhangyang and Zhao, Yao and Wei, Yunchao},
  journal={arXiv preprint arXiv:2312.17225},
  year={2023}
}