Traffic behavior in roadway construction scenes has a significant impact on safety. This project aims to understand a roadway construction scene in terms of the locations of roadway objects and visual cues. The work presents two algorithms:

- Classification of a roadway image as work zone or non-work zone.

- Segmentation of objects in a work zone.

This work was presented at TRB 2022: Sundharam, V., Sarkar, A., Hickman, J., Abbott, A. "Characterization, Detection, and Segmentation of Work Zone Scenes from Naturalistic Driving Data", Transportation Research Record (2021, under review), accepted at the TRB Annual Meeting 2021.

- Step 1: Pull docker image

docker pull vtti/workzone-detection

- Step 2: Clone the repository to local machine

git clone https://github.com/VTTI/Segmentation-and-detection-of-work-zone-scenes.git

- Step 3: cd to local repository

cd [repo-name]

- Step 4: Run container from pulled image and mount data volumes

docker run -it --rm -p 9999:8888 -v $(pwd):/opt/app vtti/workzone-detection

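You may get the error

failed: port is already allocated

If so, expose a different port number in the -p option of the docker run command.

If the container runs on a remote server, forward the port to your local machine:

ssh -N -f -L 9999:localhost:9999 host@server.xyz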
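When the port is already allocated, it can help to find a free host port before starting the container. The following is a minimal sketch using only the Python standard library; the helper names `port_is_free` and `first_free_port` are hypothetical and not part of this repository.

```python
import socket


def port_is_free(port: int, host: str = "") -> bool:
    """Return True if a TCP socket can be bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))  # "" binds on all interfaces
        except OSError:
            return False  # typically "address already in use"
        return True


def first_free_port(start: int = 9999, attempts: int = 20) -> int:
    """Return the first bindable port in [start, start + attempts)."""
    for port in range(start, min(start + attempts, 65536)):
        if port_is_free(port):
            return port
    raise RuntimeError(f"no free port found in {attempts} attempts from {start}")


if __name__ == "__main__":
    port = first_free_port()
    # 8888 is the container-side port from the docker run command above.
    print(f"docker run -it --rm -p {port}:8888 -v $(pwd):/opt/app vtti/workzone-detection")
```

The check is only a snapshot: another process could take the port between the check and the `docker run` call, so treat the result as a suggestion.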

We introduce a new dataset called VTTI-WZDB2020, which is intended to aid in the development of automated systems that can detect work zones. The dataset consists of work zone and non-work zone scenes in various naturalistic driving conditions.

Organize the data as follows:

    ./
    |__ data
        |__ Delivery_1_Workzone
        |__ Delivery_2_new
        |__ test (provided)
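Before training, it may be useful to confirm that the folders are in place. The sketch below assumes that all three folders sit directly under `./data`; the function name `missing_data_dirs` is hypothetical and not part of this repository.

```python
from pathlib import Path
from typing import List

# Folder names taken from the layout listed above; placing all of them
# directly under ./data is an assumption of this sketch.
EXPECTED_SUBDIRS = ("Delivery_1_Workzone", "Delivery_2_new", "test")


def missing_data_dirs(root: str = ".") -> List[str]:
    """Return the names of expected data folders that do not exist under root/data."""
    data_dir = Path(root) / "data"
    return [name for name in EXPECTED_SUBDIRS if not (data_dir / name).is_dir()]


if __name__ == "__main__":
    missing = missing_data_dirs(".")
    if missing:
        print("Missing folders under ./data:", ", ".join(missing))
    else:
        print("Data layout looks complete.")
```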

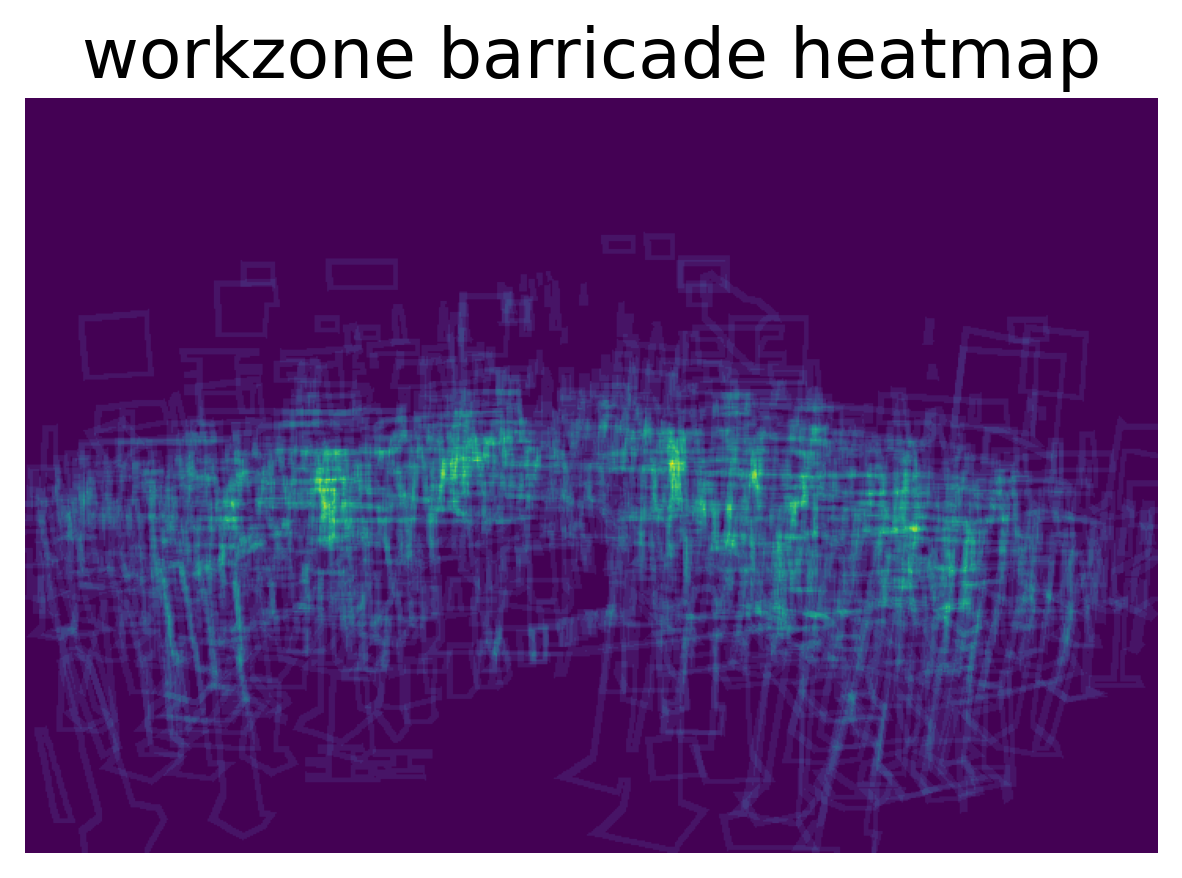

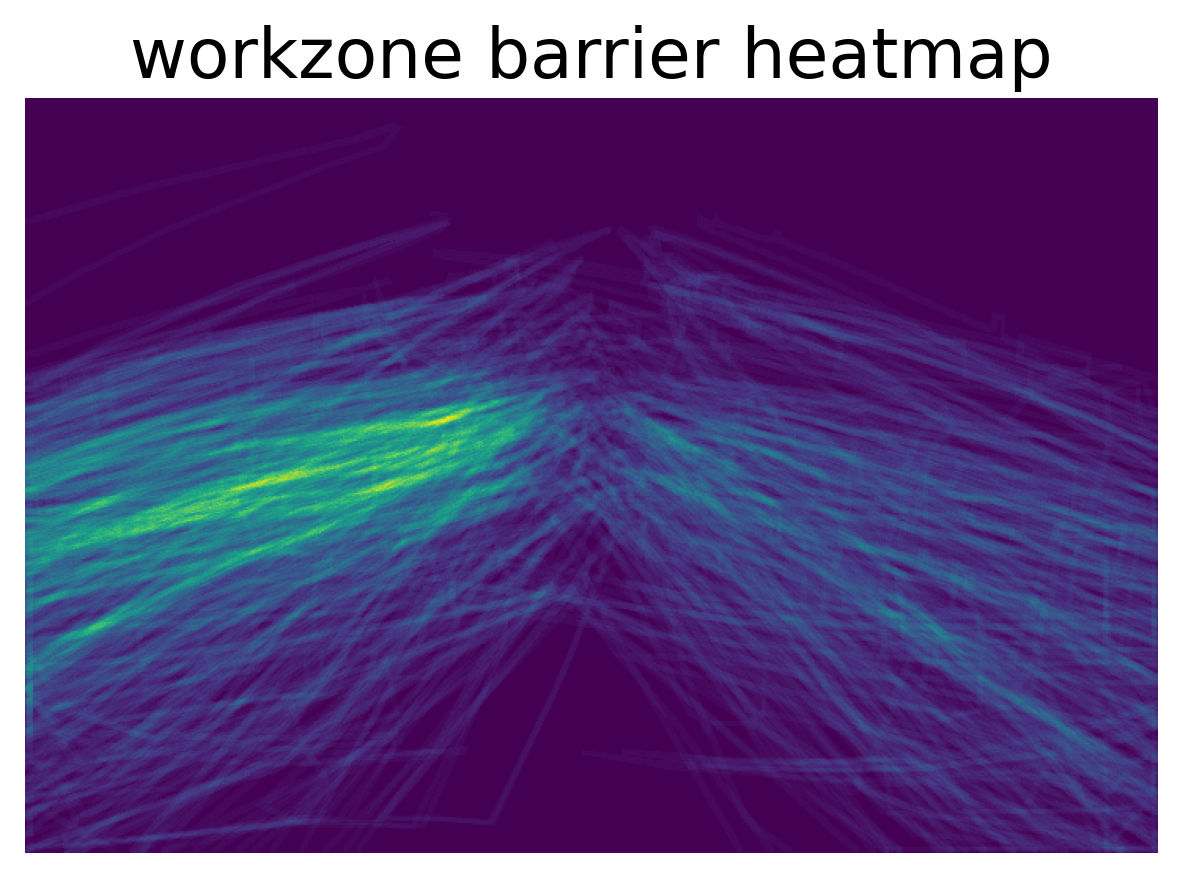

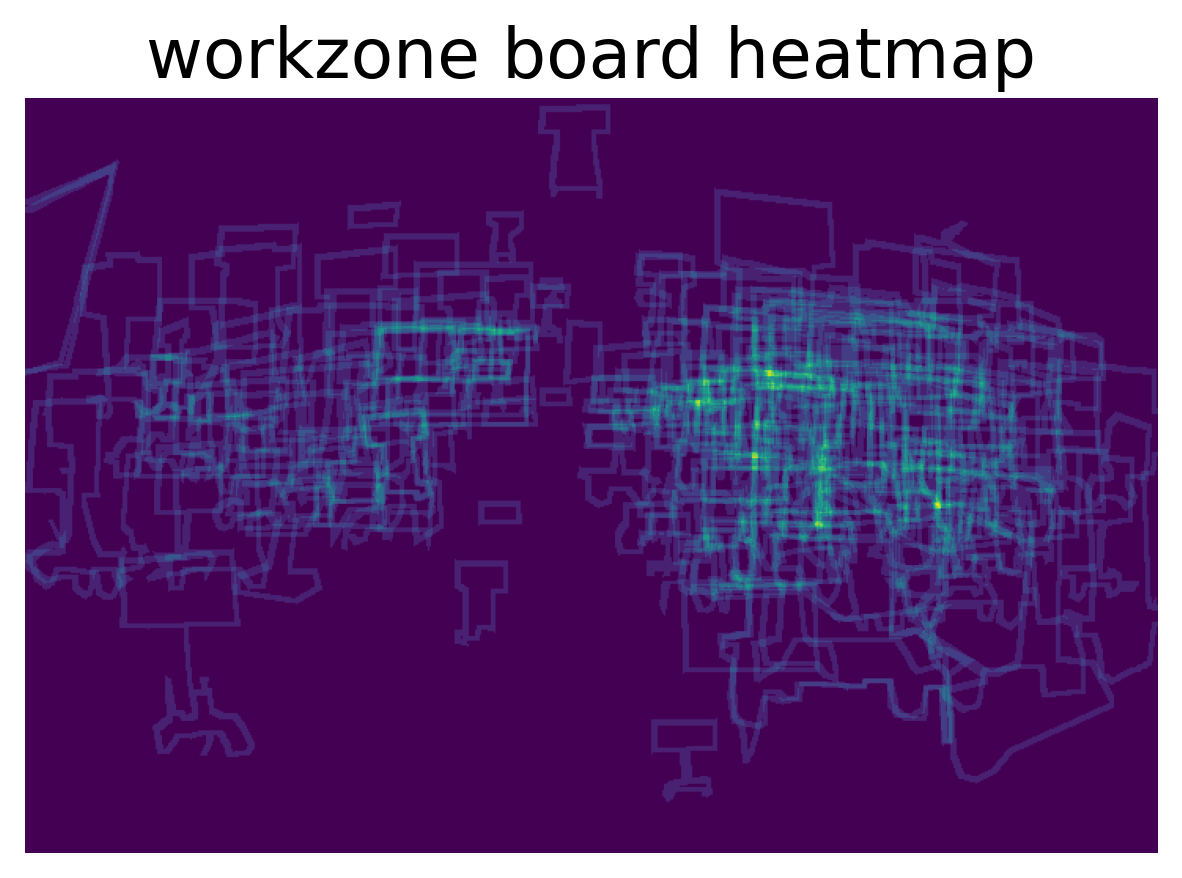

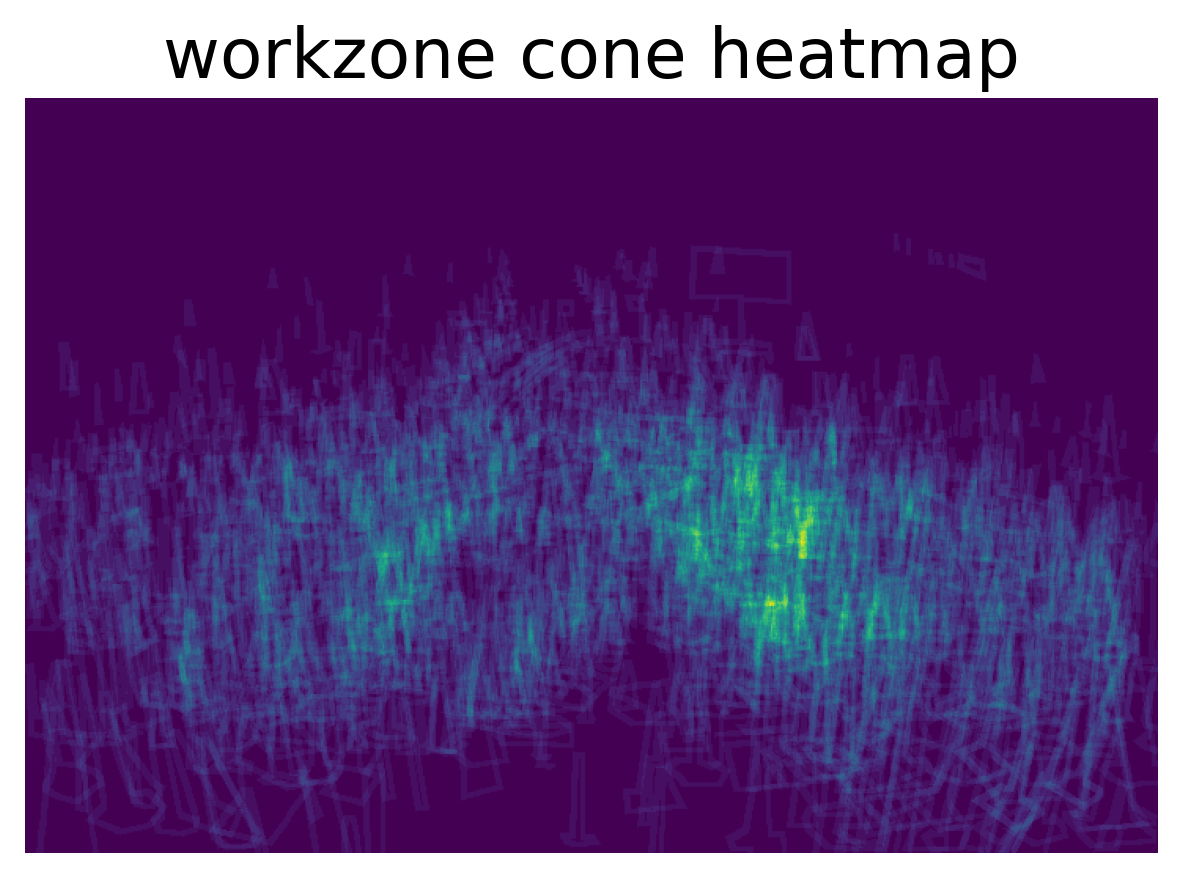

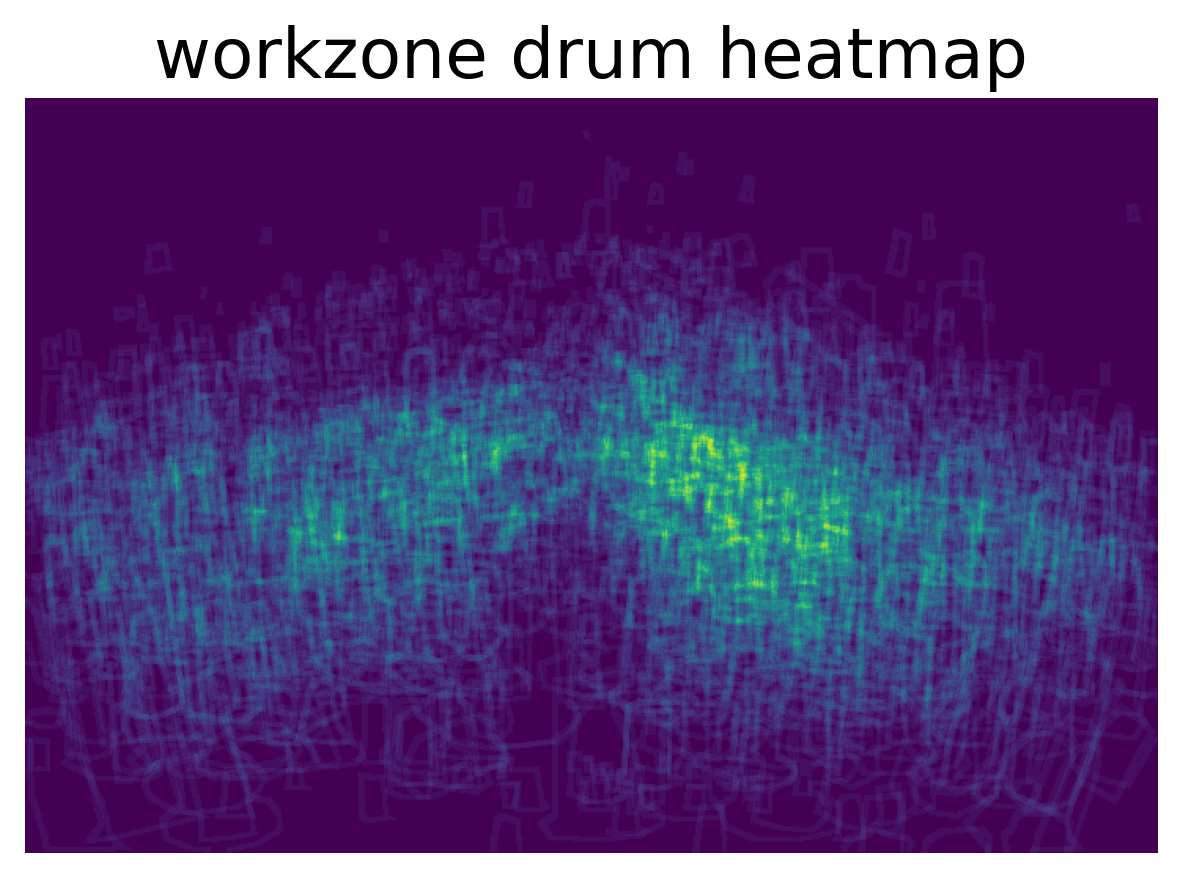

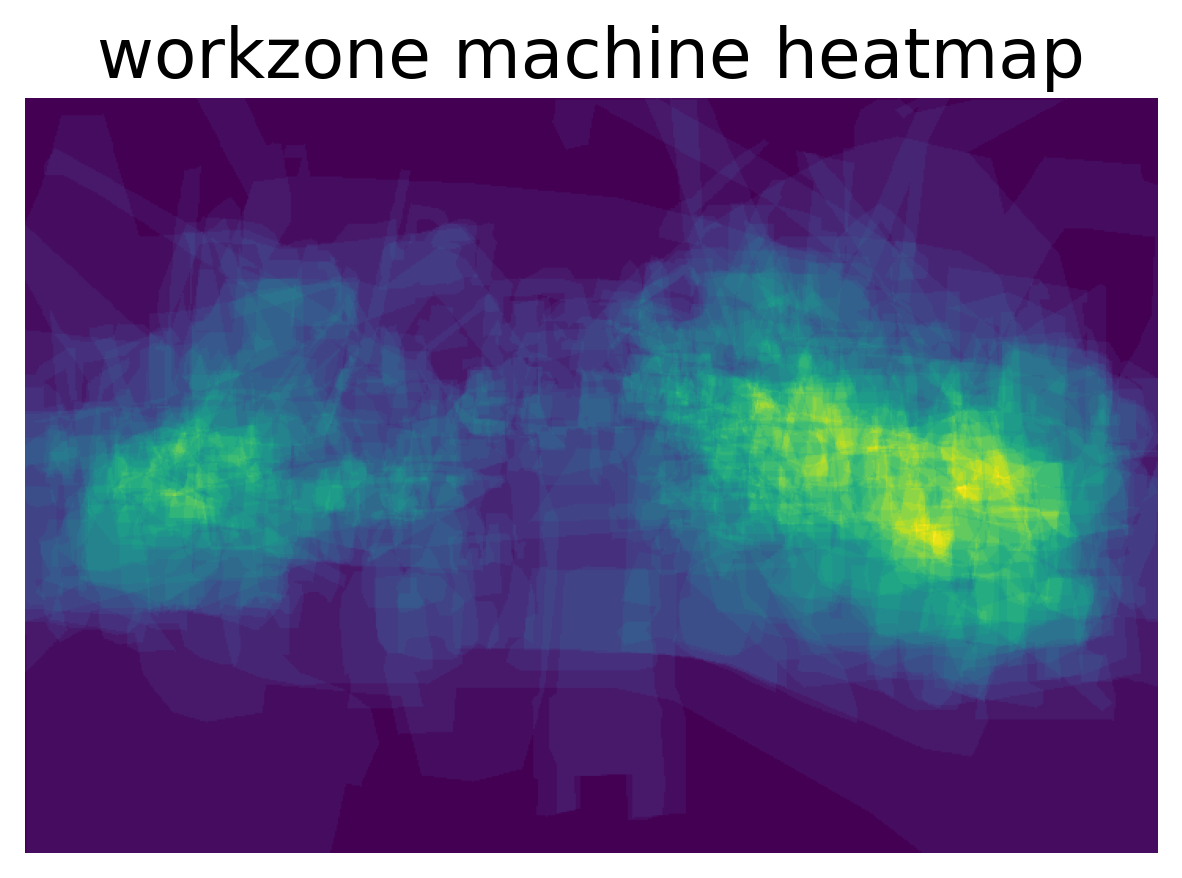

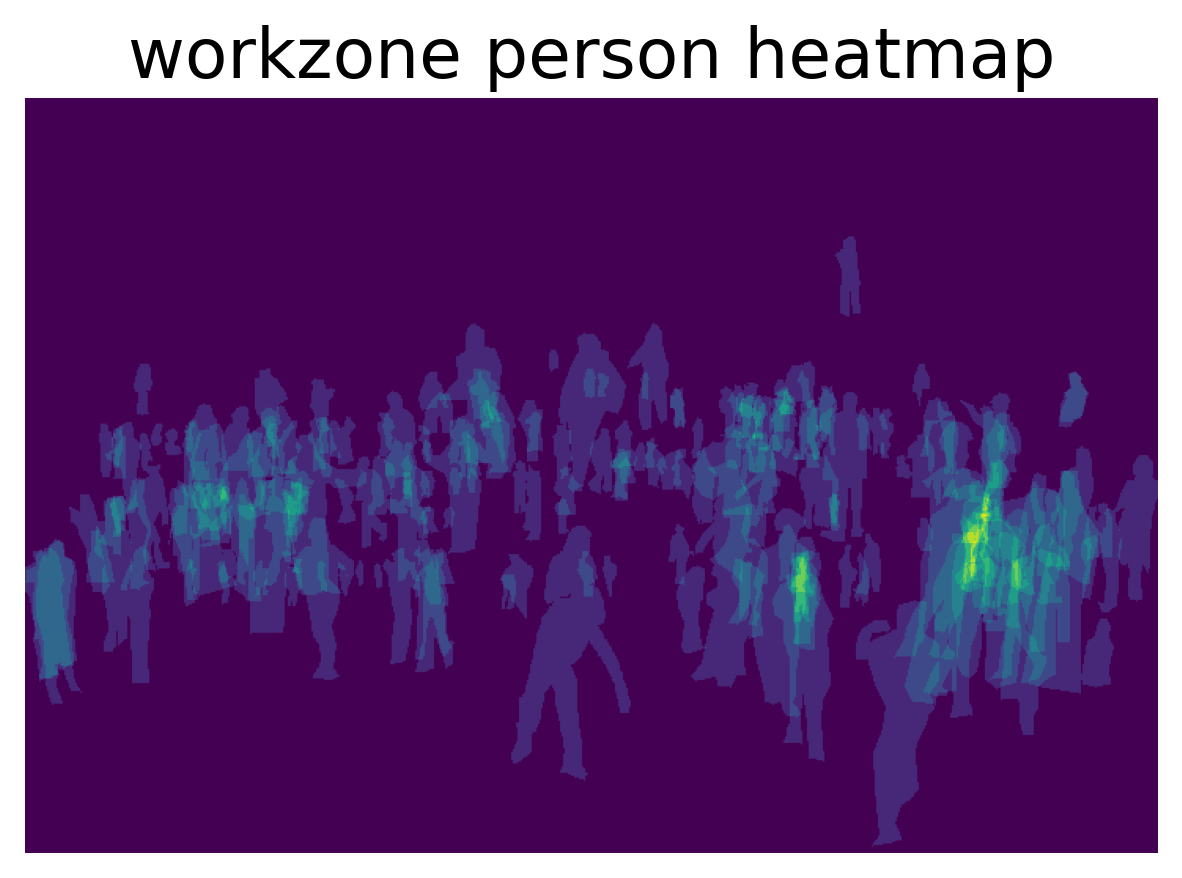

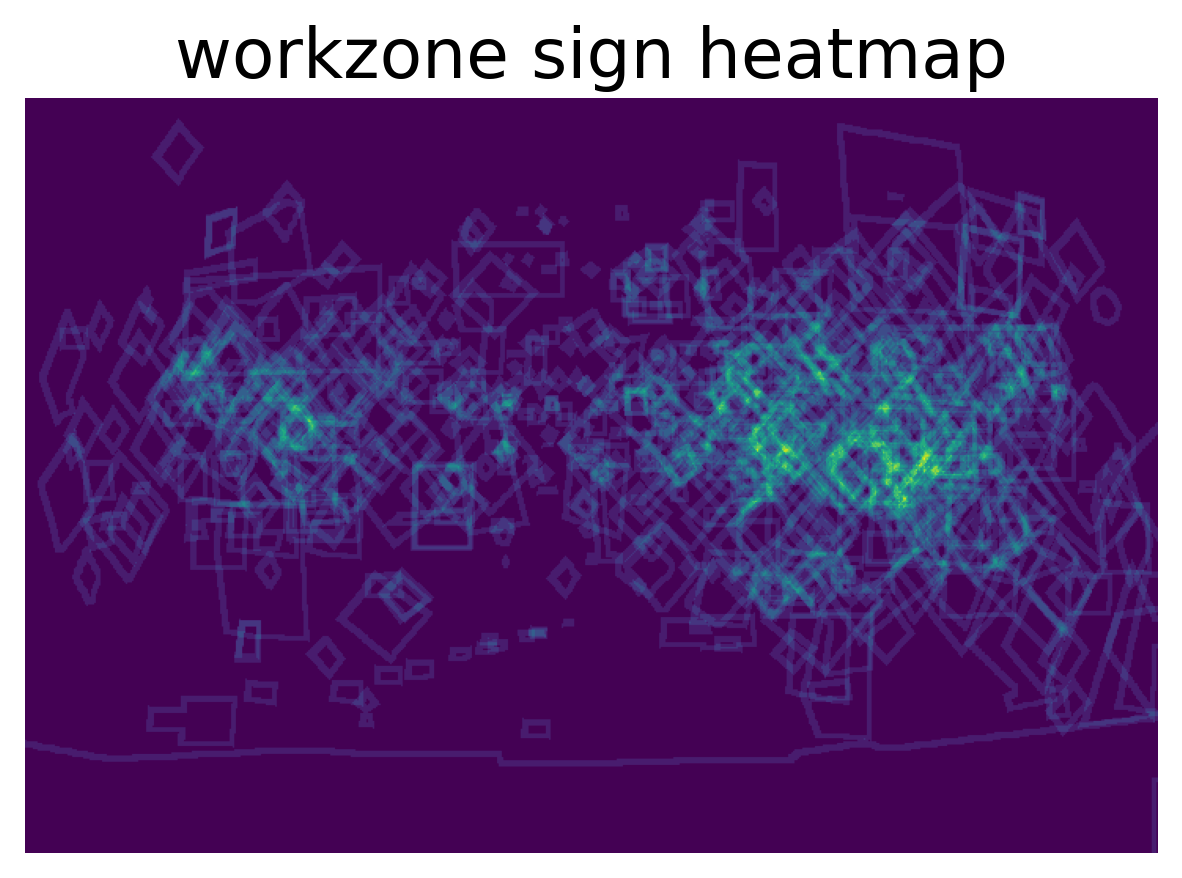

The statistical analysis of the data is presented for convenience in ./ipynb/analysis_workzone.ipynb. Individual results are available under ./output/analysis.


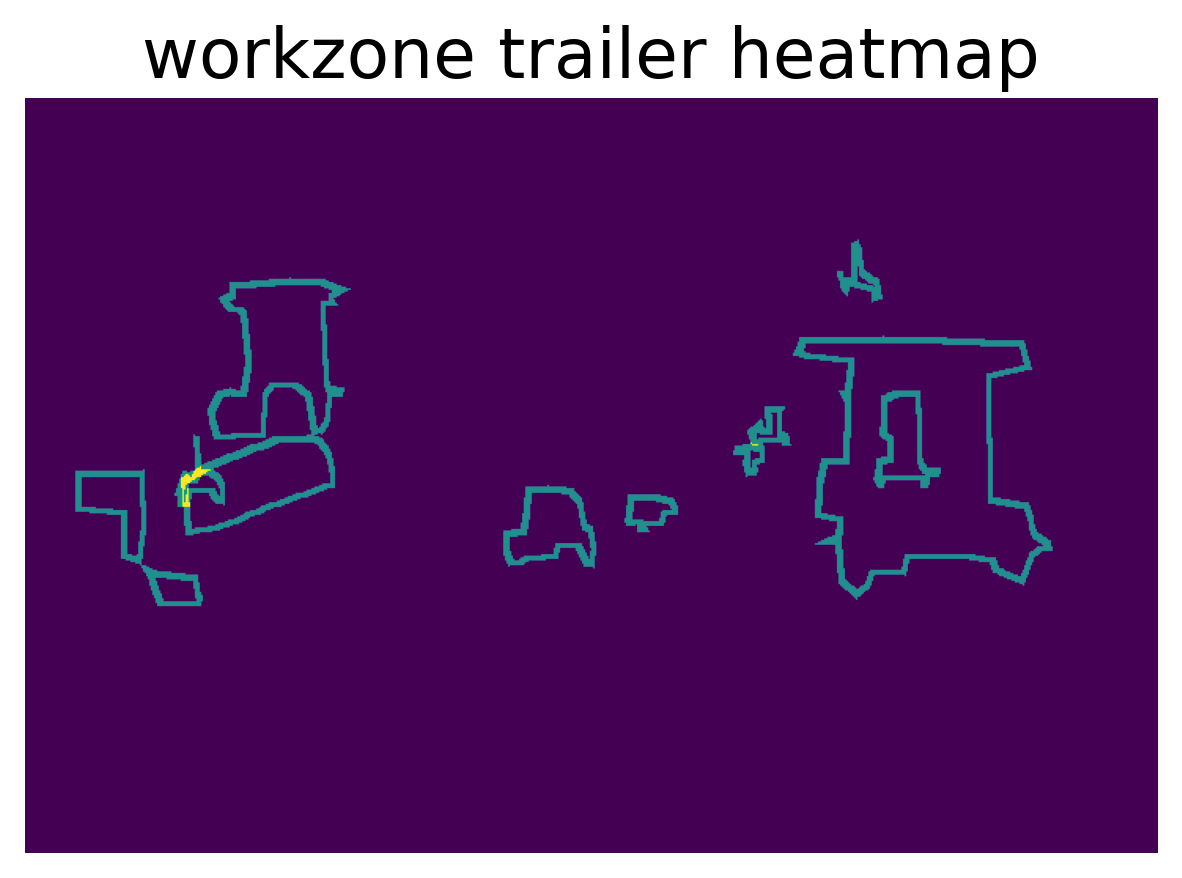

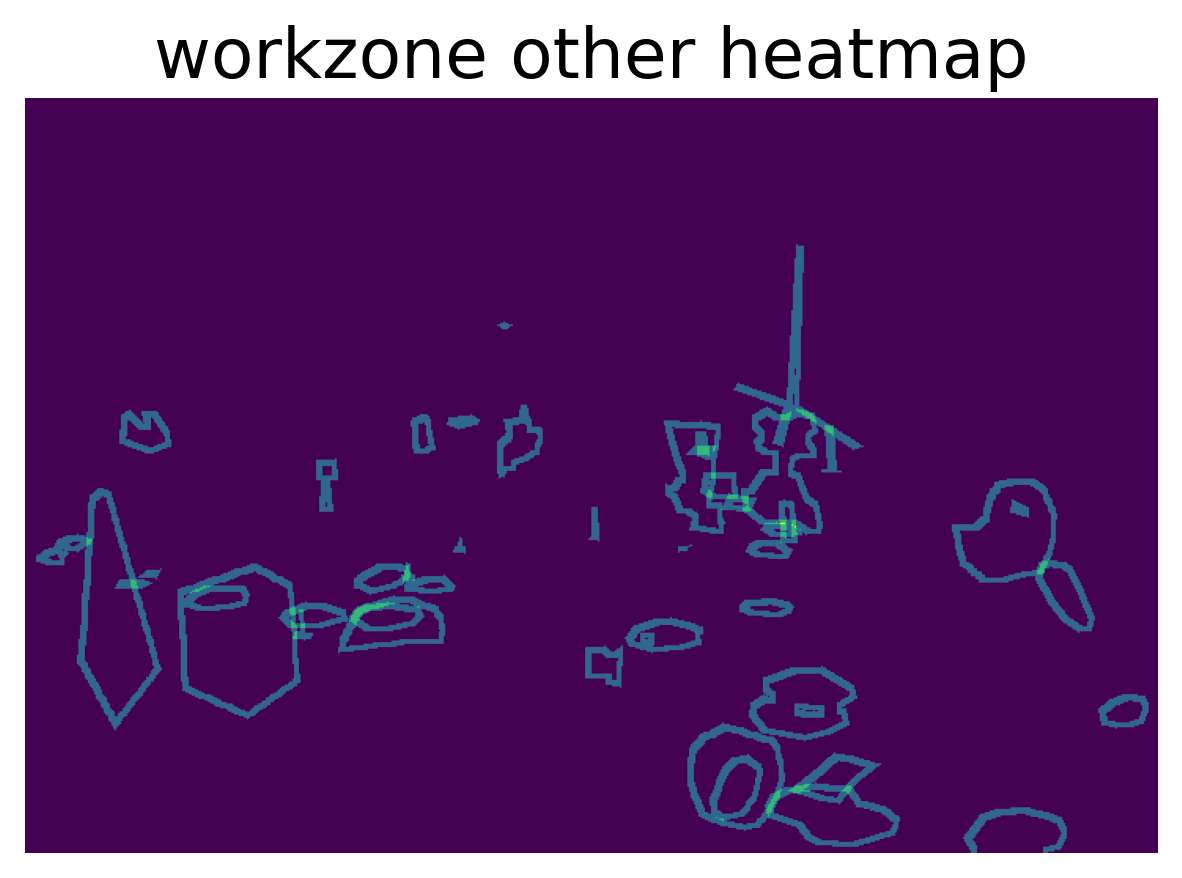

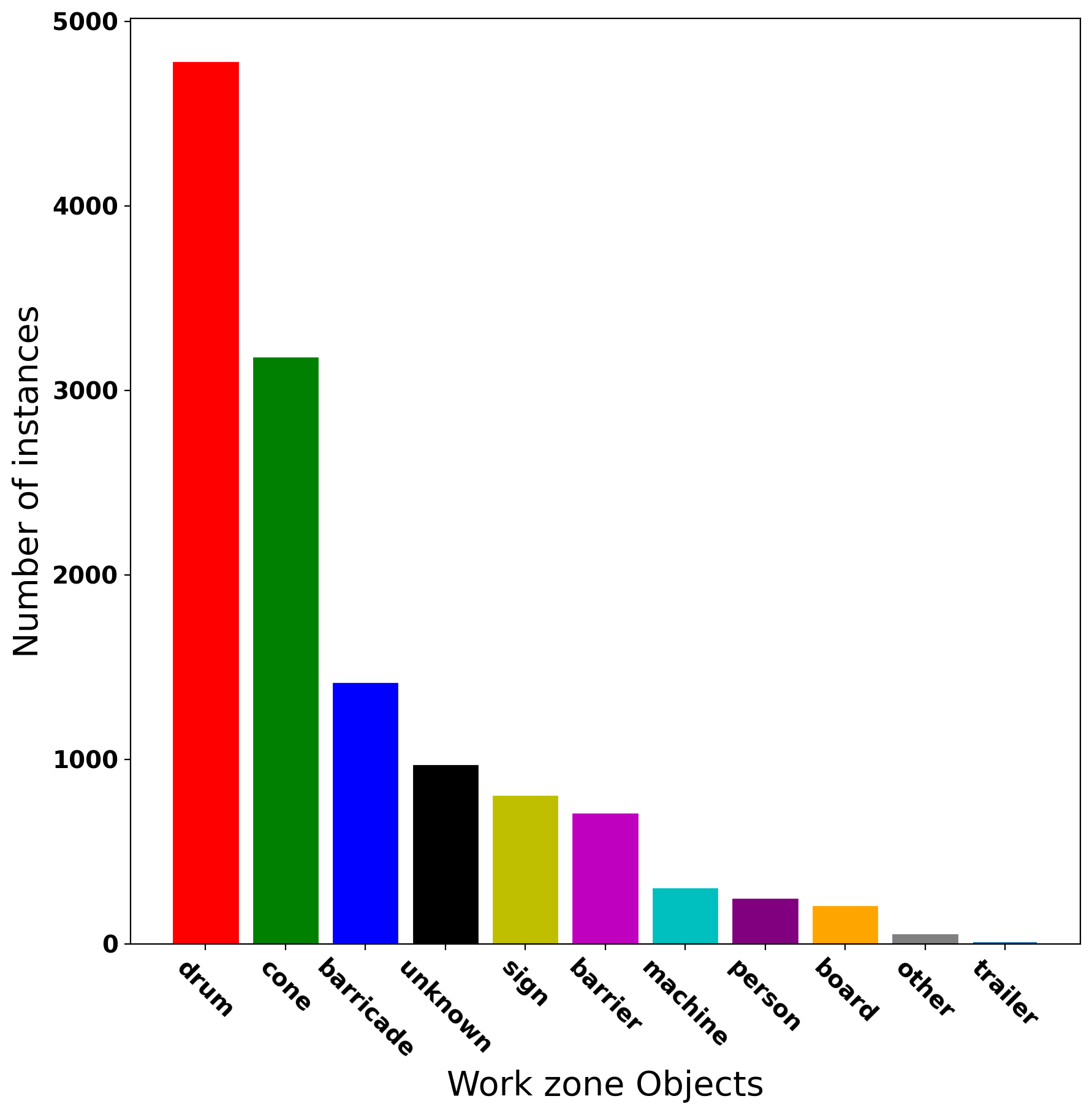

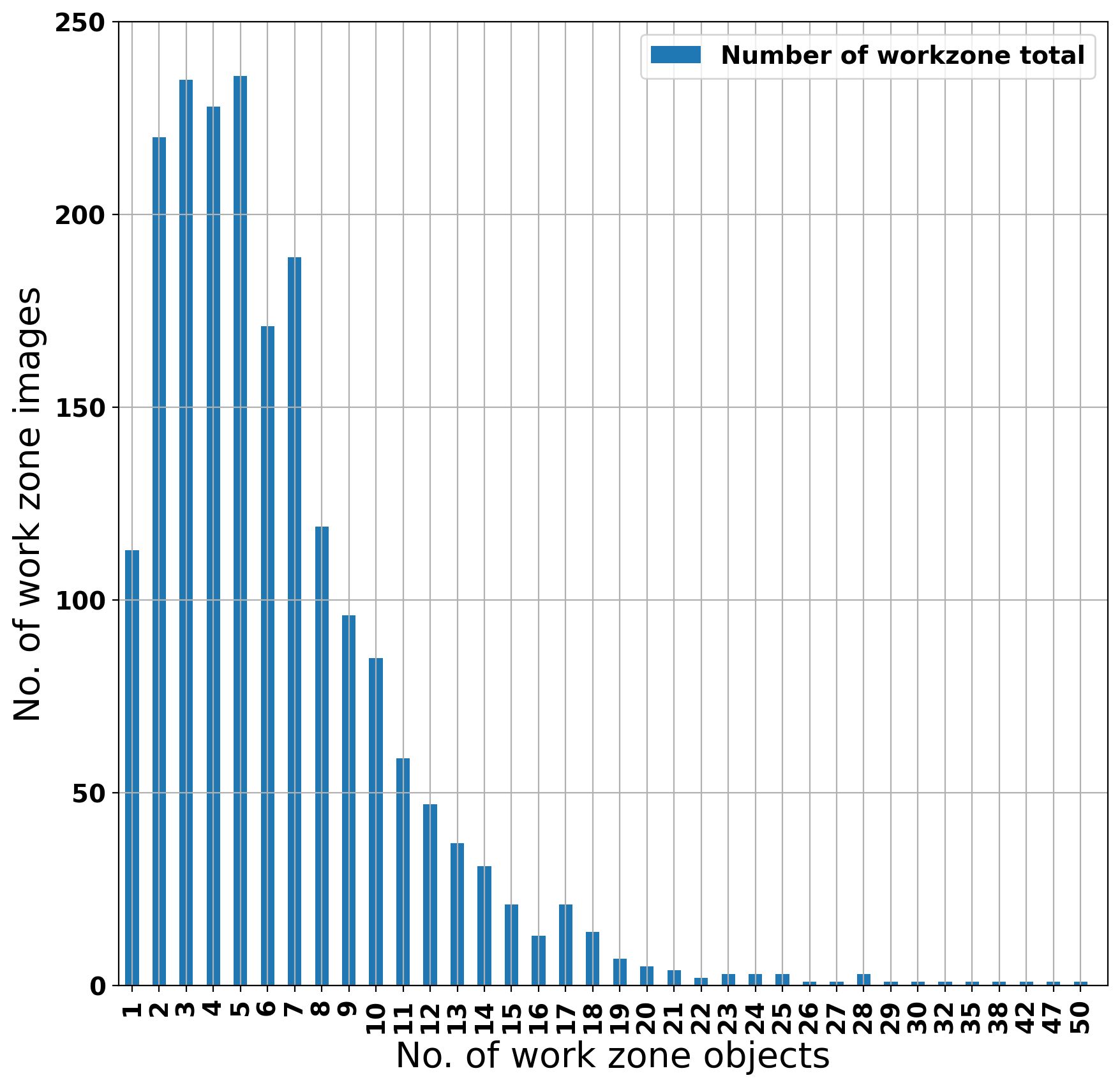

Figure panels: Distribution of Work Zone Objects Per Image, Instance Distribution of Work Zone Objects, and Pixel Distribution.

The first model is based on the Unet architecture [1]. The configuration for the Unet model is in ./configs/config_unet.yaml. Backbones can be chosen from those supported by [1]. The available optimizers are 'ADAM', 'ASGD', 'SGD', 'RMSprop', and 'AdamW'.
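A misspelled optimizer name is easy to catch before a training run starts. The sketch below checks a config dictionary against the optimizer names listed above and returns the matching class name in `torch.optim`. The key name `OPTIMIZER`, the name-to-class mapping, and the function `resolve_optimizer_name` are assumptions of this sketch, not the repository's code.

```python
# Optimizer names listed in this README, mapped to the matching class
# names in torch.optim (the mapping itself is an assumption of this sketch).
OPTIMIZER_CLASSES = {
    "ADAM": "Adam",
    "ASGD": "ASGD",
    "SGD": "SGD",
    "RMSprop": "RMSprop",
    "AdamW": "AdamW",
}


def resolve_optimizer_name(config: dict) -> str:
    """Validate config['OPTIMIZER'] and return the torch.optim class name."""
    name = config.get("OPTIMIZER")
    if name not in OPTIMIZER_CLASSES:
        allowed = ", ".join(sorted(OPTIMIZER_CLASSES))
        raise ValueError(f"OPTIMIZER must be one of: {allowed}; got {name!r}")
    return OPTIMIZER_CLASSES[name]


if __name__ == "__main__":
    # Hypothetical config values for illustration only.
    example_config = {"MODEL": "Unet", "OPTIMIZER": "AdamW", "LR": 0.001}
    print(resolve_optimizer_name(example_config))
```

With PyTorch installed, the returned name could be resolved with `getattr(torch.optim, name)`.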

To train the model, set the required values in the config file. All outputs are stored under ./output.

cd /opt/app

python run_unet.py \
  --config [optional:path to config file] \
  --mode ['train', 'test', 'test_single'] \
  --comment [optional:any comment while training] \
  --weight [optional:custom path to weight] \
  --device [optional:set device number if you have multiple GPUs]

For example, if you change a parameter in the code and retrain the model, passing the --comment parameter creates a new folder that keeps the results of different runs separate.
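The command-line options above can be illustrated with a small `argparse` parser. This is only a sketch of how such flags might be declared; the defaults, help texts, and the function `build_parser` are assumptions and may differ from what run_unet.py actually does.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Declare the flags documented in this README (defaults are assumed)."""
    parser = argparse.ArgumentParser(description="Sketch of the run script options")
    parser.add_argument("--config", default=None, help="optional path to config file")
    parser.add_argument(
        "--mode",
        choices=["train", "test", "test_single"],
        default="train",
        help="what the script should do",
    )
    parser.add_argument("--comment", default="", help="optional tag for this run")
    parser.add_argument("--weight", default=None, help="optional custom path to weights")
    parser.add_argument("--device", type=int, default=0, help="GPU device number")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(args)
```

Restricting `--mode` with `choices` makes the parser reject anything other than the three documented modes.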

Setting --mode to "train" trains the model and also performs testing on the test set. To run the test on single images, run:

cd /opt/app

python run_unet.py --mode "test_single"

New images can be added to the ./data/test directory to perform additional testing on those images. Open-source work zone images are also provided in the ./data/test directory for cross-dataset testing. A custom path to weights can be provided with the --weight parameter (only applicable when testing on custom images under ./data/test). By default, the best model path is used. The training parameters are listed below:

| Training Parameters | Values |
|---|---|
| MODEL | Unet |
| BACKBONE | efficientnet-b0 |
| EPOCHS | 50 |
| LR | 0.001 |
| RESIZE_SHAPE | (480, 480) |
| BATCH_SIZE | 16 |
| OPTIMIZER | AdamW |
| #TRAINING Imgs. | 1643 |
| #VALIDATION Imgs. | 186 |

The best F1 score achieved on testing set of 173 images: 0.5869
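For reference, the F1 score combines precision and recall as F1 = 2TP / (2TP + FP + FN). The sketch below computes it for a pair of flattened binary masks; how the repository aggregates the score across classes and images is not stated here, so this shows only the basic definition, and the function name is hypothetical.

```python
from typing import Sequence


def binary_f1(pred: Sequence[int], target: Sequence[int]) -> float:
    """F1 score for two equally long sequences of 0/1 labels."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)
    denominator = 2 * tp + fp + fn
    # With no positives in either mask the score is undefined; return 0.0 here.
    return 2 * tp / denominator if denominator else 0.0


if __name__ == "__main__":
    # Toy masks for illustration: 2 true positives, 1 false positive, 1 false negative.
    predicted = [1, 1, 0, 0, 1]
    actual = [1, 0, 0, 1, 1]
    print(round(binary_f1(predicted, actual), 4))
```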

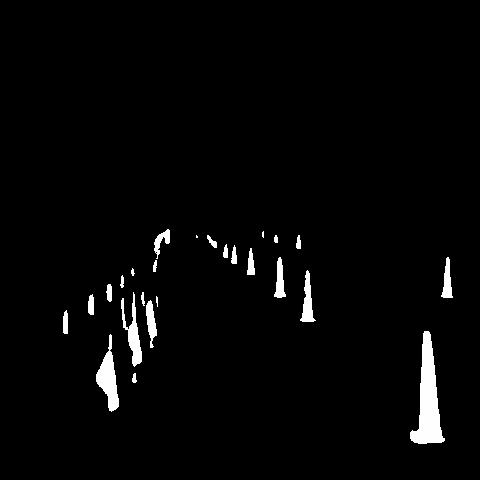

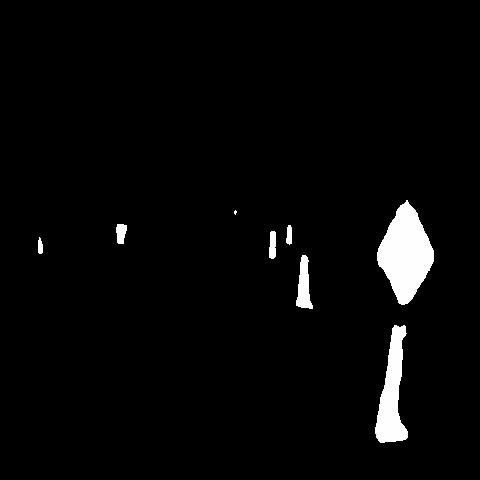

Figure panels: Original Image and Predicted Work Zone Objects.

To run the model:

cd /opt/app

python run_unet_patch.py \
  --config [optional:path to config file] \
  --mode ['train', 'test', 'test_single'] \
  --comment [optional:any comment while training] \
  --weight [optional:custom path to weight] \
  --device [optional:set device number if you have multiple GPUs]

Training Parameters:

| Training Parameters | Values |
|---|---|
| MODEL | Unet |
| BACKBONE | densenet169 |
| EPOCHS | 100 |
| LR | 0.001 |
| CROP_SHAPE | (224, 224) |
| BATCH_SIZE | 32 |
| OPTIMIZER | AdamW |
| #TRAINING Imgs. | 1643 |
| #VALIDATION Imgs. | 186 |
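The CROP_SHAPE parameter suggests that this variant trains on fixed-size patches rather than resized images. One common way to obtain such patches is random cropping, sketched below; whether run_unet_patch.py samples patches this way is an assumption, and the function `random_crop_box` is hypothetical.

```python
import random
from typing import Optional, Tuple


def random_crop_box(
    height: int,
    width: int,
    crop_h: int = 224,
    crop_w: int = 224,
    rng: Optional[random.Random] = None,
) -> Tuple[int, int, int, int]:
    """Return (top, left, bottom, right) of a random crop inside the image."""
    if height < crop_h or width < crop_w:
        raise ValueError("image is smaller than the requested crop")
    rng = rng or random.Random()
    top = rng.randint(0, height - crop_h)   # randint is inclusive on both ends
    left = rng.randint(0, width - crop_w)
    return top, left, top + crop_h, left + crop_w


if __name__ == "__main__":
    # Image size chosen for illustration only.
    top, left, bottom, right = random_crop_box(480, 720, rng=random.Random(0))
    print(top, left, bottom, right)
```

The same box would be applied to the image and to its segmentation mask so that the two stay aligned.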

The best F1 score achieved on testing set of 173 images: 0.611

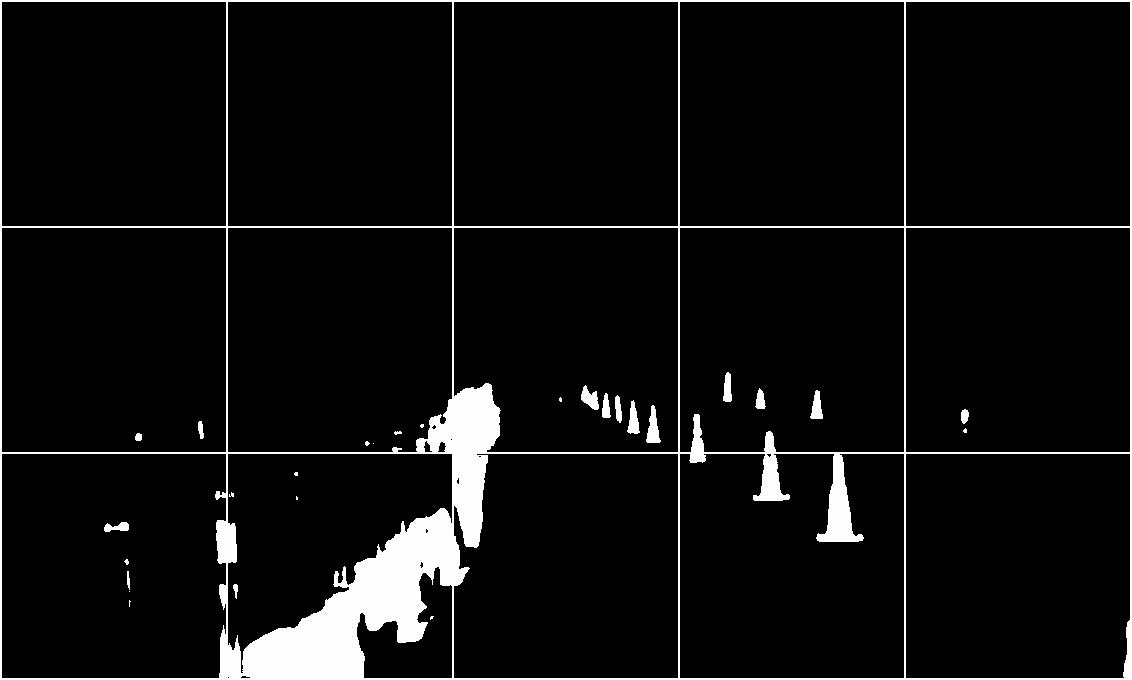

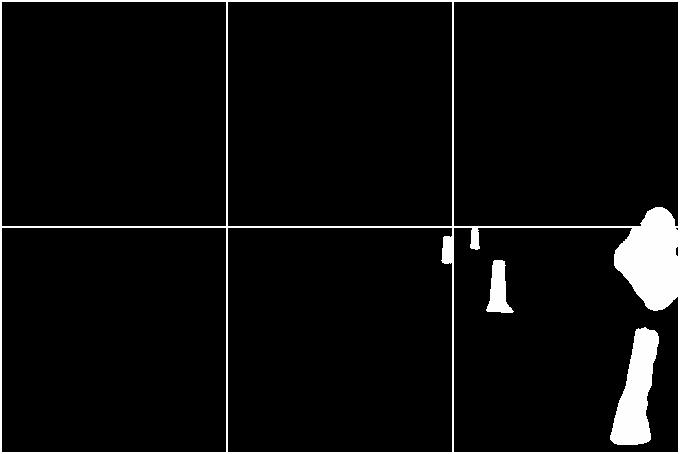

Figure panels: Original Image and Predicted Work Zone Objects.

Additional results are available in the ./output directory.

Download the weights of the suitable pre-trained model into the project directory.

The config file (config_baseline.yaml) currently uses ResNet18 as the model. To use ResNext50, set these values in the config:

MODEL: 'resnext50'
BACKBONE: 'resnext50'

To run the model (provide the relative path to the downloaded pre-trained weights in the --weight parameter):

cd /opt/app

python run_zone_baseline.py \
  --config [optional:path to config file] \
  --mode ['train', 'test', 'test_single'] \
  --comment [optional:any comment while training] \
  --weight [optional:custom path to weight] \
  --device [optional:set device number if you have multiple GPUs]

To run the older version:

cd /opt/app

python run_baseline.py \
  --config [optional:path to config file] \
  --mode ['train', 'test', 'test_single'] \
  --comment [optional:any comment while training] \
  --weight [optional:custom path to weight] \
  --device [optional:set device number if you have multiple GPUs]

NOTE: This older version does not support ResNext50.

Training Parameters:

| Training Parameters | Values |
|---|---|
| MODEL | Unet |
| BACKBONE | resnet18 |
| EPOCHS | 25 |
| LR | 0.001 |
| RESIZE_SHAPE | (240, 360) |
| BATCH_SIZE | 32 |
| OPTIMIZER | AdamW |
| #TRAINING Imgs. | 4250 |
| #VALIDATION Imgs. | 514 |

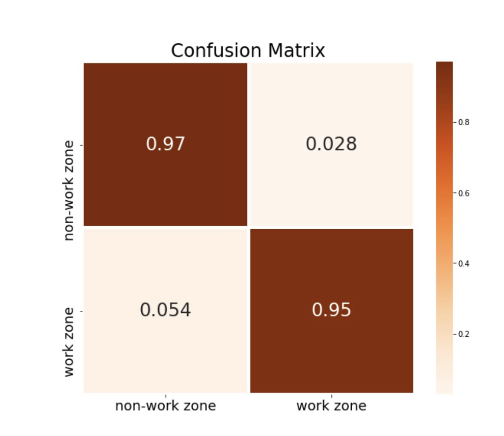

Figure: Normalized confusion matrix.
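A normalized confusion matrix is usually obtained by dividing each row of raw counts by its row total, so that every row shows the fraction of a true class assigned to each predicted class. The sketch below illustrates this row-wise convention; whether the figure uses row-wise normalization is an assumption, and the counts are made up for illustration.

```python
from typing import List, Sequence


def normalize_rows(matrix: Sequence[Sequence[float]]) -> List[List[float]]:
    """Divide every row by its sum; rows that sum to zero become all zeros."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([value / total if total else 0.0 for value in row])
    return normalized


if __name__ == "__main__":
    # Hypothetical counts: rows are true classes, columns are predictions.
    counts = [
        [90, 10],  # true work zone
        [20, 80],  # true non-work zone
    ]
    for row in normalize_rows(counts):
        print([round(value, 2) for value in row])
```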

[1] https://github.com/qubvel/segmentation_models.pytorch

[2] https://github.com/pytorch/pytorch