Wei Cao¹* · Chang Luo¹* · Biao Zhang²

Matthias Nießner¹ · Jiapeng Tang¹

¹Technical University of Munich · ²King Abdullah University of Science and Technology

(* indicates equal contribution)

CVPR 2024

[arXiv] [Paper] [Project Page] [Video]

We present Motion2VecSets, a 4D diffusion model for dynamic surface reconstruction from sparse, noisy, or partial point cloud sequences.

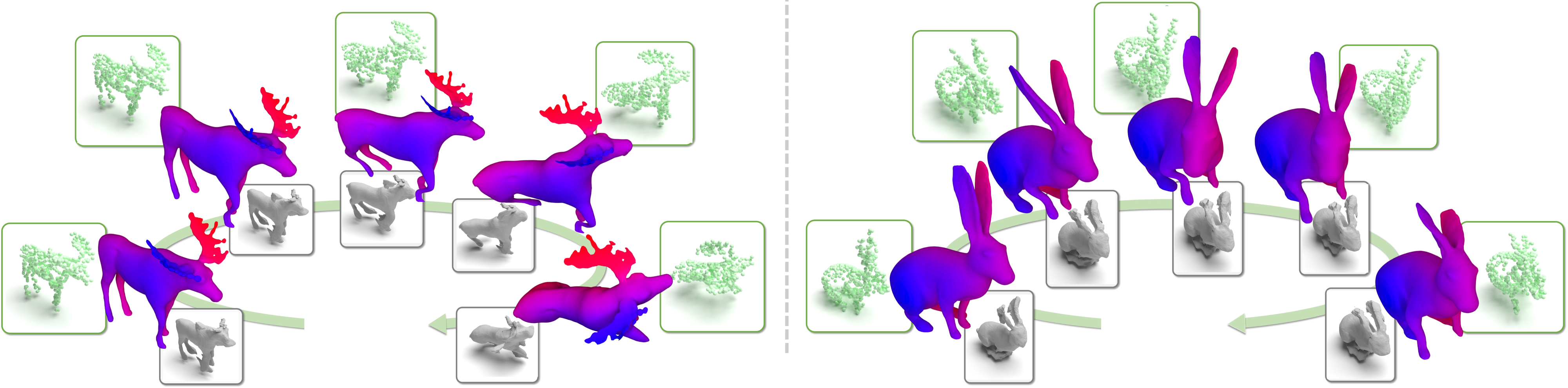

Compared with the existing state-of-the-art method CaDeX, our method reconstructs more plausible non-rigid object surfaces with complicated structures and achieves more robust motion tracking.


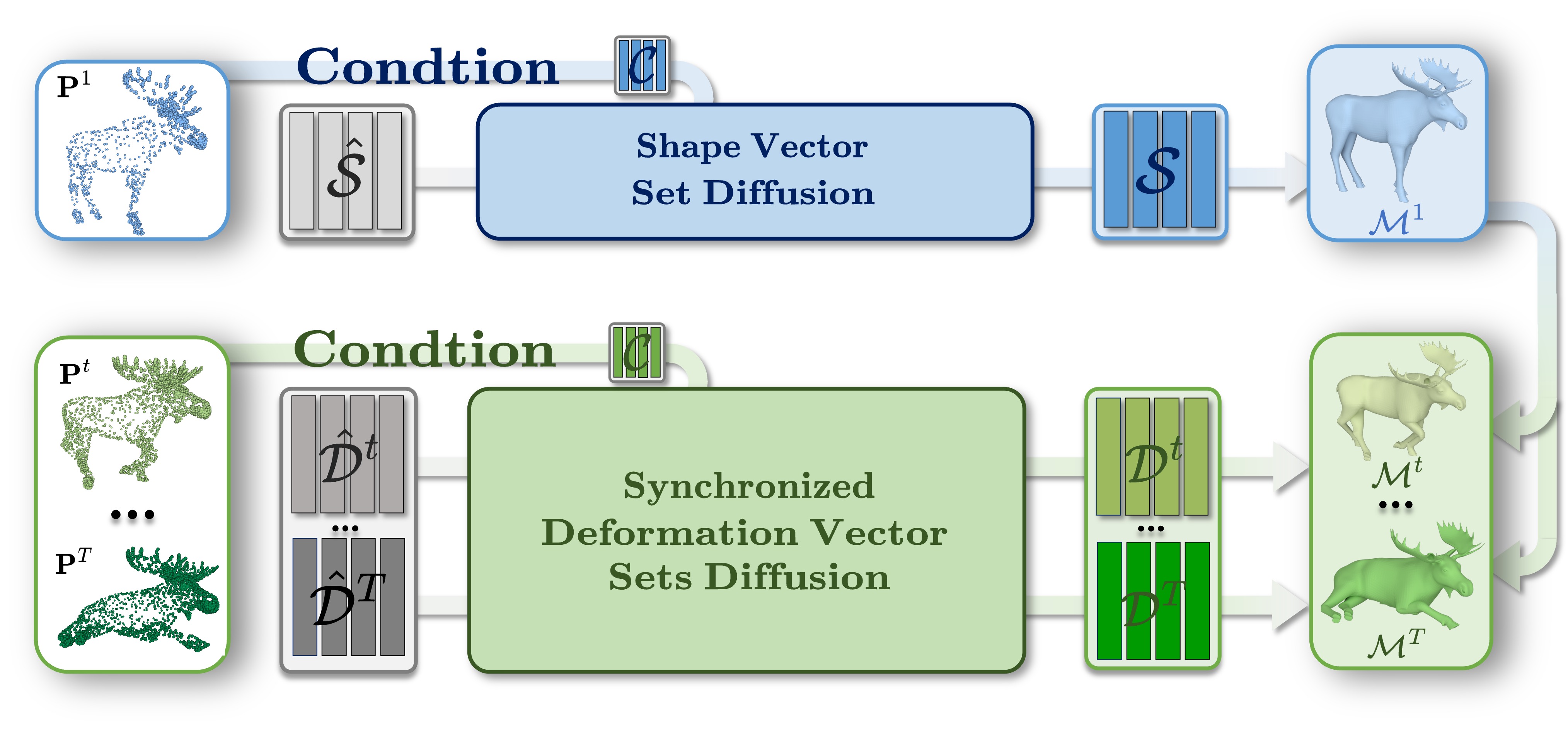

Given a sequence of sparse and noisy point clouds as inputs, labeled as Pt for t from 1 to T, Motion2VecSets outputs a continuous mesh sequence, labeled as Mt for t from 1 to T. The initial input frame, P1 (top left), is used as a condition in the Shape Vector Set Diffusion, which yields denoised shape codes, S, for reconstructing the geometry of the reference frame, M1 (top right). Concurrently, the subsequent input frames, Pt for t from 2 to T (bottom left), are utilized in the Synchronized Deformation Vector Sets Diffusion to produce denoised deformation codes, Dt for t from 2 to T, where each latent set, Dt, encodes the deformation from the reference frame, M1, to each subsequent frame, Mt.
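The following minimal Python sketch traces the data flow described in the caption. The four callables (`shape_diffusion`, `deform_diffusion`, `decode_shape`, `apply_deformation`) and the function `reconstruct_sequence` are hypothetical stand-ins, not functions from this repository. Only the wiring follows the text above:

- P1 is mapped to S, which is decoded into M1.
- P2 to PT are mapped jointly to D2 to DT.
- Each Dt is applied to M1 to produce Mt.

```python
from typing import Any, Callable, List, Sequence


def reconstruct_sequence(
    point_clouds: Sequence[Any],
    shape_diffusion: Callable[[Any], Any],
    deform_diffusion: Callable[[Sequence[Any]], List[Any]],
    decode_shape: Callable[[Any], Any],
    apply_deformation: Callable[[Any, Any], Any],
) -> List[Any]:
    """Illustrative wiring of the two diffusion branches (hypothetical API).

    Every callable is a placeholder for a trained network; none of them is
    part of the Motion2VecSets code base.
    """
    if not point_clouds:
        raise ValueError("need at least one input frame")
    p_ref, later_frames = point_clouds[0], point_clouds[1:]

    # Reference frame P_1 conditions the shape branch -> denoised shape code S.
    shape_code = shape_diffusion(p_ref)
    # S is decoded into the reference-frame geometry M_1.
    m_ref = decode_shape(shape_code)

    # Frames P_2..P_T are denoised together (synchronized) into D_2..D_T.
    deform_codes = deform_diffusion(later_frames)
    if len(deform_codes) != len(later_frames):
        raise ValueError("expected one deformation code per subsequent frame")

    # Each D_t encodes the deformation from M_1 to M_t, so every later mesh
    # is obtained by deforming the reference mesh, not the previous frame.
    meshes = [m_ref]
    meshes.extend(apply_deformation(m_ref, d_t) for d_t in deform_codes)
    return meshes
```

Because every Dt is defined relative to M1 rather than to M(t-1), errors in one frame's deformation do not feed into the next frame in this wiring. That matches the caption's statement that each latent set encodes the deformation from the reference frame.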

Set up the environment with `scripts/init_environment.sh`. To install with CUDA 11.0, run `bash scripts/init_environment.sh`.

You can find the data preparation scripts in `/home/liang/m2v/dataset/dataset_generate`, or you can directly download the preprocessed dataset.

Place the pretrained models in the `ckpts` directory, which contains two subfolders, `DFAUST` and `DT4D`, one for each dataset. The pretrained models are available on Google Drive.
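Before running training, evaluation or the demo, it can help to confirm that the checkpoint folders are in place. The sketch below is a hypothetical helper, `missing_checkpoint_dirs`, that is not part of the repository. It only checks that `ckpts/DFAUST` and `ckpts/DT4D` exist and are non-empty, because this README does not name the individual checkpoint files.

```python
from pathlib import Path
from typing import Iterable, List

# Subfolder names are taken from this README; the checkpoint file names
# inside them are not documented here, so only non-emptiness is checked.
EXPECTED_SUBDIRS = ("DFAUST", "DT4D")


def missing_checkpoint_dirs(
    root: str = "ckpts", subdirs: Iterable[str] = EXPECTED_SUBDIRS
) -> List[str]:
    """Return the expected checkpoint subfolders that are absent or empty."""
    base = Path(root)
    missing = []
    for name in subdirs:
        folder = base / name
        # A folder counts as missing if it does not exist or holds no entries.
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(name)
    return missing


if __name__ == "__main__":
    gaps = missing_checkpoint_dirs()
    if gaps:
        print("Missing or empty checkpoint folders:", ", ".join(gaps))
    else:
        print("ckpts/ layout looks complete.")
```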

Run training with the following commands. The DFAUST dataset is used as the example; for the DT4D dataset, change the config file path accordingly.

```bash
# Shape
python core/run.py --config_path ./configs/DFAUST/train/dfaust_shape_ae.yaml # Shape AE
python core/run.py --config_path ./configs/DFAUST/train/dfaust_shape_diff_sparse.yaml # Diffusion Sparse Input
python core/run.py --config_path ./configs/DFAUST/train/dfaust_shape_diff_partial.yaml # Diffusion Partial Input

# Deformation
python core/run.py --config_path ./configs/DFAUST/train/dfaust_deform_ae.yaml # Deformation AE
python core/run.py --config_path ./configs/DFAUST/train/dfaust_deform_diff_sparse.yaml # Diffusion Sparse Input
python core/run.py --config_path ./configs/DFAUST/train/dfaust_deform_diff_partial.yaml # Diffusion Partial Input
```

For evaluation, run the following commands:

```bash
python core/eval.py --config_path ./configs/DFAUST/eval/dfaust_eval_sparse.yaml # Test Sparse Unseen Sequence
python core/eval.py --config_path ./configs/DFAUST/eval/dfaust_eval_sparse.yaml --test_ui # Test Sparse Unseen Individual
python core/eval.py --config_path ./configs/DFAUST/eval/dfaust_eval_partial.yaml # Test Partial Unseen Sequence
python core/eval.py --config_path ./configs/DFAUST/eval/dfaust_eval_partial.yaml --test_ui # Test Partial Unseen Individual
```

To get the predicted mesh sequence for the inputs in `demo/inputs`, run `demo/infer.py`. Before running the demo, download the pretrained models and put them in the `ckpts` directory.
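The DFAUST training and evaluation commands listed earlier can be chained from a small driver script. The sketch below makes several assumptions:

- `build_commands` and `run_all` are hypothetical helpers, not part of the repository.
- The ordering puts each autoencoder before its diffusion model. This follows the order of the commands in this README and the assumption that the diffusion models operate on the autoencoders' latent codes.
- DT4D is not covered, because its config file names are not given here.

```python
import subprocess
import sys
from typing import List


def build_commands(setting: str, python: str = sys.executable) -> List[List[str]]:
    """Train + eval commands for one DFAUST input setting ("sparse" or "partial").

    Hypothetical helper: config paths are copied from the README commands.
    """
    if setting not in ("sparse", "partial"):
        raise ValueError(f"unknown setting: {setting!r}")
    train_dir = "./configs/DFAUST/train"
    eval_cfg = f"./configs/DFAUST/eval/dfaust_eval_{setting}.yaml"
    # Assumed order: each autoencoder is trained before the diffusion model
    # that works in its latent space.
    train_cfgs = [
        f"{train_dir}/dfaust_shape_ae.yaml",
        f"{train_dir}/dfaust_shape_diff_{setting}.yaml",
        f"{train_dir}/dfaust_deform_ae.yaml",
        f"{train_dir}/dfaust_deform_diff_{setting}.yaml",
    ]
    cmds = [[python, "core/run.py", "--config_path", cfg] for cfg in train_cfgs]
    # Unseen sequences, then unseen individuals (--test_ui).
    cmds.append([python, "core/eval.py", "--config_path", eval_cfg])
    cmds.append([python, "core/eval.py", "--config_path", eval_cfg, "--test_ui"])
    return cmds


def run_all(setting: str, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in build_commands(setting):
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError and stops at the first failure.
            subprocess.run(cmd, check=True)
```

For example, `run_all("sparse")` only prints the six commands, and `run_all("sparse", dry_run=False)` executes them in order. Both settings share the same two autoencoder configs. If you run the sparse and partial settings one after the other, you may want to skip the repeated autoencoder stages.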

```bibtex
@inproceedings{Wei2024M2V,
  title={Motion2VecSets: 4D Latent Vector Set Diffusion for Non-rigid Shape Reconstruction and Tracking},
  author={Wei Cao and Chang Luo and Biao Zhang and Matthias Nießner and Jiapeng Tang},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  url={https://vveicao.github.io/projects/Motion2VecSets/},
  year={2024}
}
```

This project is based on several wonderful projects:

- 3DShape2VecSet: https://github.com/1zb/3DShape2VecSet

- CaDeX: https://github.com/JiahuiLei/CaDeX

- LPDC: https://github.com/Gorilla-Lab-SCUT/LPDC-Net

- Occupancy Flow: https://github.com/autonomousvision/occupancy_flow

- DeformingThings4D: https://github.com/rabbityl/DeformingThings4D