This is a dedicated re-implementation of CLIP4STR: A Simple Baseline for Scene Text Recognition with Pre-trained Vision-Language Model.

- [02/05/2024] Add new CLIP4STR models pre-trained on DataComp-1B, LAION-2B, and DFN-5B. Add CLIP4STR models trained on RBU(6.5M).

This is a third-party implementation of the paper CLIP4STR: A Simple Baseline for Scene Text Recognition with Pre-trained Vision-Language Model.

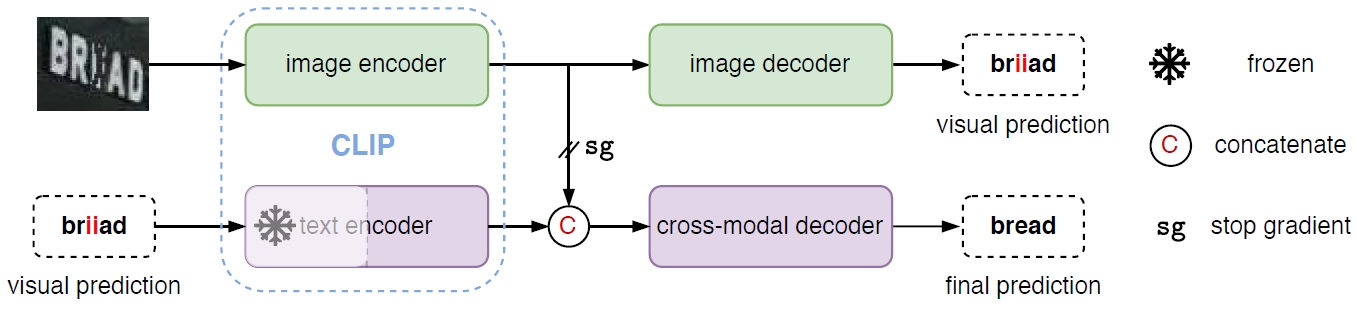

The framework of CLIP4STR. It has a visual branch and a cross-modal branch. The cross-modal branch refines the prediction of the visual branch for the final output. The text encoder is partially frozen.

CLIP4STR aims to build a scene text recognizer on top of a pre-trained vision-language model. In this re-implementation, we try to reproduce the performance of the original paper and evaluate the effectiveness of pre-trained VL models for scene text recognition (STR).

First of all, download the STR datasets and the CLIP pre-trained weights.

- We recommend following the instructions of PARSeq in parseq/Datasets.md. The gdrive links are gdrive-link1 and gdrive-link2 from PARSeq.

- For convenience, you can also download the STR dataset with real training images from BaiduYunPan: str_dataset.

- The RBU(6.5M) training dataset is a combination of [the above STR dataset] + [validation data of the benchmarks SVT, IIIT5K, IC13, and IC15] + [Union14M_L_lmdb_format]. For convenience, you can also download it from BaiduYunPan: str_dataset_ub.

- Weights of CLIP pre-trained models:

  - CLIP-ViT-B/32
  - CLIP-ViT-B/16
  - CLIP-ViT-L/14
  - OpenCLIP-ViT-B-16-DataComp-XL-s13B-b90K.bin
  - OpenCLIP-ViT-L-14-DataComp-XL-s13B-b90K.bin
  - OpenCLIP-ViT-H-14-laion2B-s32B-b79K.bin
  - appleDFN5B-CLIP-ViT-H-14.bin
  - Models downloaded from huggingface.co should be renamed to the names shown above.
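Renaming a downloaded checkpoint and putting it where the code expects it can be scripted. The sketch below is a minimal example, assuming the weights live in `${ABSOLUTE_ROOT}/pretrained/clip` as in the directory layout; `place_clip_weight` is a hypothetical helper, not part of the repository.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of CLIP4STR): copy a downloaded checkpoint
# into ${ABSOLUTE_ROOT}/pretrained/clip under the name listed in the README.
place_clip_weight() {
  local src="$1"        # path to the downloaded file
  local name="$2"       # target file name from the list above
  local root="${3:-.}"  # ${ABSOLUTE_ROOT}; defaults to the current directory
  local dest_dir="${root}/pretrained/clip"
  if [ ! -f "$src" ]; then
    echo "missing file: $src" >&2
    return 1
  fi
  mkdir -p "$dest_dir"
  cp "$src" "${dest_dir}/${name}"
}
```

For example, `place_clip_weight ~/Downloads/open_clip_pytorch_model.bin OpenCLIP-ViT-H-14-laion2B-s32B-b79K.bin "$ABSOLUTE_ROOT"`. The source file name here is only an assumption: OpenCLIP repositories on huggingface.co often ship `open_clip_pytorch_model.bin`, but check the actual file you downloaded.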

Generally, directories are organized as follows:

```
${ABSOLUTE_ROOT}
├── dataset
│   │
│   ├── str_dataset_ub
│   └── str_dataset
│       ├── train
│       │   ├── real
│       │   └── synth
│       ├── val
│       └── test
│
├── code
│   │
│   └── clip4str
│
├── output (save the output of the program)
│
│
├── pretrained
│   └── clip (download the CLIP pre-trained weights and put them here)
│       └── ViT-B-16.pt
│
...
```
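The empty skeleton of this layout can be created in one step. This is a sketch under the assumption that you want exactly the folders in the tree; `make_clip4str_layout` is a hypothetical helper, and it deliberately does not create `code/clip4str`, which is where the repository itself is cloned.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of CLIP4STR): create the empty directory
# skeleton from the tree above. Datasets and weights must still be downloaded
# into it, and the repository cloned into code/clip4str.
make_clip4str_layout() {
  local root="${1:?usage: make_clip4str_layout ABSOLUTE_ROOT}"
  mkdir -p \
    "${root}/dataset/str_dataset_ub" \
    "${root}/dataset/str_dataset/train/real" \
    "${root}/dataset/str_dataset/train/synth" \
    "${root}/dataset/str_dataset/val" \
    "${root}/dataset/str_dataset/test" \
    "${root}/code" \
    "${root}/output" \
    "${root}/pretrained/clip"
}
```

Usage: `make_clip4str_layout "$HOME/clip4str_root"`. Using `mkdir -p` makes the call safe to repeat on an existing root.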

Requires Python >= 3.8 and PyTorch >= 1.12.

The following commands were tested on a Linux machine with CUDA Driver Version 525.105.17 and CUDA Version 11.3.

```bash
conda create --name clip4str python=3.8.5
conda activate clip4str
conda install pytorch==1.12.0 torchvision==0.13.0 torchaudio==0.12.0 -c pytorch
pip install -r requirements.txt
```

If you run into problems when resuming training from an intermediate checkpoint, try upgrading PyTorch:

```bash
conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.7 -c pytorch -c nvidia
```
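To confirm that the activated environment meets the Python >= 3.8 and PyTorch >= 1.12 requirement, a quick check like the one below may help. It is a sketch: `version_ge` and `check_env` are hypothetical helpers, and the comparison relies on GNU coreutils `sort -V` (version sort).

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of CLIP4STR).
# version_ge A B succeeds if version A >= version B, using GNU `sort -V`.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# check_env prints the Python and PyTorch versions of the active environment
# and fails if either is below the stated minimum.
check_env() {
  local py torch
  py=$(python -c 'import platform; print(platform.python_version())') || return 1
  # Strip local build tags such as "+cu113" before comparing.
  torch=$(python -c 'import torch; print(torch.__version__.split("+")[0])') || return 1
  version_ge "$py" 3.8 || { echo "Python $py < 3.8" >&2; return 1; }
  version_ge "$torch" 1.12 || { echo "PyTorch $torch < 1.12" >&2; return 1; }
  echo "OK: Python $py, PyTorch $torch"
}
```

Run `check_env` after `conda activate clip4str`.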

CLIP4STR-B uses CLIP-ViT-B/16 as the backbone, and CLIP4STR-L uses CLIP-ViT-L/14 as the backbone.

| Method | Train data | IIIT5K | SVT | IC13 | IC15 | IC15 | SVTP | CUTE | HOST | WOST |
|---|---|---|---|---|---|---|---|---|---|---|
| | | 3,000 | 647 | 1,015 | 1,811 | 2,077 | 645 | 288 | 2,416 | 2,416 |
| CLIP4STR-B | MJ+ST | 97.70 | 95.36 | 96.06 | 87.47 | 84.02 | 91.47 | 94.44 | 80.01 | 86.75 |
| CLIP4STR-L | MJ+ST | 97.57 | 95.36 | 96.75 | 88.02 | 84.40 | 91.78 | 94.44 | 81.08 | 87.38 |
| CLIP4STR-B | Real(3.3M) | 99.20 | 98.30 | 98.23 | 91.44 | 90.61 | 96.90 | 99.65 | 77.36 | 87.87 |
| CLIP4STR-L | Real(3.3M) | 99.43 | 98.15 | 98.52 | 91.66 | 91.14 | 97.36 | 98.96 | 79.22 | 89.07 |

| Method | Train data | COCO | ArT | Uber | Checkpoint |
|---|---|---|---|---|---|
| | | 9,825 | 35,149 | 80,551 | |
| CLIP4STR-B | MJ+ST | 66.69 | 72.82 | 43.52 | a5e3386222 |
| CLIP4STR-L | MJ+ST | 67.45 | 73.48 | 44.59 | 3544c362f0 |
| CLIP4STR-B | Real(3.3M) | 80.80 | 85.74 | 86.70 | d70bde1f2d |
| CLIP4STR-L | Real(3.3M) | 81.97 | 85.83 | 87.36 | f125500adc |

All models are trained on RBU(6.5M).

| Method | Pre-train | Train | IIIT5K | SVT | IC13 | IC15 | IC15 | SVTP | CUTE | HOST | WOST |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | 3,000 | 647 | 1,015 | 1,811 | 2,077 | 645 | 288 | 2,416 | 2,416 |
| CLIP4STR-B | DC-1B | RBU | 99.5 | 98.3 | 98.6 | 91.4 | 91.1 | 98.0 | 99.0 | 79.3 | 88.8 |
| CLIP4STR-L | DC-1B | RBU | 99.6 | 98.6 | 99.0 | 91.9 | 91.4 | 98.1 | 99.7 | 81.1 | 90.6 |
| CLIP4STR-H | LAION-2B | RBU | 99.7 | 98.6 | 98.9 | 91.6 | 91.1 | 98.5 | 99.7 | 80.6 | 90.0 |
| CLIP4STR-H | DFN-5B | RBU | 99.5 | 99.1 | 98.9 | 91.7 | 91.0 | 98.0 | 99.0 | 82.6 | 90.9 |

| Method | Pre-train | Train | COCO | ArT | Uber | log | Checkpoint |
|---|---|---|---|---|---|---|---|
| | | | 9,825 | 35,149 | 80,551 | | |
| CLIP4STR-B | DC-1B | RBU | 81.3 | 85.8 | 92.1 | 6e9fe947ac_log | 6e9fe947ac, BaiduYun |
| CLIP4STR-L | DC-1B | RBU | 82.7 | 86.4 | 92.2 | 3c9d881b88_log | 3c9d881b88, BaiduYun |
| CLIP4STR-H | LAION-2B | RBU | 82.5 | 86.2 | 91.2 | 5eef9f86e2_log | 5eef9f86e2, BaiduYun |
| CLIP4STR-H | DFN-5B | RBU | 83.0 | 86.4 | 91.7 | 3e942729b1_log | 3e942729b1, BaiduYun |

- Before training, set the paths properly: find every `/PUT/YOUR/PATH/HERE` in `configs`, `scripts`, `strhub/vl_str`, and `strhub/str_adapter` (for example, the one in `configs/main.yaml`) and replace it with your own path. A global search-and-replace is recommended.
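The global search-and-replace can be done with `grep` and `sed`. This is a minimal sketch, assuming it is run from the repository root (`code/clip4str`) with GNU sed; on macOS, `sed -i ''` is needed instead of `sed -i`. `set_clip4str_path` is a hypothetical helper, and what path each placeholder should become is for you to decide.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of CLIP4STR): replace the path placeholder in
# the directories listed in the README. Assumes GNU sed and the repo root as
# the working directory. Paths containing '|', '&' or '\' would need escaping.
PLACEHOLDER='/PUT/YOUR/PATH/HERE'

set_clip4str_path() {
  local new_path="${1:?usage: set_clip4str_path /your/absolute/path}"
  local files
  # -r: recurse, -l: print matching file names only, -F: fixed-string match
  files=$(grep -rlF "$PLACEHOLDER" configs scripts strhub/vl_str strhub/str_adapter 2>/dev/null || true)
  [ -z "$files" ] && return 0
  # '|' is used as the sed delimiter so the slashes in both paths need no escaping.
  printf '%s\n' "$files" | while IFS= read -r f; do
    sed -i "s|${PLACEHOLDER}|${new_path}|g" "$f"
  done
}
```

Afterwards, `grep -rnF '/PUT/YOUR/PATH/HERE' configs scripts strhub` should print nothing.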

For CLIP4STR with CLIP-ViT-B, refer to:

```bash
bash scripts/vl4str_base.sh
```

8 NVIDIA GPUs with more than 24GB of memory per GPU are required.

For users with fewer GPUs, you can change `trainer.gpus=A`, `trainer.accumulate_grad_batches=B`, and `model.batch_size=C` in the bash scripts, as long as A * B * C = 1024.

There is no need to modify the code; PyTorch Lightning handles the rest.
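Given the number of GPUs (A) and the per-GPU batch size (C), the matching gradient-accumulation factor (B) follows directly from A * B * C = 1024. The sketch below computes it; `accum_grad_batches` is a hypothetical helper, and it simply refuses combinations where A * C does not divide 1024.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of CLIP4STR): compute B for
# trainer.accumulate_grad_batches so that A * B * C = 1024.
TOTAL_BATCH=1024

accum_grad_batches() {
  local gpus="$1" batch_size="$2"
  local per_step=$(( gpus * batch_size ))
  # Two separate tests so the modulo never runs with per_step = 0.
  if (( per_step <= 0 )) || (( TOTAL_BATCH % per_step != 0 )); then
    echo "gpus * batch_size must divide ${TOTAL_BATCH} (got ${per_step})" >&2
    return 1
  fi
  echo $(( TOTAL_BATCH / per_step ))
}
```

For example, with 4 GPUs and `model.batch_size=64`, `accum_grad_batches 4 64` prints `4`, so the script would use `trainer.gpus=4`, `trainer.accumulate_grad_batches=4`, and `model.batch_size=64`.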

For CLIP4STR with CLIP-ViT-L, refer to:

```bash
bash scripts/vl4str_large.sh
```

We also provide the training script for CLIP4STR + Adapter, as described in the original paper:

```bash
bash scripts/str_adapter.sh
```

To evaluate a checkpoint, run:

```bash
bash scripts/test.sh {gpu_id} {subpath_of_ckpt}
```

For example,

```bash
bash scripts/test.sh 0 clip4str_base16x16_d70bde1f2d.ckpt
```

To read the text in a folder of images, run:

```bash
bash scripts/read.sh {gpu_id} {subpath_of_ckpt} {image_folder_path}
```

For example,

```bash
bash scripts/read.sh 0 clip4str_base16x16_d70bde1f2d.ckpt misc/test_images
```

Output:

```
image_1576.jpeg: Chicken
```
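If you want to save the predictions for later analysis, the `filename: prediction` lines shown in the output can be converted to tab-separated values. This is a sketch under the assumption that every prediction line has exactly that shape; `predictions_to_tsv` is a hypothetical helper, and lines without `: ` are skipped.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of CLIP4STR): convert "filename: prediction"
# lines on stdin into "filename<TAB>prediction" lines on stdout.
predictions_to_tsv() {
  # The "|| [ -n ... ]" also handles a final line without a trailing newline.
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      *": "*)
        # Split at the first ": " so a prediction that contains ": " stays intact.
        printf '%s\t%s\n' "${line%%: *}" "${line#*: }"
        ;;
    esac
  done
}
```

Usage: `bash scripts/read.sh 0 clip4str_base16x16_d70bde1f2d.ckpt misc/test_images | predictions_to_tsv > predictions.tsv`. If the script also prints log lines containing `: ` to stdout, filter them out first.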

```bibtex
@article{zhao2023clip4str,
  title={Clip4str: A simple baseline for scene text recognition with pre-trained vision-language model},
  author={Zhao, Shuai and Quan, Ruijie and Zhu, Linchao and Yang, Yi},
  journal={arXiv preprint arXiv:2305.14014},
  year={2023}
}
```

- baudm/parseq

- openai/CLIP

- mlfoundations/open_clip

- huggingface/transformers

- large-ocr-model/large-ocr-model.github.io

- Mountchicken/Union14M

- mzhaoshuai/CenterCLIP

- VamosC/CoLearning-meet-StitchUp

- VamosC/CapHuman

- Dr. Xiaohan Wang from Stanford University.