The implementation of TNNLS 2020 paper "3D Quasi-Recurrent Neural Network for Hyperspectral Image Denoising"
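For readers new to the architecture, the "quasi-recurrent" part of the name refers to a gated recurrence that stays cheap because only an elementwise update runs sequentially. In QRNN3D the candidate and gate tensors come from 3D convolutions, and the recurrence runs along the spectral (band) axis. The NumPy sketch below shows only the f-pooling recurrence from the original QRNN formulation, h_b = f_b * h_{b-1} + (1 - f_b) * z_b. The random arrays stand in for convolution outputs, and `f_pooling` is a hypothetical helper, not code from this repository, whose PyTorch layers may differ in detail.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def f_pooling(z, f, h0=None):
    """Quasi-recurrent f-pooling along the band axis (axis 0).

    z: candidate features, already tanh-activated, shape (bands, ...)
    f: forget gates in (0, 1), same shape as z
    Returns h with h[b] = f[b] * h[b - 1] + (1 - f[b]) * z[b].
    """
    h = np.empty_like(z)
    prev = np.zeros_like(z[0]) if h0 is None else h0
    for b in range(z.shape[0]):
        # Only this elementwise loop is sequential; the gates can be computed in parallel.
        prev = f[b] * prev + (1.0 - f[b]) * z[b]
        h[b] = prev
    return h


rng = np.random.default_rng(0)
bands, height, width = 31, 8, 8
# Hypothetical stand-ins for the two 3D-convolution outputs of one layer.
z = np.tanh(rng.standard_normal((bands, height, width)))
f = sigmoid(rng.standard_normal((bands, height, width)))
h_forward = f_pooling(z, f)
# Reversing the band axis runs the same recurrence in the opposite spectral direction.
h_backward = f_pooling(z[::-1], f[::-1])[::-1]
print(h_forward.shape)  # (31, 8, 8)
```

The paper alternates the pooling direction across layers; `h_backward` only illustrates what one reversed pass computes, not how the repository wires the layers together.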

🌟 See also the follow-up works of QRNN3D:

- DPHSIR - Plug-and-play QRNN3D that solves any HSI restoration task in one model.

- HSDT - State-of-the-art HSI denoising transformer that follows the 3D paradigm of QRNN3D.

- MAN - Improved QRNN3D with a significant performance gain and fewer parameters.

📉 Performance: QRNN3D < DPHSIR < MAN < HSDT

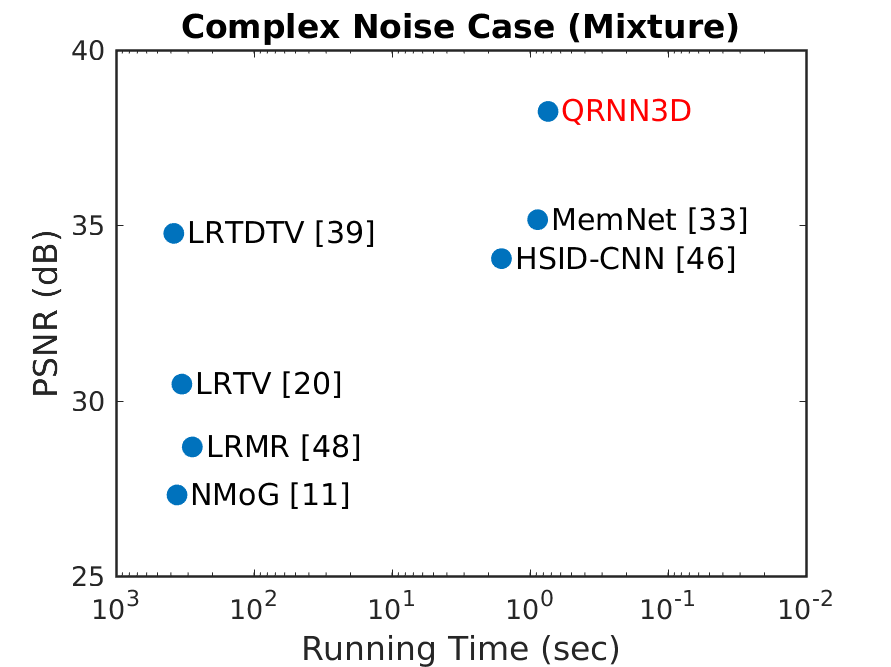

- Our network outperforms all leading-edge methods (as of 2019) on the ICVL dataset in both Gaussian and complex noise cases.

- We demonstrate that our network, pretrained on the 31-band natural HSI database (ICVL), can be used to recover remotely sensed HSIs (> 100 bands) corrupted by real-world non-Gaussian noise caused by atmospheric and water absorption.

- Python >= 3.5, PyTorch >= 0.4.1

- Requirements: opencv-python, tensorboardX, caffe

- Platforms: Ubuntu 16.04, CUDA 8.0

Download the ICVL hyperspectral image database (only the .mat version is needed).

- The train-test split can be found in `ICVL_train.txt` and `ICVL_test_*.txt`. (Note that we split the 101 test images into two parts, for Gaussian and complex denoising respectively.)
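Before building the dataset, it can help to check that every scene named in a split list was actually downloaded. A minimal sketch, assuming each line of `ICVL_train.txt` / `ICVL_test_*.txt` holds one scene name with or without the `.mat` extension; that file format and the `read_split` helper are assumptions, not part of the repository.

```python
import tempfile
from pathlib import Path


def read_split(list_file, data_dir):
    """Sort the names in a split file into (present, missing) .mat paths.

    Assumes one file name per line; '.mat' is appended when a name lacks it.
    """
    names = [line.strip() for line in Path(list_file).read_text().splitlines()]
    paths = [Path(data_dir) / (n if n.endswith(".mat") else n + ".mat") for n in names if n]
    present = [p for p in paths if p.exists()]
    missing = [p for p in paths if not p.exists()]
    return present, missing


# Self-contained demo with a throwaway directory and made-up file names.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "scene_a.mat").touch()
    split = Path(tmp) / "train_list.txt"
    split.write_text("scene_a\nscene_b.mat\n\n")
    present, missing = read_split(split, tmp)
    print([p.name for p in present], [p.name for p in missing])
    # ['scene_a.mat'] ['scene_b.mat']
```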

Note: caffe (installed via conda) and lmdb are required to execute the following instructions.

- Read the function `create_icvl64_31` in `utility/lmdb_data.py` and follow the instructions in its comments to set your data and dataset paths.

- Create the ICVL training dataset by running:

python utility/lmdb_data.py
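The function name suggests 64×64 spatial patches with 31 bands. The NumPy sketch below illustrates that kind of preprocessing under two assumptions of its own, per-scene min-max normalization and a stride of 32; check `create_icvl64_31` for the actual crop sizes, scales, strides and the LMDB writing step, which is omitted here. `minmax_normalize` and `extract_patches` are hypothetical helpers.

```python
import numpy as np


def minmax_normalize(cube):
    """Scale a cube to [0, 1]; a constant cube maps to all zeros."""
    lo, hi = cube.min(), cube.max()
    return (cube - lo) / (hi - lo) if hi > lo else np.zeros_like(cube, dtype=float)


def extract_patches(cube, patch=64, stride=32):
    """Cut a (bands, H, W) cube into an array of shape (N, bands, patch, patch)."""
    bands, height, width = cube.shape
    patches = [
        cube[:, top:top + patch, left:left + patch]
        for top in range(0, height - patch + 1, stride)
        for left in range(0, width - patch + 1, stride)
    ]
    if not patches:  # cube is smaller than one patch
        return np.empty((0, bands, patch, patch), dtype=cube.dtype)
    return np.stack(patches)


# A random cube (arbitrary scale) stands in for one scene loaded as (bands, H, W).
cube = minmax_normalize(np.random.default_rng(0).random((31, 128, 160)) * 4095)
patches = extract_patches(cube)
print(patches.shape)  # (12, 31, 64, 64)
```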

Note: MATLAB is required to execute the following instructions.

- Read the MATLAB code in `matlab/generate_dataset*` to understand how we generate noisy HSIs.

- Read and modify the MATLAB code in `matlab/HSIData.m` to generate your own testing dataset.
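For readers without MATLAB, the NumPy sketch below illustrates the two broad noise settings the pretrained models target: blind Gaussian noise, where each sample gets its own noise level, and a simplified complex case that mixes band-wise (non-i.i.d.) Gaussian noise with impulse noise. It is not a port of `matlab/generate_dataset*`: the sigma values, the band ratio, the impulse amount and the helper names are illustrative assumptions, and other structured noise types the MATLAB scripts may generate (such as stripes) are not modeled.

```python
import numpy as np


def add_gaussian(clean, sigma, rng):
    """i.i.d. Gaussian noise; sigma is on the 0-255 scale, data is in [0, 1]."""
    return clean + rng.standard_normal(clean.shape) * (sigma / 255.0)


def add_noniid_gaussian(clean, sigma_range, rng):
    """Band-wise Gaussian noise: each band draws its own sigma from sigma_range."""
    per_band = rng.uniform(*sigma_range, size=clean.shape[0]) / 255.0
    return clean + rng.standard_normal(clean.shape) * per_band[:, None, None]


def add_impulse(noisy, band_ratio, amount, rng):
    """Salt-and-pepper noise on a random subset of bands."""
    out = noisy.copy()
    bands = out.shape[0]
    n_bands = max(1, int(round(band_ratio * bands)))
    for b in rng.choice(bands, size=n_bands, replace=False):
        mask = rng.random(out[b].shape) < amount
        out[b][mask] = rng.integers(0, 2, size=int(mask.sum()))  # 0 = pepper, 1 = salt
    return out


rng = np.random.default_rng(0)
clean = rng.random((31, 64, 64))
# "Blind" Gaussian: a different sigma per sample (illustrative values).
noisy_gauss = add_gaussian(clean, rng.choice([30, 50, 70]), rng)
# Simplified complex case: non-i.i.d. Gaussian plus impulse noise in a third of the bands.
noisy_complex = add_impulse(add_noniid_gaussian(clean, (10, 70), rng), 1 / 3, 0.1, rng)
```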

- Download our pretrained models from OneDrive and move them to `checkpoints/qrnn3d/gauss/` and `checkpoints/qrnn3d/complex/`, respectively.

[Blind Gaussian noise removal]:

python hsi_test.py -a qrnn3d -p gauss -r -rp checkpoints/qrnn3d/gauss/model_epoch_50_118454.pth

[Mixture noise removal]:

python hsi_test.py -a qrnn3d -p complex -r -rp checkpoints/qrnn3d/complex/model_epoch_100_159904.pth

You can also use `hsi_eval.py` to quantitatively evaluate HSI denoising performance.
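`hsi_eval.py` is the authoritative evaluation script. As a rough, independent sketch of two metrics commonly reported for HSI denoising, the code below computes the mean PSNR over bands and the mean spectral angle (SAM, in radians) for cubes of shape (bands, H, W) scaled to [0, 1]. Whether `hsi_eval.py` uses exactly these definitions, and which further metrics (such as SSIM) it reports, is an assumption to verify against the script; `mpsnr` and `sam` are hypothetical names.

```python
import numpy as np


def mpsnr(clean, denoised, data_range=1.0, eps=1e-12):
    """Mean of per-band PSNR values (dB) for (bands, H, W) arrays."""
    mse = np.mean((clean - denoised) ** 2, axis=(1, 2))
    # eps avoids division by zero for a perfect reconstruction (120 dB cap when data_range=1).
    return float(np.mean(10.0 * np.log10(data_range ** 2 / np.maximum(mse, eps))))


def sam(clean, denoised, eps=1e-12):
    """Mean spectral angle (radians) between the per-pixel spectra."""
    x = clean.reshape(clean.shape[0], -1)
    y = denoised.reshape(denoised.shape[0], -1)
    norms = np.maximum(np.linalg.norm(x, axis=0) * np.linalg.norm(y, axis=0), eps)
    cos = np.clip(np.sum(x * y, axis=0) / norms, -1.0, 1.0)
    return float(np.mean(np.arccos(cos)))


clean = np.full((31, 16, 16), 0.5)
denoised = clean + 0.1
print(round(mpsnr(clean, denoised), 6))  # 20.0
print(round(sam(clean, denoised), 6))    # 0.0
```

The example shows why both metrics are useful: a uniform brightness offset lowers PSNR but leaves every spectrum's direction unchanged, so SAM stays at zero.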

- First, train a blind Gaussian model:

python hsi_denoising_gauss.py -a qrnn3d -p gauss --dataroot (your own dataroot)

- Then, use the pretrained Gaussian model as initialization to train a complex model:

python hsi_denoising_complex.py -a qrnn3d -p complex --dataroot (your own dataroot) -r -rp checkpoints/qrnn3d/gauss/model_epoch_50_118454.pth --no-ropt

If you find this work useful for your research, please cite:

@article{wei2020QRNN3D,
  title={3-D Quasi-Recurrent Neural Network for Hyperspectral Image Denoising},
  author={Wei, Kaixuan and Fu, Ying and Huang, Hua},
  journal={IEEE Transactions on Neural Networks and Learning Systems},
  year={2020},
  publisher={IEEE}
}

and follow-up works:

@article{lai2022dphsir,
  title = {Deep plug-and-play prior for hyperspectral image restoration},
  journal = {Neurocomputing},
  volume = {481},
  pages = {281-293},
  year = {2022},
  issn = {0925-2312},
  doi = {https://doi.org/10.1016/j.neucom.2022.01.057},
  author = {Zeqiang Lai and Kaixuan Wei and Ying Fu},
}

@inproceedings{lai2023hsdt,
  author = {Lai, Zeqiang and Yan, Chenggang and Fu, Ying},
  title = {Hybrid Spectral Denoising Transformer with Guided Attention},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision},
  year = {2023},
}

@article{lai2023mixed,
  title={Mixed Attention Network for Hyperspectral Image Denoising},
  author={Lai, Zeqiang and Fu, Ying},
  journal={arXiv preprint arXiv:2301.11525},
  year={2023}
}

If you have any questions, please contact me (kxwei@princeton.edu, kaixuan_wei@bit.edu.cn).