[Project Page] [arXiv] [PDF] [Suppli] [Slides] [BibTeX]

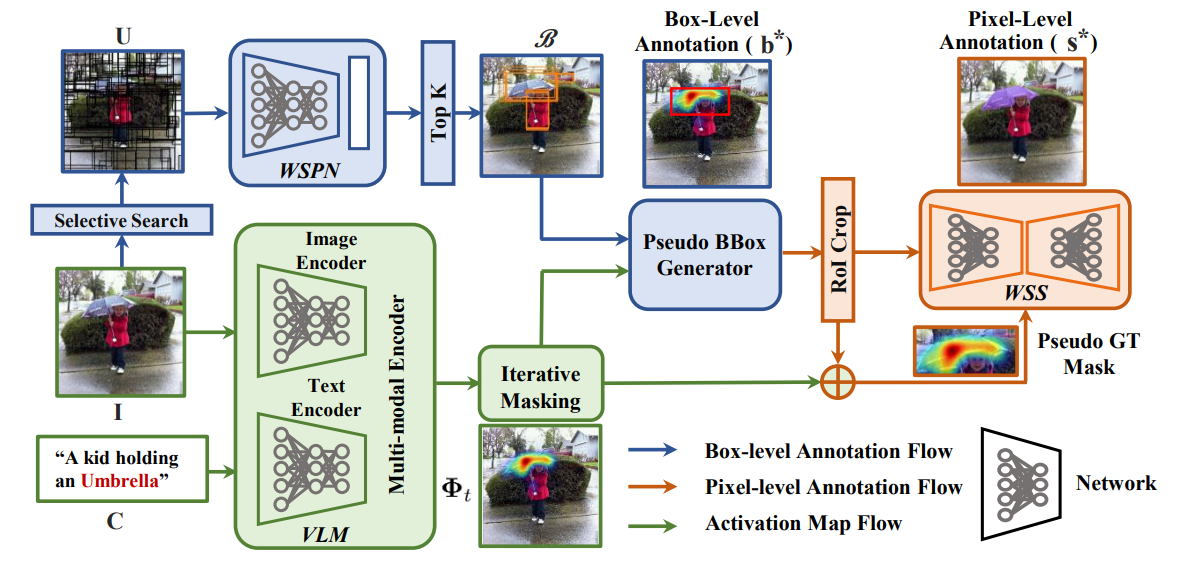

- Utilize a pre-trained Vision-Language model to identify and localize the object of interest using GradCAM.

- Employ a weakly-supervised proposal generator to generate bounding box proposals and select the proposal with the highest overlap with the GradCAM map.

- Crop the image based on the selected proposal and leverage the GradCAM map as a weak prompt to extract a mask using a weakly-supervised segmentation network.

- Using these generated masks, train an instance segmentation model (Mask R-CNN), eliminating the need for human-provided box-level or pixel-level annotations.
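The proposal-selection step in the list above can be sketched as follows. This is a minimal, dependency-free illustration and not the repository's implementation: the function name `select_proposal`, the relative threshold of 0.5, and the use of IoU against the thresholded activation map as the "overlap" measure are all assumptions made for the example.

```python
def select_proposal(cam, proposals, threshold=0.5):
    """Return (index, iou) of the box that best overlaps the activated CAM region.

    cam:       2D list of non-negative activations, indexed as cam[y][x]
    proposals: list of (x0, y0, x1, y1) boxes, with x1 and y1 exclusive
    threshold: assumed cutoff, as a fraction of the peak activation
    """
    peak = max(max(row) for row in cam)
    # Pixels counted as "activated": at least `threshold` times the peak value.
    active = {
        (x, y)
        for y, row in enumerate(cam)
        for x, value in enumerate(row)
        if value >= threshold * peak
    }
    best_index, best_iou = -1, -1.0
    for index, (x0, y0, x1, y1) in enumerate(proposals):
        box = {(x, y) for y in range(y0, y1) for x in range(x0, x1)}
        union = len(active | box)
        iou = len(active & box) / union if union else 0.0
        if iou > best_iou:
            best_index, best_iou = index, iou
    return best_index, best_iou


def crop(image, box):
    """Crop a 2D list image[y][x] to the selected (x0, y0, x1, y1) box."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]
```

Plain lists keep the sketch self-contained; with array-based activation maps the same set logic would normally be written as boolean-mask operations.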

UBUNTU="18.04"

CUDA="11.0"

CUDNN="8"

conda create --name pseduo_mask_gen

conda activate pseduo_mask_gen

bash pseduo_mask_gen.sh

- Refer to examples/README.md for data preparation

python pseudo_mask_generator.py

- Organize dataset in COCO format

python prepare_coco_dataset.py
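For orientation, the sketch below shows what a COCO-style annotation file contains: `images`, `annotations`, and `categories` lists, with each box stored as `[x, y, width, height]`. It is not the logic of `prepare_coco_dataset.py`; the input record layout (`file_name`, `width`, `height`, `instances`, `label`, `polygon`) and the function names are hypothetical and chosen only for the example.

```python
import json


def polygon_area(flat_xy):
    """Shoelace formula over a flat [x0, y0, x1, y1, ...] polygon."""
    xs, ys = flat_xy[0::2], flat_xy[1::2]
    n = len(xs)
    twice_area = sum(
        xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n)
    )
    return abs(twice_area) / 2.0


def to_coco(records, class_names):
    """Convert per-image records (hypothetical layout) into a COCO-style dict."""
    categories = [{"id": i + 1, "name": name} for i, name in enumerate(class_names)]
    name_to_id = {category["name"]: category["id"] for category in categories}
    images, annotations = [], []
    for image_id, record in enumerate(records, start=1):
        images.append({
            "id": image_id,
            "file_name": record["file_name"],
            "width": record["width"],
            "height": record["height"],
        })
        for instance in record["instances"]:
            polygon = instance["polygon"]
            xs, ys = polygon[0::2], polygon[1::2]
            x, y = min(xs), min(ys)
            annotations.append({
                "id": len(annotations) + 1,
                "image_id": image_id,
                "category_id": name_to_id[instance["label"]],
                "segmentation": [polygon],  # one polygon per instance
                "bbox": [x, y, max(xs) - x, max(ys) - y],  # COCO uses x, y, w, h
                "area": polygon_area(polygon),
                "iscrowd": 0,
            })
    return {"images": images, "annotations": annotations, "categories": categories}


records = [{
    "file_name": "example.jpg",  # hypothetical file
    "width": 640,
    "height": 480,
    "instances": [{"label": "dog", "polygon": [10, 20, 30, 20, 30, 60, 10, 60]}],
}]
coco = to_coco(records, ["cat", "dog"])
coco_json = json.dumps(coco)
```

Writing `coco_json` to disk gives a file that standard COCO tooling can usually read, provided the pseudo-masks have first been converted to polygons or another supported segmentation encoding.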

- Extract text embedding using CLIP

# pip install git+https://github.com/openai/CLIP.git

python prepare_clip_embedding_for_open_vocab.py
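As a rough picture of what the text-embedding step produces, the sketch below turns class names into prompts and L2-normalizes the vectors returned by a text encoder. It is an assumption-laden illustration, not the contents of `prepare_clip_embedding_for_open_vocab.py`: the prompt template, the function name `build_text_embeddings`, and the `encode_fn` callable are hypothetical, and the CLIP model name in the comment is only an example.

```python
import math


def build_text_embeddings(class_names, encode_fn, template="a photo of a {}"):
    """Map each class name to a unit-length text embedding.

    encode_fn takes a list of prompt strings and returns one vector
    (a sequence of floats) per prompt.
    """
    prompts = [template.format(name) for name in class_names]
    vectors = encode_fn(prompts)
    embeddings = {}
    for name, vector in zip(class_names, vectors):
        norm = math.sqrt(sum(value * value for value in vector))
        # Leave all-zero vectors untouched to avoid dividing by zero.
        embeddings[name] = [value / norm for value in vector] if norm else list(vector)
    return embeddings


# With openai/CLIP installed, encode_fn could wrap the real encoder
# (model choice here is an assumption):
#   import clip
#   model, _ = clip.load("ViT-B/32", device="cpu")
#   encode_fn = lambda prompts: model.encode_text(clip.tokenize(prompts)).tolist()
```

Normalizing to unit length means a dot product between a region feature and a class embedding behaves as a cosine similarity, which is a common way to score open-vocabulary classes.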

- Visualize the final pseudo-masks to check them

python visualize_coco_style_dataset.py

conda create --name maskfree_ovis

conda activate maskfree_ovis

cd $INSTALL_DIR

bash ovis.sh

git clone https://github.com/NVIDIA/apex.git

cd apex

python setup.py install --cuda_ext --cpp_ext

cd ../

cuda_dir="maskrcnn_benchmark/csrc/cuda"

perl -i -pe 's/AT_CHECK/TORCH_CHECK/' $cuda_dir/deform_pool_cuda.cu $cuda_dir/deform_conv_cuda.cu

python setup.py build develop
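The `perl` substitution in the build steps above renames the `AT_CHECK` macro to `TORCH_CHECK` in the two deformable-op CUDA sources, presumably because newer PyTorch releases dropped the older macro. Where `perl` is unavailable, an equivalent in-place edit could look like the sketch below; `rename_macro` is a hypothetical helper and, like the one-liner, it replaces only the first occurrence on each line.

```python
from pathlib import Path


def rename_macro(paths, old="AT_CHECK", new="TORCH_CHECK"):
    """Replace the first occurrence of `old` on every line, editing files in place.

    Returns the number of lines that were changed.
    """
    changed = 0
    for path in map(Path, paths):
        lines = path.read_text().splitlines(keepends=True)
        patched = [line.replace(old, new, 1) for line in lines]
        changed += sum(before != after for before, after in zip(lines, patched))
        path.write_text("".join(patched))
    return changed


# Intended use from the repository root (paths taken from the command above):
#   cuda_dir = Path("maskrcnn_benchmark/csrc/cuda")
#   rename_macro([cuda_dir / "deform_pool_cuda.cu", cuda_dir / "deform_conv_cuda.cu"])
```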

- Follow the steps in datasets/README.md for data preparation

python -m torch.distributed.launch --nproc_per_node=8 tools/train_net.py --distributed \
    --config-file configs/pretrain_pseduo_mask.yaml OUTPUT_DIR $OUTPUT_DIR

python -m torch.distributed.launch --nproc_per_node=8 tools/train_net.py --distributed \
    --config-file configs/finetune.yaml MODEL.WEIGHT $PATH_TO_PRETRAIN_MODEL OUTPUT_DIR $OUTPUT_DIR
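Both training commands share the same shape and differ only in the config file and the optional `MODEL.WEIGHT` override. When scripting the two stages, the argument list could be assembled as in the sketch below. `build_train_command` and the `output/...` paths are hypothetical; only the flags and config names shown in the commands above are used.

```python
import shlex


def build_train_command(config_file, output_dir, num_gpus=8, weight=None):
    """Assemble the launch command as an argument list (suitable for subprocess.run)."""
    command = [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={num_gpus}",
        "tools/train_net.py", "--distributed",
        "--config-file", config_file,
    ]
    if weight is not None:
        # Fine-tuning starts from the checkpoint produced by pre-training.
        command += ["MODEL.WEIGHT", weight]
    command += ["OUTPUT_DIR", output_dir]
    return command


pretrain = build_train_command("configs/pretrain_pseduo_mask.yaml", "output/pretrain")
finetune = build_train_command(
    "configs/finetune.yaml",
    "output/finetune",
    weight="output/pretrain/model_final.pth",  # hypothetical checkpoint path
)
print(shlex.join(pretrain))
print(shlex.join(finetune))
```

Building a list rather than a single string avoids shell-quoting problems if a path contains spaces.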

'Coming Soon...!!!'

If you find Mask-free OVIS useful in your research, please consider starring ⭐ the repository on GitHub and citing 📚 our paper!

@inproceedings{vs2023mask,
  title={Mask-free OVIS: Open-Vocabulary Instance Segmentation without Manual Mask Annotations},
  author={VS, Vibashan and Yu, Ning and Xing, Chen and Qin, Can and Gao, Mingfei and Niebles, Juan Carlos and Patel, Vishal M and Xu, Ran},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={23539--23549},
  year={2023}
}