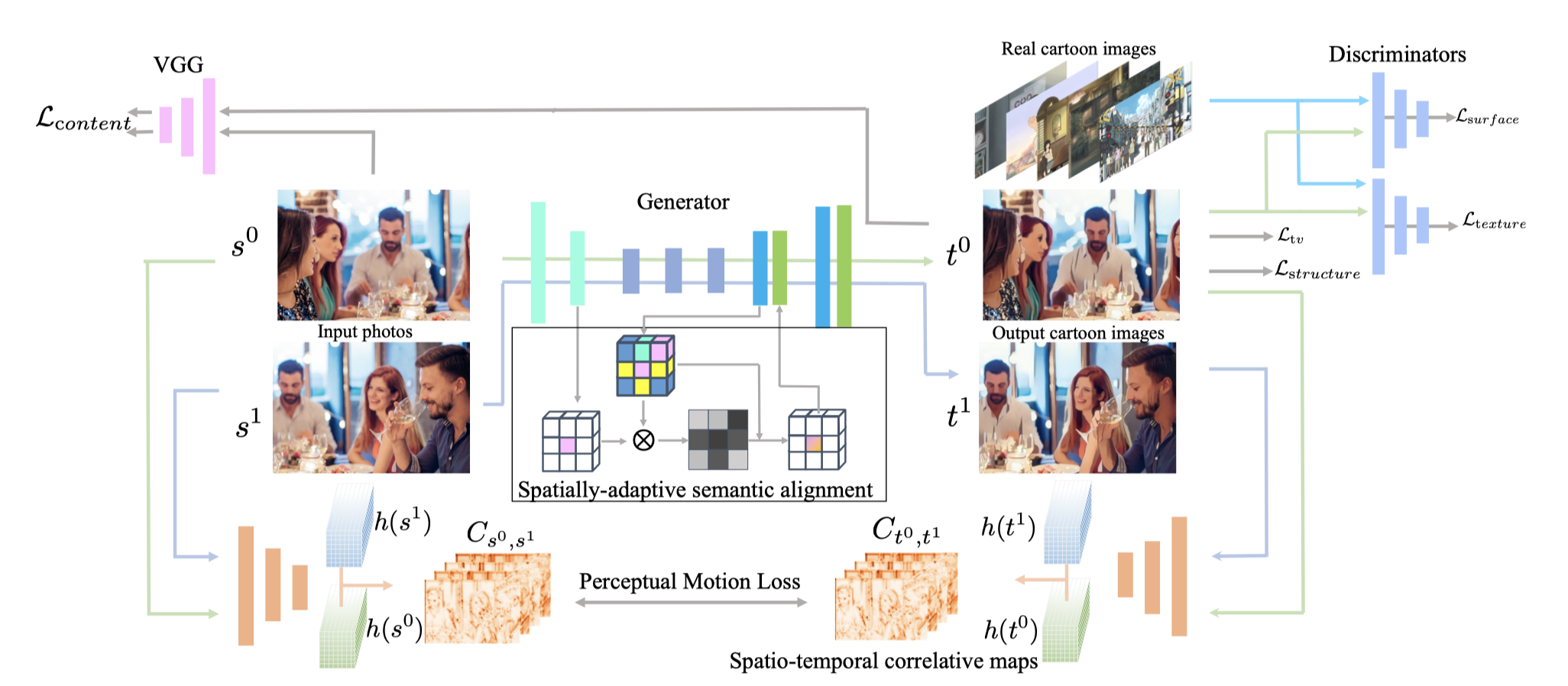

Unsupervised Coherent Video Cartoonization with Perceptual Motion Consistency. AAAI 2022. Arxiv

conda env create -f environment.yaml

conda activate video-animation

- Preparing Training Data: download the datasets from this drive and unzip them into the datasets folder.

- Download the pretrained VGG from here, unzip it, and put it at models/vgg19.npy

- Start training.

CUDA_VISIBLE_DEVICES=0 python train.py --exp_name with-pmc --temporal_weight 1.0
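Before launching a long training run, it can help to confirm that the inputs described in the steps above are in place. The following is a minimal sketch, not part of the repository: the function name `missing_training_inputs` is hypothetical, and the two paths are taken from the instructions above on the assumption that they are relative to the repository root.

```python
from pathlib import Path

# Paths named in the setup steps above; assumed to be relative to the
# repository root. Adjust if your layout differs.
REQUIRED_PATHS = ("datasets", "models/vgg19.npy")


def missing_training_inputs(repo_root="."):
    """Return the required paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in REQUIRED_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_training_inputs()
    # Only reports; it does not download or create anything.
    print("Missing:", ", ".join(missing) if missing else "nothing")
```

Running it from the repository root prints the paths that still need to be downloaded, or `Missing: nothing` when both are present.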

- Download the pretrained network from Google Drive.

- Translate images in input directory and save into output directory.

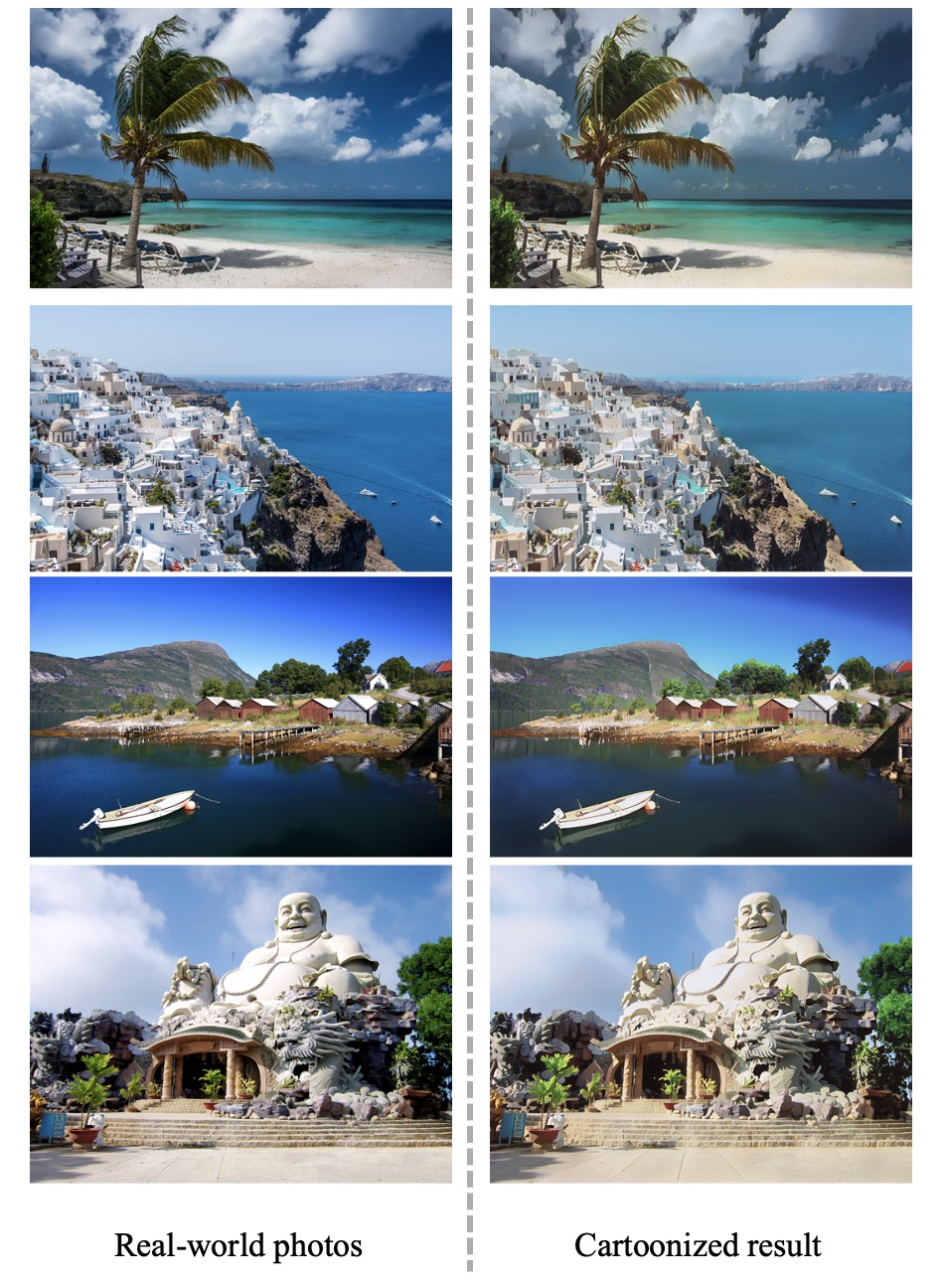

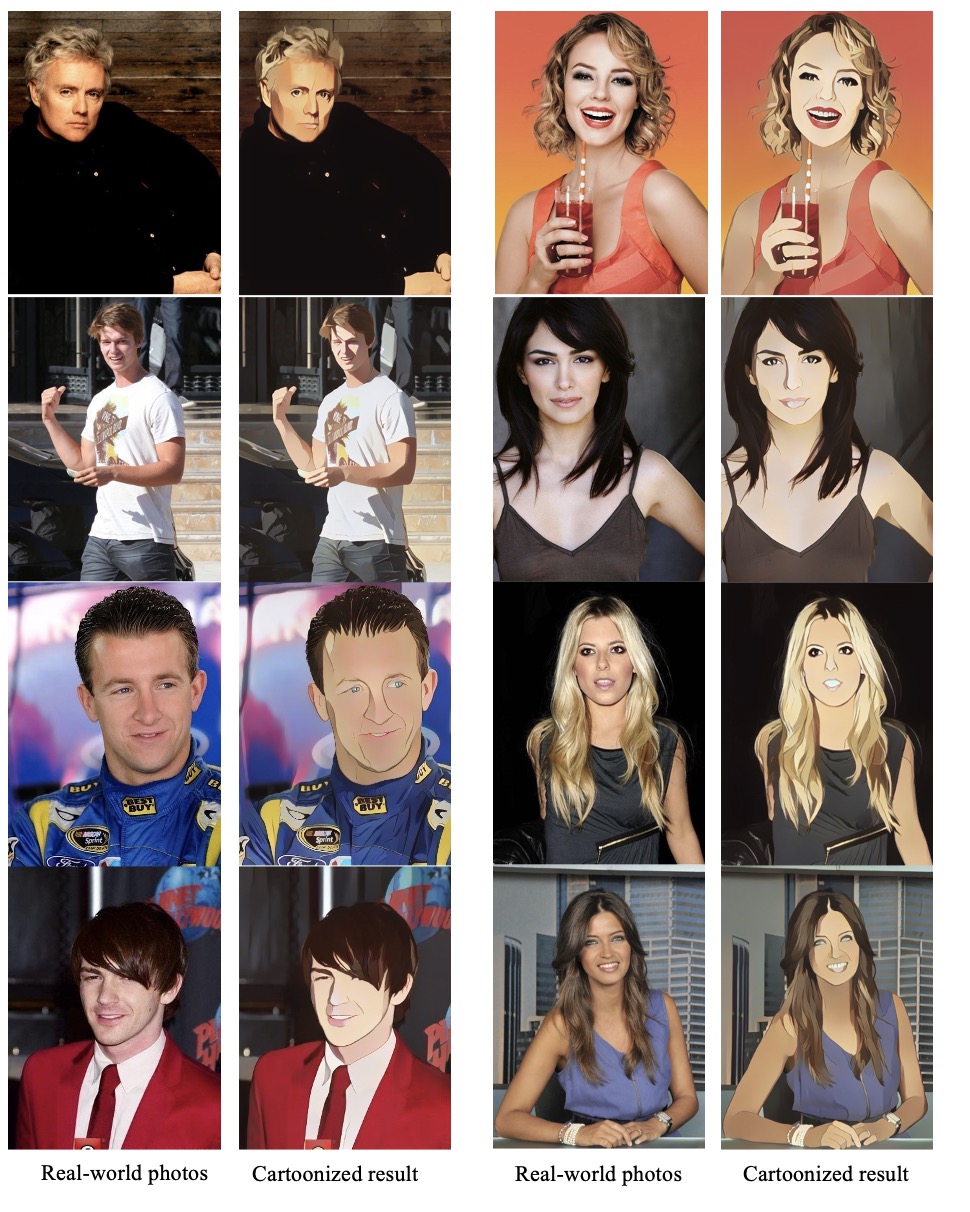

python inference.py --input_path ${your_input_folder} --output_path ${your_output_folder} --model_path pretrained.ckpt

- Translate a video and save the results into the output directory.

python translate_video.py --input_video ${your_input_video} --output_dir ${your_output_folder} --model_path pretrained.ckpt
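The `inference.py` command above processes one input folder per call. If several folders need translating, a small wrapper can build one command per subfolder. This is a sketch under assumptions: the helper names `build_inference_command` and `run_batch` are hypothetical, the one-subfolder-per-clip layout is illustrative, and only the flags shown above are used. Whether `inference.py` creates a missing output folder is not stated here, so the sketch defaults to a dry run that only returns the commands.

```python
import subprocess
import sys
from pathlib import Path


def build_inference_command(input_dir, output_dir, model_path="pretrained.ckpt"):
    """Build the inference.py command line shown above for one folder."""
    return [
        sys.executable, "inference.py",
        "--input_path", str(input_dir),
        "--output_path", str(output_dir),
        "--model_path", str(model_path),
    ]


def run_batch(input_root, output_root, dry_run=True):
    """Build (and optionally run) one inference command per subfolder.

    Assumes input_root contains one subfolder of images per clip; this
    layout is an illustration, not something the repository requires.
    """
    commands = []
    for folder in sorted(Path(input_root).iterdir()):
        if not folder.is_dir():
            continue  # skip stray files next to the image folders
        cmd = build_inference_command(folder, Path(output_root) / folder.name)
        commands.append(cmd)
        if not dry_run:
            # check=True stops the batch at the first failing folder.
            subprocess.run(cmd, check=True)
    return commands
```

Calling `run_batch("inputs", "outputs")` returns the command lists for inspection; passing `dry_run=False` from the repository root executes them in sorted folder order.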

@inproceedings{Liu2022UnsupervisedCV,

title={Unsupervised Coherent Video Cartoonization with Perceptual Motion Consistency},

author={Zhenhuan Liu and Liang Li and Huajie Jiang and Xin Jin and Dandan Tu and Shuhui Wang and Zhengjun Zha},

booktitle={AAAI},

year={2022}

}

- WhiteBoxGAN by @SystemErrorWang

- PyTorch Lightning