
ViewFormer: Exploring Spatiotemporal Modeling for Multi-View 3D Occupancy Perception via View-Guided Transformers, ECCV 2024

- [2024/7/01]: 🚀 ViewFormer is accepted by ECCV 2024.

- [2024/5/15]: 🚀 ViewFormer ranks 1st on the occupancy track of the RoboDrive Challenge!

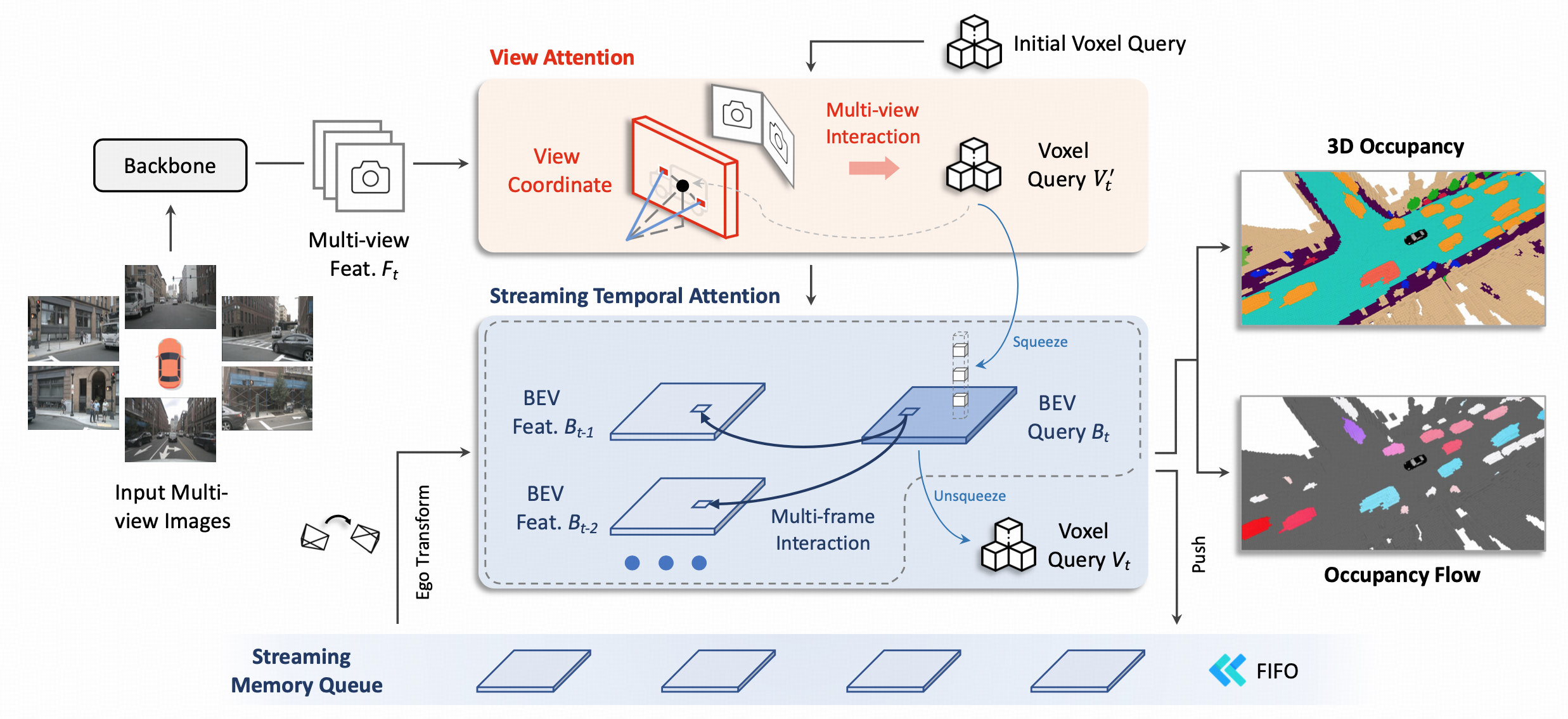

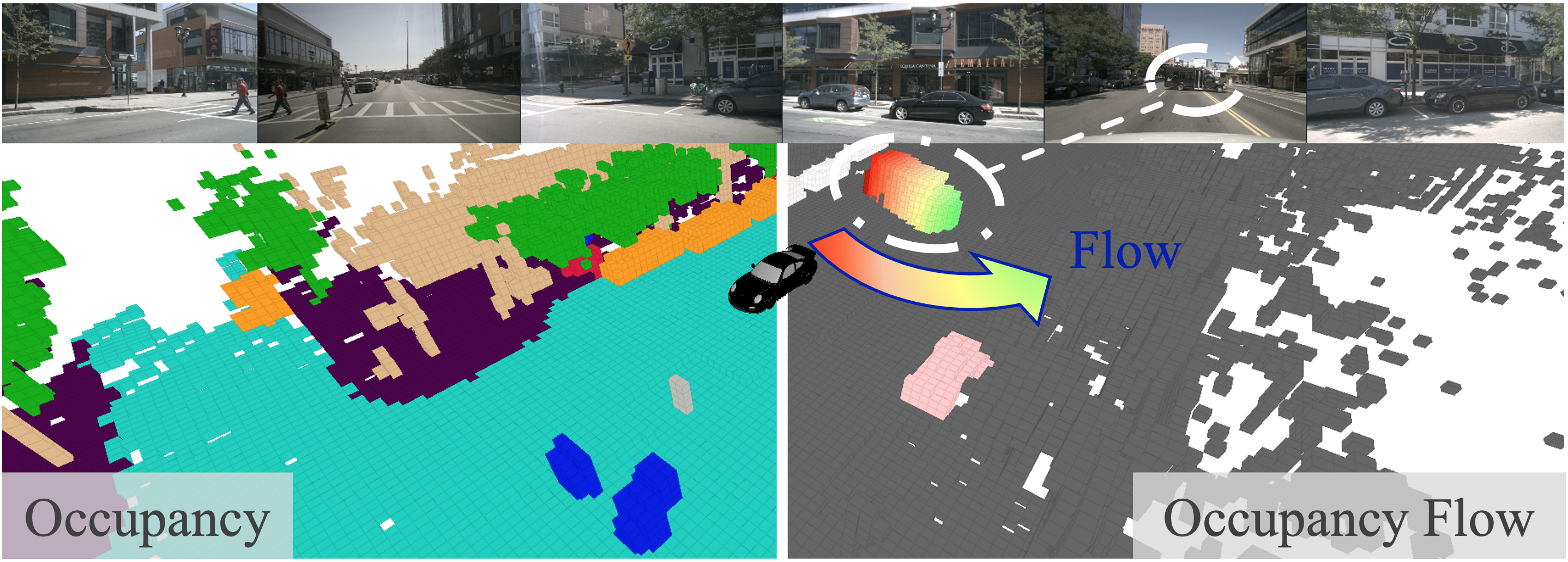

3D occupancy, an advanced perception technology for driving scenarios, represents the entire scene without distinguishing between foreground and background by quantizing the physical space into a grid map. The widely adopted projection-first deformable attention is efficient at transforming image features into 3D representations, but it struggles to aggregate multi-view features because of sensor deployment constraints. To address this issue, we propose a learning-first view attention mechanism for effective multi-view feature aggregation. We further show that our view attention scales to diverse multi-view 3D tasks, including map construction and 3D object detection. Building on the proposed view attention and an additional multi-frame streaming temporal attention, we introduce ViewFormer, a vision-centric, transformer-based framework for spatiotemporal feature aggregation. To further explore occupancy-level flow representation, we present FlowOcc3D, a benchmark built on top of existing high-quality datasets. Qualitative and quantitative analyses on this benchmark reveal the potential of occupancy flow to represent fine-grained dynamic scenes. Extensive experiments show that our approach significantly outperforms prior state-of-the-art methods.
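To make "quantizing the physical space into a grid map" concrete, here is a minimal sketch that maps 3D points (meters, ego frame) to voxel indices and marks the voxels they fall into as occupied. The perception range and 0.4 m voxel size are assumptions modeled on the commonly used Occ3D-nuScenes setup (a 200 x 200 x 16 grid), not values read from this repository's configs, and `point_to_voxel` / `voxelize` are hypothetical helpers for illustration only.

```python
import math
from typing import Iterable, Optional, Set, Tuple

# Assumed grid setup (modeled on Occ3D-nuScenes, NOT taken from this repo's configs):
# x, y in [-40, 40) m, z in [-1, 5.4) m, cubic 0.4 m voxels.
PC_RANGE = (-40.0, -40.0, -1.0, 40.0, 40.0, 5.4)  # (x_min, y_min, z_min, x_max, y_max, z_max)
VOXEL_SIZE = 0.4
GRID_SHAPE = tuple(
    round((PC_RANGE[i + 3] - PC_RANGE[i]) / VOXEL_SIZE) for i in range(3)
)  # -> (200, 200, 16)


def point_to_voxel(x: float, y: float, z: float) -> Optional[Tuple[int, int, int]]:
    """Return the (i, j, k) voxel index of a point, or None if it lies outside the range."""
    idx = []
    for axis, coord in enumerate((x, y, z)):
        lo, hi = PC_RANGE[axis], PC_RANGE[axis + 3]
        if not (lo <= coord < hi):
            return None
        # floor((coord - lo) / size); clamp guards against float round-off at the upper edge
        i = math.floor((coord - lo) / VOXEL_SIZE)
        idx.append(min(i, GRID_SHAPE[axis] - 1))
    return tuple(idx)


def voxelize(points: Iterable[Tuple[float, float, float]]) -> Set[Tuple[int, int, int]]:
    """Mark every voxel containing at least one point as occupied (sparse set of indices)."""
    occupied = set()
    for p in points:
        v = point_to_voxel(*p)
        if v is not None:
            occupied.add(v)
    return occupied
```

For example, `voxelize([(0.2, -39.9, 0.0), (0.25, -39.95, 0.1), (50.0, 0.0, 0.0)])` returns `{(100, 0, 2)}`: the first two points share a voxel and the third lies outside the assumed ±40 m range. A real occupancy label additionally assigns a semantic class to each voxel.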

Please follow our documentation to get started.

Results on Occ3D (based on nuScenes) Val Set.

| Method | Backbone | Pretrain | Lr Schd | mIoU | Config | Download |
|---|---|---|---|---|---|---|
| ViewFormer | R50 | R50-depth | 90ep | 41.85 | config | model |
| ViewFormer | InternT | COCO | 24ep | 43.61 | config | model |

Note:

- FB-OCC trains with the CBGS (class-balanced grouping and sampling) setting, which enlarges each epoch by approximately 4.5 times. Since we do not adopt CBGS, our 90-epoch schedule is roughly equivalent to the 20-epoch schedule in FB-OCC.
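The schedule equivalence in the note is simple arithmetic: if CBGS makes each epoch about 4.5 times longer, then 20 CBGS epochs cover roughly 20 x 4.5 = 90 plain epochs of iterations. The hypothetical helpers below just encode that conversion; the 4.5 factor is the approximate figure from the note, not an exact dataset statistic.

```python
# Approximate factor by which CBGS resampling enlarges one epoch (figure from the note).
CBGS_EPOCH_FACTOR = 4.5


def cbgs_to_plain_epochs(cbgs_epochs: float, factor: float = CBGS_EPOCH_FACTOR) -> float:
    """Number of non-CBGS epochs with roughly the same number of training iterations."""
    return cbgs_epochs * factor


def plain_to_cbgs_epochs(plain_epochs: float, factor: float = CBGS_EPOCH_FACTOR) -> float:
    """Inverse conversion: non-CBGS epochs to an equivalent CBGS schedule."""
    return plain_epochs / factor


print(cbgs_to_plain_epochs(20))  # 90.0
print(plain_to_cbgs_epochs(90))  # 20.0
```

The equivalence is only approximate: CBGS also changes the class distribution each epoch sees, not just the epoch length.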

Results on FlowOcc3D Val Set.

| Method | Backbone | Pretrain | Lr Schd | mIoU | mAVE | Config | Download |
|---|---|---|---|---|---|---|---|
| ViewFormer | InternT | COCO | 24ep | 42.54 | 0.412 | config | model |

Note:

- In our experiments, COCO and ImageNet pre-trained weights perform similarly, with ImageNet weights achieving slightly higher accuracy. We keep the COCO pre-trained weights here to exactly reproduce the accuracy reported in our paper.
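The FlowOcc3D table reports both mIoU and mAVE. As a rough sketch of how such voxel-level metrics are typically computed, the snippet below derives per-class IoU from flattened voxel labels and averages it into mIoU, and computes a mean L2 velocity error over the ground-truth voxels of each class. This is an assumed, simplified definition (no camera visibility mask, no ignore label, mAVE loosely following the nuScenes average velocity error), not the benchmark's official evaluation code; `miou` and `mave` are hypothetical names.

```python
import math
from typing import Sequence, Tuple


def miou(pred: Sequence[int], gt: Sequence[int], num_classes: int) -> float:
    """Mean IoU over classes that appear in pred or gt (labels are flat per-voxel lists)."""
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p, g in zip(pred, gt):
        if p == g:
            tp[g] += 1
        else:
            fp[p] += 1  # voxel wrongly predicted as class p
            fn[g] += 1  # voxel of class g that was missed
    ious = [
        tp[c] / (tp[c] + fp[c] + fn[c])
        for c in range(num_classes)
        if tp[c] + fp[c] + fn[c] > 0
    ]
    return sum(ious) / len(ious) if ious else float("nan")


def mave(
    pred_flow: Sequence[Tuple[float, float]],
    gt_flow: Sequence[Tuple[float, float]],
    gt: Sequence[int],
    classes: Sequence[int],
) -> float:
    """Mean over `classes` of the average L2 error between predicted and GT velocities,
    measured on voxels whose ground-truth label is that class (assumed definition)."""
    per_class = []
    for c in classes:
        errs = [math.dist(pf, gf) for pf, gf, g in zip(pred_flow, gt_flow, gt) if g == c]
        if errs:
            per_class.append(sum(errs) / len(errs))
    return sum(per_class) / len(per_class) if per_class else float("nan")


gt = [0, 0, 1, 1]
pred = [0, 1, 1, 1]
print(miou(pred, gt, num_classes=2))  # (1/2 + 2/3) / 2 = 0.5833...

gt_flow = [(0.0, 0.0), (0.0, 0.0), (3.0, 4.0), (1.0, 0.0)]
pred_flow = [(0.0, 0.0)] * 4
print(mave(pred_flow, gt_flow, gt, classes=[1]))  # (5.0 + 1.0) / 2 = 3.0
```

Restricting `classes` to dynamic categories (as in the usage line) reflects the idea that flow error is mostly informative for moving objects; which classes the official metric averages over is not specified here.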

We are grateful to the authors of the following works and open-source codebases.

- 3D Occupancy: Occ3D, OccNet, FB-OCC.

- 3D Detection: MMDetection3D, DETR3D, PETR, BEVFormer, BEVDepth, SOLOFusion, StreamPETR.

If you are interested in the visualizations in our paper, please also check out our visualization tool, Oviz.

If this work is helpful for your research, please consider citing the following BibTeX entry.

@article{li2024viewformer,
  title={ViewFormer: Exploring Spatiotemporal Modeling for Multi-View 3D Occupancy Perception via View-Guided Transformers},
  author={Jinke Li and Xiao He and Chonghua Zhou and Xiaoqiang Cheng and Yang Wen and Dan Zhang},
  journal={arXiv preprint arXiv:2405.04299},
  year={2024},
}