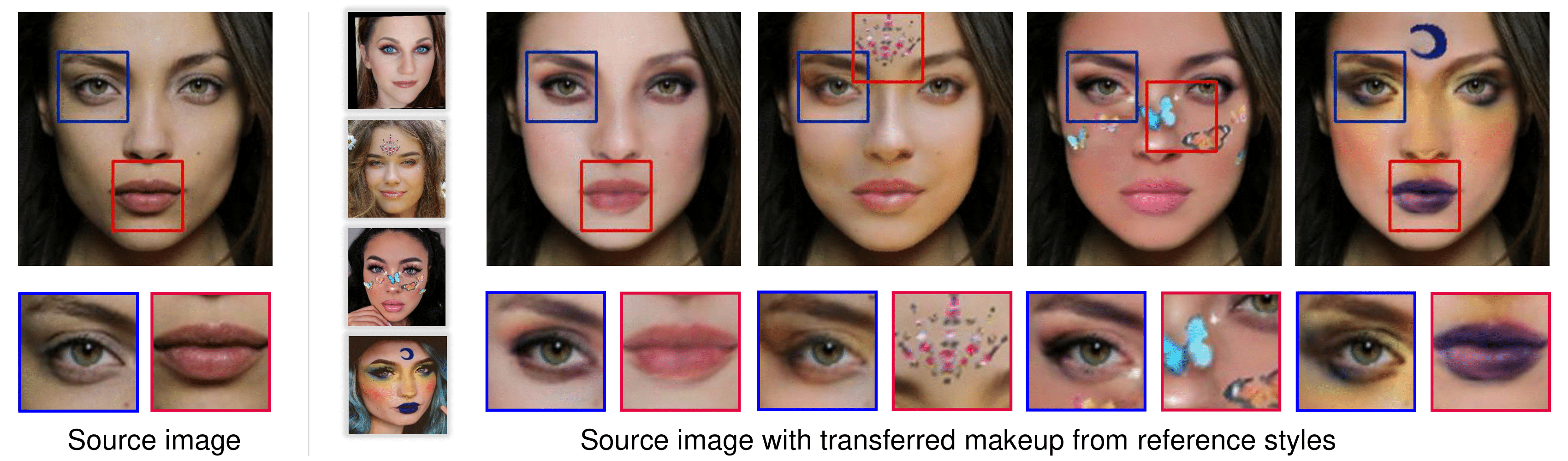

- CPM is a holistic makeup transfer framework that outperforms previous state-of-the-art models on both light and extreme makeup styles.

- CPM consists of an improved color transfer branch (based on BeautyGAN) and a novel pattern transfer branch.

- We also introduce 4 new datasets (both real and synthesis) to train and evaluate CPM.

📢 New: We provide ❝Qualitative Performane Comparisons❞ online! Check it out!

|

|---|

| CPM can replicate both colors and patterns from a reference makeup style to another image. |

Details of the dataset construction, model architecture, and experimental results can be found in our following paper:

@inproceedings{m_Nguyen-etal-CVPR21,

author = {Thao Nguyen and Anh Tran and Minh Hoai},

title = {Lipstick ain't enough: Beyond Color Matching for In-the-Wild Makeup Transfer},

year = {2021},

booktitle = {Proceedings of the {IEEE} Conference on Computer Vision and Pattern Recognition (CVPR)}

}

Please CITE our paper whenever our datasets or model implementation is used to help produce published results or incorporated into other software.

We introduce ✨ 4 new datasets: CPM-Real, CPM-Synt-1, CPM-Synt-2, and Stickers datasets. Besides, we also use published LADN's Dataset & Makeup Transfer Dataset.

CPM-Real and Stickers are crawled from Google Image Search, while CPM-Synt-1 & 2 are built on Makeup Transfer and Stickers. (Click on dataset name to download)

| Name | #imgs | Description | - |

|---|---|---|---|

| CPM-Real | 3895 | real - makeup styles |  |

| CPM-Synt-1 | 5555 | synthesis - makeup images with pattern segmentation mask |  |

| CPM-Synt-2 | 1625 | synthesis - triplets: makeup, non-makeup, ground-truth |  |

| Stickers | 577 | high-quality images with alpha channel |  |

Dataset Folder Structure can be found here.

By downloading these datasets, USER agrees:

- to use these datasets for research or educational purposes only

- to not distribute the datasets or part of the datasets in any original or modified form.

- and to cite our paper whenever these datasets are employed to help produce published results.

- python=3.7

- torch==1.6.0

- tensorflow-gpu==1.14

- segmentation_models_pytorch

# clone the repo

git clone https://github.com/VinAIResearch/CPM.git

cd CPM

# install dependencies

conda env create -f environment.yml- Download CPM’s pre-trained models: color.pth and pattern.pth. Put them in

checkpointsfolder.

mkdir checkpoints

cd checkpoints

wget https://public.vinai.io/CPM_checkpoints/color.pth

wget https://public.vinai.io/CPM_checkpoints/pattern.pth- Download [PRNet pre-trained model] from Drive. Put it in

PRNet/net-data

➡️ You can now try it in Google Colab

# Color+Pattern:

CUDA_VISIBLE_DEVICES=0 python main.py --style ./imgs/style-1.png --input ./imgs/non-makeup.png

# Color Only:

CUDA_VISIBLE_DEVICES=0 python main.py --style ./imgs/style-1.png --input ./imgs/non-makeup.png --color_only

# Pattern Only:

CUDA_VISIBLE_DEVICES=0 python main.py --style ./imgs/style-1.png --input ./imgs/non-makeup.png --pattern_onlyResult image will be saved in result.png

As stated in the paper, the Color Branch and Pattern Branch are totally independent. Yet, they shared the same workflow:

-

Data preparation: Generating texture_map of faces.

-

Training

Please redirect to Color Branch or Pattern Branch for further details.

🌿 If you have trouble running the code, please read Trouble Shooting before creating an issue. Thank you 🌿

-

[Solved]

ImportError: libGL.so.1: cannot open shared object file: No such file or directory:sudo apt update sudo apt install libgl1-mesa-glx -

[Solved]

RuntimeError: Expected tensor for argument #1 'input' to have the same device as tensor for argument #2 'weight'; but device 1 does not equal 0 (while checking arguments for cudnn_convolution)Add CUDA VISIBLE DEVICES before .py. Ex:CUDA_VISIBLE_DEVICES=0 python main.py -

[Solved]

RuntimeError: cuda runtime error (999) : unknown error at /opt/conda/conda-bld/pytorch_1595629403081/work/aten/src/THC/THCGeneral.cpp:47sudo rmmod nvidia_uvm sudo modprobe nvidia_uvm

docker build -t name .