- Project Description 📝

- Project Structure 🏗️

- Necessary Installations 🛠️

- Train Pipeline 🚂

- Continuous Integration Pipeline 🔁

- Alert Reports 📧

- Prediction App 🎯

- Neptune.ai Dashboard 🌊

- Docker Configuration 🐳

- GitHub Actions 🛠️

- Running the Project 🚀

Welcome to the Sales Conversion Optimization Project! 📈 This project focuses on enhancing sales conversion rates through meticulous data handling and efficient model training. The goal is to optimize conversions using a structured pipeline and predictive modeling.

We've structured this project to streamline the process from data ingestion and cleaning to model training and evaluation. With an aim to empower efficient decision-making, our pipelines incorporate quality validation tests, drift analysis, and rigorous model performance evaluations.

This project aims to streamline your sales conversion process, providing insights and predictions to drive impactful business decisions! 📊✨

Let's dive into the project structure! 📁 Here's a breakdown of the directory:

- steps Folder 📂
  - ingest_data
  - clean_data
  - train_model
  - evaluation
  - production_batch_data
  - predict_prod_data

- src Folder 📁
  - clean_data
  - train_models

- pipelines Folder 📂
  - training_pipeline
  - ci_cd_pipeline

- models Folder 📁
  - Saved best H2O.ai model

- reports Folder 📂
  - Evidently AI HTML reports for failed tests

- production data Folder 📁
  - Production batch dataset

- Other Files 📄
  - requirements.txt
  - run_pipeline.py
  - ci-cd.py

This organized structure ensures a clear separation of concerns and smooth pipeline execution. 🚀

To ensure the smooth functioning of this project, several installations are required:

- Clone this repository to your local machine.

  ```bash
  git clone https://github.com/VishalKumar-S/Sales_Conversion_Optimization_MLOps_Project
  cd Sales_Conversion_Optimization_MLOps_Project
  ```

- Install the necessary Python packages.

  ```bash
  pip install -r requirements.txt
  ```

- ZenML Integration

  ```bash
  pip install "zenml[server]"
  zenml init  # initialise the ZenML repository
  zenml up
  ```

- Neptune Integration

  ```bash
  # Register Neptune as the experiment tracker (NEPTUNE_PROJECT and NEPTUNE_API_TOKEN must be set in your shell)
  zenml experiment-tracker register neptune_experiment_tracker --flavor=neptune \
      --project="$NEPTUNE_PROJECT" --api_token="$NEPTUNE_API_TOKEN"
  # Register a stack that uses the tracker and make it the active stack
  zenml stack register neptune_stack \
      -a default \
      -o default \
      -e neptune_experiment_tracker \
      --set
  ```

Make sure to install these dependencies to execute the project functionalities seamlessly! 🌟

In this pipeline, we embark on a journey through various steps to train our models! 🛤️ Here's the process breakdown:

- run_pipeline.py: Initiates the training pipeline.

- steps/ingest_data: Ingests the raw data and passes it to the data_validation step.

- data_validation step: Runs data quality validation tests and transforms values.

- steps/clean_data: Applies the data preprocessing logic.

- data_Drift_validation step: Runs data drift tests.

- steps/train_model.py: Uses H2O.ai AutoML for model selection.

- src/train_models.py: Trains the best model found by AutoML on the cleaned dataset.

- model_performance_Evaluation.py: Evaluates model performance on a held-out split of the dataset.

- steps/alert_report.py: If any validation test suite does not meet its threshold condition, an email is sent to the user with the failed Evidently AI HTML reports attached.

Each step is crucial in refining and validating our model. All aboard the train pipeline! 🌟🚆
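The gating behaviour described above (run the validation suites, and continue only when they pass) can be sketched in plain Python. This is not the project's ZenML pipeline: `ValidationResult`, `run_gated_steps`, the `send_alert` callback and the 0.9 pass-ratio threshold are hypothetical names and values chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ValidationResult:
    """Outcome of one validation suite (hypothetical structure)."""
    suite: str
    passed: int
    failed: int

    @property
    def total(self) -> int:
        return self.passed + self.failed

    def meets(self, threshold: float) -> bool:
        # A suite passes when the share of passed tests reaches the threshold.
        return self.total > 0 and self.passed / self.total >= threshold


def run_gated_steps(
    suites: List[Callable[[], ValidationResult]],
    train: Callable[[], str],
    send_alert: Callable[[ValidationResult], None],
    threshold: float = 0.9,  # hypothetical pass-ratio threshold
) -> str:
    """Run validation suites in order; alert and stop at the first failing suite."""
    for run_suite in suites:
        result = run_suite()
        if not result.meets(threshold):
            send_alert(result)
            return f"stopped at {result.suite}"
    # Only reached when every suite met the threshold.
    return train()


if __name__ == "__main__":
    alerts: List[ValidationResult] = []
    status = run_gated_steps(
        suites=[
            # Made-up counts for illustration only.
            lambda: ValidationResult("data_quality", passed=66, failed=1),
            lambda: ValidationResult("data_drift", passed=5, failed=5),
        ],
        train=lambda: "model trained",
        send_alert=alerts.append,
    )
    print(status)  # stopped at data_drift
```

Passing the suites as callables keeps the sketch lazy: a later suite only runs once every earlier one has met the threshold, mirroring how a failed check stops the rest of the pipeline.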

The continuous integration pipeline focuses on the production environment and streamlined processes for deployment. 🔄

Here's how it flows:

- ci-cd.py: Triggers the CI/CD pipeline.

- steps/production_batch_data: Loads the production batch data from the Production_data folder.

- pipelines/ci_cd_pipeline.py: Runs the same data quality and data drift tests as the training pipeline; if a threshold is not met, email reports are sent.

- steps/predict_production_Data.py: Uses the pre-trained best model to make predictions on the new production data, then runs the same model performance validation as the training pipeline; if the threshold is not met, email reports are sent.

This pipeline is crucial for maintaining a continuous and reliable deployment process. 🔁✨
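A data drift test compares a feature's distribution in the reference (training) data with the incoming production batch. Tests of this kind often use the two-sample Kolmogorov-Smirnov statistic; whether the project's Evidently AI suite applies it to a given column depends on its configuration, so treat this stdlib sketch, its `ks_statistic` and `drifted` helpers, and the 0.2 cut-off on the statistic (a simplification of a p-value test) as illustrative assumptions.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical CDFs."""
    ref = sorted(reference)
    cur = sorted(current)
    if not ref or not cur:
        raise ValueError("both samples must be non-empty")
    d = 0.0
    # Both empirical CDFs only change at observed values, so checking those points suffices.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        d = max(d, abs(cdf_ref - cdf_cur))
    return d


def drifted(reference: Sequence[float], current: Sequence[float], cutoff: float = 0.2) -> bool:
    """Flag drift when the KS statistic exceeds a hypothetical cut-off."""
    return ks_statistic(reference, current) > cutoff
```

With the default cut-off, `drifted([1, 2, 3], [10, 11, 12])` returns `True`, because the two samples do not overlap at all and the statistic is 1.0.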

In our project, email reports are a vital part of the pipeline to notify users when certain tests fail. These reports are triggered by specific conditions during the pipeline execution. Here's how it works:

When a data quality, data drift, or model performance validation test suite fails, an email is generated containing:

- Number of total tests performed.

- Number of passed and failed tests.

- Failed test reports attached in HTML format.

This email functionality is implemented in steps/alert_report.py and called from the pipeline steps. If a test suite does not meet its threshold, execution pauses and an email is sent; when the tests pass, the pipeline proceeds to the next step.

This notification system helps ensure the integrity and reliability of the data processing and model performance at each stage of the pipeline.
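The email contents listed above (total tests, passed and failed counts, failed reports attached as HTML) can be assembled with Python's standard `email` package. This is a minimal sketch, not the contents of steps/alert_report.py: the `build_alert_email` helper, the addresses and the report file names are hypothetical, and sending (for example with `smtplib.SMTP.send_message`) is left out because it needs SMTP credentials.

```python
from email.message import EmailMessage
from typing import Dict


def build_alert_email(
    suite: str,
    passed: int,
    failed: int,
    failed_reports: Dict[str, str],  # hypothetical: file name -> HTML report content
    sender: str,
    recipient: str,
) -> EmailMessage:
    """Compose an alert email summarising a failed validation suite."""
    total = passed + failed
    msg = EmailMessage()
    msg["Subject"] = f"[ALERT] {suite}: {failed} of {total} tests failed"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(
        f"Validation suite: {suite}\n"
        f"Total tests: {total}\n"
        f"Passed: {passed}\n"
        f"Failed: {failed}\n"
        "The failed test reports are attached as HTML files.\n"
    )
    for filename, html in failed_reports.items():
        # For str content, add_attachment creates a text/html part marked as an attachment.
        msg.add_attachment(html, subtype="html", filename=filename)
    return msg
```

Keeping composition separate from sending makes the message easy to inspect in tests and lets the same summary be reused for other channels.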

Failed test alerts are also posted to Discord and Slack:

- Discord: #failed-alerts

- Slack: #sales-conversion-test-failures
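Channel alerts like these are typically posted through incoming webhooks: a Discord webhook accepts a JSON body with a `content` field, and a Slack incoming webhook accepts one with a `text` field. The stdlib sketch below only builds the requests; the webhook URLs are placeholders and the `build_webhook_request` helper is hypothetical, not the project's code.

```python
import json
import urllib.request

# Hypothetical placeholder URLs; real ones are generated in each platform's settings.
DISCORD_WEBHOOK_URL = "https://discord.com/api/webhooks/<id>/<token>"
SLACK_WEBHOOK_URL = "https://hooks.slack.com/services/<T>/<B>/<X>"


def build_webhook_request(platform: str, url: str, message: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a Discord or Slack webhook."""
    key = {"discord": "content", "slack": "text"}[platform]
    body = json.dumps({key: message}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


summary = "Data drift suite failed; see the emailed HTML report for details."
requests_to_send = [
    build_webhook_request("discord", DISCORD_WEBHOOK_URL, summary),
    build_webhook_request("slack", SLACK_WEBHOOK_URL, summary),
]
# To actually post, call urllib.request.urlopen(req, timeout=10) for each request.
```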

The Prediction App is the user-facing interface that leverages the trained models to make predictions based on user input. 🎯

To run the Streamlit application:

```bash
streamlit run app.py
```

- 🌐 Streamlit Application: User-friendly interface for predictions and monitoring.

- 🚀 Prediction App: Input parameters for prediction with a link to Neptune.ai for detailed metrics.

- 📊 Interpretability Section: Explore detailed interpretability plots, including SHAP global and local plots.

- 📈 Data and Model Reports: View reports on data quality, data drift, target drift, and model performance.

- 🛠️ Test Your Batch Data Section: Evaluate batch data quality with 67 validation tests, receive alerts on failures.

This app streamlines the process of making predictions, interpreting model outputs, monitoring data, and validating batch data.

- Fields: Impressions, Clicks, Spent, Total_Conversion, CPC.

- Predict button generates approved conversion predictions.

- 🔗 Neptune.ai Metrics: Link to the detailed model metrics on Neptune.ai.

- 📝 Detailed Interpretability Report: View global interpretability metrics.

- 🌐 SHAP Global Plot: Explore SHAP values at a global level.

- 🌍 SHAP Local Plot: Visualize SHAP values for user-input data.

- 📉 Data Quality Report: Assess data quality between reference and current data.

- 📊 Data Drift Report: Identify drift in data distribution.

- 📈 Target Drift Report: Monitor changes in target variable distribution.

- 📉 Model Performance Report: Evaluate the model's performance.

- Check options to generate specific reports.

- Click 'Submit' to view generated reports.

- 📂 Dataset Upload: Upload your batch dataset for validation.

- 📧 Email Alerts: Provide an email for failure alerts.

- 🔄 Data Validation Progress: 67 tests to ensure data quality.

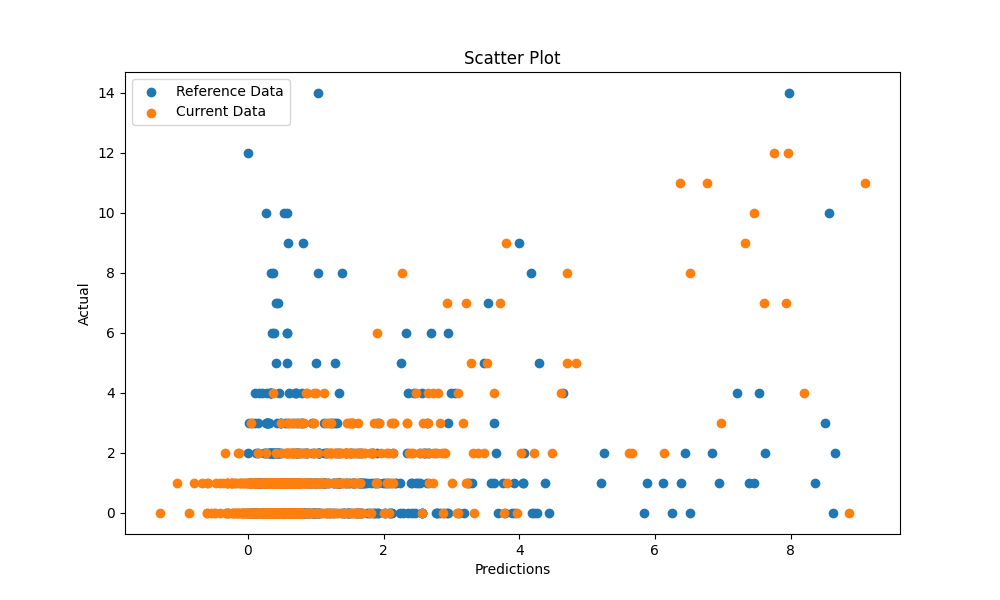

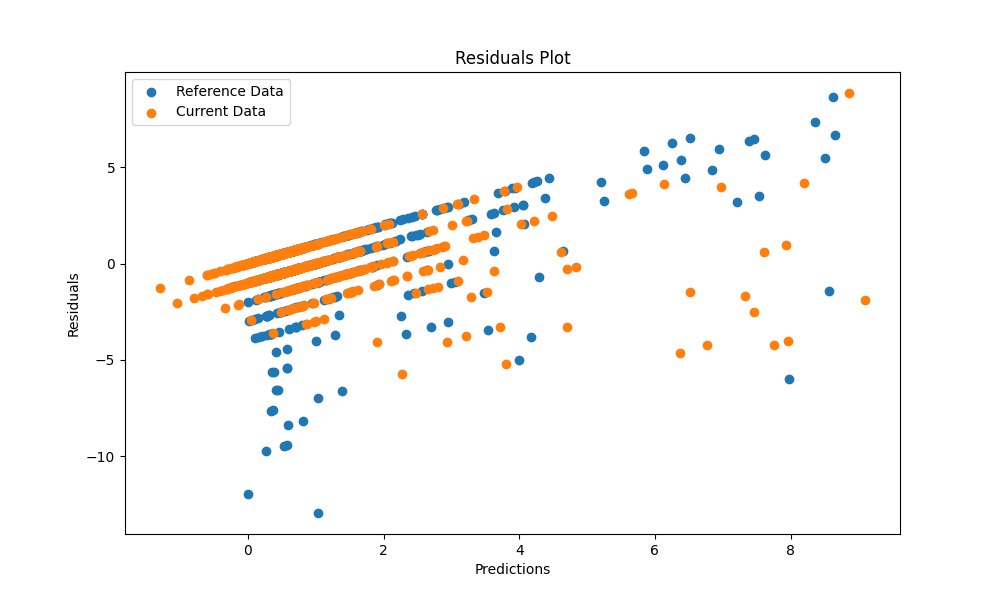

- 📊 Visualizations: Scatter plot and residuals plot for validation results.

Successful tests validation:

For more details, check the respective sections in the Streamlit app.
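Column-level checks of the kind the Test Your Batch Data section performs can be illustrated in plain Python. This is only a sketch: the app runs 67 Evidently AI tests, whereas the three checks here (column present, no missing values, no negative values), the `validate_batch` helper, and the assumption that batch rows carry the prediction form's five input fields are all hypothetical.

```python
from typing import Dict, List, Optional

# Assumed column names, taken from the prediction form's input fields.
REQUIRED_COLUMNS = ["Impressions", "Clicks", "Spent", "Total_Conversion", "CPC"]

Row = Dict[str, Optional[float]]


def validate_batch(rows: List[Row]) -> Dict[str, object]:
    """Run three simple tests per required column and summarise the outcome."""
    results: Dict[str, bool] = {}
    for col in REQUIRED_COLUMNS:
        present = all(col in row for row in rows)
        values = [row.get(col) for row in rows]
        # Later checks only pass if the earlier ones did.
        no_missing = present and all(v is not None for v in values)
        non_negative = no_missing and all(v >= 0 for v in values)
        results[f"{col}: column present"] = present
        results[f"{col}: no missing values"] = no_missing
        results[f"{col}: no negative values"] = non_negative
    failed = [name for name, ok in results.items() if not ok]
    return {"total": len(results), "passed": len(results) - len(failed), "failed": failed}
```

The returned summary has the same shape as the alert email (total, passed, failed), so a failing batch could feed directly into the notification step.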

This application provides an intuitive interface for users to make predictions and monitor their data effortlessly. 📊✨ Explore the power of data-driven insights with ease and confidence! 🚀🔍

Neptune.ai offers an intuitive dashboard for comprehensive tracking and management of experiments, model metrics, and pipeline performance. Let's dive into its features:

- Visual Metrics: Visualize model performance metrics with interactive charts and graphs for seamless analysis. 📈📊

- Experiment Management: Track experiments, parameters, and results in a structured and organized manner. 🧪📋

- Integration Capabilities: Easily integrate Neptune.ai with pipeline steps for automated tracking and reporting. 🤝🔗

- Collaboration Tools: Facilitate teamwork with collaborative features and easy sharing of experiment results. 🤝💬

- Code and Environment Tracking: Monitor code versions and track environments used during experimentation for reproducibility. 🛠️📦

Necessary Commands:

- Necessary imports:

  ```python
  import neptune
  from neptune.types import File
  from zenml.integrations.neptune.experiment_trackers.run_state import get_neptune_run
  ```

- Initiate the Neptune run:

  ```python
  # Call this inside a ZenML step running on a stack that uses the Neptune experiment tracker
  neptune_run = get_neptune_run()
  ```

- Track a pandas DataFrame:

  ```python
  neptune_run["data/Training_data"].upload(File.as_html(df))
  ```

- Track HTML reports:

  ```python
  neptune_run["html/Data Quality Test"].upload("Evidently_Reports/data_quality_suite.html")
  ```

- Track plot and graph visualisations:

  ```python
  neptune_run["visuals/scatter_plot"].upload(File.as_html(fig1))
  ```

- Track model metrics:

  ```python
  # Assumption: `perf` is the H2O performance object for the model on the test split,
  # and `model` is a Neptune handle (for example neptune_run["model"]) grouping the metrics.
  model["r2"].log(perf.r2())
  model["mse"].log(perf.mse())
  model["rmse"].log(perf.rmse())
  model["rmsle"].log(perf.rmsle())
  model["mae"].log(perf.mae())
  ```
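The five metrics logged above have standard definitions. The stdlib sketch below computes them from paired actual and predicted values so their meaning is explicit; `regression_metrics` is a hypothetical helper and not H2O's implementation. RMSLE is only defined when every value is greater than -1, so the sketch raises an error otherwise.

```python
import math
from typing import Dict, Sequence


def regression_metrics(actual: Sequence[float], predicted: Sequence[float]) -> Dict[str, float]:
    """Compute R², MSE, RMSE, RMSLE and MAE for paired actual/predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if min(actual) <= -1 or min(predicted) <= -1:
        raise ValueError("RMSLE requires all values to be greater than -1")
    n = len(actual)
    errors = [y - p for y, p in zip(actual, predicted)]
    ss_res = sum(e * e for e in errors)
    mse = ss_res / n
    mae = sum(abs(e) for e in errors) / n
    mean_y = sum(actual) / n
    ss_tot = sum((y - mean_y) ** 2 for y in actual)
    # R² = 1 - SS_res / SS_tot; undefined when the actual values are constant.
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    # RMSLE compares log(1 + value), so it penalises relative rather than absolute errors.
    rmsle = math.sqrt(
        sum((math.log1p(p) - math.log1p(y)) ** 2 for y, p in zip(actual, predicted)) / n
    )
    return {"r2": r2, "mse": mse, "rmse": math.sqrt(mse), "rmsle": rmsle, "mae": mae}
```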

The Neptune.ai Dashboard includes views of the code files, datasets, visualisations, HTML reports, and model metrics.

Access my Neptune.ai Dashboard here

Neptune.ai enhances the project by providing a centralized platform for managing experiments and gaining deep insights into model performance, contributing to informed decision-making. 📊✨

Docker is an essential tool for packaging and distributing applications. Here's how to set up and use Docker for this project:

Running the Docker Container: Follow these steps to build the Docker image and run a container:

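```bash
# Build the image from the Dockerfile in the repository root
docker build -t my-streamlit-app .

# Run the container, mapping Streamlit's default port 8501 to the host
docker run -p 8501:8501 my-streamlit-app
```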

Best Practices: When deploying, also consider data volume management, security, and image size optimization.

- Configured CI/CD workflow for automated execution

- 🎯 Predictions Scatter Plot: Visualizes model predictions against actual conversions.

- 📈 Residuals Plot: Illustrates the differences between predicted and actual values.

Integrated into CI/CD pipeline:

- Automatic generation on every push event.

- Visual insights available directly in the repository.
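The data behind the two plots can be prepared as below: the scatter plot pairs each actual value with its prediction, and the residuals plot pairs each prediction with its residual (actual minus predicted). This stdlib sketch and its `plot_points` helper are hypothetical; the project's plotting code is not shown in this README.

```python
from typing import Dict, List, Sequence, Tuple


def plot_points(
    actual: Sequence[float], predicted: Sequence[float]
) -> Dict[str, List[Tuple[float, float]]]:
    """Return (x, y) pairs for a predictions scatter plot and a residuals plot."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    # Scatter plot: actual on x, predicted on y (perfect predictions lie on y = x).
    scatter = [(a, p) for a, p in zip(actual, predicted)]
    # Residuals plot: predicted on x, residual (actual - predicted) on y.
    residuals = [(p, a - p) for a, p in zip(actual, predicted)]
    return {"scatter": scatter, "residuals": residuals}
```

Residuals scattered evenly around zero suggest no systematic bias; a visible trend in the residuals plot points to under- or over-prediction in some range of conversions.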

🌟 These reports enhance transparency and provide crucial insights into model performance! 🌟

Follow these steps to run different components of the project:

- Training Pipeline: To initiate the training pipeline, run

  ```bash
  python run_pipeline.py
  ```

- Continuous Integration Pipeline: To execute the CI/CD pipeline for continuous integration, run

  ```bash
  python run_ci_cd.py
  ```

- Streamlit Application: To start the Streamlit app and access the prediction interface, run

  ```bash
  streamlit run app.py
  ```

Each command triggers a specific part of the project. Ensure dependencies are installed before executing these commands. Happy running! 🏃♂️✨