This repo contains the official PyTorch code and pre-trained models for Agent Attention.

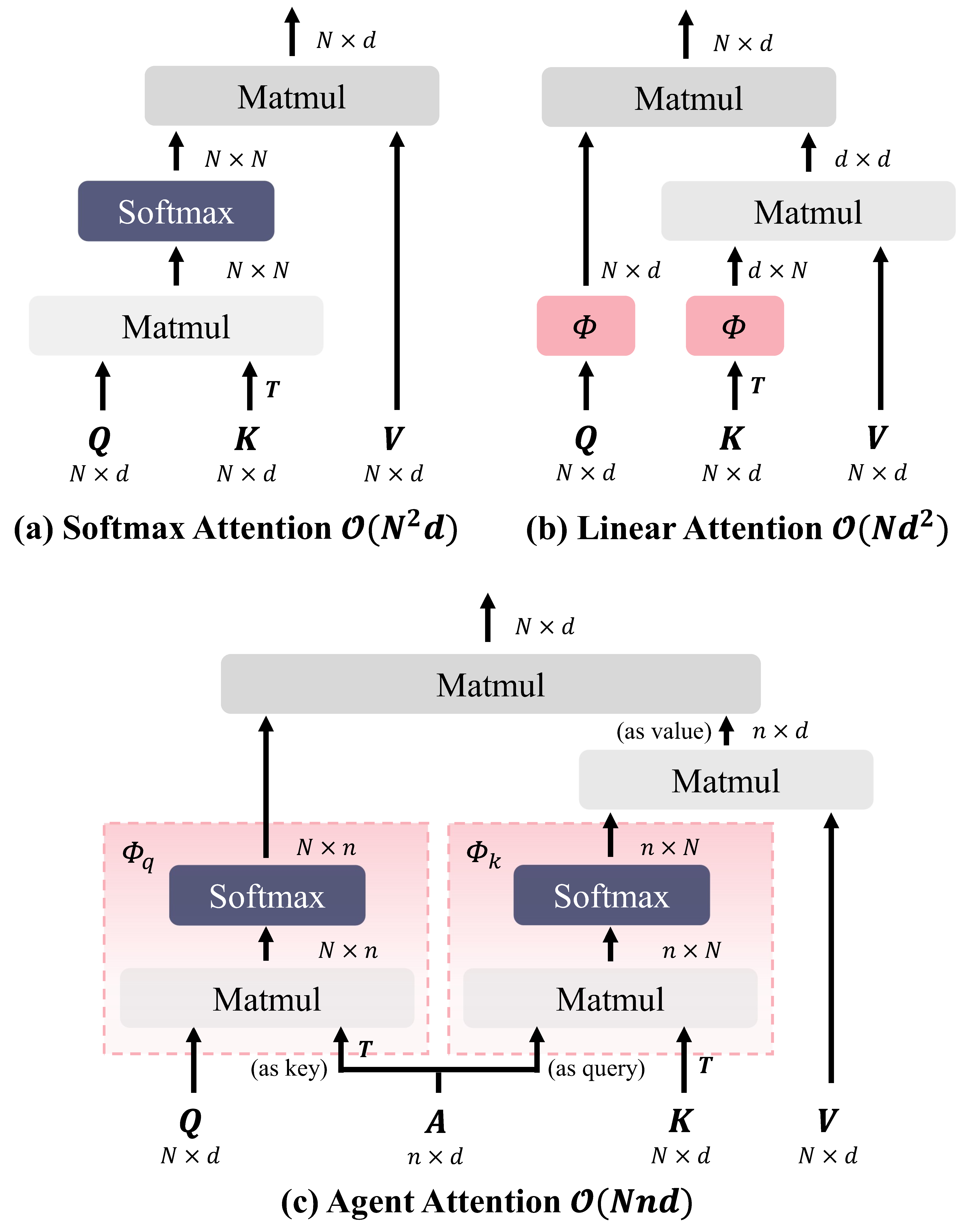

The attention module is the key component in Transformers. While the global attention mechanism offers robust expressiveness, its excessive computational cost constrains its applicability in various scenarios. In this paper, we propose a novel attention paradigm, Agent Attention, to strike a favorable balance between computational efficiency and representation power. Specifically, the Agent Attention, denoted as a quadruple

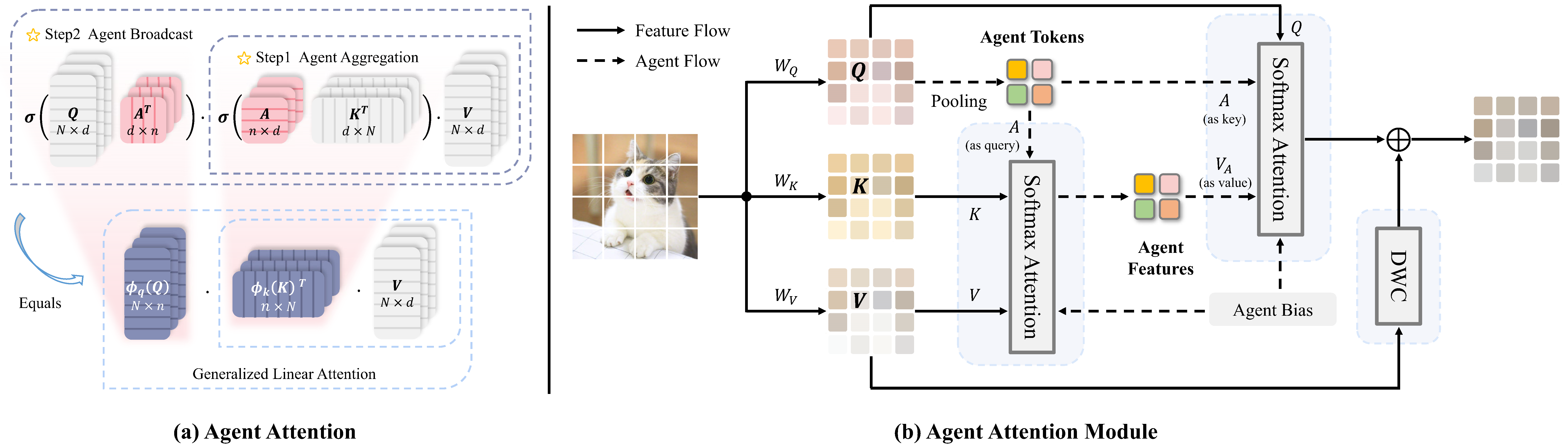

An illustration of our agent attention and agent attention module. (a) Agent attention uses agent tokens to aggregate global information and distribute it to individual image tokens, resulting in a practical integration of Softmax and linear attention.
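The two steps in the caption (agent tokens aggregate global information, then distribute it to the image tokens) can be illustrated with a minimal single-head sketch. It is not the module shipped in this repo: the function names `softmax_attention` and `agent_attention`, the `num_agents` parameter, and the choice to obtain agent tokens by average-pooling the queries are assumptions made for illustration, and any other components of the full module are omitted.

```python
import torch
import torch.nn.functional as F


def softmax_attention(q, k, v):
    """Scaled dot-product attention: softmax(q k^T / sqrt(d)) v."""
    scale = q.shape[-1] ** -0.5
    weights = torch.softmax(q @ k.transpose(-2, -1) * scale, dim=-1)
    return weights @ v


def agent_attention(q, k, v, num_agents=4):
    """Illustrative single-head agent attention.

    q, k, v: tensors of shape (batch, tokens, dim).
    Assumption: agent tokens are obtained by average-pooling the queries
    along the token axis down to `num_agents` tokens.
    """
    # (batch, tokens, dim) -> (batch, dim, tokens) so pooling runs over tokens
    agents = F.adaptive_avg_pool1d(q.transpose(1, 2), num_agents).transpose(1, 2)

    # Step 1 (aggregation): agents act as queries and gather global
    # information from all keys and values. Cost scales with tokens * num_agents.
    agent_values = softmax_attention(agents, k, v)  # (batch, num_agents, dim)

    # Step 2 (broadcast): the original queries attend to the agents, which
    # now act as keys, and read back the aggregated values.
    return softmax_attention(q, agents, agent_values)  # (batch, tokens, dim)


if __name__ == "__main__":
    torch.manual_seed(0)
    q, k, v = (torch.randn(2, 16, 8) for _ in range(3))
    out = agent_attention(q, k, v, num_agents=4)
    print(out.shape)
```

Because both steps use attention maps of size tokens × `num_agents` rather than tokens × tokens, the cost grows linearly with the number of tokens when `num_agents` is held fixed, while each step still applies a Softmax.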

See the agent_transformer folder for detailed documentation.

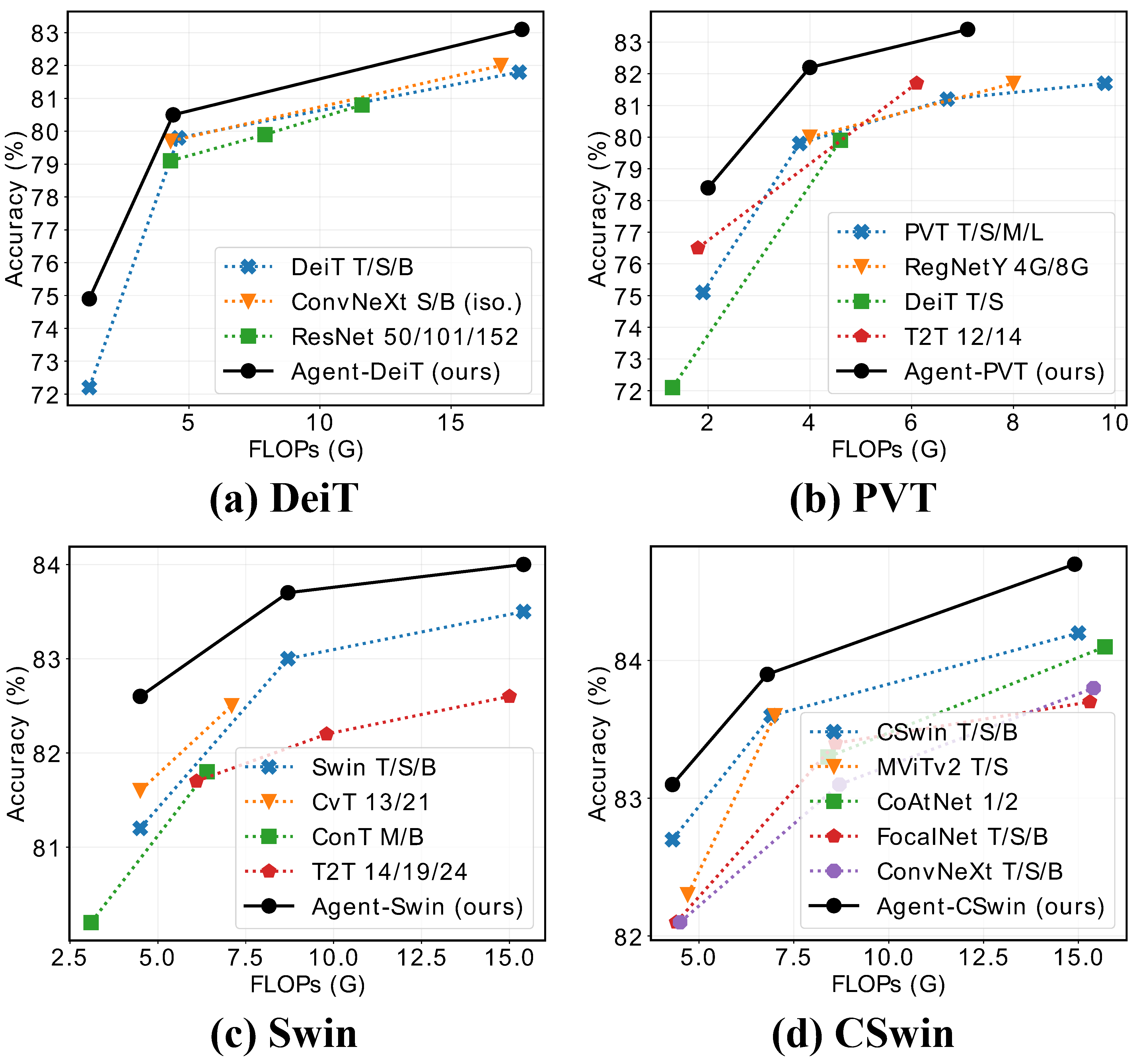

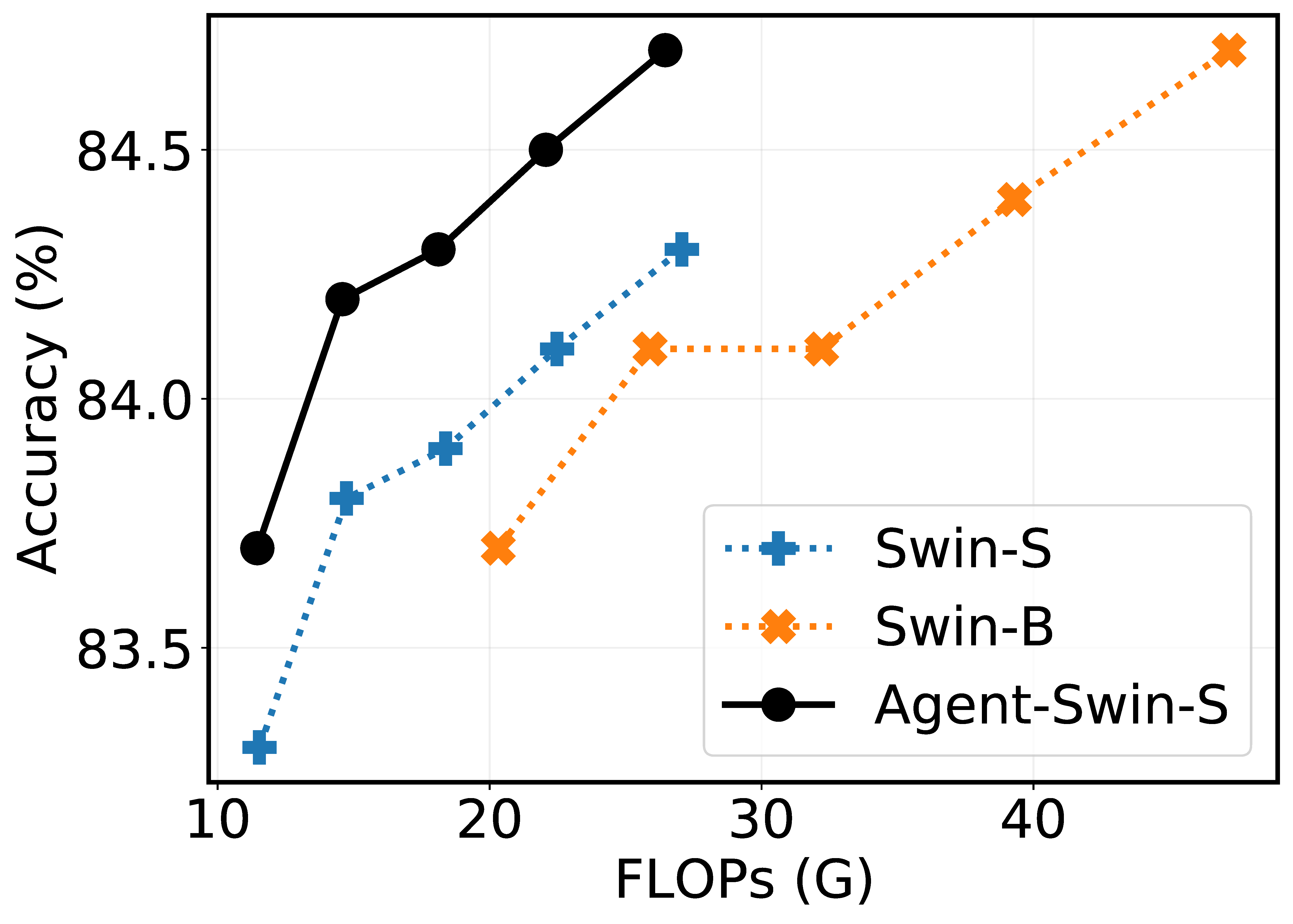

- Comparison of different models on ImageNet-1K.

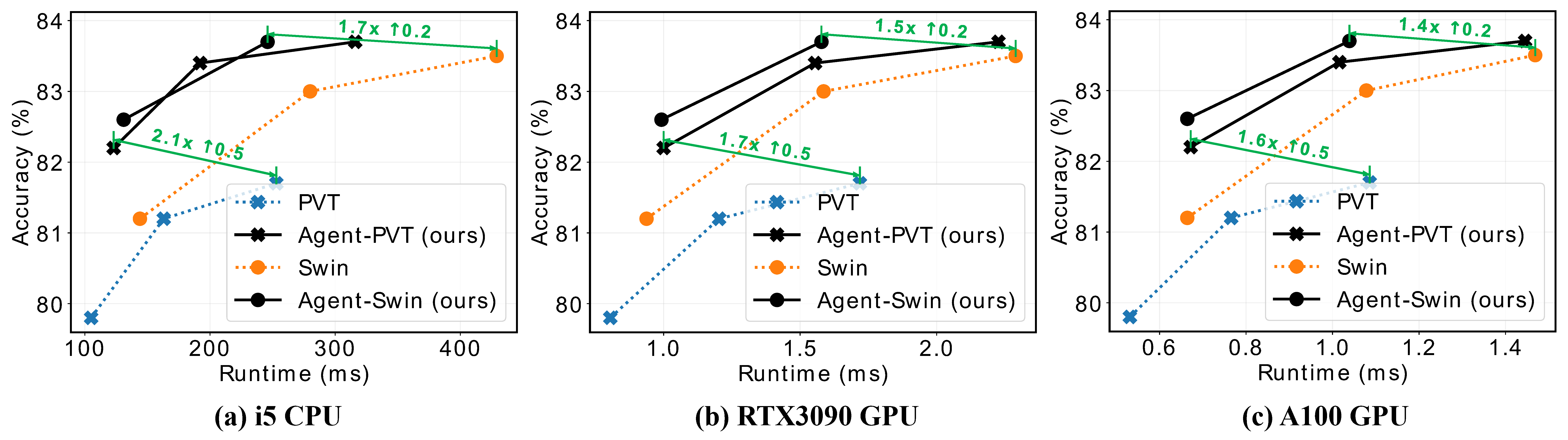

- Accuracy-Runtime curve on ImageNet.

- Increasing resolution to $\{256^2, 288^2, 320^2, 352^2, 384^2\}$.

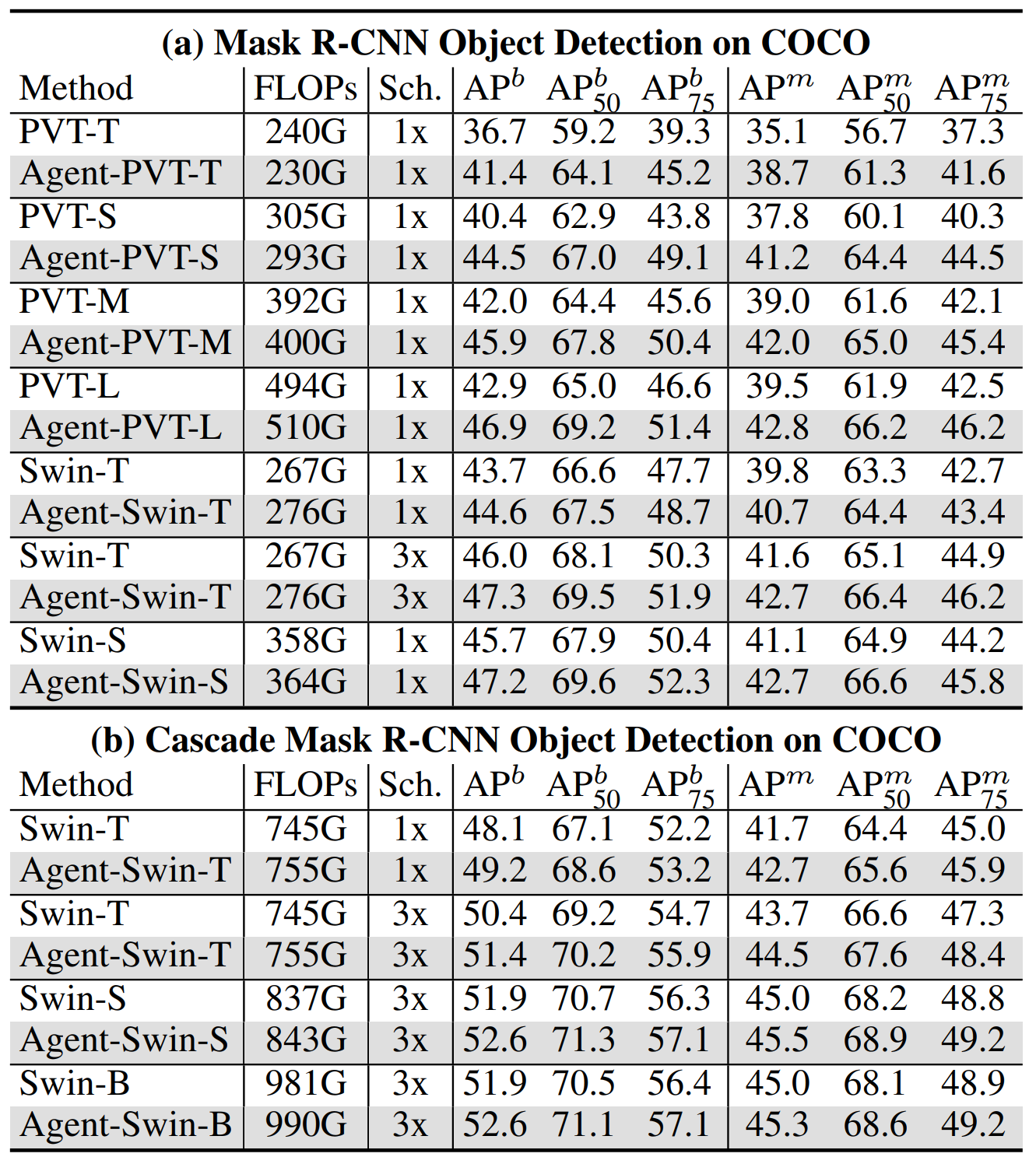

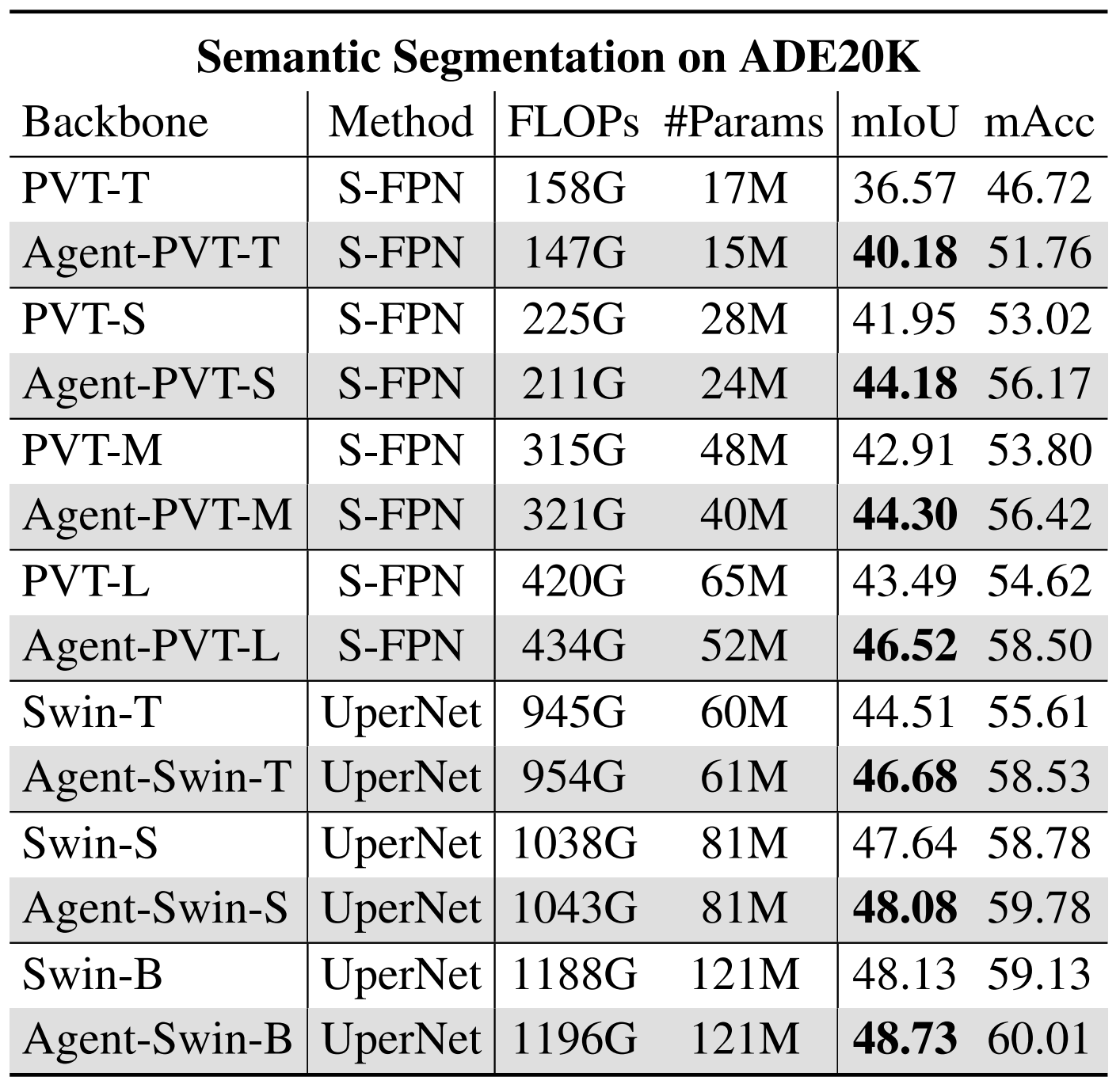

See the detection and segmentation folders for detailed documentation.

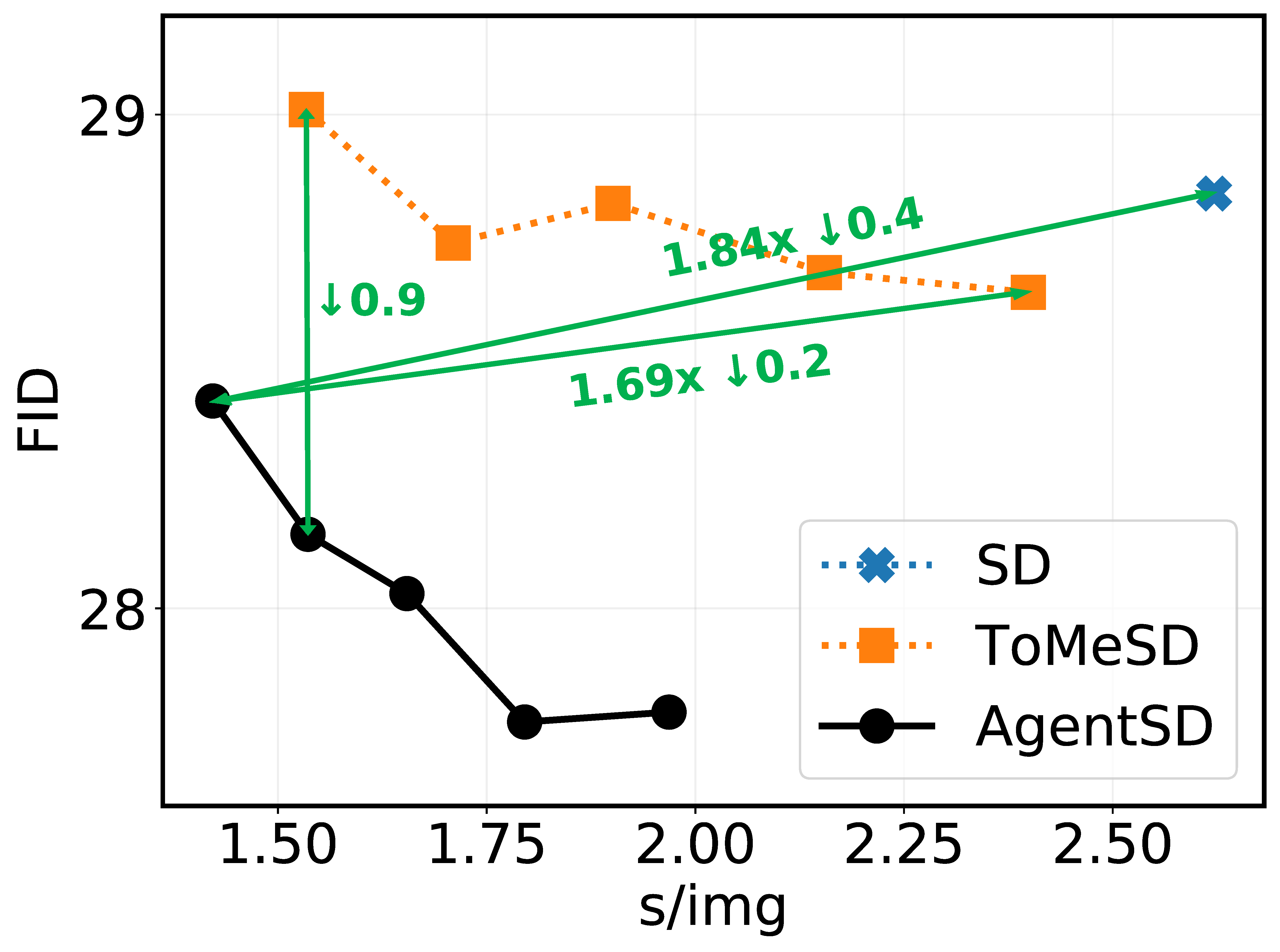

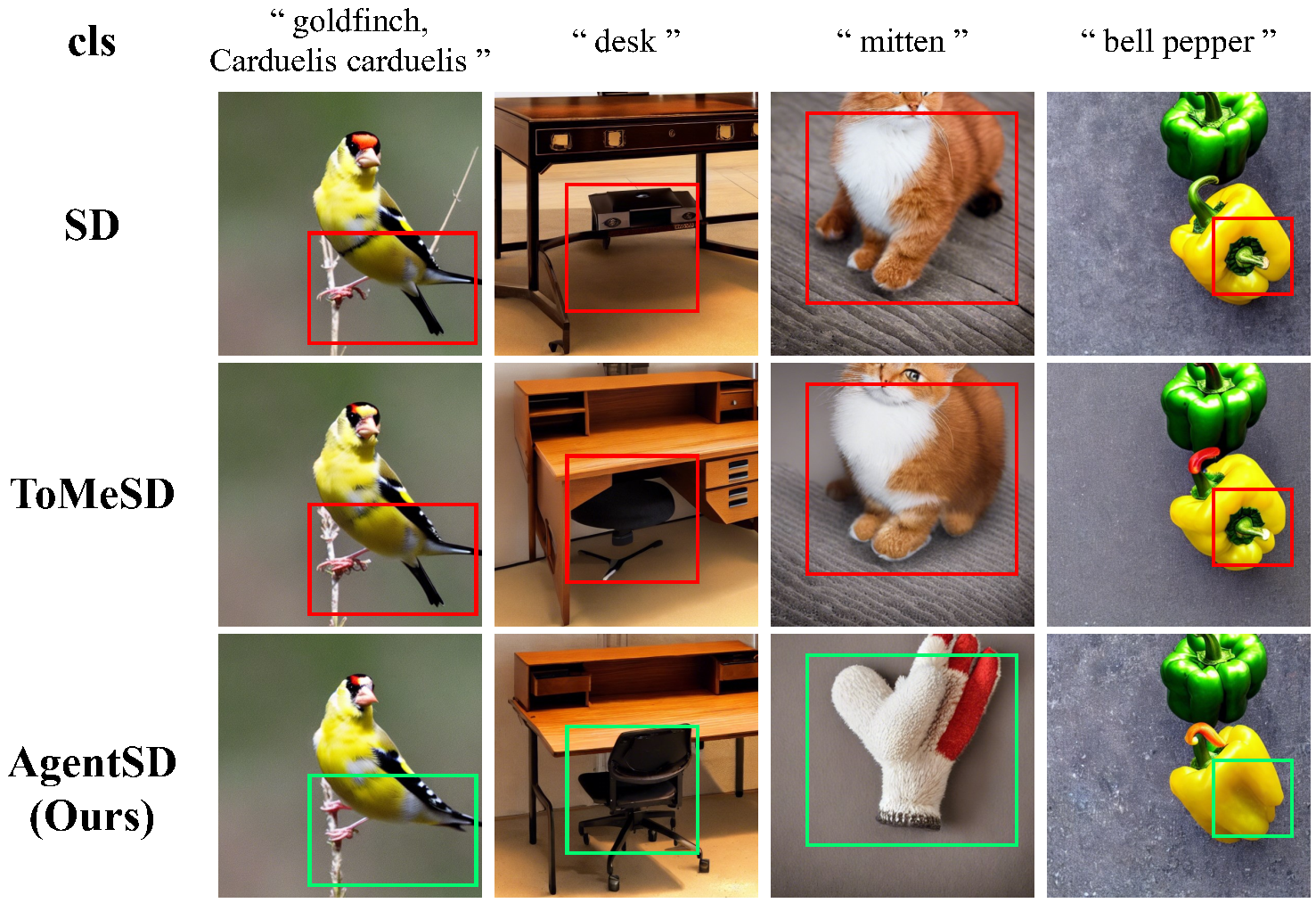

When applied to Stable Diffusion, our agent attention accelerates generation and substantially enhances image generation quality without any additional training. See the agentsd folder for detailed documentation.

- Quantitative Results of Stable Diffusion, ToMeSD and our AgentSD.

- Samples generated by Stable Diffusion, ToMeSD ($r=0.4$) and AgentSD ($r=0.4$).

- Classification

- Segmentation

- Detection

- Agent Attention for Stable Diffusion

Our code is developed on top of PVT, Swin Transformer, CSwin Transformer and ToMeSD.

If you find this repo helpful, please consider citing us.

@article{han2023agent,
  title={Agent Attention: On the Integration of Softmax and Linear Attention},
  author={Han, Dongchen and Ye, Tianzhu and Han, Yizeng and Xia, Zhuofan and Song, Shiji and Huang, Gao},
  journal={arXiv preprint arXiv:2312.08874},
  year={2023}
}

If you have any questions, please feel free to contact the authors.

Dongchen Han: hdc23@mails.tsinghua.edu.cn

Tianzhu Ye: ytz20@mails.tsinghua.edu.cn