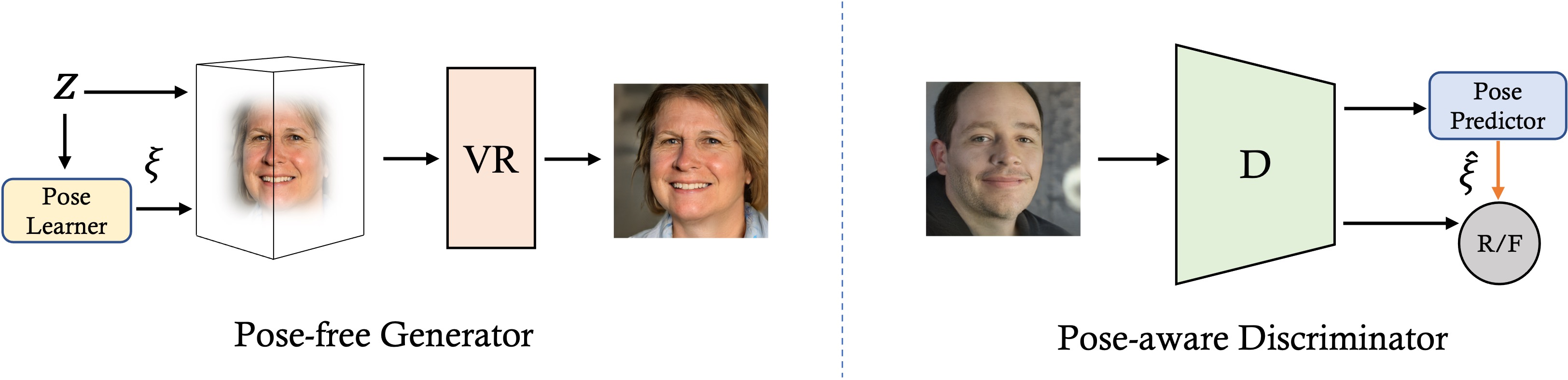

Figure: Framework of PoF3D, which consists of a pose-free generator and a pose-aware discriminator. The pose-free generator maps a latent code to a neural radiance field as well as a camera pose, followed by a volume renderer (VR) that outputs the final image. The pose-aware discriminator first predicts a camera pose from the given image and then uses it as the pseudo label for conditional real/fake discrimination, indicated by the orange arrow.

Learning 3D-aware Image Synthesis with Unknown Pose Distribution

Zifan Shi*, Yujun Shen*, Yinghao Xu, Sida Peng, Yiyi Liao, Sheng Guo, Qifeng Chen, Dit-Yan Yeung

CVPR 2023

(* indicates equal contribution)

Figure: Images and geometry synthesized by PoF3D under random views, without any pose prior.

[Paper] [Project Page] [Supp]

This work proposes PoF3D, which frees generative radiance fields from the requirement of 3D pose priors. We first equip the generator with an efficient pose learner, which infers a pose from a latent code, to approximate the underlying true pose distribution automatically. We then task the discriminator with learning the pose distribution under the supervision of the generator and with differentiating real and synthesized images using the predicted pose as the condition. The pose-free generator and the pose-aware discriminator are jointly trained in an adversarial manner. Extensive results on a couple of datasets confirm that the performance of our approach, regarding both image quality and geometry quality, is on par with the state of the art. To the best of our knowledge, PoF3D demonstrates for the first time the feasibility of learning high-quality 3D-aware image synthesis without using 3D pose priors.
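The training setup described above can be written down roughly as follows. This is only one plausible formalization of the description, not the formulation from the paper: the symbols $G_{\text{nerf}}$, $G_{\text{pose}}$, $R$, $D_{\text{pose}}$, $D_{\text{adv}}$, the squared-error pose loss, the softplus-based adversarial loss $f$, and the weight $\lambda$ are all assumptions introduced here for illustration.

```latex
% Assumed notation (not from this README):
%   G_nerf : latent code -> radiance field      G_pose : latent code -> camera pose
%   R      : volume renderer                    D_pose : image -> predicted pose
%   D_adv  : pose-conditioned real/fake score   f(u) = log(1 + e^u)  (softplus)
\begin{aligned}
\hat{\xi} &= G_{\text{pose}}(z), \qquad
x_{\text{fake}} = R\big(G_{\text{nerf}}(z),\, \hat{\xi}\big), \\
\mathcal{L}_{\text{pose}} &= \mathbb{E}_{z}\,
  \big\lVert D_{\text{pose}}(x_{\text{fake}}) - \hat{\xi} \big\rVert_2^2, \\
\mathcal{L}_{D} &= \mathbb{E}_{x \sim p_{\text{data}}}
  \Big[ f\big(-D_{\text{adv}}(x \mid D_{\text{pose}}(x))\big) \Big]
  + \mathbb{E}_{z}
  \Big[ f\big(D_{\text{adv}}(x_{\text{fake}} \mid D_{\text{pose}}(x_{\text{fake}}))\big) \Big]
  + \lambda\, \mathcal{L}_{\text{pose}}, \\
\mathcal{L}_{G} &= \mathbb{E}_{z}
  \Big[ f\big(-D_{\text{adv}}(x_{\text{fake}} \mid D_{\text{pose}}(x_{\text{fake}}))\big) \Big].
\end{aligned}
```

In this reading, the generator's pose $\hat{\xi}$ supervises the discriminator's pose branch on synthesized images only, and the pose predicted by the discriminator then serves as the condition for both real and synthesized images.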

We test our code on PyTorch 1.9.1 and CUDA toolkit 11.3. Please follow the instructions on https://pytorch.org for installation. Other dependencies can be installed with the following command:

pip install -r requirements.txt

Optionally, you can install the neural renderer for GeoD support (which is not used in the paper):

cd neural_renderer

pip install .
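To check whether an environment matches the tested versions listed above (PyTorch 1.9.1, CUDA toolkit 11.3), a small script along these lines may help. It is a minimal sketch rather than part of the repository: the helper names `parse_version` and `check_environment` are hypothetical, and it only reports versions without enforcing them.

```python
import re

# Versions the README reports as tested.
TESTED_TORCH = (1, 9, 1)
TESTED_CUDA = "11.3"


def parse_version(version):
    """Turn a version string such as '1.9.1+cu111' into a tuple like (1, 9, 1)."""
    core = version.split("+")[0]  # drop a local build suffix such as '+cu111'
    numbers = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)  # keep only the leading digits
        numbers.append(int(match.group()) if match else 0)
    return tuple(numbers)


def check_environment():
    """Return a short, human-readable report about the installed PyTorch."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed; see https://pytorch.org for instructions."

    found_torch = parse_version(torch.__version__)
    found_cuda = torch.version.cuda  # None for CPU-only builds
    lines = [
        f"PyTorch {torch.__version__} (tested: {'.'.join(map(str, TESTED_TORCH))})",
        f"CUDA toolkit {found_cuda} (tested: {TESTED_CUDA})",
    ]
    if found_torch != TESTED_TORCH or found_cuda != TESTED_CUDA:
        lines.append("Note: this differs from the tested configuration.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(check_environment())
```

A mismatch does not necessarily mean the code will fail; it only indicates that the configuration differs from the one the authors tested.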

FFHQ and ShapeNet Cars are borrowed from EG3D. Please follow their instructions to obtain the datasets. The Cats dataset can be downloaded from the link.

We provide scripts for training on the FFHQ, Cats, and ShapeNet Cars datasets. Please update the path to the dataset in the scripts. Training can be started with:

./ffhq.sh or ./cats.sh or ./shapenet_cars.sh

Note that we provide the GeoD supervision option on the FFHQ and Cats datasets. The default configuration, which is the one we used in the paper, does not enable it. If you would like to try GeoD, please modify the corresponding arguments.

The pretrained models are available here.

To perform the inference, you can use the command:

python gen_samples_pose.py --outdir ${OUTDIR} \
    --trunc 0.7 \
    --shapes true \
    --seeds 0-100 \
    --network ${CHECKPOINT_PATH} \
    --shape-res 128 \
    --dataset ${DATASET}

where DATASET denotes the name of the dataset the model was trained on. It can be 'ffhq', 'cats', or 'shapenetcars'. CHECKPOINT_PATH should be replaced with the path to the checkpoint. OUTDIR denotes the folder in which the outputs are saved.
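When running inference for several checkpoints, it can be convenient to assemble the command programmatically. The following is a minimal sketch that only uses the flags and dataset names shown above; the function `build_inference_command` and the example paths are hypothetical and not part of the repository.

```python
import shlex

# Dataset names accepted by --dataset according to this README.
VALID_DATASETS = ("ffhq", "cats", "shapenetcars")


def build_inference_command(outdir, checkpoint_path, dataset,
                            seeds="0-100", trunc=0.7, shape_res=128):
    """Build the gen_samples_pose.py argument list shown in the README."""
    if dataset not in VALID_DATASETS:
        raise ValueError(
            f"dataset must be one of {VALID_DATASETS}, got {dataset!r}"
        )
    return [
        "python", "gen_samples_pose.py",
        "--outdir", str(outdir),
        "--trunc", str(trunc),
        "--shapes", "true",
        "--seeds", str(seeds),
        "--network", str(checkpoint_path),
        "--shape-res", str(shape_res),
        "--dataset", dataset,
    ]


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    cmd = build_inference_command("out/ffhq", "checkpoints/ffhq.pkl", "ffhq")
    print(shlex.join(cmd))
    # To actually launch it from the repository root:
    #   import subprocess
    #   subprocess.run(cmd, check=True)
```

Passing the command as a list rather than a single string avoids shell quoting problems when paths contain spaces.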

@inproceedings{shi2023pof3d,
  title = {Learning 3D-aware Image Synthesis with Unknown Pose Distribution},
  author = {Shi, Zifan and Shen, Yujun and Xu, Yinghao and Peng, Sida and Liao, Yiyi and Guo, Sheng and Chen, Qifeng and Yeung, Dit-Yan},
  booktitle = {CVPR},
  year = {2023}
}