Regulatory Knowledge Graph built in collaboration with Abu Dhabi Global Market. Original paper: A case study for Compliance as Code with graphs and language models: public release of the Regulatory Knowledge Graph.

To automate compliance and RegTech processes, we designed an approach that converts human-readable regulations into Regulation-As-A-Code: executable rules parsed from texts with the help of transformer-based language models trained on NER and relation extraction tasks. This allows us to support the Open World Assumption and keep the ontology extensible. Rules are extracted as subgraphs with a GNN, which supplements inference with causal reasoning, as follows:

If an ‘ENT’ with this ‘PERM’ was doing this ‘ACT’ or ‘FS’ with ‘PROD’ using ‘TECH’ then to avoid this ‘RISK’ they should ‘MIT’.
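The rule template above can be read as a record with one slot per tag. The following is a minimal Python sketch of that reading, not the project's implementation: the `Rule` class, its `render` method, and the example values are hypothetical and only illustrate how the eight tags fill the sentence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical container: one field per tag used in the rule template."""
    ent: str
    perm: str
    act: str
    fs: str
    prod: str
    tech: str
    risk: str
    mit: str

    def render(self) -> str:
        # Fill the template sentence with the extracted values.
        return (
            f"If an '{self.ent}' with this '{self.perm}' was doing this "
            f"'{self.act}' or '{self.fs}' with '{self.prod}' using '{self.tech}' "
            f"then to avoid this '{self.risk}' they should '{self.mit}'."
        )


# Illustrative values only; they are not taken from the ADGM graph.
example = Rule(
    ent="insurer",
    perm="licence",
    act="advising",
    fs="insurance intermediation",
    prod="policy",
    tech="online platform",
    risk="mis-selling",
    mit="disclose terms",
)
print(example.render())
```

In the graph setting, each slot would correspond to a node of the matching tag, and a rule to a subgraph connecting them.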

The project status:

- Design the approach and initial taxonomy for labeling ✅

- Train models to extract entities for graph nodes. ✅

- Train models to extract relations for graph edges.

- Train GNN for rules extraction

The project was inspired by DARPA's research on surveillance of the media field, where video and text feeds were parsed by ML to extract events, their time and place, the entities involved, and other supplementary information, and to store it in a knowledge DB for downstream analysis. More details on how this applies to RegTech and compliance, and more insight into the higher-level vision of the Knowledge Graph project, can be found in the article on LinkedIn.

- Download the data and check out the instructions in the `./data/` folder. You may need Git LFS for that.

- Open the Neo4j Browser and test one of the Cypher queries.

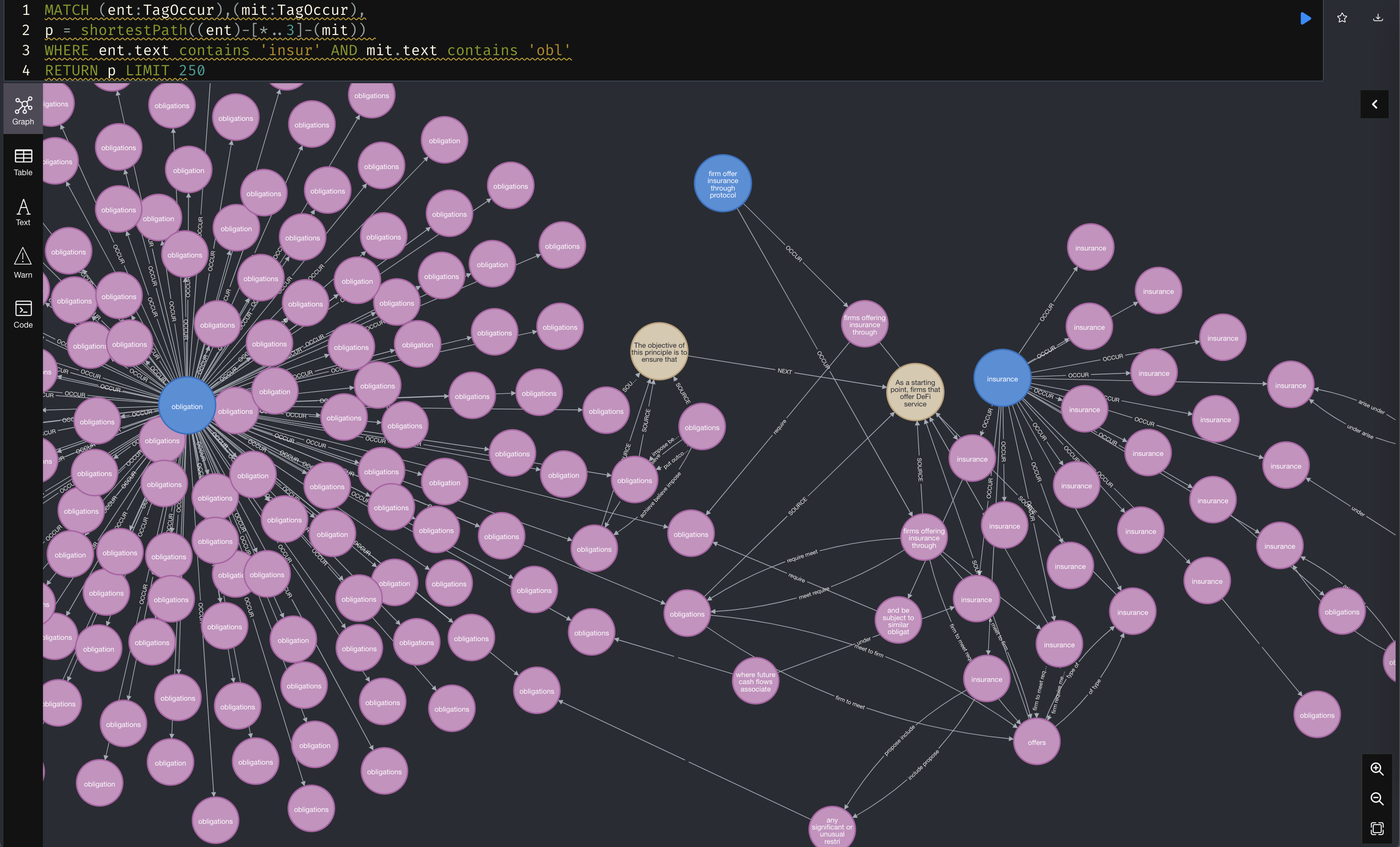

- You may check all the shortest paths in the Regulatory Knowledge Graph between `insurance` and `obligation`:

  `MATCH (ent:TagOccur),(mit:TagOccur), p = shortestPath((ent)-[*..3]-(mit)) WHERE ent.text contains 'insur' AND mit.text contains 'obl' RETURN p LIMIT 250`

- The result is interactive and should look like this:

- Check more examples in the `./reports/` folder.
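To make the semantics of the shortest-path query concrete, here is a small stdlib-only Python sketch of what it asks for: for every pair of nodes whose text contains the two substrings, find one shortest undirected path of at most three hops. The toy graph, node ids, and function names are assumptions for illustration; against the real data, Neo4j does this work.

```python
from collections import deque


def undirected(edges):
    """Build an undirected adjacency list from (a, b) pairs."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    return adj


def bounded_shortest_path(adj, start, goal, max_hops=3):
    """Breadth-first search: one shortest path with at most max_hops edges, else None."""
    parents = {start: None}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in adj.get(node, ()):
            if nxt in parents:
                continue
            parents[nxt] = node
            if nxt == goal:
                path = [goal]
                while parents[path[-1]] is not None:
                    path.append(parents[path[-1]])
                return path[::-1]
            queue.append((nxt, depth + 1))
    return None


def match_paths(adj, texts, src_sub, dst_sub, max_hops=3, limit=250):
    """Mimic the query: substring filters, bounded shortest path, result limit."""
    sources = [n for n, t in texts.items() if src_sub in t]
    targets = [n for n, t in texts.items() if dst_sub in t]
    paths = []
    for s in sources:
        for t in targets:
            if s == t:
                continue
            path = bounded_shortest_path(adj, s, t, max_hops)
            if path is not None:
                paths.append(path)
                if len(paths) == limit:
                    return paths
    return paths


# Toy stand-in for TagOccur nodes; the texts are invented for the example.
texts = {
    "t1": "insurance business", "t2": "client money",
    "t3": "reporting obligation", "t4": "insurer",
    "t5": "capital", "t6": "audit", "t7": "register",
    "t8": "disclosure obligation",
}
adj = undirected([("t1", "t2"), ("t2", "t3"), ("t4", "t3"),
                  ("t4", "t5"), ("t5", "t6"), ("t6", "t7"), ("t7", "t8")])

for path in match_paths(adj, texts, "insur", "obl"):
    print(" - ".join(texts[n] for n in path))
```

Pairs that are more than three hops apart, such as `t4` and `t8` in the toy graph, produce no path, which is why the hop limit keeps the result set focused on closely related terms.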

NeuralCoref is used for coreference resolution.

spaCy is used to train NER models for node creation and a custom lemmatizer:

| BERT-based NER | Precision | Recall | F1 |
|---|---|---|---|
| ACT | 91.03 | 91.67 | 91.35 |
| FS | 94.97 | 79.41 | 86.50 |
| PROD | 90.32 | 93.33 | 91.80 |
| TECH | 94 | 93.73 | 93.87 |
| RISK | 86.24 | 80.50 | 83.27 |
| MIT | 81.54 | 55.58 | 66.11 |
| PERM | 91.01 | 96.43 | 93.64 |
| ENT | 96.57 | 97.40 | 96.98 |
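The F1 column in the NER table is consistent with the usual definition of F1 as the harmonic mean of precision and recall. The short Python sketch below recomputes it from the reported precision and recall; the function and dictionary names are illustrative, and small differences in the last digit are expected because the inputs are already rounded.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same scale as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the NER table, in percent.
REPORTED = {
    "ACT": (91.03, 91.67, 91.35),
    "FS": (94.97, 79.41, 86.50),
    "PROD": (90.32, 93.33, 91.80),
    "TECH": (94.00, 93.73, 93.87),
    "RISK": (86.24, 80.50, 83.27),
    "MIT": (81.54, 55.58, 66.11),
    "PERM": (91.01, 96.43, 93.64),
    "ENT": (96.57, 97.40, 96.98),
}

for tag, (p, r, reported) in REPORTED.items():
    print(f"{tag:5s} computed={f1_score(p, r):.2f} reported={reported:.2f}")
```

The low recall for MIT (55.58) is what pulls its F1 well below the other tags, since the harmonic mean is dominated by the smaller of the two values.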

| POS-Regexp based models | Size | Source |
|---|---|---|
| DEF | 370 | COBS labeled |

There is a stub for relation extraction, based on a combination of spaCy and NetworkX.

Neo4j, with the APOC extension, is used for graph storage, Cypher execution, and visualisations.

- Knowledge graph for ADGM regulations in the `./data` folder, which consists of:

| Nodes | Count |
|---|---|
| Document | 26 |
| Paragraph | 22027 |
| Tag | 35498 |
| TagOccur | 173853 |

| Relations | Count |
|---|---|
| NEXT | 37381 |
| INSIDE | 22027 |
| OCCUR | 173853 |
| SOURCE | 186693 |
| REL | 789253 |

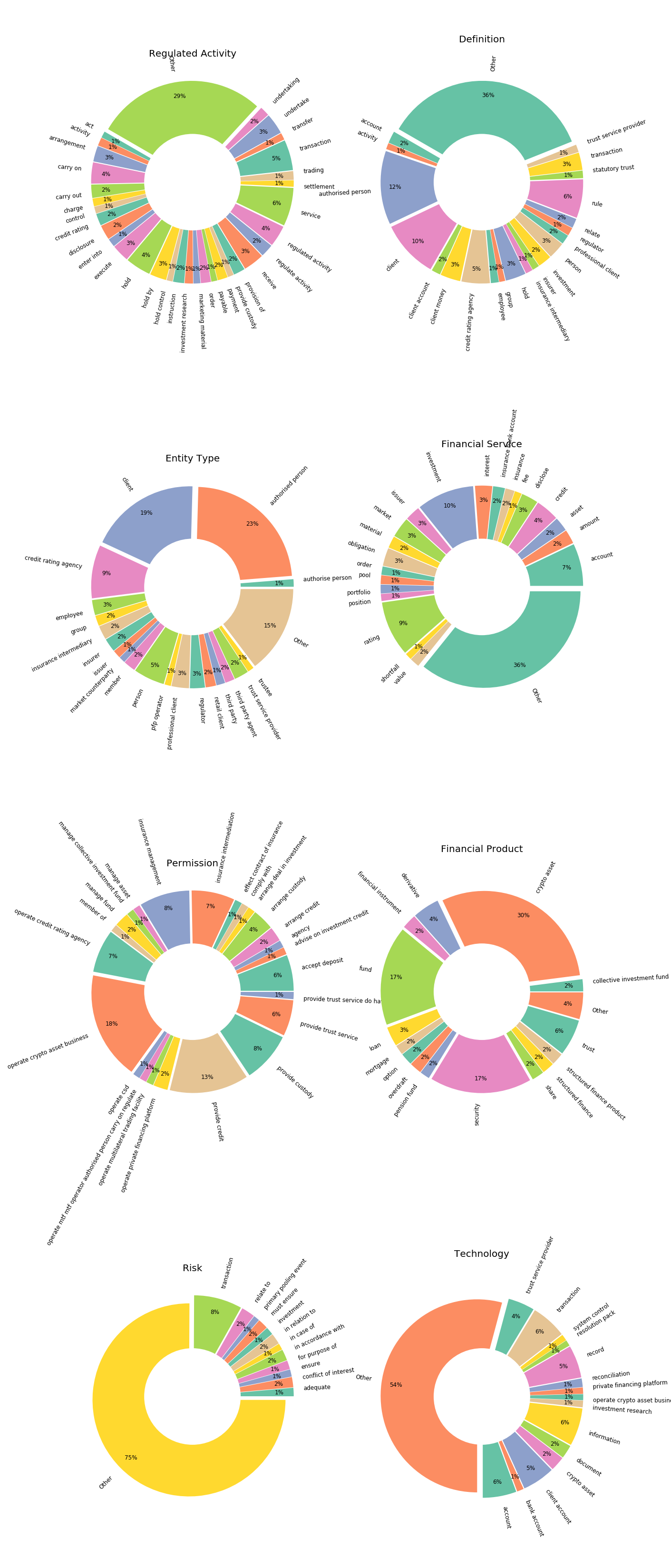

- Reports showcasing how the regulatory documents cover various topics are in the `./reports` folder.

- Example Cypher queries to try are in the Reports Readme.

TBD