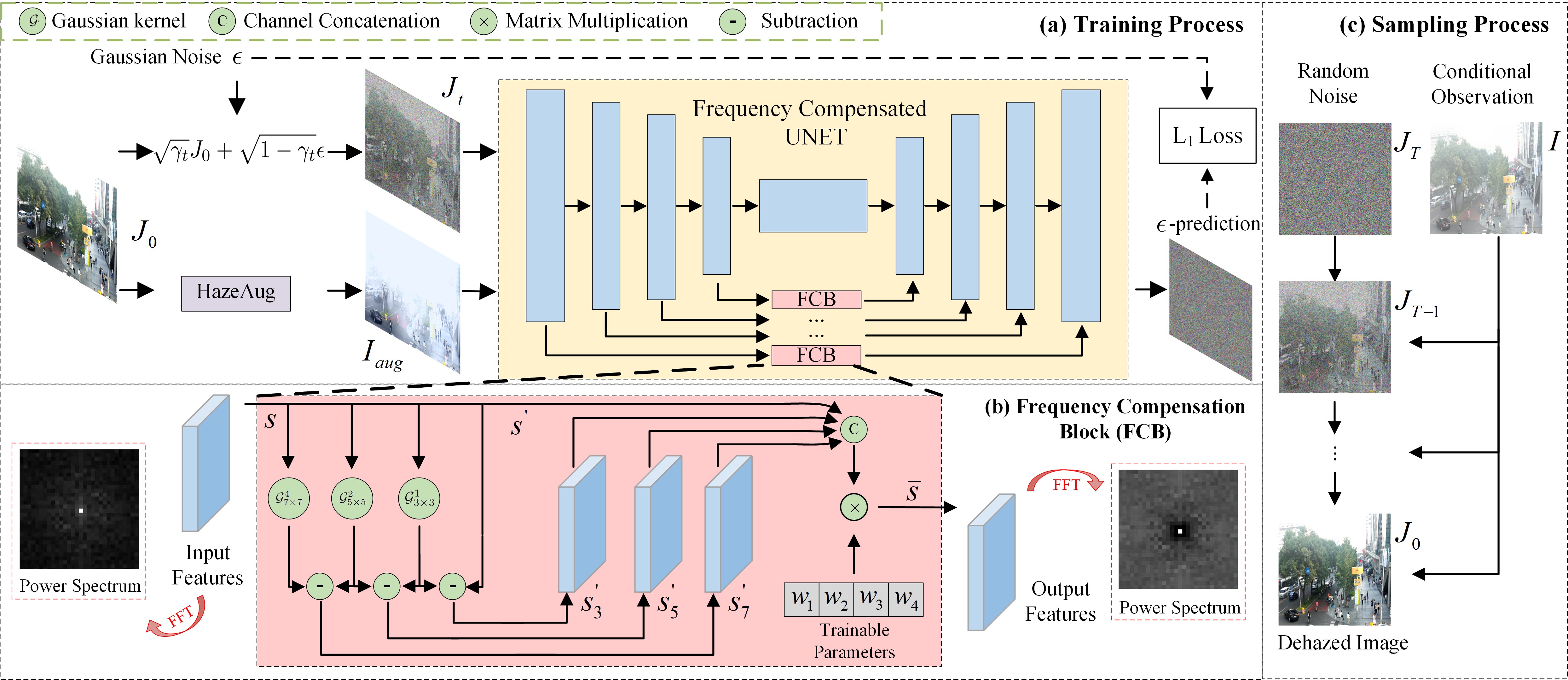

This is the official PyTorch implementation of Frequency Compensated Diffusion Model for Real-scene Dehazing.

- This repo is a modification of the SR3 repo.

- Install third-party libraries: `pip install -r requirement.txt`

Download train/eval data from the following links:

Training: RESIDE

Testing: I-Haze / O-Haze / Dense-Haze / Nh-Haze / RTTS

Create the dataset directory with `mkdir dataset`, then re-organize the train/val images in the following file structure:

    # Training data file structure
    dataset/RESIDE/
    ├── HR           # ground-truth clear images
    ├── HR_hazy_src  # hazy images
    └── HR_depth     # depth images (generated by MonoDepth, github.com/OniroAI/MonoDepth-PyTorch)

    # Testing data (e.g. DenseHaze) file structure
    dataset/{name}/
    ├── HR       # ground-truth images
    └── HR_hazy  # hazy images

Then make sure the data paths ("dataroot") in config/framework_da.json are correct.
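Before training or testing, it can help to confirm that the folders match the layout above. The following is a minimal sketch, not part of this repo: `check_layout` is a hypothetical helper, and it assumes that each sub-folder holds exactly one file per image, so that all folders should contain the same number of files.

```python
from pathlib import Path

# Folder names are taken from the layout above; the helper itself is hypothetical.
TRAIN_DIRS = ("HR", "HR_hazy_src", "HR_depth")
TEST_DIRS = ("HR", "HR_hazy")


def check_layout(root, subdirs):
    """Return a list of problems found under `root` (an empty list means OK)."""
    root = Path(root)
    problems = []
    counts = {}
    for name in subdirs:
        folder = root / name
        if not folder.is_dir():
            problems.append(f"missing folder: {name}")
            continue
        # Count regular files only; nested folders are ignored.
        counts[name] = sum(1 for p in folder.iterdir() if p.is_file())
        if counts[name] == 0:
            problems.append(f"empty folder: {name}")
    # Assumption: one file per image in every folder, so counts should agree.
    if len(set(counts.values())) > 1:
        problems.append(f"file counts differ: {counts}")
    return problems
```

For example, `check_layout("dataset/RESIDE", TRAIN_DIRS)` returns an empty list when all three training folders exist, are non-empty, and hold the same number of files.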

We prepared the pretrained model at:

| Type | Weights |
|---|---|
| Generator | OneDrive |

Download the test set (e.g. O-Haze), put the test images in the "dataroot" directory, and set "dataroot" in config/framework_da.json to that path;

Download the pretrained model and set "resume_state" in config/framework_da.json to its path:

    "path": {
        "log": "logs",
        "tb_logger": "tb_logger",
        "results": "results",
        "checkpoint": "checkpoint",
        "resume_state": "./ddpm_fcb_230221_121802"
    }
    "val": {
        "name": "dehaze_val",
        "mode": "LRHR",
        "dataroot": "dataset/O-HAZE-PROCESS",
        ...
    }

Then run inference:

    # infer
    python infer.py -c [config file]

The default config file is config/framework_da.json. The output images are saved to /data/diffusion/results. The output path can be changed in core/logger.py.
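The two config changes needed for inference can also be applied programmatically. The sketch below is illustrative only: `set_inference_paths` is a hypothetical helper, and it assumes that "val" sits under a top-level "datasets" key and "resume_state" under "path". The real config/framework_da.json may be laid out differently or may contain comments that plain `json` parsing rejects, so the example works on a small stand-in dictionary instead of the actual file.

```python
import json


def set_inference_paths(cfg, test_dataroot, checkpoint_prefix):
    """Return a copy of `cfg` with the val dataroot and resume_state updated.

    Assumes the key layout cfg["datasets"]["val"]["dataroot"] and
    cfg["path"]["resume_state"]; adjust if your config differs.
    """
    new_cfg = json.loads(json.dumps(cfg))  # deep copy of plain JSON data
    new_cfg["datasets"]["val"]["dataroot"] = test_dataroot
    new_cfg["path"]["resume_state"] = checkpoint_prefix
    return new_cfg


# Minimal stand-in for the relevant part of the config (not the full file).
example_cfg = {
    "path": {"resume_state": None},
    "datasets": {"val": {"name": "dehaze_val", "mode": "LRHR", "dataroot": ""}},
}

updated = set_inference_paths(
    example_cfg, "dataset/O-HAZE-PROCESS", "./ddpm_fcb_230221_121802"
)
print(json.dumps(updated, indent=4))
```

Passing `None` as `checkpoint_prefix` serializes "resume_state" as `null`, which corresponds to the train-from-scratch setting.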

Prepare the training dataset and set the correct paths under "datasets" in config/framework_da.json;

If training from scratch, make sure "resume_state" is null in config/framework_da.json.

# infer

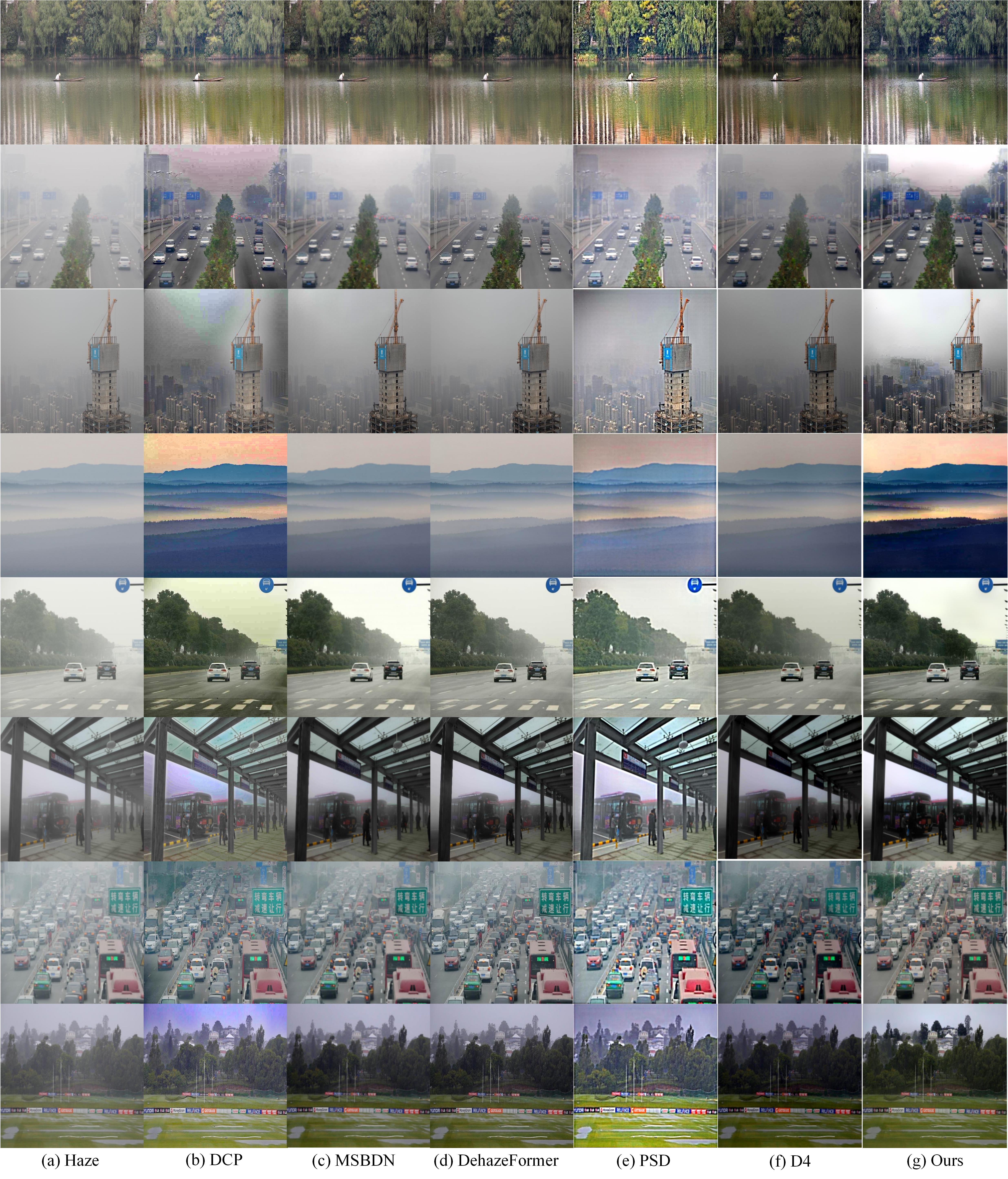

python train.py -c [config file]Quantitative comparison on real-world hazy data (RTTS). Bold and underline indicate the best and the second-best, respectively.

- Upload configs and pretrained models
- Upload evaluation scripts
- Upload train scripts