This is the official PyTorch implementation of the following publication:

SparseDC: Depth completion from sparse and non-uniform inputs

Chen Long, Wenxiao Zhang, Zhe Chen, Haiping Wang, Yuan Liu, Peiling Tong, Zhen Cao, Zhen Dong, Bisheng Yang

Information Fusion 2024

Paper | Project-page | Video

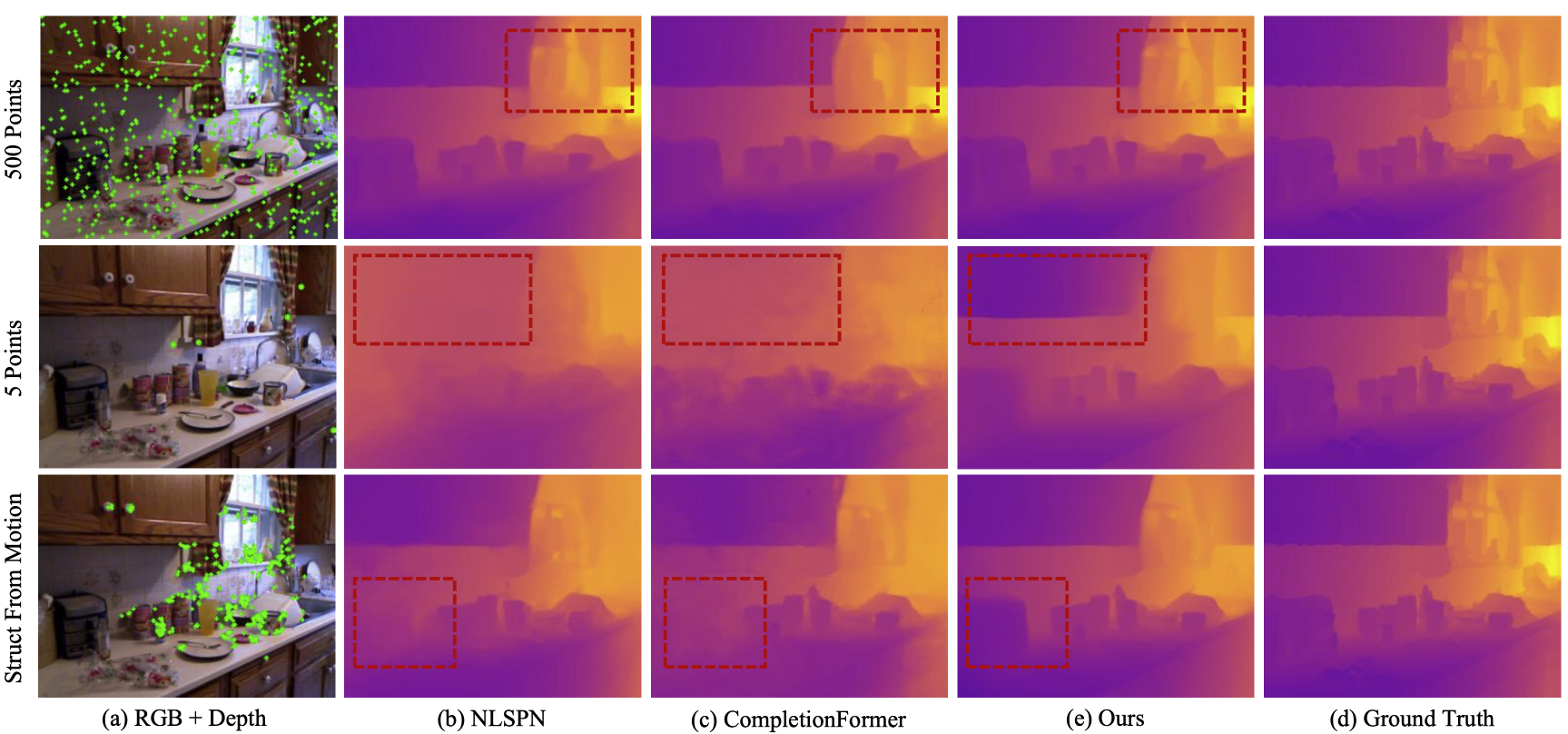


Abstract: We propose SparseDC, a model for Depth Completion of Sparse and non-uniform depth inputs. Unlike previous methods focusing on completing fixed distributions on benchmark datasets (e.g., NYU with 500 points, KITTI with 64 lines), SparseDC is specifically designed to handle depth maps with poor quality in real usage. The key contributions of SparseDC are two-fold. First, we design a simple strategy, called SFFM, to improve the robustness under sparse input by explicitly filling the unstable depth features with stable image features. Second, we propose a two-branch feature embedder to predict both the precise local geometry of regions with available depth values and accurate structures in regions with no depth. The key of the embedder is an uncertainty-based fusion module called UFFM to balance the local and long-term information extracted by CNNs and ViTs. Extensive indoor and outdoor experiments demonstrate the robustness of our framework when facing sparse and non-uniform input depths.

- 2024-04-10: SparseDC is accepted by Information Fusion! 🎉

- 2023-12-04: Code and preprint paper are available! 🎉

The model was trained in the following environment:

- Ubuntu 20.04

- CUDA 11.3

- Python 3.9.18

- PyTorch 1.12.1

- GeForce RTX 4090 $\times$ 2

- First, create the conda environment:

$ conda env create -f environment.yaml

$ conda activate sparsedc

- Second, install MMCV:

$ mim install mmcv-full

- Finally, build and install the DCN module for the refiner:

$ cd src/plugins/deformconv

$ python setup.py build install
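After installation, it can help to confirm which package versions actually ended up in the environment. The snippet below is a minimal sketch and not part of the repository. It assumes the distribution names are `torch` and `mmcv-full`, and it only reports installed versions without importing the packages.

```python
from importlib import metadata

# Distribution names are assumptions: "torch" for PyTorch and "mmcv-full"
# for the package installed by `mim install mmcv-full`.
PACKAGES = ("torch", "mmcv-full")


def installed_versions(packages=PACKAGES):
    """Return {distribution name: version string, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
```

Run it inside the activated `sparsedc` environment and compare the reported versions with the list above.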

We used two datasets for training and three datasets for evaluation.

We used the preprocessed NYUv2 HDF5 dataset provided by Fangchang Ma.

$ cd PATH_TO_DOWNLOAD

$ wget http://datasets.lids.mit.edu/sparse-to-dense/data/nyudepthv2.tar.gz

$ tar -xvf nyudepthv2.tar.gz

Note that the original full NYUv2 dataset is available at the official website.

Then, generate a JSON file containing the paths to the individual images. We use the NYUv2 data lists borrowed from the NLSPN repository. Put this JSON file in your data directory.

After that, you will get a data structure as follows:

nyudepthv2
├── nyu.json
├── train
│   ├── basement_0001a
│   │   ├── 00001.h5
│   │   └── ...
│   ├── basement_0001b
│   │   ├── 00001.h5
│   │   └── ...
│   └── ...
└── val
    └── official
        ├── 00001.h5
        └── ...
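To sanity-check the extracted archive against this layout, a short script can list the `.h5` files found under each split. This is a hedged sketch: the output schema and the file name `nyu_listing.json` are assumptions made for illustration, and the result is not a replacement for the `nyu.json` list from NLSPN, which defines the splits used by the code.

```python
import json
from pathlib import Path


def list_h5_files(root):
    """Map each split folder to the sorted .h5 paths (relative to root) under it."""
    root = Path(root)
    listing = {}
    for split in ("train", "val"):
        files = sorted((root / split).rglob("*.h5"))
        listing[split] = [f.relative_to(root).as_posix() for f in files]
    return listing


def write_listing(root, out_name="nyu_listing.json"):
    """Write the listing into root; out_name is a hypothetical file name."""
    out_path = Path(root) / out_name
    out_path.write_text(json.dumps(list_h5_files(root), indent=2))
    return out_path
```

For example, `write_listing("PATH_TO_DOWNLOAD/nyudepthv2")` writes the listing into the dataset folder, where the number of entries per split can be inspected.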

The KITTI DC dataset is available at the KITTI DC Website.

For color images, the KITTI Raw dataset is also needed; it is available at the KITTI Raw Website. You can refer to this script for data preparation.

For testing, you can use this script to subsample the depth maps, or you can directly download our processed data from GoogleDrive. Then, put the test data in the folder kitti_depth/data_depth_selection/val_selection_cropped/.

The overall data directory is structured as follows:

├── kitti_depth
│   ├── data_depth_annotated
│   │   ├── train
│   │   └── val
│   ├── data_depth_velodyne
│   │   ├── train
│   │   └── val
│   └── data_depth_selection
│       ├── test_depth_completion_anonymous
│       ├── test_depth_prediction_anonymous
│       └── val_selection_cropped
│           ├── velodyne_raw_lines64
│           ├── ...
│           └── velodyne_raw_lines4
└── kitti_raw
    ├── 2011_09_26
    ├── 2011_09_28
    ├── 2011_09_29
    ├── 2011_09_30
    └── 2011_10_03
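Before training or evaluation, it may be worth checking that the main folders of this layout exist. The helper below is a minimal sketch, not a script shipped with the repository. The function name `missing_dirs` is hypothetical, and the list of expected paths is simply copied from the directory tree above.

```python
from pathlib import Path

# Relative paths taken from the directory tree above.
EXPECTED_DIRS = (
    "kitti_depth/data_depth_annotated/train",
    "kitti_depth/data_depth_annotated/val",
    "kitti_depth/data_depth_velodyne/train",
    "kitti_depth/data_depth_velodyne/val",
    "kitti_depth/data_depth_selection/val_selection_cropped",
    "kitti_raw",
)


def missing_dirs(data_dir, expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under data_dir."""
    base = Path(data_dir)
    return [rel for rel in expected if not (base / rel).is_dir()]
```

An empty list from `missing_dirs("/path/to/data")` means every listed folder was found; otherwise the returned entries show what still needs to be downloaded or moved.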

The SUN RGB-D dataset is available at the SUN RGB-D Website.

We used the processed dataset provided by ankurhanda. The refined depth images are contained in the depth_bfx folder of the SUN RGB-D dataset. Alternatively, you can directly download our organized data from GoogleDrive.

You can download the pretrained models from GoogleDrive and put them in the pretrain/ folder.

To train SparseDC, prepare the dataset and replace "data_dir" with your data path. Then use the following commands:

$ python train.py experiment=final_version # for NYUDepth

$ python train.py experiment=final_version_kitti # for KITTIDC

To evaluate SparseDC on the three benchmarks, use the following commands:

$ ./eval_nyu.sh final_version final_version pretrain/nyu.ckpt

$ ./eval_kitti.sh final_version_kitti final_version_kitti_test pretrain/kitti.ckpt

$ ./eval_sunrgbd.sh final_version final_version pretrain/nyu.ckpt

If you find this repo helpful, please give us a 😍 star 😍. Please consider citing SparseDC if this program benefits your project:

@article{LONG2024102470,

title = {SparseDC: Depth completion from sparse and non-uniform inputs},

journal = {Information Fusion},

volume = {110},

pages = {102470},

year = {2024},

issn = {1566-2535},

doi = {https://doi.org/10.1016/j.inffus.2024.102470},

url = {https://www.sciencedirect.com/science/article/pii/S1566253524002483},

author = {Chen Long and Wenxiao Zhang and Zhe Chen and Haiping Wang and Yuan Liu and Peiling Tong and Zhen Cao and Zhen Dong and Bisheng Yang},

keywords = {Depth completion, Uncertainty, Information fusion},

}

We sincerely thank the following excellent projects:

- PE-Net for DataLoader;

- NLSPN for depth completion metric loss calculation;

- FreeReg for readme template;

- Lightning-hydra-template for code organization.